Abstract

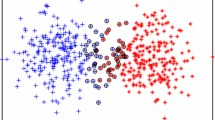

Existing training methods for support vector machines (SVMs) have a high computational cost. Combining the geometric interpretation of the SVM with structural risk minimization, this paper proposes a new fast support vector machine: the Centroid Normal Direct Support Vector Machine (CNDSVM). The normal vector of the optimal separating hyperplane is taken as the direction connecting the two class centroids. The optimal separating hyperplane is then obtained directly from the distribution of the samples' projections onto this normal vector. Experiments on artificial and benchmark data sets show that the method achieves almost the same classification results as SVM and least squares SVM (LSSVM) at a very low computational cost.
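The abstract describes two steps: take the hyperplane normal from the line joining the class centroids, then place the hyperplane using the samples' projections onto that normal. The Python/NumPy sketch below illustrates that idea under stated assumptions: labels are ±1, the classifier is linear (no kernel), and the threshold along the normal is chosen as the midpoint between adjacent distinct projections that gives the fewest training errors. That threshold rule and the names `fit_centroid_normal` and `predict` are hypothetical illustrations, not the exact CNDSVM procedure from the paper.

```python
import numpy as np


def fit_centroid_normal(X, y):
    """Hypothetical sketch: linear classifier whose normal is the
    direction joining the two class centroids.

    X: (n_samples, n_features) array; y: labels in {-1, +1}.
    Returns (w, b) such that sign(X @ w + b) gives the predicted class.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    # Step 1: normal vector = unit vector from the negative-class centroid
    # to the positive-class centroid.
    c_pos = X[y == 1].mean(axis=0)
    c_neg = X[y == -1].mean(axis=0)
    w = c_pos - c_neg
    norm = np.linalg.norm(w)
    if norm == 0:
        raise ValueError("class centroids coincide; no normal direction")
    w = w / norm

    # Step 2: project every sample onto the normal, reducing the problem
    # to choosing a single threshold t on a line.
    p = X @ w
    levels = np.unique(p)  # sorted distinct projection values
    if levels.size < 2:
        raise ValueError("all samples project to the same point")

    # Assumed rule: candidate thresholds are midpoints between adjacent
    # distinct projections; keep the one with the fewest training errors.
    candidates = (levels[:-1] + levels[1:]) / 2.0
    preds = np.where(p[None, :] > candidates[:, None], 1, -1)
    errors = (preds != y[None, :]).sum(axis=1)
    t = candidates[int(np.argmin(errors))]

    # Hyperplane w.x - t = 0, i.e. bias b = -t.
    return w, -t


def predict(w, b, X):
    """Return +1 on the positive side of the hyperplane, -1 otherwise."""
    return np.where(np.asarray(X, dtype=float) @ w + b > 0, 1, -1)


if __name__ == "__main__":
    X = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0],
                  [4.0, 4.0], [5.0, 4.0], [4.0, 5.0]])
    y = np.array([-1, -1, -1, 1, 1, 1])
    w, b = fit_centroid_normal(X, y)
    print(predict(w, b, X).tolist())  # [-1, -1, -1, 1, 1, 1]
```

The contrast with a standard SVM is that no quadratic program is solved: the work is computing two centroids, one projection of the data, and a one-dimensional threshold search. The search here is naive (it counts errors for every candidate); a running count over the sorted projections would make it cheaper.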


Acknowledgments

This work is supported by the National Natural Science Foundation of China (Grant Nos. 61472443 and 61201209) and the Natural Science Basic Research Plan in Shaanxi Province of China (No. 2013JQ8042).


About this article

Cite this article

Wen, Xx., Xiang-ru, M., Xiao-long, L. et al. Centroid Normal Direct Support Vector Machine. Neural Process Lett 45, 563–575 (2017). https://doi.org/10.1007/s11063-016-9539-5
