Abstract

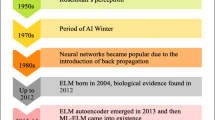

Deep learning has drawn extensive attention in machine learning because of its excellent performance, especially the convolutional neural network (CNN) architecture for image classification. As a result, many CNN-based deep models have been proposed in the past few years. However, the success of these models depends mostly on fine-tuning with backpropagation, which is time-consuming and suffers from problems including slow convergence, local minima, and intensive human intervention. Moreover, these models achieve excellent performance only when their architectures are deep enough. To overcome these problems, we propose a simple, effective and fast deep architecture called ELMAENet, which uses the extreme learning machine auto-encoder (ELM-AE) to learn the filters of its convolutional layers. ELMAENet combines the power of convolutional layers and ELM-AE (Kasun et al. in IEEE Intell Syst 28(6):31–34, 2013); it no longer needs parameter tuning but still performs well on image classification. Experiments on several datasets show that the proposed ELMAENet achieves performance comparable to or even better than that of state-of-the-art models.
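
The idea of obtaining convolutional filters from an ELM-AE without backpropagation can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: it assumes a PCANet-style pipeline (mean-removed image patches as training data), a tanh activation, orthogonalized random input weights, and a ridge-regularized least-squares solution for the output weights. The function names `extract_patches` and `elm_ae_filters` and the regularization constant `C` are hypothetical choices made for this illustration.

```python
import numpy as np


def extract_patches(images, k):
    """Collect every k x k patch (stride 1) from grayscale images of shape
    (n, h, w) and subtract each patch's own mean."""
    windows = np.lib.stride_tricks.sliding_window_view(images, (k, k), axis=(1, 2))
    patches = windows.reshape(-1, k * k)
    return patches - patches.mean(axis=1, keepdims=True)


def elm_ae_filters(patches, n_filters, C=1e3, seed=0):
    """Learn filters with an ELM auto-encoder (illustrative sketch).

    The hidden layer is random and never trained; only the output weights
    beta are computed, in closed form, so that H @ beta reconstructs the
    input patches. Each row of beta is then used as one flattened filter.
    """
    d = patches.shape[1]
    assert n_filters <= d, "orthogonalization below assumes n_filters <= patch size"
    rng = np.random.default_rng(seed)

    # Random input weights with orthonormal columns, and a unit-norm bias.
    W, _ = np.linalg.qr(rng.standard_normal((d, n_filters)))
    b = rng.standard_normal(n_filters)
    b /= np.linalg.norm(b)

    # Hidden-layer activations (tanh is an assumption of this sketch).
    H = np.tanh(patches @ W + b)

    # Ridge-regularized least squares: (I/C + H^T H) beta = H^T X.
    beta = np.linalg.solve(np.eye(n_filters) / C + H.T @ H, H.T @ patches)
    return beta  # shape (n_filters, d)


if __name__ == "__main__":
    rng = np.random.default_rng(42)
    images = rng.random((10, 12, 12))      # toy stand-in for a dataset
    patches = extract_patches(images, k=5)
    filters = elm_ae_filters(patches, n_filters=8).reshape(8, 5, 5)
    print(filters.shape)
```

Because the output weights come from a single linear solve, no iterative tuning is involved, which is the property the abstract emphasizes. The reshaped rows of `beta` would then be convolved with the input images to produce the feature maps of one layer.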

References

Park DC (2000) Centroid neural network for unsupervised competitive learning. IEEE Trans Neural Netw 11(2):520–528

Lee H, Grosse R, Ranganath R, Ng AY (2009) Convolutional deep belief networks for scalable unsupervised learning of hierarchical representations. In: Proceedings of international conference on machine learning

Masci J, Meier U, Ciresan D, Schmidhuber J (2011) Stacked convolutional auto-encoders for hierarchical feature extraction. In: International conference on artificial neural networks

Yim J, Ju J, Jung H, Kim J (2015) Image classification using convolutional neural networks with multi-stage feature. In: Robot intelligence technology and applications 3. Advances in Intelligent Systems and Computing, vol 345. Springer, Cham

Krizhevsky A, Sutskever I, Hinton G (2012) ImageNet classification with deep convolutional neural networks. Proc Adv Neural Inf Process Syst 25:1097–1105

Norouzi M, Ranjbar M, Mori G (2009) Stacks of convolutional restricted Boltzmann machines for shift-invariant feature learning. In: IEEE conference on computer vision and pattern recognition, 2009. CVPR 2009. IEEE, pp 2735–2742

Le QV, Ngiam J, Chen Z, Chia D, Koh PW, Ng AY (2010) Tiled convolutional neural networks. In: NIPS’10 Proceedings of the 23rd International Conference on Neural Information Processing Systems, vol 1, pp 1279–1287

Cho K et al (2014) Learning phrase representations using RNN encoder-decoder for statistical machine translation. In: Proceedings of conference on empirical methods in natural language processing, pp 1724–1734

Wang X, Gupta A (2015) Unsupervised learning of visual representations using videos. In: Proceedings of the IEEE international conference on computer vision, pp 2794–2802

Dosovitskiy A, Springenberg JT, Riedmiller M, Brox T (2014) Discriminative unsupervised feature learning with convolutional neural networks. In: NIPS’14 Proceedings of the 27th International Conference on Neural Information Processing Systems, vol 1, pp 766–774

Radford A, Metz L, Chintala S (2015) Unsupervised representation learning with deep convolutional generative adversarial networks. arXiv preprint arXiv:1511.06434

Doersch C, Gupta A, Efros AA (2015) Unsupervised visual representation learning by context prediction. In: Proceedings of the IEEE international conference on computer vision, pp 1422–1430

Huang C, Loy CC, Tang X (2016) Unsupervised learning of discriminative attributes and visual representations. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 5175–5184

Mairal J, Koniusz P, Harchaoui Z, Schmid C (2014) Convolutional kernel networks. In: Advances in neural information processing systems 27, pp 2627–2635

Perronnin F, Larlus D (2015) Fisher vectors meet neural networks: a hybrid classification architecture. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 3743–3752

Chan T-H, Jia K, Gao S, Lu J, Zeng Z, Ma Y (2015) PCANet: a simple deep learning baseline for image classification? IEEE Trans Image Process 24(12):5017–5032

Tian L, Fan C, et al (2015) SRDANet: an efficient deep learning algorithm for face analysis. In: International conference on intelligent robotics and applications, pp 499–510

Feng Z, Jin L, Tao D, Huang S (2015) DLANet: a manifold-learning-based discriminative feature learning network for scene classification. Neurocomputing 157:11–21

Tian L, Fan C (2015) Stacked PCA network (SPCANet): an effective deep learning for face recognition. In: IEEE international conference on digital signal processing, pp 1039–1043

Huang G-B, Bai Z, Kasun LLC, Vong CM (2015) Local receptive fields based extreme learning machine. IEEE Comput Intell Mag 10(2):18–29

Cao W, Gao J et al (2015) A hybrid deep learning CNN-ELM model and its application in handwritten numeral recognition. J Comput Inf Syst 11:2673–2680

Zhu W, Miao J, Qing L et al (2015) Hierarchical extreme learning machine for unsupervised representation learning. In: Neural networks (IJCNN), pp 1–8

Gürpınar F, Kaya H et al (2016) Kernel ELM and CNN based facial age estimation. In: Computer vision and pattern recognition workshops, pp 785–791

Pang S, Yang X (2016) Deep convolutional extreme learning machine and its application in handwritten digit classification. Comput Intell Neurosci. https://doi.org/10.1155/2016/3049632

Wang Y, Xie Z et al (2016) An efficient and effective convolutional auto-encoder extreme learning machine network for 3d feature learning. Neurocomputing 174:988–998

Huang J, Yu ZL, Cai Z et al (2017) Extreme learning machine with multi-scale local receptive fields for texture classification. Multidimens Syst Signal Process 7(28):995–1011

Bai Z, Kasun LLC, Huang GB (2015) Generic object recognition with local receptive fields based extreme learning machine. Proc Comput Sci 53:391–399

Tissera MD, McDonnell MD (2016) Deep extreme learning machines: supervised autoencoding architecture for classification. Neurocomputing 174:42–49

LeCun Y, Bengio Y, Hinton G (2015) Deep learning. Nature 521:436–444

LeCun Y, Boser B, Denker JS, Henderson D, Howard RE, Hubbard W, Jackel LD (1989) Backpropagation applied to handwritten zip code recognition. Neural Comput 1:541–551

LeCun Y, Bottou L, Bengio Y, Haffner P (1998) Gradient-based learning applied to document recognition. Proc IEEE 86(11):2278–2324

Hinton GE, Salakhutdinov R (2006) Reducing the dimensionality of data with neural networks. Science 313:504–507

Simonyan K, Zisserman A (2014) Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556

Szegedy C, Liu W, Jia Y, Sermanet P, Reed S, Anguelov D, Erhan D, Vanhoucke V, Rabinovich A (2015) Going deeper with convolutions. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 1–9

Szegedy C, Ioffe S, Vanhoucke V, et al (2016) Inception-v4, inception-resnet and the impact of residual connections on learning. arXiv preprint arXiv:1602.07261

Huang G-B, Chen L, Siew C-K (2006) Universal approximation using incremental networks with random hidden computation nodes. IEEE Trans Neural Netw 17(4):879–892

Kasun LLC, Zhou H, Huang G-B, Vong CM (2013) Representational learning with extreme learning machines for big data. IEEE Intell Syst 28(6):31–34

Chang C-C, Lin C-J (2001) LIBSVM: a library for support vector machines (Online). Available: http://www.csie.ntu.edu.tw/~cjlin/libsvm

Zhou D, Bousquet O, Lal TN, Weston J, Schölkopf B (2004) Learning with local and global consistency. Adv Neural Inf Process Syst 16:321–328

LeCun Y, Huang FJ, Bottou L (2004) Learning methods for generic object recognition with invariance to pose and lighting. In: Proceedings of international conference on computer vision and pattern recognition, vol 2, pp II-97–104

Tian L, Fan C, Ming Y, Shi J (2015) SRDANet: an efficient deep learning algorithm for face analysis. ICIRA 8:499–510

Cai D, He X, Hu Y, Han J, Huang T (2007) Learning a spatially smooth subspace for face recognition. In: Proceedings of IEEE conference on computer vision and pattern recognition (CVPR 2007)

Cai D, He X, Han J (2008) SRDA: an efficient algorithm for large-scale discriminant analysis. IEEE Trans Knowl Data Eng 20(1):1–12

Wright J, Yang AY, Ganesh A, Sastry SS, Ma Y (2009) Robust face recognition via sparse representation. IEEE Trans Pattern Anal Mach Intell 31(2):210–227

Guo Z, Zhang D (2010) A completed modeling of local binary pattern operator for texture classification. IEEE Trans Image Process 19(6):1657–1663

Lei Z, Pietikäinen M, Li SZ (2014) Learning discriminant face descriptor. IEEE Trans Pattern Anal Mach Intell 36(2):289–302

Yin Y, Gelenbe E (2016) Deep learning in multi-layer architectures of dense nuclei. arXiv preprint arXiv:1609.07160

Yin Y, Gelenbe E (2017) Single-cell based random neural network for deep learning. In: International joint conference on neural networks

Hinton GE, Osindero S, Teh YW (2006) A fast learning algorithm for deep belief nets. Neural Comput 18(7):1527–1554

Ciresan DC, Meier U, Masci J et al (2011) Flexible, high performance convolutional neural networks for image classification. In: IJCAI proceedings-international joint conference on artificial intelligence, vol 22(1), p 1237

McDonnell MD, Vladusich T (2015) Enhanced image classification with a fast-learning shallow convolutional neural network. In: International joint conference on neural networks (IJCNN). IEEE, pp 1–7

Lee K, Park DC (2015) Image classification using fast learning convolutional neural networks. Adv Sci Technol Lett 113:50–55

McDonnell MD, Tissera MD et al (2015) Fast, simple and accurate handwritten digit classification by training shallow neural network classifiers with the ‘extreme learning machine’ algorithm. PLoS ONE 10(8):e0134254

Yu K, Lin Y, Lafferty J (2011) Learning image representations from the pixel level via hierarchical sparse coding. In: CVPR

Nemkov RM, Mezentseva OS, Mezentsev D (2016) Using of a convolutional neural network with changing receptive fields in the tasks of image recognition. In: Proceedings of the first international scientific conference

Wei X, Zhang L, Du B, Tao D (2017) Combining local and global: rich and robust feature pooling for visual recognition. Pattern Recognit 62:225–235

Wan L, Zeiler M, Zhang S, LeCun Y, Fergus R (2013) Regularization of neural networks using DropConnect. In: Proceedings of the 30th international conference on machine learning, Atlanta, Georgia, USA. JMLR: W&CP, vol 28

Goodfellow IJ, Warde-Farley D, Mirza M, Courville A, Bengio Y (2013) Maxout networks. Technical report, arXiv:1302.4389, Université de Montréal

Tang Y (2013) Deep learning using linear support vector machines. In: Workshop on challenges in representation learning, ICML

Yang Z, Moczulski M, Denil M, de Freitas N, Smola A, Song L, Wang Z (2015) Deep fried convnets. In: ICCV

He K, Zhang X, Ren S, Sun J (2016) Deep residual learning for image recognition. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 770–778

Simonyan K, Zisserman A (2014) Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556

Sabour S, Frosst N, Hinton GE (2017) Dynamic routing between capsules. arXiv preprint arXiv:1710.09829

Ma B, Xia Y (2018) Autonomous deep learning: a genetic DCNN designer for image classification. arXiv:1807.00284

Acknowledgements

This work is supported by the National Key Research and Development Program of China (No. 2018YFC0809001).

Cite this article

Chang, P., Zhang, J., Wang, J. et al. ELMAENet: A Simple, Effective and Fast Deep Architecture for Image Classification. Neural Process Lett 51, 129–146 (2020). https://doi.org/10.1007/s11063-019-10079-9

DOI: https://doi.org/10.1007/s11063-019-10079-9