Abstract

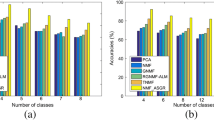

Non-negative matrix factorization (NMF) is becoming an important tool for information retrieval and pattern recognition. However, in image decomposition applications, considering only the similarity between different images is not enough to discover the intrinsic geometrical structure of the observation samples. In this paper, symmetric manifold regularized objective functions are proposed to develop NMF-based learning algorithms (called SMNMF), which explore both the global and local features of the manifold structures for image clustering and, at the same time, improve the convergence of graph regularized NMF algorithms. Simulations with different initializations confirm the theoretical results obtained in the convergence analysis of the new algorithms. Experimental results on the COIL20, ORL, and JAFFE data sets demonstrate the clustering effectiveness of the proposed algorithms in comparison with state-of-the-art algorithms.

Acknowledgements

This research was supported in part by the National Natural Science Foundation of China (NSFC) under grant 61572107, the National Science and Technology Major Project of the Ministry of Science and Technology of China under grant 2018ZX10715003, the National Key R&D Program of China under grant 2017YFC1703905, and the Sichuan Science and Technology Program under grants 2018GZ0192, 2018SZ0065, and 2019YFS0283.

Appendix: Proofs of theorems

For matrix \(\mathbf{A}\), the row number m and the column number n are finite. Thus, in the proofs of the theorems, m and n can be considered as constants. Because of the normalization, we have \(\sum _{i=1}^{m}a_{ij}=1\) or \(\sum _{i=1}^{m}a_{ij}^2=1\). Since \(a_{ij}\le 1\) for all i, it follows from (20) that there always exists an i such that \(e_{i}>0\). For the update algorithm of \(x_{kj}\), if \(x_{kj} > 0\), \(c_{i} > 0\), from (20) and (21), \(\forall \, i\), \(d_{i}\ge 0\), it holds that

If there exists an i such that \(e_{i} =0\), then \(c_{i}= 0\), and it follows that

From (20), if \(\forall \, i\), \(e_{i} \ne 0\), then \(a_{ij}>0\); it holds that

For all i, k, and p, \(y_{ik}\) and \(l_{kp}\) are constants and \(y_{ik}\) are nonnegative. Therefore, for all i and p, there always exist some \(y_{ik}\) and \(l_{kp}\) such that \(y_{ik}>0\) and \(l_{kp}\ne 0\). Denote

Proof of Theorem 1

From (21) and (27), for the \((t+1)\)-th update, if \(x_{kj}(t) = 0\), then \(x_{kj}(t+1) = 0\). Thus \(x_{kj}(t+1)\) is bounded by any positive constant. If \(x_{kj}(t) > 0\), it follows that

From the definition of matrix \(\mathbf{L }\), if \(\lambda _{\mathbf{X }}\) is small enough, we have \(\lambda _{\mathbf{X }}\sum _{p=1 }^{N}l_{kp}x_{pj}(t)< \sum _{q=1}^{m}a_{qj}\). Thus \(x_{kj}(t+1)\) will be upper bounded by \(\sum _{i=1}^{m}y_{ik}\).

Inequality (29) shows that in any update step, \(x_{kj}\) is always upper bounded by a positive constant.

On the other hand, assuming that \(C_{0}\) is a nonnegative constant, if \(x_{kj}(t)\ge C_{0}\), from Eq. (19), it follows that:

From (31), assume

For any update step t, if \(x_{kj}(t)\ge C_{0}\), then it holds that

Inequality (32) shows that, for any update of \(x_{kj}\), if the initialization satisfies \(x_{kj}(0)>0\), then \(x_{kj}(t)>0\) at every update step t; if the initialization satisfies \(x_{kj}(0)=0\), then \(x_{kj}(t)=0\) at every update step t. The update of \(a_{ij}\) has a similar result; to save space, we omit the detailed analysis here.
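The sign-preservation property can be observed numerically on a simpler rule. The sketch below uses the classical Lee–Seung divergence-based multiplicative update for \(\mathbf{X}\) with \(\mathbf{A}\) held fixed and no manifold term; it is an illustrative stand-in, not update rule (9) of this paper, and the function name `kl_update_x` and the small matrices are hypothetical.

```python
# Illustrative only: classical Lee-Seung divergence update for X with A fixed
# (no manifold regularization). This is NOT update rule (9) of the paper.
def kl_update_x(Y, A, X):
    """One multiplicative update of X (N x n) for Y ~ A X^T, Y (m x N), A (m x n)."""
    m, n, N = len(A), len(A[0]), len(X)
    # Current reconstruction R = A X^T, assumed strictly positive here.
    R = [[sum(A[i][j] * X[k][j] for j in range(n)) for k in range(N)]
         for i in range(m)]
    X_new = [[0.0] * n for _ in range(N)]
    for k in range(N):
        for j in range(n):
            num = sum(A[i][j] * Y[i][k] / R[i][k] for i in range(m))
            den = sum(A[i][j] for i in range(m))
            # Multiplicative form: a zero entry stays zero, a positive one stays positive.
            X_new[k][j] = X[k][j] * num / den
    return X_new

# Hypothetical toy data: one entry of X is initialized to exactly zero.
Y = [[1.0, 2.0], [3.0, 1.0]]
A = [[0.6, 0.3], [0.4, 0.7]]
X_t = [[0.0, 1.0], [2.0, 0.5]]
for _ in range(20):
    X_t = kl_update_x(Y, A, X_t)
```

After the loop, `X_t[0][0]` is still exactly zero while the other three entries remain strictly positive, which mirrors the behavior described for \(x_{kj}(0)=0\) and \(x_{kj}(0)>0\).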

For the update of \(a_{ij}\), since \(m_{ii}\ge 0\), from (24), (25), and (32), it follows that

If \(\lambda _{\mathbf{A }}\sum _{p=1, p\ne i}^{m}m_{ip}+NM_{0} \le 0\), it holds that

Inequality (33) shows that after the \((t+1)\)-th update, \(a_{ij}\) will be finite under condition (32). However, the denominator in the update may become zero without the constraint \(\sum _{p=1}^{N}x_{pj} +\lambda _{\mathbf{A }}[\mathbf{M }\mathbf{A }]_{ij} \ge \varepsilon \). Thus, without this constraint, we cannot guarantee the non-divergence of the learning update of \(a_{ij}\). With this constraint, the normalization of \(a_{ij}\) in each update iteration guarantees \(a_{ij} \le 1\) for any i and j. Thus, the proof of Theorem 1 is complete. \(\square \)
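The two safeguards mentioned at the end of this proof, a lower bound \(\varepsilon\) on the denominator and a per-iteration normalization of \(\mathbf{A}\), can be sketched as follows. The helper names `safe_ratio` and `normalize_columns` and the value of `EPS` are assumptions for illustration; the sketch uses the column-sum normalization \(\sum_{i}a_{ij}=1\) stated at the start of the appendix.

```python
import math

EPS = 1e-12  # hypothetical value for the lower bound epsilon

def safe_ratio(num, den, eps=EPS):
    """Divide with the denominator floored at eps, so the ratio stays finite."""
    return num / max(den, eps)

def normalize_columns(A):
    """Scale each column of a nonnegative matrix A so that it sums to 1."""
    m, n = len(A), len(A[0])
    out = [row[:] for row in A]
    for j in range(n):
        col_sum = sum(A[i][j] for i in range(m))
        if col_sum > 0:  # leave an all-zero column untouched
            for i in range(m):
                out[i][j] = A[i][j] / col_sum
    return out

# Hypothetical 2 x 2 example.
A_raw = [[2.0, 0.0], [6.0, 5.0]]
A_norm = normalize_columns(A_raw)      # columns become (0.25, 0.75) and (0.0, 1.0)
ratio_at_zero = safe_ratio(1.0, 0.0)   # finite despite a zero denominator
```

Because every entry is nonnegative and each column sums to 1, no entry of `A_norm` can exceed 1, which is the bound \(a_{ij}\le 1\) used in the proof.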

Proof of Theorem 2

Assume

For the updates of \(x_{kj}\), at any update step t, if

then it follows that

Since \(\frac{\sqrt{N}\sum _{i=1}^{m}y_{ij}}{1-\sqrt{N}\lambda _{\mathbf{X }}||{\mathbf {l}}_{r\cdot }||}\) is a constant, if the initialization satisfies \(||{\mathbf {x}}_{\cdot j}(0)||\le \frac{\sqrt{N}\sum _{i=1}^{m}y_{ij}}{1-\sqrt{N}\lambda _{\mathbf{X }}||{\mathbf {l}}_{k\cdot }||}\), then (34) shows that \(x_{kj}\) is always upper bounded. Thus, the proof is complete. \(\square \)

Proof of Theorem 3

The update algorithms (9) and (10) show that for each update iteration, all the elements in \({\mathbf {A}}\) and \({\mathbf {X}}^T\) will be updated. However, the updates of \(x_{kj}\) can be considered column by column and the updates of \(a_{ij}\) can be considered row by row. Assuming \({\mathbf {x}}_{k\cdot }=(x_{k1}, x_{k2},\ldots , x_{kn})^T\), \({\mathbf {a}}_{i\cdot }=(a_{i1}, a_{i2},\ldots , a_{in})\), we have the following update systems for \( x_{kj}\) and \( a_{ij}\):

From systems (35) and (36), it is clear that for the update rules of \(x_{kj}\), if \(\lambda _{\mathbf{X }}=0\), they only include the column vector \({\mathbf {x}}_{k\cdot }\) of matrix \({\mathbf {X}}^T\) as a variable, and for the update rules of \(a_{ij}\), if \(\lambda _{\mathbf{A }}=0\), they only include the row vector \({\mathbf {a}}_{i\cdot }\) of matrix \({\mathbf {A}}\) as a variable. Denoting

the following two systems hold:

For NMF, since \({\mathbf {A}}\) and \({\mathbf {X}}\) are both variables, the objective functions are not jointly convex in the two variables. Therefore, the learning algorithms have multiple fixed points if all the elements in \({\mathbf {A}}\) and \({\mathbf {X}}\) are updated at the same time. However, in practical data decomposition, the updates for matrices \({\mathbf {A}}\) and \({\mathbf {X}}\) are computed alternately. A reasonable assumption is that update system (39) is used to find a group of fixed points \(x_{kj0}\), \(j = 1, 2,\ldots , n\) for some given matrix \({\mathbf {A}}\), and the elements in \({\mathbf {A}}\) are not changed during the updates of \(x_{kj}\). Thus, we can temporarily consider \({\mathbf {A}}\) as a constant matrix in the study of the fixed point \(x_{kj0}\). For the update of \(a_{ij}\), we make a similar assumption.

For the kj-th update, if \(x_{kj}=0\) is a solution of Eq. (11), then \(x_{kj}=0\) is a fixed point of the kj-th update in (9). However, the elements in \({\mathbf {x}}_{k\cdot }\) cannot all be zero; otherwise the denominators in the learning algorithms may be zero. In fact, inequality (31) shows that for any point \(x_{kj}\), if its initial point satisfies \(x_{kj}(0)>0\), then at any update step t, it always holds that \(x_{kj}(t)>0\). A similar result holds for \(a_{ij}\).

Let us find all the nonnegative fixed points for the kj-th update. From Eqs. (11) and (12), if all the solutions \(x_{kj0}\) and \(a_{ij0}\) are nonzero, they are included in the following two linear equation systems separately:

and

For linear equation system (41), only \(x_{kj}\) (\(j = 1, 2,\ldots , n\)) are considered as variables of the system. Denoting \(v_{i}(x_{k1}, x_{k2},\ldots , x_{kn})= v_{i}\), linear equation system (41) can be simplified to

which can be rewritten as

Assuming \((v_{10}, v_{20},\ldots , v_{m0})\) is a solution of the equation system (44), if there exists some i, such that \(y_{ik}=0\), then \(v_{i0} =0\); the number of variables will reduce to \(m-1\). Thus, we can always assume for any i, \(v_{i0} >0\). From (37), it holds that

which can be rewritten as

If for some \(\mathbf{A }\), linear equation systems (44) and (46) have nonnegative solutions, then update algorithm (9) will have nonnegative fixed points.

Similarly for the update of \(a_{ij}\), from (38) and (40), we have the following linear equation systems.

Assuming \((s_{10}, s_{20},\ldots , s_{N0})\) is a solution of the equation system (47), it follows that

(48) is equivalent to

Thus, if for some \(\mathbf{X }\), linear equation systems (47) and (49) have nonnegative solutions, then update algorithm (10) will have nonnegative fixed points.

For the given initializations and observation sample matrix \(\mathbf{Y }\), the process of NMF learning is equivalent to solving the linear systems (44), (46) and (47), (49) alternately. When solving Eqs. (44), (46), matrix \(\mathbf{A }\) is temporarily considered as a constant. Similarly, when solving Eqs. (47), (49), \(\mathbf{X }^T\) is temporarily considered as a constant. When the learning converges, the fixed points of the learning algorithms are obtained, which indicates that the solutions of these systems are obtained.

If \(\lambda _{{\mathbf {A}}} = \lambda _{{\mathbf {X}}}=0\), systems (41) and (42) can be rewritten as

and

For any \({\mathbf {a}}_{i\cdot }\) and \({\mathbf {x}}_{k\cdot }\), the only solutions for systems (50) and (51) are \(v_{1} = v_{2} = \cdots = v_{m} = s_{1} = s_{2} = \cdots = s_{N} = 1\). From Eqs. (37) and (38), it follows that

Thus, for sample matrix \(\mathbf{Y }\), NMF learning is to find matrices \(\mathbf{A }\) and \(\mathbf{X }\) such that \(\mathbf{Y }=\mathbf{A }\mathbf{X }^T\). Clearly, matrices \(\mathbf{A }\) and \(\mathbf{X }\) of this type exist. The conditions for a solution to exist are:

- (a) For variables \(x_{kj}\), \(j=1, 2,\ldots , n\), if the ranks of matrix \({\mathbf {A}}\) and the augmented matrix of Eq. (46) are equal, then Eq. (46) has one or more groups of nonzero solutions.

- (b) For variables \(a_{ij}\), \(j=1, 2,\ldots , n\), if the ranks of matrix \({\mathbf {X}}^{T}\) and the augmented matrix of Eq. (49) are equal, then Eq. (49) has one or more groups of nonzero solutions.

Clearly, only if the conditions in both (a) and (b) are satisfied can the NMF learning algorithms reach their equilibrium state. At this state, we can say that the learning algorithms converge.
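Conditions (a) and (b) compare the rank of a coefficient matrix with the rank of its augmented matrix, which is the standard consistency test for a linear system. A minimal sketch of that test is given below; the functions `matrix_rank` and `is_consistent` and the small integer system are hypothetical, and the check says nothing about whether a solution is nonnegative.

```python
from fractions import Fraction

def matrix_rank(rows):
    """Rank of a matrix via Gauss-Jordan elimination over exact rationals."""
    M = [[Fraction(v) for v in row] for row in rows]
    n_cols = len(M[0]) if M else 0
    rank = 0
    for col in range(n_cols):
        # Find a row at or below the current rank with a nonzero entry in this column.
        pivot = next((r for r in range(rank, len(M)) if M[r][col] != 0), None)
        if pivot is None:
            continue
        M[rank], M[pivot] = M[pivot], M[rank]
        for r in range(len(M)):
            if r != rank and M[r][col] != 0:
                factor = M[r][col] / M[rank][col]
                M[r] = [a - factor * b for a, b in zip(M[r], M[rank])]
        rank += 1
    return rank

def is_consistent(G, d):
    """True when rank(G) equals rank([G | d]), i.e. G x = d has a solution."""
    augmented = [list(row) + [rhs] for row, rhs in zip(G, d)]
    return matrix_rank(G) == matrix_rank(augmented)

# Hypothetical system: the second equation is twice the first.
G = [[1, 2], [2, 4], [0, 1]]
solvable = is_consistent(G, [3, 6, 1])      # right-hand side respects the dependency
unsolvable = is_consistent(G, [3, 7, 1])    # right-hand side breaks the dependency
```

Here `solvable` is `True` and `unsolvable` is `False`: changing one right-hand side entry raises the rank of the augmented matrix from 2 to 3 while the rank of `G` stays at 2.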

In fact, for all k, if \({\mathbf {X}}\) is a group solution of Eq. (46), then the corresponding \({\mathbf {A}}\) is a group solution of Eq. (49). For \(a_{ij}\), we have the same result.
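For the unregularized case \(\lambda _{\mathbf{A}} = \lambda _{\mathbf{X}}=0\), the statement that an exact factorization \(\mathbf{Y}=\mathbf{A}\mathbf{X}^T\) is an equilibrium can be checked numerically. The sketch below uses the classical Lee–Seung divergence-based update for \(\mathbf{A}\) with \(\mathbf{X}\) held fixed as a stand-in for update rule (10), which is an assumption; the names `reconstruct` and `kl_update_a` and the toy matrices are hypothetical.

```python
def reconstruct(A, X):
    """Return A X^T for A (m x n) and X (N x n)."""
    m, n, N = len(A), len(A[0]), len(X)
    return [[sum(A[i][j] * X[k][j] for j in range(n)) for k in range(N)]
            for i in range(m)]

def kl_update_a(Y, A, X):
    """One classical divergence-based multiplicative update of A with X fixed."""
    m, n, N = len(A), len(A[0]), len(X)
    R = reconstruct(A, X)
    return [[A[i][j]
             * sum(X[k][j] * Y[i][k] / R[i][k] for k in range(N))
             / sum(X[k][j] for k in range(N))
             for j in range(n)] for i in range(m)]

def max_abs_diff(P, Q):
    """Largest entrywise absolute difference between two equally sized matrices."""
    return max(abs(p - q) for rp, rq in zip(P, Q) for p, q in zip(rp, rq))

# Hypothetical factors; Y_exact is built so that Y = A X^T holds exactly.
A0 = [[0.2, 0.5], [0.8, 0.5]]
X0 = [[1.0, 2.0], [3.0, 1.0], [0.5, 0.5]]
Y_exact = reconstruct(A0, X0)
change_exact = max_abs_diff(kl_update_a(Y_exact, A0, X0), A0)

# Perturb one sample entry so that Y no longer equals A X^T.
Y_perturbed = [row[:] for row in Y_exact]
Y_perturbed[0][0] *= 2.0
change_perturbed = max_abs_diff(kl_update_a(Y_perturbed, A0, X0), A0)
```

When every ratio \(y_{ik}/[\mathbf{A}\mathbf{X}^T]_{ik}\) equals 1, the update multiplies each \(a_{ij}\) by 1, so `change_exact` is zero up to rounding, whereas `change_perturbed` is strictly positive.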

At the equilibrium state, to simplify the expression, \(x_{1}, x_{2},\ldots , x_{n}\) are used to replace variables \(x_{k1}, x_{k2},\ldots , x_{kn}\) in the following linear equation system. For the case of \(\lambda _{\mathbf{X }}=0\), \((v_{1}, v_{2},\ldots , v_{m}) = {\mathbf {1}}\) is the only solution of Eq. (50). In the update of \(x_{kj}\), \(\mathbf{A }\) is temporarily considered as a constant matrix. From (46), for any given matrix \({\mathbf {A}}\), assume \(r = \hbox {rank}({\mathbf {A}})\). Using Gaussian elimination, the following equivalent linear equation system holds:

where \(g_{ii}\ne 0\) (\(i=1, 2,\ldots , r\)), \(x_{r+1},x_{r+2},\ldots , x_{n}\) are the free variables of the system, and \(({\bar{d}}_{1}, {\bar{d}}_{2},\ldots , {\bar{d}}_{r})\) is uniquely determined by \({\mathbf {v}}= (v_{1}, v_{2},\ldots , v_{m})\). Clearly, system (52) has an infinite number of solutions since usually we have \(n \ge r\) in NMF applications. The solutions of system (52) depend on the values of \(x_{r+1},x_{r+2},\ldots , x_{n}\). For each group of determined values of \(x_{r+1},x_{r+2}, \ldots , x_{n}\), the linear equation system has exactly one solution. However, \(x_{r+1},x_{r+2},\ldots , x_{n}\) can be uniquely determined by the initializations in the learning. Thus, for a group of given initializations, the solution vector of system (52), \({\mathbf {x}} = (x_{1},x_{2},\ldots , x_{n})\), is unique, and the update will converge to the unique fixed point of the learning algorithm.
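The role of the free variables can be illustrated with back-substitution. The sketch below assumes that system (52) has an upper-trapezoidal form with nonzero diagonal entries \(g_{ii}\), which is an assumption about its layout; the function `solve_pivots` and the 2 x 3 example are hypothetical. Each choice of free variables yields exactly one value for the remaining variables.

```python
def solve_pivots(G, d_bar, free_values):
    """Back-substitute x_1..x_r given the free variables x_{r+1}..x_n.

    G is an r x n upper-trapezoidal matrix with nonzero diagonal entries g_ii,
    d_bar is the right-hand side of length r, free_values has length n - r.
    """
    r, n = len(G), len(G[0])
    x = [0.0] * r + [float(v) for v in free_values]
    for i in range(r - 1, -1, -1):
        # Everything to the right of the pivot is already known at this point.
        tail = sum(G[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (d_bar[i] - tail) / G[i][i]
    return x

# Hypothetical system with r = 2 pivots and one free variable x_3.
G_example = [[2, 1, 1],
             [0, 1, 3]]
d_example = [5, 4]
x_a = solve_pivots(G_example, d_example, [1.0])  # [1.5, 1.0, 1.0]
x_b = solve_pivots(G_example, d_example, [0.0])  # [0.5, 4.0, 0.0]
```

Both `x_a` and `x_b` satisfy the same two equations, so the system alone does not single out a solution; fixing the free variable does. The sketch does not enforce nonnegativity, so some choices of free variables may give solutions that are not admissible for NMF.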

For the SMNMF, it always holds that \(\lambda _{\mathbf{A }}\ne 0\) and/or \(\lambda _{\mathbf{X }}\ne 0\). Thus, we have the following different cases:

If \(\lambda _{{\mathbf {A}}}=0\), \(\lambda _{{\mathbf {X}}}\ne 0\), it holds that

If \(\lambda _{{\mathbf {A}}}\ne 0\), \(\lambda _{{\mathbf {X}}}=0\), it holds that

In these two cases, the fixed points that simultaneously satisfy Eqs. (11) and (12) do not exist. Therefore, the learning algorithms can only obtain their fixed points approximately.

If both \(\lambda _{{\mathbf {A}}}>0\) and \(\lambda _{{\mathbf {X}}}>0\), then it holds that

Although the separation results have \(\mathbf {AX}^T<{\mathbf {Y}}\), systems (44) and (46) may have solutions, which are the fixed points of the learning algorithms (9) and (10) and at the same time the sparseness is imposed. Assuming the ik-th error is \(d_{ik}\), it follows that

Combining Eqs. (44), (46), (47) and (49), we have \(v_{i}\ge 1\) and \(s_{k}\ge 1\). Thus, a unique separation result \(\mathbf{Y } = \mathbf{A }\mathbf{X }^T + \mathbf{d }\) can be achieved, where \(\mathbf{d }\) is a displacement matrix. The proof is complete. \(\square \)

Cite this article

Yang, S., Liu, Y., Li, Q. et al. Non-negative Matrix Factorization with Symmetric Manifold Regularization. Neural Process Lett 51, 723–748 (2020). https://doi.org/10.1007/s11063-019-10111-y

DOI: https://doi.org/10.1007/s11063-019-10111-y