Abstract

Compared with traditional neural networks, the extreme learning machine (ELM) offers outstanding training speed at low computational cost. However, ELM often needs a large number of hidden layer neurons, and meaningful features of the data are sometimes sacrificed to improve training speed. To address these problems, a novel network inspired by biological neurons, the multi-parallel extreme learning machine with excitatory and inhibitory neurons (MEI-ELM), is proposed. In MEI-ELM, (1) a parallel system is introduced to make the network more compact and reduce the number of hidden layer neurons, and (2) the excitatory and inhibitory properties of biological neurons are introduced into data processing to improve performance. MEI-ELM, ELM, Fast Learning Network (FLN) and Fast Learning Network with Parallel Layer Perceptrons (PLP-FLN) are applied to 11 classical regression problems, and the results show that MEI-ELM performs much better than the other methods in generalization and stability.

1 Introduction

Over the past decades, the Artificial Neural Network (ANN), a feasible tool for addressing various regression problems, has attracted wide attention [1,2,3]. From the perspective of information processing, an ANN can be considered an abstract representation of the human brain. Nevertheless, as research has deepened, various shortcomings of early neural networks have been revealed. For instance, their learning speed is far lower than required, so training may take hours or even days, and optimization algorithms based on gradient descent easily fall into local minima [4]. Accordingly, how to address these shortcomings remains a critical open question.

To address these questions, Huang et al. [5] presented a single hidden layer feedforward neural network named Extreme Learning Machine (ELM) in 2006. Owing to its prominent learning speed, ELM has been extensively employed in various fields, e.g., function approximation [6, 7], pattern classification [7,8,9] and others [10]. Over the past few years, ELM has attracted considerable attention for its advantages. For structural optimization, Huang et al. [11] designed incremental ELM (I-ELM). Besides, two extreme learning machine models, semi-supervised ELM (SS-ELM) and unsupervised ELM (US-ELM), were presented based on manifold regularization to conduct multi-class classification or multi-cluster clustering [12]. In [13], a residual compensation ELM (RC-ELM) with a multi-layer structure was proposed, in which residual compensation is performed layer by layer to remodel the unmodeled prediction error of the previous layer. Numerous variants of ELM exhibit good performance on their corresponding problems [14,15,16]. However, ELM and its variants still have several limitations. For instance, in some scenarios they require many hidden layer neurons, and good values for their randomly initialized weights are difficult to obtain.

To reduce the effect of randomly initialized weights and biases, Li et al. proposed the Fast Learning Network (FLN) based on ELM [17, 18]. The major difference between FLN and ELM is that the output layer of FLN receives the linear information from the input neurons in addition to the nonlinear information from the hidden layer neurons that ELM also uses. To further enhance the performance of FLN, Li et al. proposed the Fast Learning Network with Parallel Layer Perceptrons (PLP-FLN) [19]. PLP-FLN, a three-parallel network, builds on the structural characteristics of parallel layer perceptrons (PLP) and exhibits high performance in regression and classification [20].

In fact, the activity in ELM, FLN and PLP-FLN is driven entirely by excitatory neurons [21], and the connection mode between the neurons is identical to that of most feedforward network models. None of these networks accounts for inhibitory neurons [21, 22], although Billeh [23] showed in 2017 that inhibitory neurons are more active in the brain.

To summarize, the highlights of this paper are listed as follows:

1. We propose a novel learning method for regression called Multi-parallel Extreme Learning Machine with Excitatory and Inhibitory neurons (MEI-ELM). The model consists of two stages: a multi-parallel link information transmission stage, and an excitatory and inhibitory neuron data processing stage.

2. A multi-input, multi-parallel structure is used in MEI-ELM, which gives it a more compact structure [24] and fewer hidden layer neurons.

3. The properties of biological neurons [25, 26] are introduced into MEI-ELM. Based on these properties, a data processing function is proposed to process the information after the multiple parallel links.

The paper is organized as follows: Section 2 reviews ELM. Section 3 introduces the mathematical model of the Multi-parallel Extreme Learning Machine with Excitatory and Inhibitory neurons (MEI-ELM). In Sect. 4, the performance of MEI-ELM is evaluated and compared with three other networks. Finally, Sect. 5 discusses and summarizes this article.

2 Review of ELM

Extreme Learning Machine is a single hidden layer feedforward network that performs well on various regression problems. Compared with traditional networks, it has two advantages: (1) fast training speed; (2) uniqueness of the output weights once the input weights and hidden biases have been randomly initialized.

The mathematical model of the Extreme Learning Machine is given as follows:

Let \(\left\{ \left( x_{i},y_{i} \right) \right\} _{i=1}^{N}\) be N distinct samples, with \(x_{i}= \left[ x_{1}^{i},x_{2}^{i},\ldots ,x_{n}^{i}\right] ^{T}\in R^{n}\) and \(y_{i}= \left[ y_{1}^{i},y_{2}^{i},\ldots , y_{l}^{i}\right] ^{T}\in R^{l}\). If the number of hidden layer neurons is set as m, then the input weight W, hidden layer bias B and output weight \(\beta \) are matrices of size \(m\times n\), \(m\times 1\) and \(l\times m\), respectively.

The output of the extreme learning machine can be expressed as:

where \(g_{j}\left( \cdot \right) \) is the hidden layer activation function.

Equation (1) can be rewritten as Eq. (2).

where H is the output matrix of hidden layer.

The output weight matrix \(\beta \) can be calculated by Moore–Penrose generalized inverse.

where \(H^{+}\) is the Moore–Penrose (MP) generalized inverse of H.
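The displayed Eqs. (1) to (3) referred to above are not reproduced in this text. A form consistent with the stated dimensions (W of size \(m\times n\), B of size \(m\times 1\), \(\beta \) of size \(l\times m\)) is sketched below. The symbols \(W_{j}\) (j-th row of W), \(b_{j}\) (j-th entry of B) and \(\beta _{j}\) (j-th column of \(\beta \)), as well as the column-wise sample layout of H and Y, are assumptions of this sketch and may differ from the published notation.

\[
y_{i} = \sum _{j=1}^{m} \beta _{j}\, g_{j}\left( W_{j} x_{i} + b_{j} \right) , \quad i = 1,\ldots ,N \tag{1}
\]

\[
Y = \beta H, \quad H = \left[ g_{j}\left( W_{j} x_{i} + b_{j} \right) \right] _{j=1,\ldots ,m;\; i=1,\ldots ,N} \in R^{m\times N}, \quad Y = \left[ y_{1},\ldots ,y_{N}\right] \in R^{l\times N} \tag{2}
\]

\[
\beta = Y H^{+} \tag{3}
\]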

ELM training consists of the following three steps:

Step 1: The input weights W and bias B are generated randomly.

Step 2: Calculate the hidden layer output matrix H.

Step 3: Compute the output weights \(\beta \).
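The three steps above can be sketched in NumPy as follows. This is a minimal illustration, not the authors' MATLAB implementation: the column-wise data layout, the uniform range [-1, 1] for the random weights, the sigmoid activation and the helper names `elm_train` and `elm_predict` are all assumptions.

```python
import numpy as np


def sigmoid(z):
    """Logistic activation applied element-wise."""
    return 1.0 / (1.0 + np.exp(-z))


def elm_train(X, Y, m, rng):
    """Train an ELM with m hidden neurons.

    X: (n, N) inputs, one sample per column.
    Y: (l, N) targets, one sample per column.
    Returns (W, B, beta) with shapes (m, n), (m, 1), (l, m).
    """
    n = X.shape[0]
    # Step 1: random input weights and hidden biases (range is an assumption).
    W = rng.uniform(-1.0, 1.0, size=(m, n))
    B = rng.uniform(-1.0, 1.0, size=(m, 1))
    # Step 2: hidden layer output matrix H, shape (m, N).
    H = sigmoid(W @ X + B)
    # Step 3: output weights via the Moore-Penrose generalized inverse.
    beta = Y @ np.linalg.pinv(H)
    return W, B, beta


def elm_predict(X, W, B, beta):
    """Network output for inputs X, shape (l, N)."""
    return beta @ sigmoid(W @ X + B)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    X = rng.uniform(-1.0, 1.0, size=(2, 50))
    Y = np.sin(X.sum(axis=0, keepdims=True))
    W, B, beta = elm_train(X, Y, m=5, rng=rng)
    print("training RMSE:", np.sqrt(np.mean((elm_predict(X, W, B, beta) - Y) ** 2)))
```

No iterative optimization is involved: only the output weights are fitted, in a single least-squares solve, which is where the speed advantage described above comes from.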

3 Multi-parallel Extreme Learning Machine with Excitatory and Inhibitory Neurons

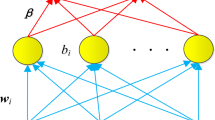

To further improve the performance of ELM, a novel learning algorithm, Multi-parallel Extreme Learning Machine with Excitatory and Inhibitory neurons (MEI-ELM), is proposed; its structure is given in Fig. 1. A multi-parallel system consists of many units with a simple structure and a single function. Introducing it into our algorithm allows multiple pieces of information to be processed at the same time, which improves the processing speed and fault tolerance of the network. The parallel structure of MEI-ELM is mainly embodied in two parts: (1) from the input layer, two single hidden layer feedforward networks and one single layer feedforward network transmit information at the same time; (2) there are two ways to connect the hidden layer and the output layer. The major structural features of MEI-ELM are as follows:

1. There are two single hidden layer feedforward neural networks (SLFN) with parallel links, called the top network and the bottom network. The hidden outputs of the two networks are fed not only to the output neurons but also to an operator.

2. Two operators are introduced, which make the two hidden outputs interact with each other.

3. The original input information in the middle is fed directly to the output neurons, so that the output neurons receive not only the nonlinear information from the hidden layers and the two operators but also the linear information from the original input neurons.

4. Neurons with the excitatory and inhibitory properties of biological neurons are introduced.

3.1 Learning Process of MEI-ELM

As seen from Fig. 1, MEI-ELM can be thought of as a multi-parallel system that can deal with both linear and nonlinear problems. Furthermore, the network structure of MEI-ELM is more compact and its parts are more closely connected. The learning process is as follows:

Suppose there are N samples \(\left\{ \left( x_{t},y_{t} \right) \right\} _{t=1}^{N}\), where \(x_{t}= \left[ x_{0t},x_{1t},\ldots ,x_{nt}\right] ^{T}\in R^{n+1}\) is the feature vector of the t-th sample and \(x_{0t}\) is the bias of the hidden neurons. Let the number of neurons in the hidden layer be m, and let \(y_{t}\) be the output vector of the t-th sample. The network can be described as:

where \(\delta (\cdot )\) and \(\varphi (\cdot )\) are the activation functions of the two hidden layers, respectively. The inputs of the top, middle and bottom layers are denoted \(a_{jt}\), \(b_{jt}\) and \(c_{kt}\), and the outputs of the top and bottom hidden layers are \(d_{mt}\) and \(e_{mt}\), respectively. Together they make up all the inputs of this multi-parallel system. They are expressed as:

The input weights of the top and bottom networks are \(w^{T}\) and \(w^{B}\), respectively. The weight between the input neurons and the output neurons is \(w^{in}\), and \(w^{TO}\) and \(w^{BO}\) are the weights between the top and bottom hidden layer neurons, respectively, and the output neurons.

To facilitate calculations, the hidden layer output matrix can be expressed as two parts:

The hidden layer output of the top network is

The hidden layer output of the bottom network is

Equations (12) and (13) can be expressed in matrix form, respectively:

After an operation is introduced, the new matrix can be obtained:

So, the input matrix can be represented as:

where H can be seen as the input matrix of the output layer, and X1 is the input sample matrix without the hidden layer bias \(x_{0t}\). D and E are the matrix representations of the hidden layer features \(d_{mt}\) and \(e_{mt}\). In summary, the output of MEI-ELM can be expressed as Eq. (18).

where \(f\left( \cdot \right) \) is the activation function and \(\beta \) is the output weight.

The principle of solving output weights is the same as ELM.

By the properties of the Moore–Penrose generalized inverse, the minimum-norm least-squares solution for the output weight is given by Eq. (19).
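The learning process described in this subsection can be outlined in NumPy as follows. This is only a structural sketch under several stated assumptions: the displayed equations and the exact form of the two operators are not available in this text, so the operators are passed in as caller-supplied callables (the ones in the usage lines are hypothetical placeholders), the bias input \(x_{0t}\) is taken to be 1, both hidden layers use a sigmoid, the output activation \(f\) is taken as the identity, and the function names `build_H` and `mei_elm_fit` are invented for illustration.

```python
import numpy as np


def sigmoid(z):
    """Logistic activation applied element-wise."""
    return 1.0 / (1.0 + np.exp(-z))


def build_H(X1, w_top, w_bot, operators):
    """Stack everything that feeds the output layer.

    X1: (n, N) samples without the bias row.
    w_top, w_bot: (m, n + 1) input weights; column 0 multiplies the bias row.
    operators: callables (D, E) -> (m, N); hypothetical stand-ins.
    """
    N = X1.shape[1]
    X = np.vstack([np.ones((1, N)), X1])  # prepend bias row (assumed x_0t = 1)
    D = sigmoid(w_top @ X)                # hidden output of the top network
    E = sigmoid(w_bot @ X)                # hidden output of the bottom network
    mixed = [op(D, E) for op in operators]
    # Linear part (X1), both hidden outputs, and the operator outputs.
    return np.vstack([X1, D, E] + mixed)


def mei_elm_fit(X1, Y, m, operators, rng):
    """Random input weights, then output weights by generalized inverse."""
    n = X1.shape[0]
    w_top = rng.uniform(-1.0, 1.0, size=(m, n + 1))
    w_bot = rng.uniform(-1.0, 1.0, size=(m, n + 1))
    H = build_H(X1, w_top, w_bot, operators)
    beta = Y @ np.linalg.pinv(H)  # same principle as ELM
    return w_top, w_bot, beta


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    X1 = rng.uniform(-1.0, 1.0, size=(4, 30))
    Y = rng.uniform(-1.0, 1.0, size=(1, 30))
    placeholder_ops = [np.multiply, np.maximum]  # NOT the paper's operators
    w_top, w_bot, beta = mei_elm_fit(X1, Y, 3, placeholder_ops, rng)
    print(beta.shape)
```

With m hidden neurons per network and two operators, the output layer sees n + 4m rows, which illustrates how the parallel links widen the output-layer input without adding hidden neurons.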

3.2 The Excitatory and Inhibitory Neurons

According to [23], the information transmission process of biological neurons has the following characteristics: (1) signals can be excitatory or inhibitory; (2) the cumulative effect received by a neuron determines its working state; (3) each neuron has a threshold.

Inspired by biological neurons, an additional layer is added to process the output information, which gives MEI-ELM excitatory and inhibitory properties.

The additional layer processes the information fed to it with two data processing functions.

where x represents the input of the additional layer and the threshold \(\alpha \) lies in (0, 1). Traditional artificial neural networks generally do not consider the function of inhibitory neurons. However, the processes of inhibition and selection are mainly dominated by inhibitory neurons. Hence, a more accurate result can be obtained by adding simple data processing functions whose basic operating principles are similar to the action of excitatory and inhibitory neurons.

So, the final result can be expressed as Eq. (22).

To make the procedure of MEI-ELM clearer, pseudo-code for the proposed MEI-ELM is given in Algorithm 1.

4 Experiments on Regression Problems

In this section, we evaluate the performance of the proposed MEI-ELM on the 11 regression problems listed in Table 1 and compare it with ELM, Fast Learning Network (FLN) and Fast Learning Network with Parallel Layer Perceptrons (PLP-FLN). Five evaluation criteria are employed to test the performance of the four networks: the mean and Standard Deviation (SD) of the Root Mean Square Error (RMSE), the Mean Absolute Percentage Error (MAPE), R\(^{2}\) (R-Square) and Variance.
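For concreteness, the criteria named above can be computed as in the following sketch, which uses the standard textbook definitions. Several points are assumptions: MAPE is returned as a fraction rather than a percentage, the SD over repeated runs is the sample standard deviation, and the Variance criterion is left out because its exact definition is not given in this text. The function names are illustrative.

```python
import math
import statistics


def rmse(y_true, y_pred):
    """Root mean square error."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def mape(y_true, y_pred):
    """Mean absolute percentage error as a fraction; targets must be nonzero."""
    return sum(abs((t - p) / t) for t, p in zip(y_true, y_pred)) / len(y_true)


def r_square(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_t = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_t) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


def summarize_runs(rmse_per_run):
    """Mean and SD of RMSE over repeated runs (sample SD is an assumption)."""
    return statistics.mean(rmse_per_run), statistics.stdev(rmse_per_run)


if __name__ == "__main__":
    y_true = [1.0, 2.0, 4.0]
    y_pred = [1.0, 2.0, 2.0]
    print(rmse(y_true, y_pred), mape(y_true, y_pred), r_square(y_true, y_pred))
```

RMSE and MAPE are errors, so lower is better, whereas R-Square approaches 1 as the fit improves.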

Experiment setup: Each experiment is repeated 10 times, and the threshold \(\alpha \) of the data processing function is 0.001. All initial weights are generated randomly. The activation function is set to sigmoid. All the simulations for MEI-ELM, PLP-FLN, ELM and FLN are carried out in the MATLAB 8.3 environment on a desktop PC with Windows 7 (64 bit) and a 3.30 GHz CPU.

The basic information of all the regression data sets is shown in Table 1. All data sets are randomly divided into a training set and a testing set. Table 1 gives five items for each data set: the type of data set, the sample size, the size of the training set, the size of the testing set and the number of attributes.

4.1 Parameter Selection of MEI-ELM

In this part, the number of hidden layer nodes and the choice of activation function are considered. The other parameters are set as in the previous section.

The number of hidden layer neurons is critical in a single hidden layer feedforward network. In this paper, it is determined by threefold cross-validation. Taking the Servo data set as an example, the data set is split into 3 groups. In each experiment, one group acts as the test set, and the other two groups are used as the training set. The number of hidden layer nodes is then varied from 1 to 10, and the optimal number is chosen by comparing the average of the mean and standard deviation of RMSE. The experimental results are listed in Tables 2 and 3.
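The selection procedure above can be sketched as follows. The sketch is deliberately model-agnostic: `evaluate` is a hypothetical caller-supplied function that trains a network with m hidden nodes on the training indices and returns the test RMSE. Ranking candidates by the mean RMSE over the three folds alone is a simplifying assumption, since the text also takes the standard deviation into account.

```python
import random


def three_fold_indices(n_samples, seed=0):
    """Shuffle sample indices and deal them into three disjoint groups."""
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    return [idx[k::3] for k in range(3)]


def select_hidden_nodes(n_samples, candidates, evaluate, seed=0):
    """Return (best_m, best_score): lowest mean test RMSE over three folds.

    evaluate(m, train_idx, test_idx) -> test RMSE; supplied by the caller.
    """
    folds = three_fold_indices(n_samples, seed)
    best_m, best_score = None, float("inf")
    for m in candidates:
        scores = []
        for k in range(3):
            test_idx = folds[k]
            # The two remaining groups together form the training set.
            train_idx = [i for j in range(3) if j != k for i in folds[j]]
            scores.append(evaluate(m, train_idx, test_idx))
        mean_score = sum(scores) / 3
        if mean_score < best_score:
            best_m, best_score = m, mean_score
    return best_m, best_score


if __name__ == "__main__":
    # Toy stand-in for a real train-and-test routine.
    toy = lambda m, train_idx, test_idx: abs(m - 3) + 0.1
    print(select_hidden_nodes(30, range(1, 11), toy))
```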

As Table 2 shows, the data set is split into 3 groups (A, B and C). As the number of nodes increases, the training performance improves, except at 5 and 10 nodes. For the test set, Table 2 and Fig. 2 show that the mean test performance is best with 3 nodes. Therefore, the optimal number of hidden layer neurons in MEI-ELM is set to 3. We also searched for the optimal number of hidden layer neurons for MEI-ELM on all the other data sets and found that most of them require relatively few hidden neurons and exhibit a similar trend. Thus, the number of hidden layer neurons is set to 3 for all the data sets.

Six activation functions (Sigmoid, Hardlim, Sine, Tribas, Radbas and Tanh) are considered as candidates. Table 4 shows the performance of MEI-ELM under the six activation functions with three hidden nodes. The table shows that sigmoid gives the best results, followed by sine and tanh. Hence, the activation function is set to sigmoid.
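For reference, the six candidates can be written in NumPy as below. The text does not define them, so the definitions follow the usual MATLAB transfer functions of the same names (hard limit, triangular basis, radial basis), which is an assumption; the dictionary name `ACTIVATIONS` is illustrative.

```python
import numpy as np

# Element-wise activation functions, keyed by the names used in the text.
ACTIVATIONS = {
    "sigmoid": lambda z: 1.0 / (1.0 + np.exp(-z)),
    "hardlim": lambda z: (z >= 0).astype(float),           # 1 if z >= 0, else 0
    "sine": np.sin,
    "tribas": lambda z: np.maximum(1.0 - np.abs(z), 0.0),  # triangle on [-1, 1]
    "radbas": lambda z: np.exp(-z ** 2),                   # Gaussian bump
    "tanh": np.tanh,
}

if __name__ == "__main__":
    z = np.array([-2.0, 0.0, 0.5])
    for name, fn in ACTIVATIONS.items():
        print(name, fn(z))
```

Hardlim is piecewise constant and tribas and radbas are nearly zero away from the origin, so their hidden outputs carry less graded information than sigmoid, sine or tanh for inputs that are not centred near zero.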

4.2 Performance Estimation

In this part, the experiments are performed in two cases: (1) the same number of hidden nodes is set for every network; (2) the optimal number of hidden layer nodes is set for each network. The parameters and environment are identical to those of the previous section, and the activation function is set to sigmoid. The 11 regression data sets and five evaluation criteria (the mean and SD of RMSE, MAPE, R-Square and Variance) are used to assess the performance of ELM, FLN, PLP-FLN and MEI-ELM. For the four criteria other than R-Square, smaller values indicate better performance. For R-Square, the closer the value is to 1, the better the prediction ability.

4.2.1 Performance Comparison of Four Networks with the Same Hidden Layer Nodes

In this part, the number of hidden layer nodes of four networks is set to 3.

Figure 3 shows the mean training RMSE obtained by ELM, FLN, PLP-FLN and MEI-ELM. Compared with ELM, the training RMSE of MEI-ELM is much better on all data sets. Compared with PLP-FLN, MEI-ELM is slightly more accurate on the Machine CPU, Auto price, 2Abalone and CCPP data sets, whereas on the remaining seven data sets (Servo, Airfoil self noise, California Housing, Concrete, Concrete Slump, Housing, Energy efficiency) its regression accuracy is greatly improved. Overall, MEI-ELM achieves the best training accuracy on the 11 regression data sets.

Table 5 shows the mean and standard deviation of the testing RMSE of ELM, FLN, PLP-FLN and MEI-ELM. For the mean, Table 5 and Fig. 4 show that the testing RMSE of MEI-ELM is clearly better than that of the other algorithms on all data sets. For SD, FLN achieves better results than MEI-ELM on six data sets (Airfoil self noise, Concrete Slump, Housing, Machine CPU, Auto price and CCPP), but the performance of MEI-ELM is close to that of FLN. PLP-FLN achieves better results than MEI-ELM on only four data sets (Servo, Airfoil self noise, Concrete and CCPP).

The MAPE and R-Square of the test samples of ELM, FLN, PLP-FLN and MEI-ELM with three hidden nodes are listed in Table 6. For MAPE, MEI-ELM is noticeably better than the other three methods (ELM, FLN and PLP-FLN) on all data sets except two (2Abalone and CCPP), where PLP-FLN is better. For R-Square, MEI-ELM is much better than the other three methods on all data sets except one (Machine CPU), where FLN is better. Comparing the MAPE and R-Square of the four networks shows that MEI-ELM performs well on most of the data sets and exhibits better prediction ability.

Table 7 shows the Variance of the four networks, which measures their stability on the test sets. MEI-ELM is much better than the other three methods (ELM, FLN and PLP-FLN) on all data sets except one (Machine CPU), where FLN is better. This indicates that MEI-ELM is more stable than ELM, FLN and PLP-FLN when all networks have 3 hidden layer nodes.

4.2.2 Performance Comparison of Four Networks with the Optimal Hidden Layer Nodes

In this part, each network is allowed its own optimal number of hidden layer nodes. As before, threefold cross-validation is used to determine the optimal number for each network. Because testing the four algorithms on all the data sets does not yield a single best value for every data set, one value within the optimal range is selected for each method. The results are as follows: the optimal number of nodes is about 34 for ELM, about 18 for FLN, about 4 for PLP-FLN and about 3 for MEI-ELM.

The training RMSE of ELM, FLN, PLP-FLN and MEI-ELM is compared in Fig. 5. The mean and standard deviation of the testing RMSE of ELM, FLN, PLP-FLN and MEI-ELM are shown in Table 8 and Fig. 6.

Figure 5 shows that the training RMSE of MEI-ELM is optimal or close to optimal on four data sets (Concrete Slump, Housing, Auto price, CCPP). However, Table 8 and Fig. 6 show that the testing RMSE of MEI-ELM is better than that of ELM, FLN and PLP-FLN on seven data sets (Servo, Concrete Slump, Housing, Machine CPU, Auto price, 2Abalone, CCPP). Therefore, with the optimal hidden layer nodes for all four networks, MEI-ELM not only achieves the best accuracy on more than half of the data sets but also uses the fewest hidden layer nodes. For the SD of RMSE, MEI-ELM performs best on four of the 11 data sets (Servo, Concrete, Housing, 2Abalone), and on the other data sets it is almost equal to the best result. In summary, MEI-ELM has clear advantages over the other three networks in regression performance and stability.

Table 9 shows the MAPE and R-Square of the test samples of ELM, FLN, PLP-FLN and MEI-ELM with the optimal hidden layer nodes of each network. Of the 11 regression data sets, MEI-ELM is much better than the other three methods (ELM, FLN and PLP-FLN) in MAPE on seven (Servo, Airfoil self noise, California Housing, Concrete Slump, Housing, Machine CPU and Auto price) and in R-Square on eight (Servo, California Housing, Concrete Slump, Housing, Machine CPU, Auto price, 2Abalone and CCPP). Therefore, even when each network is tested with its own optimal number of hidden layer nodes, MEI-ELM still has better regression and generalization performance.

Table 10 shows the Variance of the test samples of ELM, FLN, PLP-FLN and MEI-ELM with the optimal hidden layer nodes of each network. Of the 11 regression data sets, the Variance of MEI-ELM is much better than that of the other three methods (ELM, FLN and PLP-FLN) on seven (Servo, California Housing, Concrete Slump, Housing, Machine CPU, 2Abalone and Auto price). On Airfoil self noise, the Variance of MEI-ELM is close to the optimal result, achieved by FLN. The Variance results on the 11 regression data sets indicate that, in both cases, the proposed MEI-ELM is more stable and has a greater advantage in dealing with regression problems.

5 Conclusions

In this paper, MEI-ELM is introduced, which combines a parallel structure with the properties of excitatory and inhibitory neurons. In MEI-ELM, the multi-parallel structure makes the network more compact, and the output is reprocessed based on the information transmission process of biological neurons. The proposed MEI-ELM shows better performance and stability than the other three networks on 11 regression data sets. Hence, MEI-ELM could be a useful machine learning tool for various regression problems.

MEI-ELM is a parallel combination of single hidden layer feedforward networks, which increases width rather than depth. Its performance may not be better than that of some deep networks on large data sets. Therefore, how to combine MEI-ELM with deep learning schemes to further improve performance should be considered in the future.

References

Seifert JW (2004) Data mining: an overview. In: World engineering congress

Green M, Ekelund U, Edenbrandt L et al (2009) Exploring new possibilities for case-based explanation of artificial neural network ensembles. Neural Netw 22:75–81

May RJ, Maier HR, Dandy GC (2010) Data splitting for artificial neural networks using SOM-based stratified sampling. Neural Netw Off J Int Neural Netw Soc 23:283–294

Li B, Li YB (2011) Chaotic time series prediction based on ELM learning algorithm. Tianjin Daxue Xuebao 44:701–704

Huang GB, Zhu QY, Siew CK (2006) Extreme learning machine: theory and applications. Neurocomputing 70:489–501

Li MB, Meng JE (2006) Nonlinear system identification using extreme learning machine. In: 9th International conference on control, automation, robotics and vision, 2006. ICARCV’06. IEEE, pp 1–4

Suresh S, Babu RV, Kim HJ (2009) No-reference image quality assessment using modified extreme learning machine classifier. Appl Soft Comput 9:541–552

Rong HJ, Huang GB, Sundararajan N et al (2009) Online sequential fuzzy extreme learning machine for function approximation and classification problems. IEEE Trans Syst Man Cybern B Cybern A Publ IEEE Syst Man Cybern Soc 39:1067–1072

Han F, Huang DS (2006) Improved extreme learning machine for function approximation by encoding a priori information. Neurocomputing 69:2369–2373

Rong HJ, Ong YS, Tan AH et al (2008) A fast pruned-extreme learning machine for classification problem. Neurocomputing 72:359–366

Huang GB, Chen L (2008) Enhanced random search based incremental extreme learning machine. Neurocomputing 71:3460–3468

Huang G, Song SJ, Gupta JND, Wu C (2014) Semi-supervised and unsupervised extreme learning machines. IEEE Trans Cybern 44(12):2405–2417

Jie Z, Wendong X, Yanjiao L et al (2018) Residual compensation extreme learning machine for regression. Neurocomputing 311:126–136

Lv F, Han M (2019) Hyperspectral image classification based on multiple reduced kernel extreme learning machine. Int J Mach Learn Cybern 6:1–9

Zhao F, Liu Y, Huo K et al (2018) Radar HRRP target recognition based on stacked autoencoder and extreme learning machine. Sensors 18(1):173

Khatab ZE, Hajihoseini A, Ghorashi SA (2018) A fingerprint method for indoor localization using autoencoder based deep extreme learning machine. IEEE Sens Lett 2(1):1–4

Li G, Niu P, Duan X et al (2013) Fast learning network: a novel artificial neural network with a fast learning speed. Neural Comput Appl 24:1683–1695

Caminhas WM, Vieira DAG, Vasconcelos JA (2003) Parallel layer perceptron. Neurocomputing 55:771–778

Li G et al (2017) Fast learning network with parallel layer perceptrons. Neural Process Lett 47:549–564

Caminhas WM, Vieira DAG, Vasconcelos JA (2003) Parallel layer perceptron. Neurocomputing 55:771–778

Uchizono K (1965) Characteristics of excitatory and inhibitory synapses in the central nervous system of the cat. Nature 207(4997):642–643

Wilson HR, Cowan JD (1972) Excitatory and inhibitory interactions in localized populations of model neurons. Biophys J 12(1):1–24

Billeh YN, Schaub MT (2018) Feedforward architectures driven by inhibitory interactions. J Comput Neurosci 44(18):63–74

Yao M, Li W, Liu Y (2011) Double parallel extreme learning machine. Energy Procedia 13:7413–7418

Corinto F et al (2011) Synchronization in networks of FitzHugh–Nagumo neurons with memristor synapses. In: European conference on circuit theory and design IEEE

Gerard R (1941) The interaction of neurones. Ohio J 41:160–172

Acknowledgements

This work was supported by the National Natural Science Foundation of China (Grant No. 61403331), the Program for the Top Young Talents of Higher Learning Institutions of Hebei (Grant No. BJ2017033), the Natural Science Foundation of Hebei Province (Grant No. F2016203427) and the China Postdoctoral Science Foundation (Grant No. 2015M571280).

About this article

Cite this article

Li, G., Zou, J. Multi-parallel Extreme Learning Machine with Excitatory and Inhibitory Neurons for Regression. Neural Process Lett 51, 1579–1597 (2020). https://doi.org/10.1007/s11063-019-10160-3

DOI: https://doi.org/10.1007/s11063-019-10160-3