Abstract

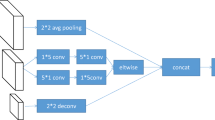

As convolutional layers deepen during feature extraction in deep learning networks, object detection performance decreases because feature integrity is gradually lost. In this paper, a convolutional feature frequency adaptive fusion object detection network is proposed to compensate for the frequency information lost during convolutional feature propagation. Two branches carry the high- and low-frequency-domain channel information to keep feature delivery stable. The adaptive feature fusion network compensates for the missing high-frequency features, improves the integrity of the features extracted by the convolutional neural network, and improves detection performance. Simulation tests showed that the algorithm’s detection results are significantly improved on blurred objects, overlapping objects, and objects with low contrast against the background. The detection results on the Common Objects in Context (COCO) dataset were more than 1% higher than those of the CornerNet algorithm. The proposed algorithm therefore performs well at detecting pedestrians, vehicles, and other objects, and is suitable for application in autonomous vehicle systems and smart robots.
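The two-branch idea can be illustrated with a minimal sketch. This is not the network described in the paper: it assumes a single 2D feature map, uses a 3x3 mean filter as a stand-in low-pass operator, takes the residual as the high-frequency branch, and fuses the branches with softmax weights. The function names (`low_pass`, `split_frequencies`, `adaptive_fuse`) and the `scores` parameter are hypothetical; in a trained network the fusion weights would presumably be learned rather than supplied by hand.

```python
import math


def low_pass(fmap):
    """3x3 mean filter with edge replication: a crude low-frequency estimate (assumption)."""
    h, w = len(fmap), len(fmap[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            total = 0.0
            for di in (-1, 0, 1):
                for dj in (-1, 0, 1):
                    # Clamp indices so border pixels reuse their nearest neighbour.
                    ii = min(max(i + di, 0), h - 1)
                    jj = min(max(j + dj, 0), w - 1)
                    total += fmap[ii][jj]
            out[i][j] = total / 9.0
    return out


def split_frequencies(fmap):
    """Return (low, high), where high is the residual fmap - low."""
    low = low_pass(fmap)
    high = [[x - l for x, l in zip(row_x, row_l)] for row_x, row_l in zip(fmap, low)]
    return low, high


def softmax(scores):
    """Numerically stable softmax over a short list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def adaptive_fuse(low, high, scores):
    """Weighted sum of the two branches; `scores` are hypothetical per-branch logits."""
    w_low, w_high = softmax(scores)
    return [
        [w_low * l + w_high * h for l, h in zip(row_l, row_h)]
        for row_l, row_h in zip(low, high)
    ]


if __name__ == "__main__":
    feature_map = [[0.0, 0.0, 0.0], [0.0, 9.0, 0.0], [0.0, 0.0, 0.0]]
    low, high = split_frequencies(feature_map)
    fused = adaptive_fuse(low, high, scores=[0.0, 1.0])  # favour the high-frequency branch
    print(fused)
```

Because the high-frequency branch is defined as a residual, the two branches always sum back to the input, so the fusion weights only control how much fine detail is emphasised relative to the smooth component.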

Funding

This work was supported by the National Natural Science Foundation of China (Grant No. 61673084) and the Natural Science Foundation of Liaoning Province of China (Grant Nos. 20170540192 and 20180550866).

Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

About this article

Cite this article

Mao, L., Li, X., Yang, D. et al. Convolutional Feature Frequency Adaptive Fusion Object Detection Network. Neural Process Lett 53, 3545–3560 (2021). https://doi.org/10.1007/s11063-021-10560-4

DOI: https://doi.org/10.1007/s11063-021-10560-4