Abstract

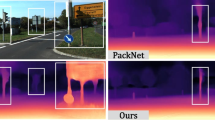

Dense depth estimation from a single image is a fundamental problem in computer vision, with applications in many robotic tasks. Fully supervised methods require large, accurate ground-truth datasets, which are often complex and expensive to acquire. By contrast, self-supervised learning has emerged as a promising alternative for monocular depth estimation because it does not require ground-truth depth data. In this paper, we propose a novel self-supervised joint learning framework for depth estimation using consecutive frames from monocular and stereo videos. Our architecture introduces two improvements: (1) triplet attention and (2) funnel activation (FReLU). Added to the depth and pose networks, the triplet attention module captures the importance of features across the dimensions of a tensor without any information bottleneck, which makes optimisation of the learning framework more reliable. FReLU is used in the non-linear activation layers to capture local image context adaptively, instead of relying on more complex convolutions in the convolution layers. Through the pixel-wise modelling capacity provided by its spatial condition, FReLU extracts the spatial structure of objects and enriches the details recovered in complex images. Experimental results show that the proposed method is comparable with state-of-the-art self-supervised monocular depth estimation methods.
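The two building blocks named in the abstract can be illustrated with a small NumPy sketch. It follows the published formulations as we understand them: FReLU (Ma et al., 2020) computes y = max(x, T(x)), where T is a per-channel 3×3 depthwise convolution, and triplet attention (Misra et al., 2021) uses three branches that each Z-pool (max and mean) over one dimension, apply a k×k convolution and a sigmoid gate, and average the three gated outputs. This is a minimal sketch under stated assumptions, not the authors' implementation: it assumes an unbatched (C, H, W) float tensor, omits the batch normalisation both modules use, uses random placeholder kernels instead of trained weights, and all function names are hypothetical. Instead of physically rotating the tensor, each branch pools over one axis and broadcasts its gate back along that axis, which matches the rotation formulation up to the orientation of the learned kernel.

```python
import numpy as np


def _pad(x, p):
    # Zero-pad the last two axes of a (C, H, W) array by p on each side.
    return np.pad(x, ((0, 0), (p, p), (p, p)))


def depthwise_conv_same(x, w):
    """Per-channel k x k cross-correlation with zero 'same' padding.

    x: (C, H, W), w: (C, k, k) with k odd -> (C, H, W).
    """
    _, h, wd = x.shape
    k = w.shape[-1]
    xp = _pad(x, k // 2)
    out = np.zeros(x.shape, dtype=float)
    for i in range(k):
        for j in range(k):
            out += w[:, i, j, None, None] * xp[:, i:i + h, j:j + wd]
    return out


def conv2d_same(x, w):
    """Multi-channel input, single-channel output k x k cross-correlation.

    x: (C_in, H, W), w: (C_in, k, k) with k odd -> (H, W).
    """
    _, h, wd = x.shape
    k = w.shape[-1]
    xp = _pad(x, k // 2)
    out = np.zeros((h, wd))
    for i in range(k):
        for j in range(k):
            out += np.einsum("c,chw->hw", w[:, i, j], xp[:, i:i + h, j:j + wd])
    return out


def frelu(x, w):
    # Funnel activation: max(x, T(x)), T = depthwise conv (BN omitted here).
    return np.maximum(x, depthwise_conv_same(x, w))


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def z_pool(x, axis):
    # Stack max- and mean-pooling over one axis -> (2, A, B).
    return np.stack([x.max(axis=axis), x.mean(axis=axis)])


def triplet_attention(x, kernels):
    """x: (C, H, W); kernels: three (2, k, k) arrays, one per branch.

    Branch `axis` pools over C, H or W, so its gate models the interaction
    of the two remaining dimensions; the three gated tensors are averaged
    (BN after each convolution is omitted in this sketch).
    """
    out = np.zeros(x.shape, dtype=float)
    for axis, w in zip((0, 1, 2), kernels):
        gate = sigmoid(conv2d_same(z_pool(x, axis), w))
        out += x * np.expand_dims(gate, axis)
    return out / 3.0


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.standard_normal((4, 8, 8))
    y = frelu(x, 0.1 * rng.standard_normal((4, 3, 3)))
    z = triplet_attention(y, [0.1 * rng.standard_normal((2, 7, 7)) for _ in range(3)])
    print(y.shape, z.shape)  # (4, 8, 8) (4, 8, 8)
```

Two special cases make useful sanity checks: a zero FReLU kernel reduces the activation to an ordinary ReLU, and zero attention kernels give every branch a uniform gate of 0.5, so triplet attention simply halves its input.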

Additional information

This work was supported in part by the CAAI-Huawei MindSpore Open Fund, in part by the National Natural Science Foundation of China under Grant 61401113, in part by the Natural Science Foundation of Heilongjiang Province of China under Grant LH2021F011, in part by the Fundamental Research Funds for the Central Universities of China under Grant 3072021CF0811, and in part by the Key Laboratory of Advanced Marine Communication and Information Technology Open Fund under Grant AMCIT2103-03.

About this article

Cite this article

Xiang, X., Kong, X., Qiu, Y. et al. Self-supervised Monocular Trained Depth Estimation Using Triplet Attention and Funnel Activation. Neural Process Lett 53, 4489–4506 (2021). https://doi.org/10.1007/s11063-021-10608-5

DOI: https://doi.org/10.1007/s11063-021-10608-5