Abstract

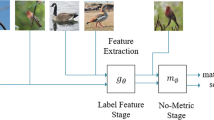

Few-shot learning (FSL), which aims to address the problem of data scarcity, is an active research topic. The most commonly used FSL framework consists of two stages: (1) Pre-training, in which the base data are used to train a feature extraction model (FEM); and (2) Meta-testing, in which the trained FEM extracts feature embeddings of the novel data, which are then recognized by a purpose-designed classifier. Because labeled samples are limited, some researchers exploit unlabeled samples to strengthen the classifier by introducing a self-training strategy. However, a single classifier trained on scarce labeled samples often assigns unlabeled samples to the wrong class because of its insufficient discriminative power; we call this the Single-Classifier-Misclassify-Data (SCMD) problem. To address this fundamental problem, we design a Co-learning (CL) method for FSL. We find that different classifiers adapt differently to the same feature distribution. Hence, we use two basic classifiers to infer pseudo-labels for the unlabeled samples separately, and cross-expand the labeled data with the resulting pseudo-labeled samples. The two complementary classifiers make the predictions more reliable. We evaluate CL on five benchmark datasets (mini-ImageNet, tiered-ImageNet, CIFAR-FS, FC100, and CUB), where it exceeds other state-of-the-art methods by 0.63–4.6%. This performance demonstrates the effectiveness of our method.
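The cross-expansion idea can be illustrated with a minimal sketch. It is not the implementation evaluated in the paper: the two classifiers (nearest-centroid and 1-nearest-neighbour), the margin-based confidence score, and the names `co_learn`, `top_confident`, `rounds`, and `per_round` are all assumptions chosen for illustration, and the inputs are assumed to be feature embeddings already produced by a FEM. In each round, every classifier pseudo-labels its most confident unlabeled samples, and those samples are added to the training set of the other classifier.

```python
import math


def _decide(class_dist):
    """Pick the closest class; confidence is the gap to the runner-up class."""
    ranked = sorted(class_dist.items(), key=lambda kv: kv[1])
    label, best = ranked[0]
    margin = ranked[1][1] - best if len(ranked) > 1 else math.inf
    return label, margin


def centroid_predict(X, y, q):
    """Classifier A (illustrative): distance from q to each class mean."""
    groups = {}
    for x, label in zip(X, y):
        groups.setdefault(label, []).append(x)
    class_dist = {
        label: math.dist([sum(c) / len(pts) for c in zip(*pts)], q)
        for label, pts in groups.items()
    }
    return _decide(class_dist)


def nn_predict(X, y, q):
    """Classifier B (illustrative): distance from q to the nearest sample per class."""
    class_dist = {}
    for x, label in zip(X, y):
        d = math.dist(x, q)
        class_dist[label] = min(d, class_dist.get(label, math.inf))
    return _decide(class_dist)


def top_confident(predict, train, pool, k):
    """Return (pool index, pseudo-label) for the k most confident pool samples."""
    scored = [(i, *predict(train[0], train[1], x)) for i, x in enumerate(pool)]
    scored.sort(key=lambda t: t[2], reverse=True)
    return [(i, label) for i, label, _ in scored[:k]]


def co_learn(X_lab, y_lab, X_unl, rounds=3, per_round=2):
    """Cross-expand two training sets with each other's pseudo-labels."""
    train_a = (list(X_lab), list(y_lab))  # training set of classifier A
    train_b = (list(X_lab), list(y_lab))  # training set of classifier B
    pool = list(X_unl)
    for _ in range(rounds):
        if not pool:
            break
        # Both classifiers predict before either training set is updated.
        picks_a = top_confident(centroid_predict, train_a, pool, per_round)
        picks_b = top_confident(nn_predict, train_b, pool, per_round)
        for i, label in picks_a:  # A's pseudo-labels expand B's data
            train_b[0].append(pool[i])
            train_b[1].append(label)
        for i, label in picks_b:  # B's pseudo-labels expand A's data
            train_a[0].append(pool[i])
            train_a[1].append(label)
        # Simplification: a sample picked by either classifier leaves the pool.
        used = {i for i, _ in picks_a + picks_b}
        pool = [x for i, x in enumerate(pool) if i not in used]
    return train_a, train_b


if __name__ == "__main__":
    X_lab, y_lab = [(0.0, 0.0), (10.0, 10.0)], [0, 1]
    X_unl = [(1.0, 0.0), (0.0, 1.0), (9.0, 10.0), (10.0, 9.0)]
    train_a, train_b = co_learn(X_lab, y_lab, X_unl)
    print(centroid_predict(train_a[0], train_a[1], (1.0, 1.0)))
```

Because each classifier is trained on labels proposed by the other, an error made by one classifier is not fed straight back into its own training set, which is the intuition behind using two complementary classifiers against the SCMD problem.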

Acknowledgements

This work was supported by the National Natural Science Foundation of China (Grant No. 61671480), the Open Project Program of the National Laboratory of Pattern Recognition (NLPR) (Grant No. 202000009), the Major Scientific and Technological Projects of CNPC under Grant ZD2019-183-008, and the Graduate Innovation Project of China University of Petroleum (East China) (YCX2021123).

About this article

Cite this article

Xu, R., Xing, L., Shao, S. et al. Co-Learning for Few-Shot Learning. Neural Process Lett 54, 3339–3356 (2022). https://doi.org/10.1007/s11063-022-10770-4

DOI: https://doi.org/10.1007/s11063-022-10770-4