Abstract

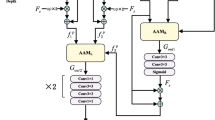

The depth modality can provide supplementary features for RGB images, which substantially improves the performance of salient object detection (SOD). However, depth images are affected by external factors during acquisition, which often results in low-quality depth maps. Moreover, because the RGB and depth modalities differ, simply fusing them cannot fully transfer the complementary depth information into the RGB modality. To enhance the quality of the depth image and integrate cross-modal information effectively, we propose a depth enhanced cross-modal cascaded network (DCCNet) for RGB-D SOD. The cascaded network consists of a depth cascaded branch, an RGB cascaded branch and a cross-modal fusion strategy. In the depth cascaded branch, we design a depth preprocessing algorithm to enhance the quality of the depth image, and during depth feature extraction we adopt four cascaded cross-modal guided modules to guide the RGB feature extraction process. In the RGB cascaded branch, we design five cascaded residual adaptive selection modules that output the extracted RGB features at each stage. In the cross-modal fusion strategy, a cross-modal channel-wise refinement is adopted to fuse the top-level features of the RGB and depth branches. Finally, a multiscale loss is adopted to optimize network training. Experimental results on six common RGB-D SOD datasets show that the performance of the proposed DCCNet is comparable to that of state-of-the-art RGB-D SOD methods.
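The abstract does not spell out the form of the multiscale loss. One common reading in SOD work is deep supervision: every side output is compared with the ground-truth mask resized to that output's resolution, and the per-scale losses are summed. The sketch below illustrates that idea in plain Python under stated assumptions (binary cross-entropy at every scale, nearest-neighbour downsampling of the ground truth, equal weights by default). The function names `downsample_nearest`, `bce` and `multiscale_loss` are hypothetical, and this is not the DCCNet authors' implementation.

```python
import math
from typing import List, Sequence

Map = List[List[float]]  # a 2-D map stored as a list of rows


def downsample_nearest(gt: Map, out_h: int, out_w: int) -> Map:
    """Resize a ground-truth mask to (out_h, out_w) by nearest-neighbour sampling."""
    in_h, in_w = len(gt), len(gt[0])
    return [[gt[(i * in_h) // out_h][(j * in_w) // out_w] for j in range(out_w)]
            for i in range(out_h)]


def bce(pred: Map, target: Map, eps: float = 1e-7) -> float:
    """Mean binary cross-entropy between a probability map and a 0/1 mask."""
    total, count = 0.0, 0
    for p_row, t_row in zip(pred, target):
        for p, t in zip(p_row, t_row):
            p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
            total += -(t * math.log(p) + (1.0 - t) * math.log(1.0 - p))
            count += 1
    return total / count


def multiscale_loss(side_outputs: Sequence[Map], gt: Map,
                    weights: Sequence[float] = ()) -> float:
    """Weighted sum of per-scale BCE losses (assumed deep-supervision form).

    Each side output is scored against the ground truth resized to that
    output's resolution; equal weights are used when none are given.
    """
    if not weights:
        weights = [1.0] * len(side_outputs)
    if len(weights) != len(side_outputs):
        raise ValueError("need one weight per side output")
    loss = 0.0
    for w, pred in zip(weights, side_outputs):
        target = downsample_nearest(gt, len(pred), len(pred[0]))
        loss += w * bce(pred, target)
    return loss


if __name__ == "__main__":
    gt = [[0, 0, 1, 1] for _ in range(4)]            # 4x4 mask, right half salient
    full = [[0.1, 0.1, 0.9, 0.9] for _ in range(4)]  # full-resolution prediction
    half = [[0.2, 0.8] for _ in range(2)]            # 2x2 side output
    print(round(multiscale_loss([full, half], gt), 4))  # prints 0.3285
```

Resizing the ground truth down to each side output, rather than upsampling the outputs, keeps the sketch dependency-free; a framework implementation would more likely interpolate tensors and may weight the coarser scales differently.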

Acknowledgements

This work is supported by the National Natural Science Foundation of China (No. 61802111) and the Science and Technology Foundation of Henan Province of China (No. 212102210156).

Ethics declarations

Conflict of interest

The authors have no conflicts of interest to declare that are relevant to the content of this article.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Zhao, Z., Huang, Z., Chai, X. et al. Depth Enhanced Cross-Modal Cascaded Network for RGB-D Salient Object Detection. Neural Process Lett 55, 361–384 (2023). https://doi.org/10.1007/s11063-022-10886-7

DOI: https://doi.org/10.1007/s11063-022-10886-7