Abstract

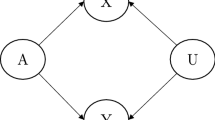

Recently, a large body of work in fair machine learning has studied how to remove discriminatory information from data and achieve fairness in downstream tasks. Fair representation learning removes sensitive information (e.g., race or gender) in the latent space, so that the learned representations prevent machine learning systems from being biased by discriminatory information. In this paper, we examine the problems of existing methods and propose a novel fair representation learning method for fair transfer learning, where the labels of the downstream tasks are unknown. Specifically, we introduce a new training model with an information-theoretically motivated objective that avoids the alignment problem when learning disentangled fair representations. Empirical results in various settings demonstrate the broad applicability and utility of our approach.



Acknowledgements

This work was supported by the NSFC Projects 62076096 and 62006078, the Shanghai Municipal Project 20511100900, the Shanghai Knowledge Service Platform Project (No. ZF1213), STCSM Project 22ZR1421700, the Open Research Fund of KLATASDS-MOE, and the Fundamental Research Funds for the Central Universities.



About this article

Cite this article

Liu, S., Sun, S. & Zhao, J. Fair Transfer Learning with Factor Variational Auto-Encoder. Neural Process Lett 55, 2049–2061 (2023). https://doi.org/10.1007/s11063-022-10920-8


DOI: https://doi.org/10.1007/s11063-022-10920-8