Abstract

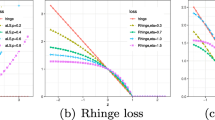

The L2-norm loss can be interpreted as jointly maximizing the margin and minimizing the margin variance, which matches the idea of optimizing the margin distribution. Recent studies suggest that the margin distribution matters more for the generalization performance of a model than the minimum margin alone. Inspired by this idea, this paper proposes a nonparallel support vector machine (NPSVM) with L2-norm loss, termed L2-NPSVM. We first construct an L2-norm loss for NPSVM, which makes full use of the margin distribution information in the training samples, and then formulate the L2-NPSVM algorithm on that basis. L2-NPSVM is a more reliable training model with stronger generalization ability. Because the L2-norm loss measures the empirical risk in the objective function, L2-NPSVM can also reduce the impact of outliers. Furthermore, the L2-norm loss allows the dual coordinate descent (DCD) method to be applied to both linear and nonlinear L2-NPSVM, which accelerates the solving process while maintaining high accuracy. Finally, a series of comparative experiments on UCI datasets, noise-added UCI datasets, and real-world datasets demonstrates the feasibility and effectiveness of the proposed method.
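To make the role of DCD more concrete, the following is a minimal sketch of dual coordinate descent for an ordinary linear SVM with squared hinge (L2) loss and no bias term. It is not the L2-NPSVM solver described in this paper; it only illustrates why the L2 loss suits coordinate-wise updates: in this standard setting the dual has a simple nonnegativity constraint on each variable, so every one-variable subproblem has a closed-form solution. The function name `dcd_l2_svm`, the parameter defaults, and the toy data are assumptions made for illustration.

```python
import random


def dcd_l2_svm(X, y, C=1.0, epochs=100, tol=1e-8, seed=0):
    """Dual coordinate descent for a linear SVM with squared hinge loss.

    Primal: min_w 0.5*||w||^2 + C * sum_i max(0, 1 - y_i * w.x_i)^2
    Dual:   min_a 0.5 * a^T (Q + D) a - sum_i a_i,  subject to a_i >= 0,
            where Q_ij = y_i * y_j * x_i.x_j and D = I / (2C).
    """
    rng = random.Random(seed)
    n, d = len(X), len(X[0])
    alpha = [0.0] * n
    w = [0.0] * d                      # maintained as sum_i alpha_i * y_i * x_i
    diag = 1.0 / (2.0 * C)             # diagonal term contributed by the L2 loss
    q_bar = [sum(v * v for v in x) + diag for x in X]
    order = list(range(n))
    for _ in range(epochs):
        rng.shuffle(order)
        max_pg = 0.0
        for i in order:
            margin = y[i] * sum(wj * xj for wj, xj in zip(w, X[i]))
            g = margin - 1.0 + diag * alpha[i]           # dual gradient, coordinate i
            pg = min(g, 0.0) if alpha[i] == 0.0 else g   # projected gradient
            max_pg = max(max_pg, abs(pg))
            if pg != 0.0:
                # Closed-form one-variable update, clipped at the lower bound 0.
                new_alpha = max(alpha[i] - g / q_bar[i], 0.0)
                step = (new_alpha - alpha[i]) * y[i]
                w = [wj + step * xj for wj, xj in zip(w, X[i])]
                alpha[i] = new_alpha
        if max_pg < tol:               # stop once the sweep is nearly stationary
            break
    return w, alpha


# Hypothetical toy data: two classes separable by a line through the origin.
X = [[2.0, 2.0], [3.0, 1.0], [1.0, 3.0],
     [-2.0, -2.0], [-3.0, -1.0], [-1.0, -3.0]]
y = [1, 1, 1, -1, -1, -1]
w, alpha = dcd_l2_svm(X, y)
predictions = [1 if sum(wj * xj for wj, xj in zip(w, x)) > 0 else -1 for x in X]
```

Each update touches one dual variable and costs time proportional to the number of features, because `w` is kept in sync with `alpha` instead of being recomputed from scratch. A nonlinear variant would replace the inner products with kernel evaluations.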

Acknowledgements

This work was supported by the National Natural Science Foundation of China (Grant 61673199), the Natural Science Foundation of Liaoning Province of China (Grants 20180550067 and 2022-MS-353), the Liaoning Province Ministry of Education Scientific Study Project (2017LNQN11), the University of Science and Technology Liaoning Talent Project Grants (601011507–20), the University of Science and Technology Liaoning Team Building Grants (601013360–17), and the Graduate Science and Technology Innovation Program of University of Science and Technology Liaoning (LKDYC201909).

About this article

Cite this article

Liu, L., Li, P., Chu, M. et al. Nonparallel Support Vector Machine with L2-norm Loss and its DCD-type Solver. Neural Process Lett 55, 4819–4841 (2023). https://doi.org/10.1007/s11063-022-11067-2

DOI: https://doi.org/10.1007/s11063-022-11067-2