Abstract

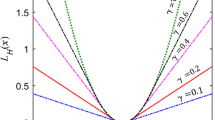

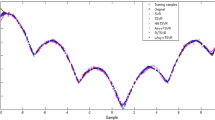

Twin support vector regression (TSVR) is an important algorithm for regression problems, developed on the basis of support vector regression (SVR). However, TSVR adopts the ε-insensitive loss function, which does not handle noise or outliers well. To reduce the influence of noise and outliers on the performance of TSVR, we propose a novel robust twin support vector regression with a smooth truncated Hε loss function, termed THε-TSVR. First, we construct the smooth truncated Hε loss function by combining the ε-insensitive loss function and the Huber loss function. Then, concave-convex programming (CCCP) is employed to solve the resulting nonconvex optimization problem in the primal space. In addition, the convergence of THε-TSVR is proved. Experimental results on several artificial datasets and UCI datasets, with and without noise, verify the effectiveness and robustness of the proposed THε-TSVR.
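To make the CCCP idea concrete, the following is a minimal sketch on a toy problem, not the THε-TSVR algorithm itself. It estimates a single location parameter under a truncated squared loss, which is an assumption chosen for brevity; the function names `truncated_sq_loss` and `cccp_location` and the threshold `t` are hypothetical. The nonconvex loss is split into a convex part and a concave part, and each iteration linearizes the concave part at the current estimate and solves the remaining convex problem in closed form.

```python
import numpy as np

def truncated_sq_loss(m, y, t):
    """Nonconvex objective: sum_i min((y_i - m)^2, t^2)."""
    r = np.asarray(y, dtype=float) - m
    return float(np.sum(np.minimum(r ** 2, t ** 2)))

def cccp_location(y, t, max_iter=100, tol=1e-10):
    """CCCP for min_m sum_i min((y_i - m)^2, t^2) (illustrative toy problem).

    Decomposition: min(r^2, t^2) = r^2 - max(r^2 - t^2, 0).
    The first term is convex in m, the second (with its minus sign) is concave.
    """
    y = np.asarray(y, dtype=float)
    m = float(np.median(y))  # robust starting point (an assumption of this sketch)
    for _ in range(max_iter):
        # Indicator vector: 1 where the residual is in the truncated region.
        s = (np.abs(y - m) > t).astype(float)
        # Linearizing the concave part at m and minimizing the convex surrogate
        # gives a closed form: flagged points are replaced by the current estimate.
        m_new = float((np.sum((1.0 - s) * y) + m * np.sum(s)) / y.size)
        if abs(m_new - m) < tol:
            m = m_new
            break
        m = m_new
    return m

if __name__ == "__main__":
    y = [1.0, 1.2, 0.8, 1.1, 0.9, 50.0]  # one gross outlier
    m_hat = cccp_location(y, t=2.0)
    print("plain mean:", np.mean(y))
    print("CCCP estimate:", m_hat)
```

On this toy data the iteration settles at the mean of the five inliers, while the plain mean is pulled far toward the outlier. The same pattern, a convex subproblem solved repeatedly with the concave part linearized, is what CCCP applies to a nonconvex truncated loss in a regression model.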



Acknowledgements

This work was partially supported by the National Natural Science Foundation of China (Grant Nos. 61702012, 62006097), the University Natural Science Research Project of Anhui Province (Grant No. KJ2020A0505), the Natural Science Foundation of Anhui Province (Grant Nos. 1908085MF195, 200808085MF193) and the Natural Science Foundation of Jiangsu Province (Grant No. BK20200593).


Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.


Appendices

Appendix 1 Symbols Description

| Symbol | Meaning |
|---|---|
| \(A\) | All training samples, \(n \times m\) matrix |
| \(A_{i} \in R^{m}\) | The \(i\)-th training sample |
| \(Y = (y_{1} ,\,y_{2} , \ldots ,y_{n} )\) | The response vector of training samples |
| \(\alpha ,\gamma\) | Lagrange multipliers |
| \(c_{1} ,c_{2} > 0\) | Penalty parameters |
| \(\varepsilon_{1} ,\varepsilon_{2} \ge 0\) | Insensitive parameters |
| \(\xi ,\eta \in R^{n}\) | Slack variables |
| \(I\) | Identity matrix |
| \(u\) | Arbitrary variable |
| \(L_{\varepsilon } \left( u \right)\) | ε-insensitive loss function |
| \(L_{h} \left( u \right)\) | Huber loss function |
| \(L_{h\varepsilon }^{v} \left( u \right)\) | Smooth truncated Hε loss function |
| \(u_{1} = [\omega_{1}^{T} \, b_{1} ]^{T}\), \(u_{2} = [\omega_{2}^{T} \, b_{2} ]^{T}\) | Optimal solutions |
| \(s^{i} (u_{1} ) \in R^{n}\), \(s^{i} (u_{2} ) \in R^{n}\) | Sign vectors |
| \(s_{j}^{i} (u_{1} ) \in R \, (j = 1,2, \cdots ,n)\) | The \(j\)-th element of \(s^{i} (u_{1} ) \in R^{n} \, (i = 1,2,3,4)\) |
| \(s_{j}^{i} (u_{2} ) \in R \, (j = 1,2, \cdots ,n)\) | The \(j\)-th element of \(s^{i} (u_{2} ) \in R^{n} \, (i = 5,6,7,8)\) |
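For readers who want to experiment with the loss functions named in the table, here is a minimal NumPy sketch. The ε-insensitive and Huber losses use their standard textbook definitions. The third function, `capped_huber_eps`, is only a hypothetical stand-in for a truncated combination of the two: it uses a hard cap, is not smooth at the cap, and is not the paper's \(L_{h\varepsilon }^{v}\), whose exact formula is not reproduced here. The parameter names `delta` and `cap` are assumptions of this sketch.

```python
import numpy as np

def eps_insensitive(u, eps):
    """Standard eps-insensitive loss: max(|u| - eps, 0)."""
    return np.maximum(np.abs(u) - eps, 0.0)

def huber(u, delta):
    """Standard Huber loss: quadratic for |u| <= delta, linear beyond."""
    a = np.abs(u)
    return np.where(a <= delta, 0.5 * a ** 2, delta * (a - 0.5 * delta))

def capped_huber_eps(u, eps, delta, cap):
    """Hypothetical illustration only: Huber loss applied to the
    eps-insensitive residual, then hard-capped at `cap`.
    Bounded for large |u|, but not smooth at the cap."""
    return np.minimum(huber(eps_insensitive(u, eps), delta), cap)

if __name__ == "__main__":
    u = np.array([0.05, 0.5, 2.0, 100.0])
    print(eps_insensitive(u, eps=0.1))
    print(huber(u, delta=1.0))
    print(capped_huber_eps(u, eps=0.1, delta=1.0, cap=3.0))
```

The cap is what bounds the contribution of a gross outlier: the unbounded ε-insensitive and Huber losses keep growing with the residual, whereas the capped version saturates.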

Appendix 2 Abbreviations Description

| Abbreviation | Full name |
|---|---|
| SVR | Support vector regression |
| TSVR | Twin support vector regression |
| ε-TSVR | ε-Twin support vector regression |
| TWSVM | Twin support vector machine |
| THε-TSVR | Robust twin support vector regression with smooth truncated Hε loss function |
| HN-TSVR | Twin support vector regression with Huber loss |
| RHN-TSVR | Regularization based TSVR with Huber loss |
| CCCP | Concave-convex programming |
| SOR | Successive overrelaxation technique |
| SRM | Structural risk minimization |


About this article

Cite this article

Shi, T., Chen, S. Robust Twin Support Vector Regression with Smooth Truncated Hε Loss Function. Neural Process Lett 55, 9179–9223 (2023). https://doi.org/10.1007/s11063-023-11198-0


DOI: https://doi.org/10.1007/s11063-023-11198-0