Abstract

In video action recognition, most existing methods apply global average pooling at the end of the network to aggregate the spatio-temporal features of a video into a global representation. Such pooling is insufficient for modeling complex spatio-temporal feature distributions and for capturing spatio-temporal dynamics. To address this issue, we propose a novel group second-order aggregation network (GSoANet), whose core is a group second-order aggregation module (GSoAM) integrated at the end of the network to aggregate video spatio-temporal features. GSoAM first adopts a grouping strategy to decompose the input features into a group of relatively low-dimensional vectors, so that aggregation takes place in the low-dimensional space. It then introduces subspaces represented by codewords; in each subspace, the differences between spatio-temporal features and codewords are aggregated with soft assignments reflecting their proximity. Finally, the nonlinear geometric structure of the fused subspaces is modeled using iterative matrix square root normalized covariance. In addition, GSoANet uses the high-performance convolutional network ConvNeXt as its backbone to improve accuracy at a lower computational cost. Extensive experiments on four challenging video datasets demonstrate the effectiveness of the proposed method in aggregating spatio-temporal features and show that it achieves competitive results.
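The aggregation steps named in the abstract (grouping, soft assignment to codewords, residual aggregation, and square-root-normalized covariance) can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the function names, the distance-based softmax with sharpness `alpha`, the fusion of subspaces by summing weighted residuals over codewords, and the `eps` regularizer are all assumptions made for illustration. The matrix square root is approximated with the coupled Newton-Schulz iteration, a standard technique that may differ in detail from what the paper uses.

```python
import numpy as np


def soft_assign(x, codewords, alpha=1.0):
    """Softmax over negative squared distances: closer codewords get larger weights."""
    sq_dist = ((x[:, None, :] - codewords[None, :, :]) ** 2).sum(axis=-1)  # (M, K)
    logits = -alpha * sq_dist
    logits -= logits.max(axis=1, keepdims=True)  # subtract row max for stability
    weights = np.exp(logits)
    return weights / weights.sum(axis=1, keepdims=True)


def newton_schulz_sqrt(cov, num_iters=5):
    """Approximate the square root of a symmetric positive definite matrix."""
    eye = np.eye(cov.shape[0])
    trace = np.trace(cov)
    y = cov / trace  # pre-normalize by the trace so the iteration converges
    z = eye.copy()
    for _ in range(num_iters):
        t = 0.5 * (3.0 * eye - z @ y)
        y = y @ t
        z = t @ z
    return y * np.sqrt(trace)  # undo the trace normalization


def group_second_order_aggregate(features, codewords, num_groups,
                                 alpha=1.0, num_iters=5, eps=1e-5):
    """features: (N, C) spatio-temporal features; codewords: (K, C // num_groups)."""
    n, c = features.shape
    if c % num_groups != 0:
        raise ValueError("channel count must be divisible by num_groups")
    d = c // num_groups
    # Grouping: split each C-dim feature into num_groups vectors of dimension d.
    x = features.reshape(n * num_groups, d)
    a = soft_assign(x, codewords, alpha)                # (M, K)
    residuals = x[:, None, :] - codewords[None, :, :]   # (M, K, d)
    # Assumed fusion rule: sum the weighted residuals over the K codewords.
    fused = (a[:, :, None] * residuals).sum(axis=1)     # (M, d)
    centered = fused - fused.mean(axis=0, keepdims=True)
    # eps * I keeps the covariance positive definite (illustrative choice).
    cov = centered.T @ centered / centered.shape[0] + eps * np.eye(d)
    return newton_schulz_sqrt(cov, num_iters)


# Toy usage with random data: 32 features of dimension 16, 4 groups, 3 codewords.
rng = np.random.default_rng(0)
features = rng.standard_normal((32, 16))
codewords = rng.standard_normal((3, 4))
rep = group_second_order_aggregate(features, codewords, num_groups=4)
print(rep.shape)  # (4, 4)
```

Because grouping reduces the feature dimension from C to C divided by the number of groups, the covariance in this sketch is only d by d, which is the practical motivation for aggregating in the low-dimensional space.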

References

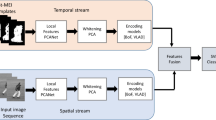

Simonyan K, Zisserman A (2014) Two-stream convolutional networks for action recognition in videos. In: NIPS, vol. 27

Tran D, Bourdev L, Fergus R, Torresani L, Paluri M (2015) Learning spatiotemporal features with 3D convolutional networks. In: ICCV, pp 4489–4497

Lin J, Gan C, Han S (2019) TSM: Temporal shift module for efficient video understanding. In: ICCV, pp. 7083–7093

Neimark D, Bar O, Zohar M, Asselmann D (2021) Video transformer network. In: ICCV, pp 3163–3172

Wang L, Xiong Y, Wang Z, Qiao Y (2015) Towards good practices for very deep two-stream convnets. arXiv preprint arXiv:1507.02159

Christoph R, Pinz FA (2016) Spatiotemporal residual networks for video action recognition. arXiv preprint arXiv:1611.02155

Liu T, Zhao R, Xiao J, Lam K-M (2020) Progressive motion representation distillation with two-branch networks for egocentric activity recognition. IEEE Signal Process Lett 27:1320–1324

Hara K, Kataoka H, Satoh Y (2018) Can spatiotemporal 3D CNNs retrace the history of 2D CNNs and imagenet? In: CVPR, pp 6546–6555

Tran D, Wang H, Torresani L, Ray J, LeCun Y, Paluri M (2018) A closer look at spatiotemporal convolutions for action recognition. In: CVPR, pp 6450–6459

Du X, Li Y, Cui Y, Qian R, Li J, Bello I (2021) Revisiting 3D ResNets for video recognition. arXiv preprint arXiv:2109.01696

Li Y, Ji B, Shi X, Zhang J, Kang B, Wang L (2020) TEA: Temporal excitation and aggregation for action recognition. In: CVPR, pp 909–918

Sharir G, Noy A, Zelnik-Manor L (2021) An image is worth 16x16 words, what is a video worth? arXiv preprint arXiv:2103.13915

Bertasius G, Wang H, Torresani L (2021) Is space-time attention all you need for video understanding? In: ICML, vol. 139, pp 813–824

Yan S, Xiong X, Arnab A, Lu Z, Zhang M, Sun C, Schmid C (2022) Multiview transformers for video recognition. arXiv preprint arXiv:2201.04288

Wu C-Y, Li Y, Mangalam K, Fan H, Xiong B, Malik J, Feichtenhofer C (2022) Memvit: Memory-augmented multiscale vision transformer for efficient long-term video recognition. In: CVPR, pp 13587–13597

Wang H, Kläser A, Schmid C, Liu C-L (2011) Action recognition by dense trajectories. In: CVPR, pp. 3169–3176

Wang H, Schmid C (2013) Action recognition with improved trajectories. In: ICCV, pp 3551–3558

Jégou H, Douze M, Schmid C, Pérez P (2010) Aggregating local descriptors into a compact image representation. In: CVPR, pp 3304–3311

Sánchez J, Perronnin F, Mensink T, Verbeek J (2013) Image classification with the fisher vector: theory and practice. Int J Comput Vision 105(3):222–245

Canas G, Poggio T, Rosasco L (2012) Learning manifolds with k-means and k-flats. Adv Neural Inform Process Syst 25

Arandjelovic R, Gronat P, Torii A, Pajdla T, Sivic J (2016) NetVLAD: CNN architecture for weakly supervised place recognition. In: CVPR, pp 5297–5307

Miech A, Laptev I, Sivic J (2017) Learnable pooling with context gating for video classification. arXiv preprint arXiv:1706.06905

Girdhar R, Ramanan D, Gupta A, Sivic J, Russell B (2017) Actionvlad: Learning spatio-temporal aggregation for action classification. In: CVPR, pp 971–980

Sun Q, Wang Q, Zhang J, Li P (2018) Hyperlayer bilinear pooling with application to fine-grained categorization and image retrieval. Neurocomputing 282:174–183

Li P, Xie J, Wang Q, Zuo W (2017) Is second-order information helpful for large-scale visual recognition? In: ICCV, pp 2070–2078

Li P, Xie J, Wang Q, Gao Z (2018) Towards faster training of global covariance pooling networks by iterative matrix square root normalization. In: CVPR, pp 947–955

Wang Q, Li P, Hu Q, Zhu P, Zuo W (2019) Deep global generalized gaussian networks. In: CVPR, pp 5080–5088

Liu Z, Mao H, Wu C-Y, Feichtenhofer C, Darrell T, Xie S (2022) A ConvNet for the 2020s. In: CVPR, pp 11976–11986

Lin R, Xiao J, Fan J (2018) NeXtVLAD: An efficient neural network to aggregate frame-level features for large-scale video classification. In: ECCV

Wang L, Li W, Li W, Van Gool L (2018) Appearance-and-relation networks for video classification. In: CVPR, pp 1430–1439

Zhou B, Andonian A, Oliva A, Torralba A (2018) Temporal relational reasoning in videos. In: ECCV, pp 803–818

Li X, Wang Y, Zhou Z, Qiao Y (2020) Smallbignet: Integrating core and contextual views for video classification. In: CVPR, pp 1092–1101

Wang L, Tong Z, Ji B, Wu G (2021) TDN: Temporal difference networks for efficient action recognition. In: CVPR, pp 1895–1904

Huang Z, Zhang S, Pan L, Qing Z, Tang M, Liu Z, Ang Jr MH (2021) TAda! temporally-adaptive convolutions for video understanding. arXiv preprint arXiv:2110.06178

Philbin J, Chum O, Isard M, Sivic J, Zisserman A (2007) Object retrieval with large vocabularies and fast spatial matching. In: 2007 IEEE conference on computer vision and pattern recognition, pp 1–8

Laptev I, Marszalek M, Schmid C, Rozenfeld B (2008) Learning realistic human actions from movies. In: CVPR, pp 1–8

Schuldt C, Laptev I, Caputo B (2004) Recognizing human actions: a local SVM approach. In: ICPR, vol. 3, pp 32–36

Xu Y, Han Y, Hong R, Tian Q (2018) Sequential video VLAD: Training the aggregation locally and temporally. IEEE Trans Image Process 27(10):4933–4944

Lin T-Y, RoyChowdhury A, Maji S (2015) Bilinear CNN models for fine-grained visual recognition. In: ICCV, pp 1449–1457

Gao Y, Beijbom O, Zhang N, Darrell T (2016) Compact bilinear pooling. In: CVPR, pp 317–326

Zhang B, Wang Q, Lu X, Wang F, Li P (2020) Locality-constrained affine subspace coding for image classification and retrieval. Pattern Recogn 100:107167

Sun Q, Zhang Z, Li P (2021) Second-order encoding networks for semantic segmentation. Neurocomputing 445:50–60

Diba A, Sharma V, Van Gool L (2017) Deep temporal linear encoding networks. In: CVPR, pp 2329–2338

Girdhar R, Ramanan D (2017) Attentional pooling for action recognition. In: NIPS, vol. 30

Zhu X, Xu C, Hui L, Lu C, Tao D (2019) Approximated bilinear modules for temporal modeling. In: ICCV, pp 3494–3503

Li Y, Song S, Li Y, Liu J (2019) Temporal bilinear networks for video action recognition. In: AAAI, vol. 33, pp 8674–8681

Gao Z, Wang Q, Zhang B, Hu Q, Li P (2021) Temporal-attentive covariance pooling networks for video recognition. In: NIPS, vol. 34, pp 13587–13598

Carreira J, Zisserman A (2017) Quo vadis, action recognition? a new model and the kinetics dataset. In: CVPR, pp 6299–6308

Xie S, Sun C, Huang J, Tu Z, Murphy K (2018) Rethinking spatiotemporal feature learning: Speed-accuracy trade-offs in video classification. In: ECCV, pp 305–321

Soomro K, Zamir AR, Shah M (2012) UCF101: A dataset of 101 human actions classes from videos in the wild. arXiv preprint arXiv:1212.0402

Kuehne H, Jhuang H, Garrote E, Poggio T, Serre T (2011) HMDB: A large video database for human motion recognition. In: ICCV, pp 2556–2563

Kingma DP, Ba J (2014) Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980

Crasto N, Weinzaepfel P, Alahari K, Schmid C (2019) MARS: Motion-augmented RGB stream for action recognition. In: CVPR, pp 7882–7891

Zhang S, Guo S, Huang W, Scott MR, Wang L (2020) V4D: 4D convolutional neural networks for video-level representation learning. arXiv preprint arXiv:2002.07442

Chi L, Yuan Z, Mu Y, Wang C (2020) Non-local neural networks with grouped bilinear attentional transforms. In: CVPR, pp 11804–11813

Pang B, Peng G, Li Y, Lu C (2021) PGT: A progressive method for training models on long videos. In: CVPR, pp 11379–11389

Li X, Liu C, Shuai B, Zhu Y, Chen H, Tighe J (2022) NUTA: Non-uniform temporal aggregation for action recognition. In: WACV, pp 3683–3692

Feichtenhofer C, Fan H, Malik J, He K (2019) Slowfast networks for video recognition. In: ICCV, pp 6202–6211

Yang C, Xu Y, Shi J, Dai B, Zhou B (2020) Temporal pyramid network for action recognition. In: CVPR, pp 591–600

Jiang Y, Gong X, Wu J, Shi H, Yan Z, Wang Z (2022) Auto-X3D: Ultra-efficient video understanding via finer-grained neural architecture search. In: WACV, pp 2554–2563

Sun R, Zhang T, Wan Y, Zhang F, Wei J (2023) Wlit: Windows and linear transformer for video action recognition. Sensors 23(3):1616

Wang H, Tran D, Torresani L, Feiszli M (2020) Video modeling with correlation networks. In: CVPR, pp 352–361

Zhou Y, Sun X, Zha Z-J, Zeng W (2018) MiCT: Mixed 3D/2D convolutional tube for human action recognition. In: CVPR, pp 449–458

Liu Z, Hu H (2019) Spatiotemporal relation networks for video action recognition. IEEE Access 7:14969–14976

Yang G, Yang Y, Lu Z, Yang J, Liu D, Zhou C, Fan Z (2022) STA-TSN: Spatial-temporal attention temporal segment network for action recognition in video. PLoS ONE 17(3):0265115

Liu Z, Luo D, Wang Y, Wang L, Tai Y, Wang C, Li J, Huang F, Lu T (2020) TEINet: Towards an efficient architecture for video recognition. In: AAAI, vol. 34, pp 11669–11676

Zhang Y, Li X, Liu C, Shuai B, Zhu Y, Brattoli B, Chen H, Marsic I, Tighe J (2021) VidTr: Video transformer without convolutions. In: ICCV, pp 13577–13587

Chen B, Meng F, Tang H, Tong G (2023) Two-level attention module based on spurious-3d residual networks for human action recognition. Sensors 23(3):1707

Acknowledgements

This work was partially supported by the National Natural Science Foundation of China under Grants 61972062 and 61902220, the NSFC-Liaoning Province United Foundation under Grant U1908214, and the Young and Middle-aged Talents Program of the National Civil Affairs Commission.

Ethics declarations

Conflict of interest

The authors declare no conflicts of interest.

About this article

Cite this article

Wang, Z., Dong, W., Zhang, B. et al. GSoANet: Group Second-Order Aggregation Network for Video Action Recognition. Neural Process Lett 55, 7493–7509 (2023). https://doi.org/10.1007/s11063-023-11270-9

DOI: https://doi.org/10.1007/s11063-023-11270-9