Abstract

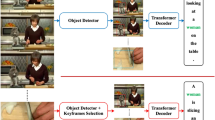

Video caption generation has become a research hotspot in recent years because of its wide range of potential applications. Existing methods suffer from recognition errors in the generated description because visual and text features interact insufficiently during encoding, and attention mechanisms struggle to explicitly model visual and linguistic coherence. In this paper, we propose CAVF (Cascaded Attention-guided Visual Features Fusion for Video Captioning), a video captioning algorithm based on cascaded attention-guided fusion of visual features. In the encoding stage, a cascaded attention mechanism models the correlation of visual content between different frames, and global semantic information guides the fusion of visual features, which further strengthens the correlation between the visual features and the decoder. In the decoding stage, the overall features and the word vectors obtained from a multilayer long short-term memory (LSTM) network are encoded and enhanced for decoding to generate the current word. Experiments on the public datasets MSVD and MSR-VTT validate the effectiveness of the model. Compared with the benchmark method, the proposed method improves BLEU_4, ROUGE, and CIDEr by 5.6%, 1.3%, and 4.3%, respectively, on MSR-VTT.
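
The two-stage idea described in the encoding stage can be illustrated with a small sketch. This is not the authors' CAVF implementation (the source code is not released), and every name in it (`attend`, `cascaded_fusion`, the toy `frames` and `global_semantic` vectors) is a hypothetical choice made for illustration. It assumes plain scaled dot-product attention with no learned projections: a first attention pass relates each frame to all other frames, and a second pass lets a global semantic vector weight the refined frame features into one fused vector.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def attend(query, keys, values):
    """Scaled dot-product attention for a single query vector.

    Returns the weighted sum of `values` and the attention weights.
    """
    scale = math.sqrt(len(query))
    weights = softmax([dot(query, k) / scale for k in keys])
    dim = len(values[0])
    fused = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return fused, weights


def cascaded_fusion(frames, global_semantic):
    """Two cascaded attention stages (an assumed, simplified design)."""
    # Stage 1: every frame attends to all frames (inter-frame correlation).
    refined = [attend(frame, frames, frames)[0] for frame in frames]
    # Stage 2: the global semantic vector guides fusion of the refined frames.
    return attend(global_semantic, refined, refined)


# Toy input: three 2-D frame features and a global semantic vector.
frames = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
global_semantic = [1.0, 0.0]
fused, weights = cascaded_fusion(frames, global_semantic)
```

In this toy input, the frames most aligned with `global_semantic` receive the largest fusion weights. A trained model would replace the raw dot products with learned query, key, and value projections and would pass the fused vector to the LSTM decoder.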

Data Availability

The datasets generated or analyzed during this study are available from the corresponding author on reasonable request.

Code Availability

While we cannot release the full source code, we do provide sufficiently detailed instructions and guidance to anyone who needs this information to replicate or reproduce the results.

Acknowledgements

This work was supported in part by the Hubei Institute of Education Science under Grant 2022ZA41; in part by the Scientific Research Foundation of Hubei University of Education for Talent Introduction under Grant ESRC20230009; and in part by the Department of Science and Technology, Hubei Provincial People’s Government under Grant 2023AFB206.

Author information

Authors and Affiliations

Contributions

Material preparation, data collection and analysis were performed by SC and LY. The first draft of the manuscript was written by SC and YH. All authors contributed to the study conception and design. All authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Corresponding author

Ethics declarations

Conflict of interest

The authors have no competing interests to declare that are relevant to the content of this article.

Ethical Approval

This study did not involve any human or animal trials and the required permits and approvals have been obtained.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Chen, S., Yang, L. & Hu, Y. Video Captioning Based on Cascaded Attention-Guided Visual Feature Fusion. Neural Process Lett 55, 11509–11526 (2023). https://doi.org/10.1007/s11063-023-11386-y

DOI: https://doi.org/10.1007/s11063-023-11386-y