Abstract

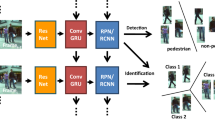

Multi-object tracking (MOT) is mainly used to detect and track objects across multiple cameras, and it is widely applied in intelligent video surveillance and intelligent security. An MOT pipeline generally involves three important parts: feature extraction, multi-task learning, and object matching. Unfortunately, existing methods still have several drawbacks. First, the feature extraction module cannot effectively fuse shallow and deep features. Second, the multi-task learning module does not strike a good balance between detection and re-identification. Third, the object matching module associates pedestrians using a traditional method rather than a trained model. To address these problems, we propose a joint detection and association (JDA) method for end-to-end multi-object tracking, which combines multi-scale feature extraction with learnable object association. JDA first pairs a feature extraction backbone based on multi-scale feature fusion with a point-based multi-task object detection branch to handle feature extraction and object detection. A learnable object motion association module is then embedded; it uses information from historical frames to infer each object's position and associates object identities between previous and subsequent frames. Moreover, JDA can be trained end-to-end on both the detection and matching tasks. We evaluate JDA through a series of experiments on MOT16 and MOT17. The results show that JDA outperforms existing methods in terms of MOT precision and stability.
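The abstract describes the association step only at a high level: past frames are used to infer where each object will be, and identities are then carried from earlier frames to later ones. As a rough illustration, assuming each track stores its past object centers, the sketch below predicts every track's next center and greedily links detections to tracks by distance. The constant-velocity `predict` rule is a hand-written stand-in for JDA's learned motion module, and the greedy closest-center matching follows the style of CenterTrack rather than the paper's exact procedure. `Track`, `associate`, and `max_dist` are hypothetical names chosen for this example.

```python
from dataclasses import dataclass
from math import hypot
from typing import List, Tuple

Point = Tuple[float, float]


@dataclass
class Track:
    track_id: int
    history: List[Point]  # past object centers, oldest first

    def predict(self) -> Point:
        """Guess the next center by constant-velocity extrapolation.

        Hypothetical stand-in for a learned motion module.
        """
        if len(self.history) < 2:
            return self.history[-1]  # no velocity estimate yet: assume static
        (x0, y0), (x1, y1) = self.history[-2], self.history[-1]
        return (2 * x1 - x0, 2 * y1 - y0)


def associate(tracks: List[Track], detections: List[Point], max_dist: float = 50.0):
    """Greedily match detection centers to tracks by distance to predicted centers.

    Returns (matches, unmatched_track_ids, unmatched_detection_indices),
    where matches is a list of (track_id, detection_index) pairs.
    """
    # Candidate pairs within the gating distance, as (distance, track_id, det_index).
    pairs = []
    for track in tracks:
        px, py = track.predict()
        for j, (dx, dy) in enumerate(detections):
            d = hypot(px - dx, py - dy)
            if d <= max_dist:
                pairs.append((d, track.track_id, j))

    # Accept the closest remaining pair first; each track and detection is used once.
    pairs.sort()
    used_tracks, used_dets, matches = set(), set(), []
    for _, tid, j in pairs:
        if tid in used_tracks or j in used_dets:
            continue
        used_tracks.add(tid)
        used_dets.add(j)
        matches.append((tid, j))

    unmatched_tracks = [t.track_id for t in tracks if t.track_id not in used_tracks]
    unmatched_dets = [j for j in range(len(detections)) if j not in used_dets]
    return matches, unmatched_tracks, unmatched_dets


if __name__ == "__main__":
    tracks = [Track(1, [(0, 0), (10, 0)]), Track(2, [(100, 100), (100, 110)])]
    detections = [(101, 119), (21, 1), (300, 300)]
    print(associate(tracks, detections))
```

In a complete tracker, unmatched detections would usually start new tracks and unmatched tracks would be aged out after a few frames. A fixed extrapolation rule like this one cannot adapt to the data, which is the gap a learnable, end-to-end-trained motion association module is meant to close.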



Funding

This work was supported in part by the Young People Fund of Xinjiang Science and Technology Department (No. 2022D01B05).


Ethics declarations

Conflict of interest

The data supporting the findings of this study are publicly available online; they were derived from the public-domain MOT16 and MOT17 benchmarks. The authors declare that they have no conflicts of interest or competing interests.


Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Li, Y., Luo, X., Shi, J. et al. Joint Detection and Association for End-to-End Multi-object Tracking. Neural Process Lett 55, 11823–11844 (2023). https://doi.org/10.1007/s11063-023-11397-9


DOI: https://doi.org/10.1007/s11063-023-11397-9