Abstract

The development of high-stakes decision-making neural agents that interact with complex environments, such as video games, is an important aspect of AI research with numerous potential applications. Reinforcement learning combined with deep learning architectures (DRL) has shown remarkable success in various genres of games. The performance of DRL depends heavily on the neural networks that reside within the agents. Although these algorithms perform well in offline testing, their performance deteriorates in noisy and sub-optimal conditions, creating safety and security issues. To address these issues, we propose a hybrid deep learning architecture that combines a traditional convolutional neural network with worm brain-inspired neural circuit policies. This allows the agent to learn key coherent features from the environment and interpret its dynamics. The resulting DRL agent not only reached an optimal policy quickly but was also the most noise-resilient, with the highest success rate. Our research indicates that only 20 control neurons (12 inter-neurons and 8 command neurons) are sufficient to achieve competitive results. We implemented and analyzed the agent in the popular video game Doom, demonstrating its effectiveness in practical applications.

1 Introduction

In recent years, there has been a growing emphasis on developing computational intelligence algorithms that mimic biological structures. These techniques have proven capable of not only matching but sometimes surpassing current state-of-the-art methods. Researchers have made significant progress in discerning the structural connectivity of diverse systems and organisms at various resolutions [7, 25, 27]. The C. elegans nematode tap-withdrawal circuit [37] stands out as an exemplar of this progress, faithfully replicating worm reflexes [10] and showing promise for tasks such as navigation [8], locomotion [16], and motor control [31]. Repurposing the tap-withdrawal circuit into Neural Circuit Policies (NCPs) [21] has yielded outstanding performance in high-stakes decision-making tasks, including autonomous flight control [33], autonomous vehicles [20, 28], and classification tasks [11, 32]. The use of NCPs facilitates the integration of interpretability and explainability into the decision-making process, which is crucial for practical applications. Furthermore, it bestows adaptability and robustness upon the neural agent, enabling effective operation in unpredictable and dynamic environments.

Reinforcement learning (RL) plays a crucial role in AI research by facilitating the creation of precise neural agents proficient in high-stakes decision-making tasks. RL has demonstrated significant success in tackling complex challenges across various domains, including robotics, finance, healthcare, and management. The combination of RL and deep neural networks, also known as Deep Reinforcement Learning (DRL), has proven highly successful in complex environments, marking notable progress in video games such as Atari [24], StarCraft [34], Go [30], Dota [3], and Gran Turismo [38]. In the context of robotics, the success of DRL relies on researchers’ ability to adeptly control complex systems.

Given that numerous robotic applications necessitate close human interaction and compliance with imprecisely specified human norms, it becomes paramount for these systems to be developed with a high degree of accuracy and sensitivity to human behavior. Doom presents precisely the challenges required for developing effective agents in complex systems, demanding precise control of an agent within a highly dynamic environment and under specific parameters. Moreover, the game provides a remarkably realistic simulation, making it well-suited for experimenting with AI algorithms. The complex environment of Doom offers an ideal testing ground to hone agents’ capabilities in navigating complex, dynamic scenarios, further advancing the RL field. To succeed in Doom, the agent must excel in three skills: target identification, target chasing tactics, and protagonist movement control (navigation). For the agent to effectively pursue its target, it is essential to have a comprehensive understanding of the dynamics of the target and the track on which it is being pursued. This understanding provides the fundamental basis for the agent to acquire the necessary skills.

Several research studies have addressed the challenges presented by Doom [1, 18, 29, 41]. Among these, the most notable is the VizDoom Competition [39], where various trained neural agents compete against each other. Examples of these agents include F1, trained using the Asynchronous Advantage Actor-Critic (A3C) [9]; Arnold, trained using the Deep Q-Network (DQN); and TUHO, trained using the Dueling DQN [35]. The performance of these neural agents relies heavily on the neural network that resides within them [6], which directly maps visual perception inputs to protagonist actions (an end-to-end approach) [22]. These neural agents perform well in offline testing, but their performance decreases drastically in noisy and sub-optimal scenarios, raising safety and security issues.

To tackle this issue, Recurrent Neural Networks (RNNs) have been proposed. These work in a sequential manner and handle temporal features for decision-making but suffer from vanishing gradients [2]. Long Short Term Memory (LSTM) networks [40] have been developed to address these gradient problems, ensuring a constant flow of information and the removal of non-linearity to create long-term relationships [15]. However, this might prove problematic, especially in our case where the availability of short-term causality can generate erroneous agents. Moreover, LSTM has many interlinked gates and states that slow the training process, thereby increasing the overall training time. The Gated Recurrent Unit (GRU) [5] is a simplified version of LSTM which has fewer gates and states, but it also suffers from the vanishing gradients problem.

The goal of this research is to design a deep learning architecture that addresses these safety issues. We propose an end-to-end architecture that combines worm-brain inspired Neural Circuit Policies (NCPs) with a Convolutional Neural Network (CNN). The CNN extracts spatial information from the environment and passes it to the NCPs, which handle temporal features for decision-making and action (see Fig. 1). The NCPs employ a four-layer hierarchical architecture that processes the information received through sensory-neurons (\(N_s\)) in inter-neurons (\(N_i\)) and command-neurons (\(N_c\)) before selecting actions through motor-neurons (\(N_m\)). The connections between layers of NCPs are sparse, meaning not all neurons are fully-connected, and the connections between motor-neurons and command-neurons are highly recurrent. The sparse connectivity between NCP layers plays an important role: because each neuron is linked to only a select group of neurons in the subsequent layer, it is easier to understand the function of individual neurons and their contribution to the output [21].

The foundational block of NCPs is a continuous-time recurrent neural network called the Liquid Time Constant (LTC) network [12, 13] (briefly overviewed in Sect. 2). In the basic scenario, we found that a configuration of only 20 control-neurons, consisting of 12 inter-neurons and 8 command-neurons, not only matches but sometimes even outperforms the competing architectures. The design instructions for the hierarchical architecture of NCPs can be found in the author’s previous work [28].

The paper is organized as follows: Sect. 1 provides a concise review of related work and identifies areas requiring improvement. In Sect. 2, we comprehensively outline all the constituent building blocks of our proposed architecture. Section 3 elaborates on the methodology employed for implementing the architecture. Section 4 presents a thorough evaluation of our network, encompassing considerations such as network size, performance metrics, noise test results, and explainability. Finally, Sect. 5 concludes the paper, offering insights into potential future updates.

2 Preliminaries

In this section, we give an overview of the building blocks of our architecture. We first introduce the Liquid Time Constant (LTC) neural network, the foundational block of the NCP architecture, which is discussed afterwards. Lastly, we briefly describe the RL algorithm.

2.1 Liquid Time Constant (LTC)

The Liquid Time Constant (LTC) network belongs to the family of continuous-time recurrent neural network (CT-RNN) models and describes a dynamic system characterized by varying time constants linked to its hidden state. The model employs a fused solver, a specialized numerical differential-equation solver, to compute its output. Instead of defining a system’s dynamics through implicit nonlinearities and utilizing linear Ordinary Differential Equations (ODEs) for derivative construction, the LTC model adopts first-order linear dynamical systems interconnected with nonlinear gates [12].

\(\frac{dx(t)}{dt} = -\left[ \frac{1}{\tau } + f(x(t), I(t), t, \theta )\right] x(t) + f(x(t), I(t), t, \theta )\, A\)

Here the neural network is denoted by \(f(\cdot )\), the time constant by \(\tau \), the hidden state by \(x(t)\), the input by \(I(t)\), and the parameters by \(A\) and \(\theta \). Beyond computing the derivative of the hidden state, the neural network also acts as an input-dependent variable time constant. This unique property enables specific components of the hidden state to interact with the system’s dynamics based on a given input at a particular time [12].
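As an illustration of how the input-dependent time constant arises, the sketch below advances a scalar LTC state by one step of the fused (semi-implicit Euler) update described in [12], \(x(t+\Delta t) = \frac{x(t) + \Delta t\, f\, A}{1 + \Delta t\, (1/\tau + f)}\). The single-neuron sigmoid standing in for \(f\), its weights `w_x`, `w_in`, `bias`, and all numeric values are assumptions made for illustration; they are not the configuration used in this work.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic function, used here as the bounded nonlinearity f."""
    return 1.0 / (1.0 + math.exp(-z))


def ltc_fused_step(x, inp, dt, tau, A, w_x=1.0, w_in=1.0, bias=0.0):
    """Advance a scalar LTC state x by one fused-solver step of size dt.

    w_x, w_in and bias are hypothetical stand-ins for the parameters theta.
    """
    f = sigmoid(w_x * x + w_in * inp + bias)
    # Semi-implicit update: the decay term is handled implicitly, which
    # keeps the state bounded even for fairly large step sizes.
    return (x + dt * f * A) / (1.0 + dt * (1.0 / tau + f))


def effective_time_constant(x, inp, tau, w_x=1.0, w_in=1.0, bias=0.0):
    """Input-dependent time constant tau / (1 + tau * f)."""
    f = sigmoid(w_x * x + w_in * inp + bias)
    return tau / (1.0 + tau * f)
```

With these definitions, a stronger input drives \(f\) towards 1 and shortens the effective time constant, so the state reacts faster, while a weak input leaves it close to \(\tau \).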

2.2 Neural Circuit Policies (NCPs)

Neural Circuit Policies (NCPs) represent an approach to constructing interpretable neural control agents through modifications to the tap-withdrawal neural circuit identified in the worm C. elegans [27]. In this circuit, the majority of neurons exhibit electrotonic dynamics, characterized by the passive flow of electric charges, resulting in graded potentials rather than the typical spiking activity. NCPs consist of sensory neurons (denoted as \(N_s\)), responsible for perceiving and responding to external inputs. They also encompass decision-making motor neurons (\(N_m\)), as well as interneurons (\(N_i\)) and command neurons (\(N_c\)) for internal processing. Each sensory neuron \(N_s\) consists of two neurons (\(S_p\) and \(S_n\)) and a system variable (\(x\)). The activation of \(S_p\) or \(S_n\) depends on the sign of \(x\), with \(S_p\) becoming active for positive \(x\) and \(S_n\) for negative \(x\). This mapping of \(x\) to a membrane potential range of \([-70\, \text {mV}, -20\, \text {mV}]\) aligns with the biophysics of nerve cells, which have a resting potential of approximately \(-70\, \text {mV}\) and an active potential of around \(-20\, \text {mV}\). Similarly, each motor neuron comprises two neurons (\(M_p\) and \(M_n\)) and a controllable variable (\(y\)), with \(M_p\) and \(M_n\) mapping to \([-70\, \text {mV}, -20\, \text {mV}]\) [21]. Equation 3 defines the modeled dynamics of the hidden or output neurons, denoted as \(x_i(t)\), in an LTC RNN functioning as a membrane integrator, which is the building block of NCPs [13, 27].

Here, \(C_m\) represents the membrane capacitance, \(x_{leak_i}\) is the leak potential of neuron i, I signifies the external stimulus current, and \(G_{leak_i}\) stands for the leak conductance of neuron i. The electrical synapse between two nodes (i, j) is expressed using Ohm’s law:

For chemical synapses transmitting from j to i, a sigmoidal nonlinearity (\(\alpha _{ij}, \beta _{ij}\)) models the relationship as a function of membrane state, with a maximum weight of \(w_i\). Further details and derivation can be found in [13].

Here, \(E_{ij}\) represents the state of the neuron from i to j, indicating whether it excites or inhibits succeeding neurons. By substituting Eq. 5 into Eq. 3 and rearranging, we obtain:

This equation is responsible for the noise-resilient behavior of NCPs, yielding \(\tau _{sys}\)
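The membrane-integrator view can be made concrete with a small numerical sketch. It follows the general formulation of [13, 21], in which a neuron with a leak channel and one chemical synapse obeys \(C_m \dot{x}_i = G_{leak_i}(x_{leak_i} - x_i) + w\, \sigma (x_j)(E_{ij} - x_i)\), so that the effective time constant is \(C_m / (G_{leak_i} + w\, \sigma (x_j))\). Reading \(\alpha _{ij}\) as the slope and \(\beta _{ij}\) as the midpoint of the sigmoid, restricting the neuron to a single synapse, and all numeric values are assumptions of this sketch rather than details confirmed by the text.

```python
import math


def chemical_synapse_gate(x_pre, alpha, beta):
    """Sigmoidal synapse activation driven by the presynaptic potential.

    Assumption: alpha is the slope and beta the midpoint of the sigmoid.
    """
    return 1.0 / (1.0 + math.exp(-alpha * (x_pre - beta)))


def membrane_derivative(x_i, x_j, c_m, g_leak, x_leak, w, e_rev, alpha, beta):
    """dx_i/dt for one neuron with a leak channel and one chemical synapse."""
    gate = chemical_synapse_gate(x_j, alpha, beta)
    leak_current = g_leak * (x_leak - x_i)
    synaptic_current = w * gate * (e_rev - x_i)
    return (leak_current + synaptic_current) / c_m


def system_time_constant(x_j, c_m, g_leak, w, alpha, beta):
    """Effective time constant C_m / (G_leak + w * gate)."""
    return c_m / (g_leak + w * chemical_synapse_gate(x_j, alpha, beta))
```

In this form the time constant shrinks whenever the presynaptic neuron is active, which is one way to read the input-dependent behaviour attributed to \(\tau _{sys}\) above.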

2.3 Reinforcement Learning

In RL, the interaction between the agent and the environment is typically modeled as a Markov Decision Process (MDP) [26]. At each discrete time step, the agent receives a state \(\tilde{s}_t\) from the set \(\tilde{S}\), and in response, it sends an action \(\tilde{a}_t\) from the set \(\tilde{A}\) to the environment. Subsequently, a state transition occurs with a probability distribution \(\tilde{P}\), leading to a new state \(\tilde{s}_{t+1}\), and the agent receives a reward \(\tilde{R}\). This entire sequential process is characterized by the tuple \(\{\tilde{S}, \tilde{A}, \tilde{P}, \tilde{R}, \tilde{\gamma }\}\), where \(\tilde{\gamma }\) is the discount factor ranging from 0 to 1. The discount factor represents the agent’s inclination toward immediate rewards over long-term rewards. The agent selects an action by sampling from a policy \(\tilde{\pi }: \tilde{S} \rightarrow P(\tilde{A})\). This policy serves as a strategic guide for the agent’s decision-making process. Throughout the RL process, the policy is adjusted to encourage the selection of advantageous actions and discourage undesirable ones. The overarching objective of this learning process is to achieve an optimal policy, denoted as \(\tilde{\pi }^*\), that maximizes the cumulative discounted reward over time.
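The cumulative discounted reward that the optimal policy maximizes can be computed for a finite episode as \(\sum _k \tilde{\gamma }^k r_k\). The helper below is a minimal sketch of that sum; the function name and the example reward sequences are illustrative only.

```python
def discounted_return(rewards, gamma):
    """Sum of gamma**k * r_k over a finite reward sequence."""
    if not 0.0 <= gamma <= 1.0:
        raise ValueError("gamma must lie in [0, 1]")
    total = 0.0
    # Accumulate backwards so each step applies G_t = r_t + gamma * G_{t+1}.
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total
```

A reward of 1 received two steps in the future is worth \(0.9^2 = 0.81\) now when \(\tilde{\gamma } = 0.9\), whereas with \(\tilde{\gamma } = 0\) only the immediate reward counts.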

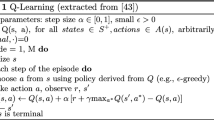

2.3.1 Deep Q-learning

Q-learning is an off-policy, model-free algorithm [36] that estimates the anticipated long-term reward for taking an action in a particular state, as represented by Q-values. The Q-values are acquired iteratively by updating the current estimate in the Q-table. In environments with a large number of unique states, as in our case, maintaining a separate estimate for every Q-value is infeasible. As a solution, the Q-table is replaced with a neural network that is parameterized by weights and biases collectively denoted as \(\tilde{\theta }\). The Q-values are estimated by forward passing observations to this neural network, and instead of updating individual Q-values, updates are made to the network’s parameters to minimize the error between the predicted and target Q-values.

where \(\tilde{Q}(\tilde{s}', \tilde{a}' | \tilde{\theta })\) denotes the target-network Q-values. Deep Q-learning (DQL) employs three techniques to restore learning stability. First, experiences are stored in a replay memory and uniformly sampled during training; this technique is known as the experience replay buffer (ERB). Second, a target network is used to provide Q-value updates to the main network. The target network’s parameters are updated at a slower rate, typically using a moving average of the main model’s parameters. Lastly, an adaptive learning method such as Adam [19] or SGD [23] is used to maintain a per-parameter learning rate that adjusts according to the history of gradient updates to that parameter.
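The first two stabilization techniques can be sketched in a few lines. The code below shows a uniform replay buffer, the standard Q-learning bootstrap target \(r + \tilde{\gamma } \max _{a'} Q(s', a')\) evaluated with target-network values, the squared error that the main network would minimize, and a moving-average target update. Parameters are represented as plain lists of floats, and the names `ReplayBuffer`, `td_target`, `squared_td_error`, and `soft_update` are hypothetical; this is a sketch of the general technique, not the training code used here.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-capacity memory with uniform sampling of stored transitions."""

    def __init__(self, capacity, seed=None):
        self._items = deque(maxlen=capacity)  # oldest entries are dropped
        self._rng = random.Random(seed)

    def add(self, transition):
        self._items.append(transition)

    def sample(self, batch_size):
        return self._rng.sample(list(self._items), batch_size)

    def __len__(self):
        return len(self._items)


def td_target(reward, next_q_values, gamma, done):
    """Bootstrap target r + gamma * max_a' Q_target(s', a')."""
    if done:
        return reward  # no future reward after a terminal state
    return reward + gamma * max(next_q_values)


def squared_td_error(q_value, target):
    """Per-sample loss minimized with respect to the main network."""
    return (target - q_value) ** 2


def soft_update(target_params, main_params, rate):
    """Moving average: target <- (1 - rate) * target + rate * main."""
    return [(1.0 - rate) * t + rate * m
            for t, m in zip(target_params, main_params)]
```

A small `rate` makes the target network trail the main network slowly, which is what keeps the bootstrap targets from shifting on every gradient step.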

3 Methods

In this section, we briefly outline the methodology used to implement our architecture. We start by explaining the basics of RL, describing the Doom environment as a Markov Decision Process (MDP) and its key components. After that, we discuss the internal structure of the pre-processing stage. Following this, we move on to the system setup and, finally, provide details on our implementation.

3.1 Doom as Markov Decision Process

In 1993, id Software released Doom, a popular game that sold nearly 3.5 million physical copies and 1.15 million shareware copies. The game features a protagonist who must chase and kill a variety of opponents. To explore the game’s potential and advance RL research, researchers adapted the game into an AI research platform called VizDoom [17]. This platform features various scenarios and modes, including sync, async, single, and multiplayer; the basic scenario was chosen for this research. Figure 2 shows the VizDoom environment as an MDP.

3.1.1 State (S)

Each VizDoom state comprises 3x240x320 raw RGB pixels, and a reset signal is issued after every 300 steps to facilitate learning. To handle the state constraints, we wrapped the environment in an OpenAI Gym environment [4].

3.1.2 Action Space (A)

The basic scenario in VizDoom involves an action space that corresponds to the identity matrix (\(I_D\)) of dimensions (3x3). The first row of the matrix pertains to the action of moving left, the second row corresponds to the action of moving right, and the last row relates to the action of shooting. To select the appropriate action, we configured the agent to predict discrete values from 0 to 2.

\(\tilde{A} = I_D = \begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix}\)
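The mapping from the agent’s discrete prediction (0 to 2) to a row of \(I_D\) can be sketched as follows. The label tuple and the function name `action_to_buttons` are illustrative and not taken from the released code.

```python
# Row order follows the text: move left, move right, shoot.
ACTION_NAMES = ("move_left", "move_right", "shoot")  # illustrative labels


def action_to_buttons(index, n_actions=3):
    """Return row `index` of the n_actions x n_actions identity matrix."""
    if not 0 <= index < n_actions:
        raise ValueError(f"action index must be in [0, {n_actions - 1}]")
    return [1 if i == index else 0 for i in range(n_actions)]
```

For example, a predicted index of 2 yields `[0, 0, 1]`, the row associated with shooting.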

3.1.3 Reward (R)

The reward allocation mechanism in the original VizDoom environment is complex and challenging to comprehend. The reward value fluctuates from -400 to 95 depending upon the number of steps performed. During the initial stages of training, the substantial negative reward hinders the learning capability of the model. To overcome this issue, we adopted a success-oriented approach for the reward. We designed the reward such that if the agent is successful in pursuing the target, it will receive a reward of 1, otherwise it will receive a reward of 0.

\(\tilde{R} = \begin{cases} 1, & \text {if chased} \\ 0, & \text {otherwise} \end{cases}\)

3.1.4 Policy

In machine learning, convergence denotes a state where further training fails to enhance performance. However, in RL, the exploration of the environment and the random sampling of experience can cause the policy to continue to vary even after convergence. This results in policies that differ slightly in their ability to execute the desired skills. Given that the basic scenario comprises three discrete actions (\(\tilde{a}_t\)), we opted for the Boltzmann exploration policy, which is specifically designed for discrete action spaces. This policy assumes that each possible action is associated with a Q-value and leverages a softmax function and a temperature schedule (\(\tau \)) to transform these values into a distribution over actions.
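A Boltzmann policy of this kind turns Q-values into action probabilities \(p(a) \propto \exp (Q(a)/\tau )\). The sketch below is a minimal, numerically stable version; the temperature is passed in as a plain number because the schedule used in this work is not specified here, and the function names are illustrative.

```python
import math
import random


def boltzmann_probabilities(q_values, temperature):
    """Softmax over Q-values scaled by the temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [q / temperature for q in q_values]
    peak = max(scaled)  # subtract the maximum to avoid overflow in exp
    weights = [math.exp(s - peak) for s in scaled]
    total = sum(weights)
    return [w / total for w in weights]


def sample_action(q_values, temperature, rng=random):
    """Draw one action index from the Boltzmann distribution."""
    probs = boltzmann_probabilities(q_values, temperature)
    return rng.choices(range(len(q_values)), weights=probs, k=1)[0]
```

A high temperature gives near-uniform exploration across the three actions, and lowering it concentrates the probability mass on the action with the largest Q-value.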

3.2 Pre-process

To reduce the computational overhead associated with processing game states, we implemented a pre-processor that converts the observed state to gray-scale, resizes it to a lower dimension (85x85 in our implementation), and subsequently normalizes the batch. The internal architecture of the pre-processor is illustrated in Fig. 3.
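The three pre-processing steps can be sketched on a channels-first frame stored as nested lists. The luma weights (ITU-R BT.601), nearest-neighbour resizing, and scaling to \([0, 1]\) by dividing by 255 are assumptions of this sketch; the text does not state which gray-scale conversion, interpolation, or normalization was used.

```python
def to_grayscale(frame):
    """Collapse [R, G, B] planes (each H x W) into one H x W luma plane."""
    red, green, blue = frame
    height, width = len(red), len(red[0])
    return [[0.299 * red[y][x] + 0.587 * green[y][x] + 0.114 * blue[y][x]
             for x in range(width)] for y in range(height)]


def resize_nearest(image, out_h, out_w):
    """Nearest-neighbour resize of an H x W image to out_h x out_w."""
    in_h, in_w = len(image), len(image[0])
    return [[image[y * in_h // out_h][x * in_w // out_w]
             for x in range(out_w)] for y in range(out_h)]


def normalize(image, max_value=255.0):
    """Scale pixel intensities to the range [0, 1]."""
    return [[pixel / max_value for pixel in row] for row in image]


def preprocess(frame, size=85):
    """Gray-scale, resize to size x size, then normalize."""
    return normalize(resize_nearest(to_grayscale(frame), size, size))
```

Applied to a 3x240x320 state, `preprocess` returns an 85x85 array, which cuts the number of input values per frame from 230,400 to 7,225.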

3.3 System Setup

We employed the Robot Operating System 2 (ROS2) framework for the implementation and evaluation of our algorithm. The utilization of ROS2 offers numerous advantages, streamlining the development and integration of complex systems by providing a common interface and communication standards. The framework (illustrated in Fig. 4) comprises three distinct nodes: states, agent, and visualization, each briefly outlined below.

3.3.1 State Node

This node incorporates crucial simulation files to convey the state of VizDoom. It publishes the state and reward topics and subscribes to the action topic. The internal architecture of the state node is depicted in Fig. 5.

3.3.2 AI Node

This node encompasses the algorithm and preprocessor and subscribes to the state topic. Upon receiving states, it preprocesses them before feeding them into the RL agent. The AI node publishes an action topic containing the actions predicted by our designed agent. The internal architecture of the AI node is illustrated in Fig. 6.

3.3.3 Visualization Node

Specifically designed to monitor the agent’s performance, this node dynamically illustrates the success-rate and average steps taken in real-time.

3.4 Implementation

The proposed architecture comprises a sequence of convolutional neural layers followed by an LTC network that includes NCPs wiring. More precisely, our implemented architecture consists of two convolutional layers with 10 and 20 filters, respectively. The complete parameters for the neural network layers are provided in Table 1. We implemented the architecture using Python 3.10 and TensorFlow in the ROS2 Humble framework. The hardware consists of an Intel Xeon E5-2650 v3 CPU with 16GB of RAM.

We employed the RNN-based Deep Q-network, also known as the Deep Recurrent Q-network (DRQN) algorithm [14], to train our agent. This algorithm learns a policy that selects actions based on states received from the environment by estimating the future rewards for each possible action. DRQN trains the agent synchronously: it samples data from an experience replay buffer (ERB) while simultaneously pursuing the target using the most recent policy and continuously filling the buffer with new experiences in an online training configuration. Table 2 provides all the hyper-parameters for our implemented algorithm.

Similar to other physical and virtual sports, the game at hand requires the presence of human referees. These officials promptly assess chase occurrences and determine the outcome of each chase. A certain degree of inadvertent shots, considered tolerable, is typical. The agent’s input includes a signal stipulating that the player must pursue the target within a margin of 300 steps; failure to do so results in a loss.
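To give a feel for the sparse NCPs wiring that follows the convolutional layers, the sketch below builds random connection masks for a sensory, inter, command, and motor hierarchy with 12 inter-neurons and 8 command neurons. The number of sensory neurons, the choice of 3 motor neurons (one per action), the fan-out values, and the random polarity assignment are all assumptions made for illustration, and recurrent synapses are omitted; the actual layer parameters are those of Table 1 and the design procedure of [28].

```python
import random


def sparse_layer(n_src, n_dst, fanout, rng):
    """Connect each source neuron to `fanout` distinct target neurons.

    Entries are +1 (excitatory), -1 (inhibitory) or 0 (no synapse).
    """
    mask = [[0] * n_dst for _ in range(n_src)]
    for src in range(n_src):
        for dst in rng.sample(range(n_dst), fanout):
            mask[src][dst] = rng.choice((-1, 1))
    return mask


def build_wiring(n_sensory, n_inter=12, n_command=8, n_motor=3,
                 sensory_fanout=4, inter_fanout=4, command_fanout=2, seed=0):
    """Feed-forward part of a four-layer sparse wiring (hypothetical sizes)."""
    rng = random.Random(seed)
    return {
        "sensory_to_inter": sparse_layer(n_sensory, n_inter, sensory_fanout, rng),
        "inter_to_command": sparse_layer(n_inter, n_command, inter_fanout, rng),
        "command_to_motor": sparse_layer(n_command, n_motor, command_fanout, rng),
    }
```

Because every row of a mask has only a handful of non-zero entries, the influence of a single neuron on the next layer can be read directly from its row, which is the property the interpretability argument relies on.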

4 Evaluation

In this section, we conduct an evaluation of our architecture. Initially, we compare the sizes of the respective networks. Subsequently, we assess the performance of each network by deploying the neural agent in a live environment. Following this, we test the robustness by introducing noise to the environment. Finally, we provide a brief overview of the explainability aspect.

4.1 Network Comparison

The hyper-parameters of the convolutional heads are designed so that the first layer extracts important features from the environment and passes them to the second layer, which identifies and localizes the target. For a fair comparison with NCPs, we use the same convolutional heads and combine them with different state-of-the-art recurrent networks, i.e. Simple Recurrent Neural Network (SimpleRNN), Long Short Term Memory (LSTM), and Gated Recurrent Unit (GRU). The hyper-parameters of each model are chosen to attain the optimal policy, and each model is trained for 1.5 million steps. The comparison of network sizes is presented in Table 3.

4.2 Performance Test

To assess the performance of each architecture, we devised five tests, each comprising 30 random episodes. We found that all models successfully addressed the challenges, achieving an average success rate of 90\(\%\). However, our agent exhibited the highest consistency in performance and required fewer steps to achieve its objectives in each test. The results are depicted in Fig. 8.

4.3 Noise Test

To assess the robustness of the architecture, we added Gaussian noise \(f(x | \mu , \sigma )\) to the VizDoom states within the state node, using a mean (\(\mu \)) of 0 and a standard deviation (\(\sigma \)) of 50. We found that our designed architecture exhibited the highest resilience to noise, requiring fewer average steps per test while achieving the highest success rate. The GRU model ranked second, followed by the LSTM model in third place. The SimpleRNN model experienced the most significant decline in performance, making it the most susceptible to noise. The results are depicted in Fig. 9.
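The noise injection can be sketched as adding an independent Gaussian sample with \(\mu = 0\) and \(\sigma = 50\) to every pixel. Clipping the result to \([0, 255]\) assumes 8-bit pixel intensities and is not stated in the text; the function name `add_gaussian_noise` is illustrative.

```python
import random


def add_gaussian_noise(pixels, mean=0.0, std=50.0, rng=None):
    """Add i.i.d. Gaussian noise to an H x W pixel plane.

    Values are clipped to [0, 255], assuming 8-bit intensities.
    """
    rng = rng or random.Random()
    noisy = []
    for row in pixels:
        noisy.append([min(255.0, max(0.0, p + rng.gauss(mean, std)))
                      for p in row])
    return noisy
```

On a 0 to 255 scale, a standard deviation of 50 is roughly a fifth of the full intensity range, so the perturbation is clearly visible in every frame.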

4.4 Explainability

Explainability in AI refers to the ability to make the decision-making process more understandable and interpretable to humans. The output of NCPs is designed to have biological plausibility, meaning that the output of each neuron lies within a certain range of voltages [21]. The activity of neurons refers to the output voltages of each neuron at a particular instant, which describes the activation of neurons at that moment [28]. To measure the output voltages of neurons in NCPs, we used three sample images for inferring and verifying the relevant voltages. The neural network undergoes three key steps during this process: target identification, navigation towards the target, and execution (see Fig. 10). To determine the activated neurons at a particular instant, we selected three test cases: Left (indicating the agent should navigate left), Middle (suggesting the target is aligned with the agent), and Right (indicating the agent needs to navigate right). The summarized results are presented in Table 4, with specific neuron activations highlighted in bold.

5 Conclusion

In this research, we developed a hybrid deep learning architecture that integrates Convolutional Neural Networks (CNN) with Neural Circuit Policies (NCPs), inspired by the structure of worm brains. The CNN extracts important spatial information from the environment and passes it to the NCPs, which handle temporal features for high-stakes decisions. This combined architecture was incorporated into a state-of-the-art reinforcement learning algorithm and applied to the complex environment of VizDoom, characterized by realistic and intricate physics. Our experimental results indicate that the NCPs architecture, consisting of only 20 control neurons (comprising 12 inter-neurons and 8 command neurons), not only matches but outperforms the other architectures, demonstrating robustness and noise resilience. We highlight the potential of the NCPs architecture to compete with cutting-edge algorithms and emphasize its ability to produce interpretable and expressive neural agents, which is crucial for real-world applications such as robotics where the environment is constantly changing.

Future Work: While our results are promising, there are areas for improvement within our architecture. The implemented reward mechanism is a simplified version. A more precise reward mechanism with penalties has the potential to significantly enhance the capabilities of our neural network. Additionally, our current scope is constrained by hardware limitations, and our focus has been primarily on a basic game scenario. To fully exploit the proposed methodology, future research should encompass diverse scenarios, allowing for a more comprehensive understanding and application of the proposed architecture.

Code Availability

The code is available at https://github.com/itxwaleedrazzaq/VizdoomNCPs.

References

1. Adil K, Jiang F, Liu S, Grigorev A, Gupta BB, Rho S (2017) Training an agent for FPS Doom game using visual reinforcement learning and VizDoom. Int J Adv Comput Sci Appl. https://doi.org/10.14569/IJACSA.2017.081205

2. Bengio Y, Simard P, Frasconi P (1994) Learning long-term dependencies with gradient descent is difficult. IEEE Trans Neural Netw 5(2):157–166

3. Berner C, Brockman G, Chan B, Cheung V, Dębiak P, Dennison C, Farhi D, Fischer Q, Hashme S, Hesse C et al (2019) Dota 2 with large scale deep reinforcement learning. arXiv preprint arXiv:1912.06680

4. Brockman G, Cheung V, Pettersson L, Schneider J, Schulman J, Tang J, Zaremba W (2016) OpenAI Gym. arXiv preprint arXiv:1606.01540

5. Chung J, Gulcehre C, Cho K, Bengio Y (2014) Empirical evaluation of gated recurrent neural networks on sequence modeling. arXiv preprint arXiv:1412.3555

6. Ghanbari A, Vaghei Y, Sayyed NSMR (2014) Reinforcement learning in neural networks: a survey. Int J Adv Biol Biomed Res (IJABBR)

7. Gordon JA, Stryker MP (1996) Experience-dependent plasticity of binocular responses in the primary visual cortex of the mouse. J Neurosci 16(10):3274–3286

8. Gray JM, Hill JJ, Bargmann CI (2005) A circuit for navigation in Caenorhabditis elegans. Proc Nat Acad Sci 102(9):3184–3191

9. Grondman I, Busoniu L, Lopes GAD, Babuska R (2012) A survey of actor-critic reinforcement learning: standard and natural policy gradients. IEEE Trans Syst Man Cybernet Part C (Applications and Reviews) 42(6):1291–1307

10. Hasani R, Lechner M, Amini A, Rus D, Grosu R (2018) Can a compact neuronal circuit policy be re-purposed to learn simple robotic control? arXiv preprint arXiv:1809.04423

11. Hasani R, Lechner M, Amini A, Rus D, Grosu R (2020) A natural lottery ticket winner: reinforcement learning with ordinary neural circuits. In: International conference on machine learning, pp 4082–4093. PMLR

12. Hasani R, Lechner M, Amini A, Rus D, Grosu R (2021) Liquid time-constant networks. Proc AAAI Conf Artif Intell 35:7657–7666

13. Hasani RM, Lechner M, Amini A, Rus D, Grosu R (2018) Liquid time-constant recurrent neural networks as universal approximators. arXiv preprint arXiv:1811.00321

14. Hausknecht M, Stone P (2015) Deep recurrent Q-learning for partially observable MDPs. arXiv preprint arXiv:1507.06527

15. Hochreiter S, Schmidhuber J (1996) LSTM can solve hard long time lag problems. Adv Neural Inf Process Syst 9

16. Kato S, Kaplan HS, Schrödel T, Skora S, Lindsay TH, Yemini E, Lockery S, Zimmer M (2015) Global brain dynamics embed the motor command sequence of Caenorhabditis elegans. Cell 163(3):656–669

17. Kempka M, Wydmuch M, Runc G, Toczek J, Jaśkowski W (2016) VizDoom: a Doom-based AI research platform for visual reinforcement learning. In: 2016 IEEE conference on computational intelligence and games (CIG), pp 1–8. IEEE

18. Khan A, Naeem M, Zubair AM, Ud Din A, Khan A (2020) Playing first-person shooter games with machine learning techniques and methods using the VizDoom game-AI research platform. Entertain Comput 34:100357

19. Kingma DP, Ba J (2014) Adam: a method for stochastic optimization. arXiv preprint arXiv:1412.6980

20. Lechner M, Hasani R, Amini A, Henzinger TA, Rus D, Grosu R (2020) Neural circuit policies enabling auditable autonomy. Nat Mach Intell 2(10):642–652

21. Lechner M, Hasani RM, Grosu R (2018) Neuronal circuit policies. arXiv preprint arXiv:1803.08554

22. Li J, Yu J, Nie Y, Wang Z (2020) End-to-end learning and intervention in games. Adv Neural Inf Process Syst 33:16653–16665

23. Loshchilov I, Hutter F (2016) SGDR: stochastic gradient descent with warm restarts. arXiv preprint arXiv:1608.03983

24. Mnih V, Kavukcuoglu K, Silver D, Graves A, Antonoglou I, Wierstra D, Riedmiller M (2013) Playing Atari with deep reinforcement learning. arXiv preprint arXiv:1312.5602

25. Morante J, Desplan C (2008) The color-vision circuit in the medulla of Drosophila. Curr Biol 18(8):553–565

26. Puterman ML (1990) Markov decision processes. Handb Op Res Manag Sci 2:331–434

27. Rankin CH, Beck CDO, Chiba CM (1990) Caenorhabditis elegans: a new model system for the study of learning and memory. Behav Brain Res 37(1):89–92

28. Razzaq W, Hongwei M (2023) Neural circuit policies imposing visual perceptual autonomy. Neural Processing Letters, pp 1–16

29. Shao K, Zhao D, Li N, Zhu Y (2018) Learning battles in VizDoom via deep reinforcement learning. In: 2018 IEEE conference on computational intelligence and games (CIG), pp 1–4. IEEE

30. Silver D, Schrittwieser J, Simonyan K, Antonoglou I, Huang A, Guez A, Hubert T, Baker L, Lai M, Bolton A (2017) Mastering the game of Go without human knowledge. Nature 550(7676):354–359

31. Stephens GJ, Johnson-Kerner B, Bialek W, Ryu WS (2008) Dimensionality and dynamics in the behavior of C. elegans. PLoS Comput Biol 4(4):e1000028

32. Truong Hieu M, Trung HH (2022) A novel approach of using neural circuit policies for COVID-19 classification on CT-images. In: Future Data and Security Engineering. Big Data, Security and Privacy, Smart City and Industry 4.0 Applications: 9th International Conference, FDSE 2022, Ho Chi Minh City, Vietnam, November 23–25, 2022, Proceedings, pp 640–652. Springer

33. Tylkin P, Wang T-H, Palko K, Allen R, Siu HC, Wrafter D, Seyde T, Amini A, Rus D (2022) Interpretable autonomous flight via compact visualizable neural circuit policies. IEEE Robotics Autom Lett 7(2):3265–3272

34. Vinyals O, Babuschkin I, Czarnecki WM, Mathieu M, Dudzik A, Chung J, Choi DH, Powell R, Ewalds T, Georgiev P (2019) Grandmaster level in StarCraft II using multi-agent reinforcement learning. Nature 575(7782):350–354

35. Wang Z, Schaul T, Hessel M, Hasselt H, Lanctot M, Freitas N (2016) Dueling network architectures for deep reinforcement learning. In: International conference on machine learning, pp 1995–2003. PMLR

36. Watkins CJCH, Dayan P (1992) Q-learning. Mach Learn 8:279–292

37. Wicks SR, Roehrig CJ, Rankin CH (1996) A dynamic network simulation of the nematode tap withdrawal circuit: predictions concerning synaptic function using behavioral criteria. J Neurosci 16(12):4017–4031

38. Wurman PR, Barrett S, Kawamoto K, MacGlashan J, Subramanian K, Walsh TJ, Capobianco R, Devlic A, Eckert F, Fuchs F et al (2022) Outracing champion Gran Turismo drivers with deep reinforcement learning. Nature 602(7896):223–228

39. Wydmuch M, Kempka M, Jaśkowski W (2018) VizDoom competitions: playing Doom from pixels. IEEE Trans Games 11(3):248–259

40. Yu Y, Si X, Hu C, Zhang J (2019) A review of recurrent neural networks: LSTM cells and network architectures. Neural Comput 31(7):1235–1270

41. Zakharenkov A, Makarov I (2021) Deep reinforcement learning with DQN vs. PPO in VizDoom. In: 2021 IEEE 21st international symposium on computational intelligence and informatics (CINTI), pp 000131–000136. IEEE

Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Razzaq, W., Raza, K. Neural Circuit Policies for Virtual Character Control. Neural Process Lett 56, 188 (2024). https://doi.org/10.1007/s11063-024-11640-x

DOI: https://doi.org/10.1007/s11063-024-11640-x