Abstract

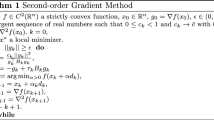

In this paper, we introduce the concept of an approximate optimal stepsize for the gradient method, use it to interpret the Barzilai-Borwein (BB) method, and present an efficient gradient method with approximate optimal stepsize for large-scale unconstrained optimization. If the objective function $f$ is not close to a quadratic on the line segment between the current iterate $x_k$ and the previous iterate $x_{k-1}$, we construct a conic model to generate the approximate optimal stepsize, provided the conic model is suitable. Otherwise, we construct a new quadratic model or two other new approximation models to generate the approximate optimal stepsize. We analyze the convergence of the proposed method under suitable conditions. Numerical results show that the proposed method is very promising.
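To make the BB interpretation concrete, here is a minimal Python sketch. It is not the method proposed in the paper. It assumes that an approximate optimal stepsize is the minimizer over $\alpha$ of a model of $f(x_k - \alpha g_k)$, and that the model is the quadratic $f(x_k) - \alpha g_k^T g_k + \tfrac{1}{2}\alpha^2 g_k^T B g_k$ with the scalar matrix $B = (s^T y / s^T s) I$, where $s = x_k - x_{k-1}$ and $y = g_k - g_{k-1}$. Under these assumptions the minimizer is the classical BB stepsize $s^T s / s^T y$. The function names, the initial stepsize `alpha0`, and the test problem are hypothetical choices made for illustration.

```python
import numpy as np

def quadratic_model_stepsize(g, B):
    """Minimizer over alpha of the assumed quadratic model
    f - alpha * g@g + 0.5 * alpha**2 * g@B@g (requires g@B@g > 0)."""
    return (g @ g) / (g @ B @ g)

def bb_gradient(grad, x0, alpha0=1e-3, tol=1e-8, max_iter=500):
    """Gradient method x_{k+1} = x_k - alpha_k * g_k with the BB stepsize."""
    x = np.asarray(x0, dtype=float)
    g = grad(x)
    alpha = alpha0  # hypothetical initial stepsize
    for k in range(max_iter):
        if np.linalg.norm(g) <= tol:
            return x, k
        x_new = x - alpha * g
        g_new = grad(x_new)
        s, y = x_new - x, g_new - g
        sy = s @ y
        # BB stepsize s's / s'y; fall back to alpha0 if the curvature
        # estimate s'y is not positive (a safeguard chosen for this sketch)
        alpha = (s @ s) / sy if sy > 0 else alpha0
        x, g = x_new, g_new
    return x, max_iter

# Illustrative strictly convex quadratic f(x) = 0.5 x'Ax - b'x
A = np.diag([1.0, 10.0, 100.0])
b = np.ones(3)
x_star, iters = bb_gradient(lambda x: A @ x - b, np.zeros(3))
```

On this test problem, `x_star` should approximately solve `A @ x = b`. The sketch uses no line search and none of the conic or alternative models mentioned in the abstract.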

Acknowledgements

We would like to thank the two anonymous referees for their valuable comments, which helped to improve the quality of this paper. We also thank Professor Y. H. Dai and Dr. Caixia Kou for their C code of CGOPT, and Hager and H. C. Zhang for their C code of CG_DESCENT (5.3). This research is supported by the National Science Foundation of China (No. 11461021), the Guangxi Science Foundation (Nos. 2014GXNSFAA118028, 2015GXNSFAA139011), the Scientific Research Project of Hezhou University (Nos. 2014YBZK06, 2016HZXYSX03), and the Guangxi Colleges and Universities Key Laboratory of Symbolic Computation and Engineering Data Processing.

Cite this article

Liu, Z., Liu, H. An efficient gradient method with approximate optimal stepsize for large-scale unconstrained optimization. Numer Algor 78, 21–39 (2018). https://doi.org/10.1007/s11075-017-0365-2

DOI: https://doi.org/10.1007/s11075-017-0365-2

Keywords

- Approximate optimal stepsize

- Barzilai-Borwein (BB) method

- Quadratic model

- Conic model

- BFGS update formula