Abstract

Bilevel problems model instances with a hierarchical structure. Aiming at an efficient solution of a constrained multiobjective problem according to some pre-defined criterion, we reformulate this semivectorial bilevel optimization problem as a classic bilevel one. This reformulation intends to encompass all the objectives, so that the properly efficient solution set is recovered by means of a convenient weighted-sum scalarization approach. Inexact restoration strategies potentially take advantage of the structure of the problem under consideration, and are employed as an alternative to the Karush-Kuhn-Tucker reformulation of the bilevel problem. Genuine multiobjective problems possess inequality constraints in their modeling, and these constraints generate theoretical and practical difficulties for our lower level problem. We handle these difficulties by means of a perturbation strategy, providing the convergence analysis, together with enlightening examples and illustrative numerical tests.

References

Andreani, R., Castro, S.L.C., Chela, J.L., Friedlander, A., Santos, S.A.: An inexact-restoration method for nonlinear bilevel programming problems. Comput. Optim. Appl. 43(3), 307–328 (2009)

Benson, H.P.: An improved definition of proper efficiency for vector maximization with respect to cones. J. Math. Anal. Appl. 71(1), 232–241 (1979)

Benson, H.P.: Optimization over the efficient set. J. Math. Anal. Appl. 98(2), 562–580 (1984)

Bonnel, H., Morgan, J.: Semivectorial bilevel optimization problem: penalty approach. J. Optim. Theory Appl. 131(3), 365–382 (2006)

Bonnel, H., Morgan, J.: Optimality conditions for semivectorial bilevel convex optimal control problems, Proceedings in Mathematics & Statistics, vol. 50, pp. 5–78. Springer, Heidelberg (2013)

Borwein, J.: Proper efficient points for maximizations with respect to cones. SIAM J. Control. Optim. 15(1), 57–63 (1977)

Büskens, C., Wassel, D.: The ESA NLP Solver WORHP, chap. 8. Springer Optimization and Its Applications, vol. 73, pp. 85–110. Springer (2012)

Bueno, L.F., Haeser, G., Martínez, J.M.: An inexact restoration approach to optimization problems with multiobjective constraints under weighted-sum scalarization. Optim. Lett. 10(6), 1315–1325 (2016)

Deb, K.: Multi-objective genetic algorithms: problem difficulties and construction of test problems. Evol. Comput. 7(3), 205–230 (1999)

Ehrgott, M.: Multicriteria Optimization. Springer, Berlin (2005)

El-Bakry, A.S., Tapia, R.A., Tsuchiya, T., Zhang, Y.: On the formulation and theory of the Newton interior-point method for nonlinear programming. J. Optim. Theory Appl. 89(3), 507–541 (1996)

Fülöp, J.: On the equivalence between a linear bilevel programming problem and linear optimization over the efficient set. Technical report WP93-1, Laboratory of Operations Research and Decision Systems, Computer and Automation Institute, Hungarian Academy of Sciences (1993)

Fletcher, R., Leyffer, S.: Numerical experience with solving MPECs as NLPs. Tech. rep., University of Dundee Report NA pp. 210 (2002)

Fletcher, R., Leyffer, S.: Solving mathematical programs with complementarity constraints as nonlinear programs. Optimization Methods and Software 19(1), 15–40 (2004)

Fletcher, R., Leyffer, S., Ralph, D., Scholtes, S.: Local convergence of SQP methods for mathematical programs with equilibrium constraints. SIAM J. Optim. 17(1), 259–286 (2006)

Geoffrion, A.M.: Proper efficiency and the theory of vector maximization. J. Math. Anal. Appl. 22(3), 618–630 (1968)

Guo, X.L., Li, S.J.: Optimality conditions for vector optimization problems with difference of convex maps. J. Optim. Theory Appl. 162(3), 821–844 (2014)

HSL. A collection of Fortran codes for large scale scientific computation. Available at http://www.hsl.rl.ac.uk/

Huband, S., Barone, L., While, L., Hingston, P.: A scalable multi-objective test problem toolkit, pp. 280–295. Springer, Berlin (2005)

Kuhn, H.W., Tucker, A.W.: Nonlinear programming. In: Neyman, J. (ed.) Proceedings of the second Berkeley symposium on mathematical statistics and probability, pp. 481–492. University of California Press, Berkeley (1951)

Martínez, J.M.: Inexact-restoration method with Lagrangian tangent decrease and new merit function for nonlinear programming. J. Optim. Theory Appl. 3(1), 39–58 (2001)

Martínez, J.M., Pilotta, E.A.: Inexact-restoration algorithm for constrained optimization. J. Optim. Theory Appl. 104(1), 135–163 (2000)

Martínez, J.M., Svaiter, B.F.: A practical optimality condition without constraint qualifications for nonlinear programming. J. Optim. Theory Appl. 118(1), 117–133 (2003)

Miettinen, K.M.: Nonlinear Multiobjective Optimization. Kluwer Academic Publishers, Boston (1999)

Pilotta, E.A., Torres, G.A.: An inexact restoration package for bilevel programming problems. Appl. Math. 15(10A), 1252–1259 (2012)

Scholtes, S.: Convergence properties of a regularization scheme for mathematical programs with complementarity constraints. SIAM J. Optim. 11(4), 918–936 (2001)

Srinivas, N., Deb, K.: Muiltiobjective optimization using nondominated sorting in genetic algorithms. Evol. Comput. 2(3), 221–248 (1994)

Tanaka, M., Watanabe, H., Furukawa, Y., Tanino, T.: GA-based decision support system for multicriteria optimization. In: IEEE International Conference on Systems, Man and Cybernetics. Intelligent Systems for the 21St Century, vol. 2, pp. 1556–1561 (1995)

Zitzler, E., Deb, K., Thiele, L.: Comparison of multiobjective evolutionary algorithms: empirical results. Evol. Comput. 8(2), 173–195 (2000)

Acknowledgements

The authors are thankful to an anonymous reviewer, whose remarks and suggestions have provided improvements upon the original version of the manuscript.

Additional information

This work has been partially supported by the Brazilian funding agencies Fundação de Amparo à Pesquisa do Estado de São Paulo – FAPESP (grants 2013/05475-7 and 2013/07375-0) and Conselho Nacional de Desenvolvimento Científico e Tecnológico – CNPq (grants 303013/2013-3 and 302915/2016-8).

Appendix: Test Problems

Problem 1.

This is the problem (10)–(11) of example 1 on page 8.

Problem 2.

We define problem 2 as the minimization of \(F(x_{1},x_{2})={x_{1}^{2}}+{x_{2}^{2}}\) over the set \(\mathscr X^{*}\) of efficient solutions of the multiobjective constrained problem [28]

\(\mathscr X^{*}\) is disconnected and nonconvex. There are four optimal solutions with functional value \(F^{*}\approx 0.95\) (see Fig. 3).

Problem 3.

Problem 3 consists in minimizing \(F(x_{1},x_{2})={x_{1}^{2}}+{x_{2}^{2}}\) over the set of efficient solutions of

The feasible set is \(\mathscr X^{*}=\{ (x_{1},x_{2})\in \mathbb{R} ^{2} : x_{2}={x_{1}^{2}},\ 0\leq x_{1}\leq 2 \}\) and \((0, 0)^{T}\) is the optimal solution.

Problem 4.

The lower level multiobjective problem is exactly the same as that of problem 3, and thus the feasible set \(\mathscr X^{*}\) is the same. The upper level objective function of problem 4 is \(F(x_{1},x_{2}) = (x_{1}-1)^{2} + (x_{2}-1)^{2}\), and \((1, 1)^{T}\) is the optimal solution.

Problem 5.

This is a slight modification of problem 4. It consists in minimizing \(F(x_{1},x_{2}) = (x_{1}-1)^{2} + (x_{2}-1)^{2}\) over the set of efficient solutions of

The feasible set is \(\mathscr X^{*}=\{ (x_{1},x_{2})\in \mathbb{R} ^{2} : x_{2}={x_{1}^{2}},\ 0\leq x_{1}\leq 2^{-2/3} \}\) and the optimal solution is \((2^{-2/3}, 2^{-4/3})^{T} \approx (0.63, 0.40)^{T}\).
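The solutions stated for problems 3 to 5 can be checked numerically, since in all three cases the feasible set is a segment of the parabola \(x_{2}={x_{1}^{2}}\). The following is a minimal sketch, not the method used in the paper: it simply samples the parabola on a uniform grid and keeps the best point. The helper name `argmin_on_parabola` and the grid size are assumptions made for illustration.

```python
def argmin_on_parabola(F, t_max, samples=20001):
    """Grid-search minimizer of F(t, t^2) for t in [0, t_max].

    Hypothetical helper: it relies on the feasible sets of problems 3-5
    being parameterized as x1 = t, x2 = t^2, as stated in the text.
    """
    best_t, best_val = 0.0, F(0.0, 0.0)
    for k in range(1, samples):
        t = t_max * k / (samples - 1)
        val = F(t, t * t)
        if val < best_val:  # keep the first strict improvement
            best_t, best_val = t, val
    return (best_t, best_t * best_t), best_val


def F_problem3(x1, x2):
    # Upper level objective of problem 3.
    return x1 ** 2 + x2 ** 2


def F_problem4(x1, x2):
    # Upper level objective shared by problems 4 and 5.
    return (x1 - 1.0) ** 2 + (x2 - 1.0) ** 2


sol3, val3 = argmin_on_parabola(F_problem3, 2.0)
sol4, val4 = argmin_on_parabola(F_problem4, 2.0)
sol5, val5 = argmin_on_parabola(F_problem4, 2.0 ** (-2.0 / 3.0))

print("problem 3:", sol3)
print("problem 4:", sol4)
print("problem 5:", sol5)
```

In problem 5 the unconstrained minimizer \((1,1)^{T}\) of \(F\) lies outside the shortened segment, and \(F\) decreases along the parabola for \(0\leq x_{1}\leq 1\), so the grid search ends at the right endpoint of the segment.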

Problem 6.

The lower level problem is

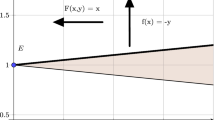

Geometrically, the set \(\mathscr X^{*}\) of efficient solutions of this multiobjective problem is composed of the points at which the level sets of both objective functions are tangent to each other, whenever such points lie in the half-space defined by \(x_{1} + x_{2} \geq 2\), and of points on the line \(x_{1} + x_{2} = 2\) otherwise. More specifically, \(\mathscr X^{*}\) is the union of the curves

The upper level objective function is \(F(x_{1},x_{2}) = (x_{1}-9/2)^{2} + {x_{2}^{2}}\), for which \((9/2, 0)^{T}\) is the optimal solution. Figure 4 illustrates its geometry.

Problem 7.

This is the problem (12)–(13) of example 2 on page 9.

Problems 8 to 11 (ZDT family instances).

Multiobjective problems of [29] (here named ZDT problems) are constructed with three functions \(f_{1}\), \(g\), \(h\) in the following way:

where

(see also [9]). We were inspired by four combinations suggested in Definition 4 of [29]. For each combination, we minimize the following:

over the set of efficient solutions defined by the minimization of functions \(f_{1}\) and \(f_{2}\) on certain box constraints. We summarize the properties of these test problems in Table 5. For these problems, the set of efficient solutions is split into disconnected components, one for each (local) minimum of \(g\), each formed with all possible values of \(f_{1}\) (see Section 5.1 of [9]). Thus, the set of efficient solutions of problems 8 to 10 has a single component, for which \(x_{2} = \cdots = x_{n} = 0\) (i.e. \(g(x_{2},\ldots,x_{n}) = 1\)), while that of problem 11 has several components. For problems 8, 9, and 11, the possible values of \(x_{1} = f_{1}(x_{1})\) fill the whole interval [0, 1]. For problem 10, the possible values of \(x_{1}\) are the intervals on which \(h\) decreases (see Fig. 5). Hence, \((1, 0,\ldots, 0)^{T}\) is the optimal solution of problems 8, 9, and 11, whereas \((0.954, 0,\ldots, 0)^{T}\) is the (approximate) optimal solution of problem 10. The set of efficient solutions of problem 11 has \(21^{n-1}\) components, one for each combination of the local minimizers of \({x_{i}^{2}}-2\cos (4\pi x_{i})\), \(i = 2,\ldots,n\) (see Fig. 6).

Fig. 5 Geometry of problem 10. In the set of efficient solutions, \(g(x_{2}^{*},\ldots ,x_{n}^{*})= 1\) and \(x_{1}\) lies on the intervals on which \(\tilde f_{2}(x_{1})=f_{2}(x_{1},x_{2}^{*},\ldots ,x_{n}^{*}) = 1-x_{1}(1-\sin (10\pi x_{1}))\) decreases (thick lines). These are the non-dominated values corresponding to the two objectives \(f_{1}(x_{1}) = x_{1}\) and \(\tilde f_{2}(x_{1})\)
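The non-dominated values described in this caption can be approximated by a direct domination check on a grid. The sketch below is illustrative only and assumes that \(x_{1}\) ranges over [0, 1]; the function names and the grid size are hypothetical. Because \(f_{1}(x_{1}) = x_{1}\) increases along the grid, a grid point is non-dominated exactly when \(\tilde f_{2}\) takes there a value strictly below all values attained at smaller \(x_{1}\).

```python
import math


def f2_tilde(x1):
    # Second objective restricted to g = 1, as given in the caption.
    return 1.0 - x1 * (1.0 - math.sin(10.0 * math.pi * x1))


def nondominated_intervals(samples=100001):
    """Approximate the x1-intervals of non-dominated points on [0, 1].

    Assumption: the box constraint on x1 is [0, 1]. A grid point is kept
    when f2_tilde is strictly smaller than at every previous grid point,
    which is the domination test for the pair (x1, f2_tilde(x1)).
    """
    intervals = []
    best = math.inf
    start = prev = None
    for k in range(samples):
        x1 = k / (samples - 1)
        val = f2_tilde(x1)
        if val < best:
            best = val
            if start is None:
                start = x1  # a new non-dominated stretch begins
            prev = x1
        elif start is not None:
            intervals.append((start, prev))  # the stretch has ended
            start = None
    if start is not None:
        intervals.append((start, prev))
    return intervals


intervals = nondominated_intervals()
for a, b in intervals:
    print(f"[{a:.4f}, {b:.4f}]")
```

Each printed pair is a grid approximation of one stretch of non-dominated values; every such stretch lies inside an interval on which \(\tilde f_{2}\) decreases.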

Problems 12 and 13 (WFG family instances).

Based on the Walking Fish Group (WFG) Toolkit [19], we consider two instances, for which particular choices of the parameters were made to come up with smooth functions:

- Problem 12, which consists in minimizing \(F(x) = {\sum }_{i = 1}^{n} {x_{i}^{2}}\) over the set of efficient solutions defined by the minimization of

$$\begin{array}{@{}rcl@{}} \displaystyle f_{1}(x) &=& \frac{x_{n}}{2n} + 2\overset{n-1}{\underset{i = 1}{\prod}}\left[1 - \cos\left( \frac{\pi x_{i}}{4i}\right)\right],\\ \displaystyle f_{j}(x) &=& \frac{x_{n}}{2n} + 2j\left[1-\sin\left( \frac{\pi x_{n-j + 1}}{4(n-j + 1)}\right)\right]\overset{n-j}{\underset{i = 1}{\prod}}\left[1 - \cos\left( \frac{\pi x_{i}}{4i}\right)\right],\\ j&=&2,\ldots,n-1,\\ \displaystyle f_{n}(x) &=& \frac{x_{n}}{2n} + 2n\left[1-\frac{x_{1}}{2} - \frac{\cos(5\pi x_{1} + \pi/2)}{10\pi} \right] \end{array} $$

on \([0, 1]^{n}\). The optimal solution is the origin.

- Problem 13, which consists in minimizing \(F(x) = {\sum }_{i = 1}^{n} {x_{i}^{2}}\) over the set of efficient solutions defined by the minimization of

$$\begin{array}{@{}rcl@{}} \displaystyle f_{1}(x) &=& \frac{x_{n}}{2n} + 2\overset{n-1}{\underset{i = 1}{\prod}}\left[1 - \cos\left( \frac{\pi x_{i}}{4i}\right)\right],\\ \displaystyle f_{j}(x) &=& \frac{x_{n}}{2n} + 2j\left[1-\sin\left( \frac{\pi x_{n-j + 1}}{4(n-j + 1)}\right)\right]\overset{n-j}{\underset{i = 1}{\prod}}\left[1 - \cos\left( \frac{\pi x_{i}}{4i}\right)\right],\\ j&=&2,\ldots,n-1,\\ \displaystyle f_{n}(x) &=& \frac{x_{n}}{2n} + 2n\left[ 1-\frac{x_{1}}{2}\cos^{2}\left( \frac{5\pi x_{1}}{2}\right) \right] \end{array} $$

on \([0, 1]^{n}\). Again, the optimal solution is the origin.
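For experimentation, the lower level objectives of problems 12 and 13 can be transcribed directly from the formulas above. The sketch below is an illustrative transcription, not the authors' implementation: the function names and the `variant` argument are hypothetical, the code assumes \(n\geq 2\), and the 1-based indices of the formulas are shifted to Python's 0-based lists.

```python
import math


def upper_level(x):
    # F(x) = sum of squares, the upper level objective of both problems.
    return sum(xi * xi for xi in x)


def lower_level_objectives(x, variant=12):
    """Return [f_1(x), ..., f_n(x)] for problem 12 or 13 (n >= 2).

    `variant` is a hypothetical switch: the two problems differ only
    in the last objective f_n.
    """
    n = len(x)
    shift = x[-1] / (2.0 * n)  # common term x_n / (2n)

    def cos_prod(m):
        # Product over i = 1..m of [1 - cos(pi * x_i / (4 i))].
        p = 1.0
        for i in range(1, m + 1):
            p *= 1.0 - math.cos(math.pi * x[i - 1] / (4.0 * i))
        return p

    f = [shift + 2.0 * cos_prod(n - 1)]
    for j in range(2, n):
        idx = n - j + 1  # 1-based index of the variable inside the sine
        sin_term = 1.0 - math.sin(math.pi * x[idx - 1] / (4.0 * idx))
        f.append(shift + 2.0 * j * sin_term * cos_prod(n - j))

    x1 = x[0]
    if variant == 12:
        bracket = (1.0 - x1 / 2.0
                   - math.cos(5.0 * math.pi * x1 + math.pi / 2.0) / (10.0 * math.pi))
    else:
        bracket = 1.0 - (x1 / 2.0) * math.cos(5.0 * math.pi * x1 / 2.0) ** 2
    f.append(shift + 2.0 * n * bracket)
    return f


origin = [0.0, 0.0, 0.0]
f12 = lower_level_objectives(origin, variant=12)
f13 = lower_level_objectives(origin, variant=13)
print(upper_level(origin), f12, f13)
```

Evaluating at the origin only illustrates the call; it does not by itself establish that the origin is an efficient point of the lower level problem.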

Cite this article

Andreani, R., Ramirez, V.A., Santos, S.A. et al. Bilevel optimization with a multiobjective problem in the lower level. Numer Algor 81, 915–946 (2019). https://doi.org/10.1007/s11075-018-0576-1

DOI: https://doi.org/10.1007/s11075-018-0576-1

Keywords

- Bilevel optimization

- Inexact restoration

- Multiobjective optimization

- KKT reformulation

- Numerical experiments