Abstract

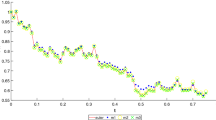

In this paper, a class of new Magnus-type methods is proposed for non-commutative Itô stochastic differential equations (SDEs) with semi-linear drift term and semi-linear diffusion terms, based on Magnus expansion for non-commutative linear SDEs. We construct a Magnus-type Euler method, a Magnus-type Milstein method and a Magnus-type Derivative-free method, and give the mean-square convergence analysis of these methods. Numerical tests are carried out to present the efficiency of the proposed methods compared with the corresponding underlying methods and the specific performance of the simulation Itô integral algorithms is investigated.

Similar content being viewed by others

References

Debrabant, K., Kværnø, A., Mattsson, N.C.: Runge–Kutta Lawson schemes for stochastic differential equations. arXiv:1909.11629 (2019)

Erdoǧan, U., Lord, G.J.: A new class of exponential integrators for SDEs with multiplicative noise. IMA J. Numer. Anal. 39, 820–846 (2019)

Yang, G., Burrage, K., Ding, X.: A new class of structure-preserving stochastic exponential Runge–Kutta integrators for stochastic differential equations. (Submitted)

Komori, Y., Burrage, K.: A stochastic exponential Euler scheme for simulation of stiff biochemical reaction systems. BIT Numer Math. 54, 1067–1085 (2014)

Komori, Y., Cohen, D., Burrage, K.: Weak second order explicit exponential Runge–Kutta methods for stochastic differential equations. SIAm J. Sci. Comput. 39, A2857–A2878 (2017)

Magnus, W.: On the exponential solution of differential equations for a linear operator. Commun. Pure Appl. Math. 7, 649–673 (1954)

Khanamiryan, M.: Modified Magnus expansion in application to highly oscillatory differential equations. BIT Numer. Math. 52, 383–405 (2012)

Iserles, A., Nørsett, SP: On the solution of linear differential equations in Lie groups. Phil. Trans. R. Soc. 357, 983–1019 (1999)

Iserles, A., MacNamara, S.: Applications of Magnus expansions and pseudospectra to Markov processes. Eur. J. Appl. Math. 30, 400–425 (2019)

Burrage, K., Burrage, P.M.: High strong order methods for non-commutative stochastic ordinary differential equation systems and the Magnus formula. Phys. D Nonlinear Phenom. 133, 34–48 (1999)

Wang, Z., Ma, Q., Yao, Z., Ding, X.: The Magnus expansion for stochastic differential equations. J. Nonlinear Sci. 30, 419–447 (2020)

Wang, X., Guan, X., Yin, P.: A new explicit Magnus expansion for nonlinear stochastic differential equations. Mathematics 8, 183 (2020)

Kamm, K., Pagliaraniy, S., Pascucciz, A.: On the stochastic Magnus expansion and its application to SPDEs. arXiv:2001.01098 (2020)

Tambue, A., Mukam, J.D.: Magnus-type integrator for non-autonomous SPDEs driven by multiplicative noise. Discrete Contin. Dyn. Syst. Ser. A. 40, 4597–4624 (2020)

Blanes, S., Casas, F., Oteo, J.A., Ros, J.: The Magnus expansion and some of its applications. Phys. Rep. Rev. Sect. Phys. Lett. 470, 151–238 (2009)

Mao, X.: Stochastic differential equations and applications. Horwood, Chichester (2007)

Burrage, P.M.: Runge–Kutta methods for stochastic differential equations. Ph.D. Thesis, Dept. Maths., Univ. Queensland (1999)

Gard, T.C.: Introduction to Stochastic Differential Equations. Marcel Dekker Inc, New York-Basel (1988)

Milstein, G.N.: Numerical integration of stochastic differential equations. Kluwer Academic Publishers, Dordrecht (1995)

Kuznetsov, D.F.: Development and application of the Fourier method for the numerical solution of Itô stochastic differential equations. Comput. Math. Math. Phys. 58, 1058–1070 (2018)

Kuznetsov, D.F.: A comparative analysis of efficiency of using the Legendre polynomials and trigonometric functions for the numerical solution of Itô stochastic differential equations. Comput. Math. Math. Phys. 59, 1236–1250 (2019)

Wiktorsson, M.: Joint characteristic function and simultaneous simulation of iterated Itô integrals for multiple independent Brownian motions. Ann. Appl. Probab. 11, 470–487 (2001)

Kloeden, P.E., Platen, E.: Numerical solution of stochastic differential equations. Applications of Mathematics: Stochastic Modelling and Applied Probability. Springer, Berlin (1995)

Debrabant, K., Kværnø, A., Mattsson, N.C.: Lawson schemes for highly oscillatory stochastic differential equations and conservation of invariants. arXiv:1909.12287 (2019)

de Bouard, A.: Gazeau., M.: A diffusion approximation theorem for a nonlinear PDE with application to random birefringent optical fibers. Ann. Appl. Probab. 22, 2460–2504 (2012)

Berg, A., Cohen, D., Dujardin, G.: Exponential integrators for the stochastic Manakov equation. arXiv:2005.04978v1 (2020)

Abdulle, A., Cirilli, S.: S-ROCK: Chebyshev methods for stiff stochastic differential equations. SIAM J. Sci. Comput. 30, 997–1014 (2008)

Komori, Y., Burrage, K.: Weak second order S-ROCK methods for Stratonovich stochastic differential equations. J. Comput. Appl. Math. 236, 2895–2908 (2012)

Acknowledgements

The authors appreciate the valuable comments of the referee.

Funding

Guoguo Yang was supported by China Scholarship Council (CSC) and the National Natural Science Foundation of China (No. 62073103 and 11701124) during his study at Queensland University of Technology. Yoshio Komori was partially supported for this work by JSPS Grant-in-Aid for Scientific Research 17K05369. Xiaohua Ding was partially supported by the National Key R&D Program of China (No. 2017YFC1405600).

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Appendix

1.1 A.1 The expansion of iterated Itô stochastic integrals based on the generalized multiple Fourier series

Let \(\left \{\phi _{j}(x)\right \}_{j=0}^{\infty }\) be an orthonormal basis of the space \(L_{2}\left (\left [t_{n}, t_{n+1}\right ]\right ).\) If the trigonometric series

where r = 1, 2,…, are selected as an orthonormal basis, for the iterated Itô integral (7), using the expansion theorem in [20], the following representations are obtained,

where \(\zeta _{0}^{(i)} \underset {=}{\text { def }} W_{i}(h)/\sqrt {h}\) and \(\zeta _{j}^{(i)} \underset {=}{\text { def }} {{\int \limits }_{t}^{T}} \phi _{j}(s) d W_{i}(s)\), i = 1,…,m. The expansion (A.1) coincides with Kloeden, Platen and Wright’s (1992) algorithm [23] based on Kahunen–Loève expansion and it converges to the iterated Itô integral (7) in the mean-square sense.

If orthonormal Legendre polynomials are selected as an orthonormal basis,

the expansion is

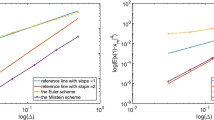

As the expansions (A.1) and (A.2) are infinite series, we need to truncate them for practical simulation. During the implementation of the Milstein and MM methods, the mean-square error of the approximated iterated integrals should not be larger than h3, which is to ensure mean-square convergence of 1.

Truncating the infinite series (A.1) and (A.2) to q terms, we have \(I_{i j}^{q}\). From the truncated mean-square error in [21], we obtain the mean-square error for truncating (A.1)

Here we need 3h2/(2π2q) ≤ h3, so we should choose

Truncating the expansion (A.1) to q, we get the mean-square error

Here we need the mean-square error less than h3, so we should choose

Since qp ≈ 1/(8h), the truncated indices of A.1 and (A.2) both are O(1/h), and it can be calculated that the convergence speed of (A.1) is about qt/qp ≈ 1.22 times that of (A.2). This means that the approximation based on the multiple Legendre Fourier series is slightly more efficient than that based on the multiple trigonometric Fourier series.

1.2 A.2 Wiktorsson’s algorithm

If I(h) and A(h) are matrices with elements \(I_{i j}\left (t_{n}, t_{n}+h\right )\), Aii = 0 and

respectively, the approximation based on the multiple trigonometric Fourier series can be written in matrix form

where \({\varDelta } \mathbf {W}(h) \sim N\left (0, h I_{m}\right ), \mathbf {X}_{k} \sim N\left (0_{m}, I_{m}\right )\) and \(\mathbf {Y}_{k} \sim N\left (0_{m}, I_{m}\right ), k=\)1, 2,…,q are all independent. As the Lévy stochastic area has the relationship Iij − Iji = 2Aij, we only need to simulate Iij for i < j or i > j in the simulation.

Wiktorsson’s algorithm is based on approximation of the tail-sum distribution of (A.3)

which improves the rate of convergence. Let vec \(\left (\mathbf {I}(h)^{T}\right )\) be column vectors of (A.3), and the following is the procedure of Wiktorsson’s algorithm [22]:

-

1.

First simulate \({\varDelta } \mathbf {W}(h) \sim N\left (0_{m}, \sqrt {h} I_{m}\right )\).

-

2.

Simulate the truncated first q terms

$$ \tilde{A}^{(q)}(h)=\frac{h}{2 \pi} {\sum}_{k=1}^{q} \frac{1}{k} K_{m}\left( P_{m}-I_{m^{2}}\right)\left\{\left( \mathbf{Y}_{k}+\sqrt{\frac{2}{h}} {\varDelta} \mathbf{W}(h)\right) \otimes \mathbf{X}_{k}\right\} $$where \(\mathbf {X}_{k} \sim N\left (0_{m}, I_{m}\right )\) and \(\mathbf {Y}_{k} \sim N\left (0_{m}, I_{m}\right )\)

-

3.

Then simulate \(\mathbf {G}_{q} \sim N\left (0_{M}, I_{M}\right )\) and add the approximation of tail-sum distribution:

$$ \widetilde{A}^{(q)^{\prime}}(h)=\widetilde{A}^{(q)}(h)+\frac{h}{2 \pi} a_{q}^{1 / 2} \sqrt{{\Sigma}_{\infty}} \mathbf{G}_{q} $$where \(a_{q}={\sum }_{k=q+1}^{\infty } 1 / k^{2}\)

-

4.

Finally obtain the approximation vec \(\left (\mathbf {I}(h)^{T}\right )^{(q)^{\prime }}\) of vec \(\left (\mathbf {I}(h)^{T}\right )\)

$$ \operatorname{vec}\left( \mathbf{I}(h)^{T}\right)^{(q)^{\prime}}=\frac{{\varDelta} \mathbf{W}(h) \otimes {\varDelta} \mathbf{W}(h)-\operatorname{vec}\left( h I_{m^{2}}\right)}{2}+\left( I_{m^{2}}-P_{m}\right) {K_{m}^{T}} \widetilde{A}^{(q)^{\prime}}(h) $$Here Pm is the m2 × m2 permutation matrix and for the specific expression of matrices Pm please refer to [22].

The mean-square error of Wiktorsson’s algorithm [22] is

Here we also need the mean-square error less than h3, so we should choose truncated indices as

and the truncated indices qw is \(O(1/\sqrt {h})\) that is much better than O(1/h).

Rights and permissions

About this article

Cite this article

Yang, G., Burrage, K., Komori, Y. et al. A class of new Magnus-type methods for semi-linear non-commutative Itô stochastic differential equations. Numer Algor 88, 1641–1665 (2021). https://doi.org/10.1007/s11075-021-01089-7

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11075-021-01089-7