Abstract

Many conjugate gradient methods exist for solving unconstrained optimization problems. Compared with the standard conjugate gradient method, the accelerated conjugate gradient method performs better numerically on unconstrained optimization problems. In this paper, we propose a modified conjugate gradient method with a Wolfe line search that generates a descent direction. Under mild conditions, we prove that the proposed method is globally convergent. Numerical results indicate that the proposed method performs well on our test functions.
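The abstract relies on two standard notions: a descent direction and the Wolfe line search conditions. The sketch below only illustrates those textbook definitions and is not the method proposed in the paper. The function names, the constants `c1` and `c2`, and the quadratic test function are assumptions chosen for illustration. A direction d is a descent direction at x when g(x)^T d < 0, and a step size alpha satisfies the weak Wolfe conditions when both the sufficient-decrease and the curvature inequalities hold.

```python
def dot(u, v):
    """Euclidean inner product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))


def is_descent_direction(g, d):
    """Return True when g^T d < 0, i.e. d points downhill at the current point."""
    return dot(g, d) < 0.0


def satisfies_weak_wolfe(f, grad, x, d, alpha, c1=1e-4, c2=0.9):
    """Check the weak Wolfe conditions for step size alpha along d.

    c1 and c2 are illustrative defaults (assumed, with 0 < c1 < c2 < 1).
    """
    slope0 = dot(grad(x), d)  # directional derivative at x
    x_new = [xi + alpha * di for xi, di in zip(x, d)]
    # Sufficient decrease: f(x + alpha d) <= f(x) + c1 * alpha * g^T d
    sufficient_decrease = f(x_new) <= f(x) + c1 * alpha * slope0
    # Curvature: g(x + alpha d)^T d >= c2 * g^T d
    curvature = dot(grad(x_new), d) >= c2 * slope0
    return sufficient_decrease and curvature


# Hypothetical test problem: f(x) = 0.5 * ||x||^2, whose gradient is x itself.
def f(x):
    return 0.5 * dot(x, x)


def grad(x):
    return list(x)


x0 = [2.0, -1.0]
d0 = [-gi for gi in grad(x0)]  # steepest-descent direction

descent = is_descent_direction(grad(x0), d0)
full_step_ok = satisfies_weak_wolfe(f, grad, x0, d0, alpha=1.0)
tiny_step_ok = satisfies_weak_wolfe(f, grad, x0, d0, alpha=1e-6)
long_step_ok = satisfies_weak_wolfe(f, grad, x0, d0, alpha=2.5)
```

On this quadratic, the unit step reaches the minimizer and passes both conditions, a very small step fails the curvature condition, and an overly long step fails sufficient decrease.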

References

Wolfe, P.: Convergence conditions for ascent methods. II: some corrections. SIAM Rev. 13(2), 185–188 (1971)

Hager, W.W., Zhang, H.: A survey of nonlinear conjugate gradient methods. Pac. J. Optim. 2(1), 35–58 (2006)

Yuan, G., Wei, Z., Lu, X.: Global convergence of the BFGS method and the PRP method for general functions under a modified weak Wolfe-Powell line search. Appl. Math. Model. 47, 811–825 (2017)

Yuan, G., Wei, Z., Yang, Y.: The global convergence of the Polak-Ribière-Polyak conjugate gradient algorithm under inexact line search for nonconvex functions. J. Comput. Appl. Math. 362, 262–275 (2019)

Yuan, G., Sheng, Z., Wang, B., et al.: The global convergence of a modified BFGS method for nonconvex functions. J. Comput. Appl. Math. 327, 274–294 (2018)

Perry, A.: A modified conjugate gradient algorithm. Oper. Res. 26(6), 1073–1078 (1976)

Sun, W., Yuan, Y: Optimization theory and methods (2006)

Andrei, N.: A double parameter scaled BFGS method for unconstrained optimization. J. Comput. Appl. Math. 26–44 (2018)

Liu, D.C., Nocedal, J.: On the limited memory BFGS method for large scale optimization. Math. Program. 45(3), 503–528 (1989)

Nocedal, J.: Updating quasi-newton matrices with limited storage. Math. Comput. 35(151), 773–782 (1980)

Shanno, D.F.: Conjugate gradient methods with inexact searches. Math. Oper. Res. 3(3), 244–256 (1978)

Andrei, N.: Scaled conjugate gradient algorithms for unconstrained optimization. Comput. Optim. Appl. 38(3), 401–416 (2007)

Andrei, N.: Accelerated adaptive Perry conjugate gradient algorithms based on the self-scaling memoryless BFGS update. J. Comput. Appl. Math. 149–164 (2017)

Yao, S., Ning, L.: An adaptive three-term conjugate gradient method based on self-scaling memoryless BFGS matrix. J. Comput. Appl. Math. 332, 72–85 (2018)

Andrei, N.: Acceleration of conjugate gradient algorithms for unconstrained optimization. Appl. Math. Comput. 213(2), 361–369 (2009)

Hager, W.W., Zhang, H.: A new conjugate gradient method with guaranteed descent and an efficient line search. SIAM J. Optim. 16(1), 170–192 (2005)

More, J.J., Garbow, B.S., Hillstrom, K.E., et al.: Testing unconstrained optimization software. ACM Trans. Math. Softw. 7(1), 17–41 (1981)

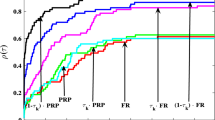

Dolan, E.D., Moré, J.J.: Benchmarking optimization software with performance profiles. Math. Program. 91(2), 201–213 (2002)

Funding

This work was supported by the National Natural Science Foundation of China (Nos. 11961010 and 61967004) and the Guangxi Key Laboratory of Automatic Testing Technology and Instruments, China (No. YQ19111).

Cite this article

Li, X., Zhao, W. A modified conjugate gradient method based on the self-scaling memoryless BFGS update. Numer Algor 90, 1017–1042 (2022). https://doi.org/10.1007/s11075-021-01220-8

DOI: https://doi.org/10.1007/s11075-021-01220-8