Abstract

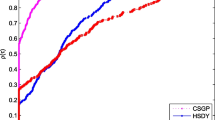

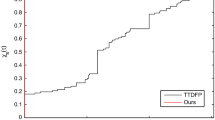

In this paper, based on the three-term conjugate gradient projection method and the inertial technique, we propose a modified inertial three-term conjugate gradient projection method for solving nonlinear monotone equations with convex constraints. Embedding the inertial extrapolation step in the design for the search direction, the resulting direction satisfies the sufficient descent property which is independent of any line search rules. The global convergence and Q-linear convergence rate of the proposed algorithm are established under standard conditions. Numerical comparisons with three existing methods demonstrate that the proposed algorithm possesses superior numerical performance and good robustness for solving large-scale equations. Finally, the proposed method is applied to solve the sparse signal problems and image restoration in compressed sensing.

Similar content being viewed by others

References

Meintjes, K., Morgan, A.P.: A methodology for solving chemical equilibrium systems. Appl. Math. Comput. 22(4), 333–361 (1987)

Xiao, Y.H., Wang, Q.Y., Hu, Q.J.: Non-smooth equations based method for ℓ1-norm problems with applications to compressed sensing. Nonlinear Anal. Theory Methods Appl. 74(11), 3570–3577 (2011)

Chorowski, J., Zurada, J.M.: Learning understandable neural networks with nonnegative weight constraints. IEEE Trans. Neural Netw. Learn. Syst. 26(1), 62–69 (2014)

Sun, D.F., Womersley, R.S., Qi, H.D.: A feasible semismooth asymptotically Newton method for mixed complementarity problems. Math. Program. 94(1), 167–187 (2002)

Qi, L.Q., Tong, X.J., Li, D.H.: Active-set projected trust-region algorithm for box-constrained nonsmooth equations. J. Optim. Theory Appl. 120 (3), 601–625 (2004)

Kanzow, C., Yamashita, N., Fukushima, M.: Levenberg-Marquardt methods for constrained nonlinear equations with strong local convergence properties. J. Comput. Appl. Math. 172, 375–397 (2004)

Zhou, G., Toh, K.C.: Superline convergence of a Newton-type algorithm for monotone equations. J. Optim. Theory Appl. 125(1), 205–221 (2005)

Hager, W.W., Zhang, H.C.: A new conjugate gradient method with guaranteed descent and an efficient line search. SIAM J. Optim. 16(1), 170–192 (2005)

Solodov, M.V., Svaiter, B.F.: A globally convergent inexact Newton method for systems of monotone equations. In: Fukushima M., Qi L. (eds.) Reformulation: nonsmooth, piecewise smooth, semismooth and smoothing methods, pp. 355–369, Kluwer Academic (1998)

Liu, J.K., Feng, Y.M.: A derivative-free iterative method for nonlinear monotone equations with convex constraints. Numer. Algorithms 82(1), 245–262 (2019)

Sabi’u, J., Shah, A., Waziri, M.Y.: Two optimal Hager-Zhang conjugate gradient methods for solving monotone nonlinear equations. Appl. Numer. Math. 153, 217–233 (2020)

Hu, W.J., Wu, J.Z., Yuan, G.L.: Some modified Hestenes-Stiefel conjugate gradient algorithms with application in image restoration. Appl. Numer. Math. 158, 360–376 (2020)

Koorapetse, M., Kaelo, P., Lekoko, S., et al.: A derivative-free RMIL conjugate gradient projection method for convex constrained nonlinear monotone equations with applications in compressive sensing. Appl. Numer. Math. 165, 431–441 (2021)

Gao, P.T., He, C.J., Liu, Y.: An adaptive family of projection methods for constrained monotone nonlinear equations with applications. Appl. Math. Comput. 359, 1–16 (2019)

Yin, J.H., Jian, J.B., Jiang, X.Z., et al.: A hybrid three-term conjugate gradient projection method for constrained nonlinear monotone equations with applications. Numer. Algorithms 88(1), 389–418 (2021)

Gao, P.T., He, C.J.: An efficient three-term conjugate gradient method for nonlinear monotone equations with convex constraints. Calcolo. 55(4), 1–17 (2018)

Zheng, L., Yang, L., Liang, Y.: A conjugate gradient projection method for solving equations with convex constraints. J. Comput. Appl. Math. 375, 112781 (2020)

Yin, J.H., Jian, J.B., Jiang, X.Z.: A generalized hybrid CGPM-based algorithm for solving large-scale convex constrained equations with applications to image restoration. J. Comput. Appl. Math. 391, 113423 (2021)

Polyak, B.T.: Some methods of speeding up the convergence of iteration methods. USSR Comput. Math. Math. Phys. 4(5), 1–17 (1964)

Chen, C.H., Chan, R.H., Ma, S.Q., et al.: Inertial proximal ADMM for linearly constrained separable convex optimization. SIAM J. Imaging Sci. 8(4), 2239–2267 (2015)

Dou, M.Y., Li, H.Y., Liu, X.W.: An inertial proximal Peaceman-Rachford splitting method (in chinese). Scientia Sinica Mathematica 47(2), 333–348 (2017)

Gao, X., Cai, X.J., Han, D.R.: A Gauss-Seidel type inertial proximal alternating linearized minimization for a class of nonconvex optimization problems. J. Glob. Optim. 76(4), 863–887 (2020)

Ibrahim, A.H., Kumam, P., Sun, M., et al.: Projection method with inertial step for nonlinear equations: application to signal recovery. J. Ind. Manag. https://doi.org/10.3934/jimo.2021173 (2021)

Chen, C.H., Ma, S.Q., Yang, J.F.: A general inertial proximal point algorithm for mixed variational inequality problem. SIAM J. Optim. 25 (4), 2120–2142 (2015)

Thong, D.V., Van Hieu, D.: Inertial subgradient extragradient algorithms with line-search process for solving variational inequality problems and fixed point problems. Numer. Algorithms 80(4), 1283–1307 (2019)

Bot, R.I., Csetnek, E.R., Hendrich, C.: Inertial Douglas-Rachford splitting for monotone inclusion problems. Appl. Math. Comput. 256, 472–487 (2015)

Jolaoso, L.O., Alakoya, T.O., Taiwo, A., et al.: Inertial extragradient method via viscosity approximation approach for solving equilibrium problem in Hilbert space. Optim. 70(2), 387–412 (2021)

Li, M.: A three term Polak-Ribiére-Polyak conjugate gradient method close to the memoryless BFGS quasi-Newton method. J. Ind.Manag. Optim. 16 (1), 245–260 (2020)

Nocedal, J.: Updating quasi-Newton matrices with limited storage. Math. Comput. 35(151), 773–782 (1980)

Amini, K., Kamandi, A.: A new line search strategy for finding separating hyperplane in projection-based methods. Numer. Algorithms 70(3), 559–570 (2015)

Yu, Z.S., Lin, J., Sun, J., et al.: Spectral gradient projection method for monotone nonlinear equations with convex constraints. Appl. Numer. Math. 59(10), 2416–2423 (2009)

Li, Q., Li, D.H.: A class of derivative-free methods for large-scale nonlinear monotone equations. IMA J. Numer. Anal. 31(4), 1625–1635 (2011)

Phelps, R.R.: Convex sets and nearest points. Proc. Am. Math. Soc. 8(4), 790–797 (1957)

Zarantonello, E.H.: Projections on convex sets in hilbert space and spectral theory. In: Zarantonello, E.H. (ed.) Contributions to nonlinear functional analysis, pp. 237–424. Academic Press. New York (1971)

Polyak, B.T.: Introduction to Optimization, Optimization Software, p. 49. Inc. Publications Division, New York (1987)

Wang, C.W., Wang, Y.J., Xu, C.L.: A projection method for a system of nonlinear monotone equations with convex constraints. Math. Methods Oper. Res. 66(1), 33–46 (2007)

Yu, G.H., Niu, S.Z., Ma, J.H.: Multivariate spectral gradient projection method for nonlinear monotone equations with convex constraints. J. Ind. Manag. Optim. 9(1), 117–129 (2013)

La Cruz, W., Martínez, J. M., Raydan, M.: Spectral residual method without gradient information for solving large-scale nonlinear systems of equations. Math. Comput. 75(255), 1429–1448 (2006)

Moré, J. J., Garbow, B.S., Hillstrom, K.E.: Testing unconstrained optimization software. ACM Trans. Math. Softw. 7(1), 17–41 (1981)

Zhou, W.J., Li, D.H.: Limited memory BFGS method for nonlinear monotone equations. J. Comput. Math. 25, 89–96 (2007)

Dolan, E.D., Moré, J. J.: Benchmarking optimization software with performance profiles. Math. program. 91(2), 201–213 (2002)

Figueiredo, M.A., Nowak, R.D., Wright, S.J.: Gradient projection for sparse reconstruction: application to compressed sensing and other inverse problems. IEEE J. Sel. Top. Signal Process. 1(4), 586–597 (2007)

Pang, J.S.: Inexact Newton methods for the nonlinear complementarity problem. Math. Program. 36(1), 54–71 (1986)

Funding

This work is supported by the National Natural Science Foundation of China (12171106), the Natural Science Foundation of Guangxi Province (2020GXNSFDA238017) and the Research Foundation of Guangxi Minzu University (2021KJQD04).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Additional information

Data availability

Data sharing not applicable to this article as no datasets were generated or analyzed during the current study.

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Ma, G., Jin, J., Jian, J. et al. A modified inertial three-term conjugate gradient projection method for constrained nonlinear equations with applications in compressed sensing. Numer Algor 92, 1621–1653 (2023). https://doi.org/10.1007/s11075-022-01356-1

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11075-022-01356-1

Keywords

- Inertial method

- Three-term conjugate gradient projection algorithm

- Linear convergence rate

- Compressed sensing