Abstract

We derive upper bounds on the tail distribution of the transient waiting time in the GI/GI/1 queue, given a truncated sequence of the moments of the service time and that of the interarrival time. Our upper bound is given as the objective value of the optimal solution to a semidefinite program (SDP) and can be calculated numerically by solving the SDP. We also derive the upper bounds in closed form for the case when only the first two moments of the service time and those of the interarrival time are given. The upper bounds in closed form are constructed by formulating the dual problem associated with the SDP. Specifically, we obtain the objective value of a feasible solution of the dual problem in closed form, which turns out to be the upper bound that we derive. In addition, we study bounds on the maximum waiting time in the first busy period.


References

Altman, E.: Constrained Markov Decision Processes. Chapman & Hall/CRC, London (1999)

Ansell, P.S., Glazebrook, K.D., Mitrani, I., Niño-Mora, J.: A semidefinite programming approach to the optimal control of a single server queueing system with imposed second moment constraints. J. Oper. Res. Soc. 50, 765–773 (1999)

Ben-Tal, A., Nemirovski, A.: Lectures on Modern Convex Optimization: Analysis, Algorithms, and Engineering Applications. SIAM, Philadelphia (2001)

Bertsimas, D., Natarajan, K.: A semidefinite optimization approach to the steady-state analysis of queueing systems. Queueing Syst. 56, 27–39 (2007)

Bertsimas, D., Niño-Mora, J.: Optimization of multiclass queueing networks with changeover times via the achievable region approach: Part I, the single-station case. Math. Oper. Res. 24, 306–330 (1999)

Bertsimas, D., Niño-Mora, J.: Optimization of multiclass queueing networks with changeover times via the achievable region approach: Part II, the multi-station case. Math. Oper. Res. 24, 331–361 (1999)

Bertsimas, D., Popescu, I.: Optimal inequalities in probability theory: a convex optimization approach. SIAM J. Optim. 15, 780–804 (2005)

Daley, D.J.: Inequalities for moments of tails of random variables, with a queueing application. Z. Wahrscheinlichkeitstheor. Verw. Geb. 41, 139–143 (1977)

Gallager, R.G.: Discrete Stochastic Processes. Kluwer Academic, Dordrecht (1995)

Helmes, K.: Numerical methods for optimal stopping using linear and non-linear programming. Lect. Notes Control Inf. Sci. 280, 185–203 (2002)

Helmes, K., Rohl, S., Stockbridge, R.H.: Computing moments of the exit time distribution for Markov processes by linear programming. Oper. Res. 49, 516–530 (2001)

Kingman, J.F.C.: Some inequalities for the GI/GI/1 queue. Biometrika 49, 315–324 (1962)

Kleinrock, L.: Queueing Systems, vol. 2: Computer Applications. Wiley-Interscience, New York (1976)

Kobayashi, H., Mark, B.L.: System Modeling and Analysis: Foundations of System Performance Evaluation. Prentice Hall, New York (2008)

Lanckriet, G.R.G., Cristianini, N., Bartlett, P., Ghaoui, L.E., Jordan, M.I.: Learning the kernel matrix with semidefinite programming. J. Mach. Learn. Res. 5, 27–72 (2004)

Lasserre, J.B., Prieto-Rumeau, T.: SDP vs. LP relaxations for the moment approach in some performance evaluation problems. Stoch. Models 20, 439–456 (2004)

Lasserre, J.B., Prieto-Rumeau, T., Zervos, M.: Pricing a class of exotic options via moments and SDP relaxations. Math. Finance 16, 469–494 (2006)

Liu, Z., Nain, P., Towsley, D.: Exponential bounds with an application to call admission. J. ACM 44, 366–394 (1997)

Nakata, M.: A numerical evaluation of highly accurate multiple-precision arithmetic version of semidefinite programming solver: SDPA-GMP, -QD and -DD. In: Proceedings of 2010 IEEE Multi-Conference on Systems and Control, pp. 29–34 (2010)

Osogami, T., Raymond, R.: Semidefinite optimization for analysis of queues in closed forms. Technical Report RT0896, IBM Research–Tokyo, March (2010). http://www.research.ibm.com/trl/people/osogami/paper/RT0896.pdf

Osogami, T., Raymond, R.: Simple bounds for a transient queue. In: Proceedings of the 41st Annual IEEE/IFIP International Conference on Dependable Systems and Networks (DSN 2011), pp. 562–573 (2011)

Rengarajan, B., Caramanis, C., de Veciana, G.: Analyzing queuing systems with coupled processors through semidefinite programming. Unpublished (2008)

Yamashita, M., Fujisawa, K., Fukuda, M., Kobayashi, K., Nakata, K., Nakata, M.: Latest developments in the SDPA family for solving large-scale SDPs. In: Anjos, M.F., Lasserre, J.B. (eds.) Handbook on Semidefinite, Cone and Polynomial Optimization: Theory, Algorithms, Software and Applications, pp. 687–714. Springer, New York (2011). Chap. 24

Acknowledgements

This work was supported by “Promotion program for Reducing global Environmental loaD through ICT innovation (PREDICT)” of the Ministry of Internal Affairs and Communications, Japan.


Additional information

An erratum to this article is available at http://dx.doi.org/10.1007/s11134-015-9452-z.

Appendices

Appendix A: Proof of Lemma 2 and remarks

A.1 Proof of Lemma 2

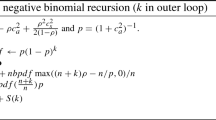

The weak duality suggests that a lower bound on \(m_{0}^{(r)}\) is given by the objective value of a feasible solution to the associated dual problem, which we formulate as follows:

Here, \(Z^{(i)}\) for \(i=1,2,\ldots,8\) are the dual variables that respectively correspond to the eight PSD conditions for \(M^{(c)}\), \(M^{(r)}\), \(M^{(u)}\), \(L^{(c,x)}\), \(L^{(c,y)}\), \(L^{(r)}\), \(L^{(u,x)}\), \(L^{(u,y)}\) in (31). Note that \(Z^{(1)}\) and \(Z^{(3)}\) are 3×3 matrices; \(Z^{(2)}\) is a 2×2 matrix; \(Z^{(4)},Z^{(5)},\ldots,Z^{(8)}\) are 1×1 matrices (or scalars). Also, \(B\) is the 3×3 matrix whose (1,1) element is −1 and whose other elements are zero. For \(k=1,\ldots,6\), \(A^{(k)}\) is the 3×3 matrix whose \((i,j)\) element, \(A^{(k)}_{i,j}\), is 1 if \(i+j=k\) and 0 otherwise. For \(k=7,8,9\), \(A^{(k)}\) is the 2×2 matrix whose \((i,j)\) element is 1 if \(i+j=k-6\) and 0 otherwise. We define \(A^{(k)}\) for \(k=10,\ldots,15\) as follows:

The following lemma shows the feasible solutions to (38) that induce the lower bounds in Lemma 2, which will complete the proof of Lemma 2. Following the heuristics in Sect. 2.1.3, we have eliminated variables in (38) so that the feasible solution depends on five (basic) variables. All the other variables are either 0 or can be expressed using the basic variables. Notice that \(Z^{(k)}_{i,j}\) denotes the \((i,j)\) element of \(Z^{(k)}\).

Lemma 3

The following assignments induce a feasible solution to (38): \(Z^{(1)}=Z^{(4)}=Z^{(5)}=Z^{(6)}=0\), \(Z^{(2)}_{1,1} = Z^{(3)}_{1,1} + 2 Z^{(3)}_{1,3} + Z^{(3)}_{3,3} - b Z^{(8)} + 1\), \(Z^{(2)}_{1,2} = -n x_{1} Z^{(2)}_{2,2} + Z^{(3)}_{1,2} + Z^{(8)}/2\), \(Z^{(3)}_{3,3} = n (n x_{1}^{2} - \sigma_{X}^{2}) Z^{(2)}_{2,2} - 2 n x_{1} Z^{(3)}_{1,2} - 2 Z^{(3)}_{1,3} - n x_{1} Z^{(8)}\), \(Z^{(3)}_{2,2} = Z^{(2)}_{2,2}\), \(Z^{(3)}_{2,3} = -n x_{1} Z^{(2)}_{2,2}\), \(Z^{(7)} = -n^{2} x_{1}^{2} Z^{(2)}_{2,2}+ Z^{(3)}_{3,3}\), where the values of the basic variables (\(Z^{(2)}_{2,2}\), \(Z^{(3)}_{1,1}\), \(Z^{(3)}_{1,2}\), \(Z^{(3)}_{1,3}\), \(Z^{(8)}\)) are assigned according to the three cases below.

(Case 1) If \(x_{1}<0\), then

where

The value of the dual objective is the right-hand side of (32).

(Case 2) If \(x_{1}<0\) and \(\sigma_{X}^{2} \leq4 n x_{1}^{2} - 2 b x_{1}\), then

The value of the dual objective is the right-hand side of (33).

(Case 3) If \(x_{1}\geq 0\) and \(\delta\equiv nx_{1}-b<0\), or if \(\sigma_{X}^{2}\geq -2 x_{1} \delta^{2}/(b+n x_{1})\) and \(b+nx_{1}>0\), then

The value of the dual objective is the right-hand side of (34).

Proof

It is straightforward by substitution to confirm that the equalities in the dual formulation are satisfied by the assignments in Lemma 3. Hence, it suffices to verify the following four PSD conditions: \(Z^{(2)},Z^{(3)}\succeq 0\) and \(Z^{(7)},Z^{(8)}\geq 0\). To prove these PSD conditions, we will consider the three cases of the assignments as shown in the lemma. Then, the value of the objective function in each of the cases will be confirmed by evaluating

In the following, we verify the PSD conditions for each of the three cases. Observe that, to guarantee \(Z^{(2)}\succeq 0\), it suffices to show \(Z^{(2)}_{1,1} \ge0\), \(Z^{(2)}_{2,2} \ge0\), and \(Z^{(2)}_{1,1} Z^{(2)}_{2,2} - (Z^{(2)}_{1,2})^{2} \ge0\). To guarantee \(Z^{(3)}\succeq 0\), it suffices to show that \(Z^{(3)}_{1,1}>0\), \(Z^{(3)}_{2,2} \ge0\), \(Z^{(3)}_{3,3} \ge0\), and

by the Schur complement (e.g., see Lemma 8 from [15]).
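The two sufficient conditions used above can be checked numerically. The following Python sketch tests a symmetric 2×2 matrix through its diagonal entries and determinant, and a symmetric 3×3 matrix with positive (1,1) entry through the Schur complement of that entry. The function names, the tolerance, and the sample matrices are illustrative assumptions and are not taken from the paper.

```python
import math

TOL = 1e-12  # numerical tolerance; an assumption of this sketch


def is_psd_2x2(m):
    """Symmetric 2x2 test: both diagonal entries and the determinant are nonnegative."""
    a, b, d = m[0][0], m[0][1], m[1][1]
    return a >= -TOL and d >= -TOL and a * d - b * b >= -TOL


def min_eig_2x2(m):
    """Smallest eigenvalue of a symmetric 2x2 matrix (closed form), used as a cross-check."""
    a, b, d = m[0][0], m[0][1], m[1][1]
    return (a + d) / 2.0 - math.hypot((a - d) / 2.0, b)


def schur_complement_3x3(z):
    """Schur complement of the (1,1) entry of a symmetric 3x3 matrix (a 2x2 matrix)."""
    z11 = z[0][0]
    return [[z[i][j] - z[i][0] * z[0][j] / z11 for j in (1, 2)] for i in (1, 2)]


def is_psd_3x3(z):
    """For z[0][0] > 0, z is PSD exactly when the Schur complement of z[0][0] is PSD."""
    if z[0][0] <= 0:
        raise ValueError("this sketch requires a positive (1,1) entry")
    return is_psd_2x2(schur_complement_3x3(z))


# A rank-1 example v v^T with v = (1, 2, -3), and a matrix that is not PSD.
rank_one = [[1.0, 2.0, -3.0], [2.0, 4.0, -6.0], [-3.0, -6.0, 9.0]]
indefinite = [[1.0, 2.0, 0.0], [2.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
```

For the rank-1 example the Schur complement is the zero matrix, so the test accepts it, while the second matrix is rejected because its Schur complement has a negative diagonal entry.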

(Case 1) We first show that the four PSD conditions hold if \(Z^{(3)}_{1,1}>0\). If \(Z^{(3)}_{1,1}>0\), we can show by simple algebra that \(Z^{(3)}\) is a rank-1 PSD matrix such that \(Z^{(3)} = Z^{(3)}_{1,1} \mathbf{v} \mathbf{v}^{T}\) for \(\mathbf{v} = (1\ \frac{2 x_{1}}{\sigma_{X}^{2} d_{\mathrm{obj}}}\ \frac{-2 n x_{1}^{2}}{\sigma_{X}^{2} d_{\mathrm{obj}}} )^{T}\), where

is the value of the objective function. This also implies that \(Z^{(7)} = -n^{2} x_{1}^{2} Z^{(2)}_{2,2} + Z^{(3)}_{3,3} = -n^{2}x_{1}^{2} (Z^{(3)}_{1,2} )^{2}/Z^{(3)}_{1,1} + Z^{(3)}_{3,3} = 0\). If \(Z^{(3)}_{1,1}>0\), we can see that \(Z^{(2)}\) is a rank-1 PSD matrix, since \(Z^{(2)}_{1,1} Z^{(2)}_{2,2} - (Z^{(2)}_{1,2} )^{2} = 0\) and \(Z^{(2)}_{2,2}\ge0\) (note that the equality and the inequality also imply \(Z^{(2)}_{1,1}\ge0\)). Notice that the value of \(Z^{(8)}\) is also positive if \(Z^{(3)}_{1,1}>0\).

It remains to show \(Z^{(3)}_{1,1}>0\). Observe that

where

From the above equations, we can see that \(Z^{(3)}_{1,1} > 0\) if \(c>0\) and \(c^{2} - 16 \sigma_{X}^{2} c b x_{1} = c (\sigma_{X}^{2} + 4 x_{1} \delta ) > 0\). The latter inequality holds when \(c>0\). Therefore, it is sufficient to show \(c>0\) in Case 1. From the definition of \(c\), we have

for \(x_{1}<0\), since

(Case 2) Let the value of \(y\) be defined as

\[ y \equiv \frac{-b + \sqrt{b^{2} + 4 n \sigma_{X}^{2}}}{2 n \sigma_{X}^{2}} \]

so that the objective value is \(d_{\mathrm{obj}}=by\). First, we can confirm that \(Z^{(2)}\) is a rank-1 PSD matrix, since \(Z^{(2)}_{2,2} = \frac{y}{\sqrt{b^{2} + 4 n \sigma_{X}^{2}}} \ge0\) and \(Z^{(2)}_{1,1} Z^{(2)}_{2,2} - (Z^{(2)}_{1,2} )^{2} = 0\), which together imply \(Z^{(2)}_{1,1}\ge0\) as well. Next, by a tedious calculation we can see that

is a rank-2 PSD matrix. The value of \(Z^{(7)} = -\frac{n (2 x_{1} + \sigma_{X}^{2} y )}{\sqrt{b^{2} + 4 n \sigma_{X}^{2}}}\) is nonnegative if \(2 x_{1} + \sigma_{X}^{2} y \le 0\), which is satisfied when \(\sigma_{X}^{2} \le4 n x_{1}^{2} - 2 b x_{1}\). Finally, \(Z^{(8)}>0\) is trivial.

(Case 3) The values of the dual variables are

We can see that \(Z^{(2)}\) is a rank-1 PSD matrix since \(Z^{(2)}_{1,1},Z^{(2)}_{2,2} \ge0\) and \(Z^{(2)}_{1,1} Z^{(2)}_{2,2} - (Z^{(2)}_{1,2} )^{2} = 0\). By some algebra, we can also see that \(Z^{(3)}\) is a rank-2 PSD matrix for \(|x_{1}| \le\frac{b}{n}\). The positivity of \(Z^{(7)}\) is satisfied because \(\sigma_{X}^{2} \ge -\frac{2 \delta^{2} x_{1}}{b+n x_{1}}\), and \(Z^{(8)}\geq 0\) is satisfied because \(\delta<0\). □

A.2 Optimality of the feasible solution in Lemma 3

In this section we show that the feasible solutions given in Lemma 3 are in fact optimal. Specifically, the feasible solutions to the dual for Case 2 and Case 3 are optimal for each case, and the feasible solution to the dual for Case 1 is optimal when the conditions of Case 2 and the conditions of Case 3 are unsatisfied (and the conditions for Case 1 are satisfied). The optimality of each feasible solution to the dual is verified by finding a feasible solution to the associated primal SDP (31) whose objective value matches that of the dual in each case:

Lemma 4

The following assignments induce feasible solutions to (31).

(Case 3) If \(x_{1}\geq 0\) and \(\delta\equiv nx_{1}-b<0\), or if \(\sigma_{X}^{2}\geq -2 x_{1} \delta^{2}/(b+n x_{1})\) and \(b+nx_{1}>0\), then

for any \(k,\ell\), where \(f_{3}\) is the right-hand side of (34).

(Case 2) If \(x_{1}<0\) and \(\sigma_{X}^{2} \leq4 n x_{1}^{2} - 2 b x_{1}\), then

for any \(k,\ell\), where \(0^{0}\equiv 1\), and \(f_{2}\) is the right-hand side of (33).

(Case 1) If \(x_{1}<0\) and neither Case 2 nor Case 3 holds, then

for any \(\ell\), where \(f_{1}\) is the right-hand side of (32), and

A proof of Lemma 4 is postponed to Appendix B.

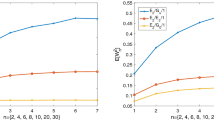

In Fig. 7 we show how the objective values \(f_{1}\), \(f_{2}\), and \(f_{3}\) vary with \(x_{1}\) for some fixed values of \(x_{2}\), \(b\), and \(n\) (which are 1, 1, and 10, respectively, in the figure). We can see that, as \(x_{1}\) decreases from \(b/n\) and Case 3 holds, the objective value is obtained from Case 3 because \(f_{3}\geq f_{2}\) and \(f_{3}\geq f_{1}\). When Case 3 does not hold, the objective value is obtained from Case 2 and then from Case 1 as the objective value of Case 2 decreases.

Appendix B: Technical lemmas and proofs

Lemma 5

Let \(T_{i} = X_{1}+\cdots+X_{i}\) be the random walk defined in Theorem 1 and \(N\) be the smallest \(i\) where either \(T_{i} < -a\) or \(T_{i} > b\), for \(a,b>0\). We assume that \(X_{1},X_{2},\ldots\) are not identically zero. Let \(\mu_{c}(y)\equiv\mbox{\textsf{\textbf{E}}} [ \sum_{i=0}^{N-1} \mathsf{I} \{ T_{i}\leq y \} ]\). Then

Proof

Let \(F_{j}(y)\equiv\Pr(N>j,\ T_{j}\leq y)\). Observe that

where the range of the last integral follows from \(-a<T_{j}<b\) for \(j<N\). We claim that the last expression in (39) is bounded because

and \(\mbox{\textsf{\textbf{E}}} [ N ]<\infty\) (see Lemma 1 in Sect. 7.5 from [9]). Thus, the summation and the integral in (39) can be exchanged to obtain

where the last equality follows from the following relations:

□
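The quantity \(\mu_{c}(y)\) in Lemma 5 can be estimated by simulation, which may help in reading the definitions of \(N\) and \(\mu_{c}\). The following Python sketch is a minimal Monte Carlo estimator under the assumption that the steps \(X_{i}\) are independent Gaussian variables; the Gaussian choice, the function name, and all parameter values are hypothetical and serve only as an illustration.

```python
import random


def simulate_occupation(y_values, a, b, mean, std, runs, seed=0):
    """Estimate mu_c(y) = E[sum_{i=0}^{N-1} 1{T_i <= y}] for each y, together with E[N].

    N is the first index i with T_i < -a or T_i > b, and T_0 = 0.
    Gaussian steps are an assumption of this sketch.
    """
    rng = random.Random(seed)
    totals = [0] * len(y_values)
    total_n = 0
    for _ in range(runs):
        t = 0.0  # T_0
        n = 0
        while -a <= t <= b:  # the current index n is still smaller than N
            for k, y in enumerate(y_values):
                if t <= y:
                    totals[k] += 1
            t += rng.gauss(mean, std)  # move from T_n to T_{n+1}
            n += 1
        total_n += n  # the loop exits with n == N
    return [s / runs for s in totals], total_n / runs


mu_hat, mean_n_hat = simulate_occupation(
    [-2.0, 0.0, 1.0], a=1.0, b=1.0, mean=-0.1, std=1.0, runs=2000, seed=1
)
```

Two properties follow directly from the definition and can be used to check the estimator: the estimate is zero for any \(y<-a\), and for \(y\geq b\) it coincides with the estimate of \(\mbox{\textsf{\textbf{E}}}[N]\), because every \(T_{i}\) with \(i<N\) is counted.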

Proof of Proposition 1 (outline)

Let m(ξ) be the cumulant generating function of X:

Then \(m'(\xi)=x_{1}+\sigma^{2}\xi\). Let \(\xi_{0}\) and \(\xi^{\star}\) be such that \(m(\xi_{0})=0\) and \(m'(\xi^{\star})=b/n\). Specifically, \(\xi_{0}=-2x_{1}/\sigma^{2}\) and \(\xi^{\star}=(b-nx_{1})/(n\sigma^{2})\). Following the approach in Sect. 14.2 from [14], we then obtain

which implies the proposition. □
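The two special points used in this outline can be verified numerically. The Python sketch below assumes the quadratic form \(m(\xi)=x_{1}\xi+\sigma^{2}\xi^{2}/2\), which is the form consistent with the stated values of \(\xi_{0}\) and \(\xi^{\star}\); the function names and the sample parameter values are hypothetical.

```python
def cgf(xi, x1, sigma2):
    """Assumed quadratic cumulant generating function m(xi) = x1*xi + sigma2*xi^2/2."""
    return x1 * xi + 0.5 * sigma2 * xi * xi


def cgf_prime(xi, x1, sigma2):
    """Derivative m'(xi) = x1 + sigma2*xi of the assumed quadratic form."""
    return x1 + sigma2 * xi


def special_points(x1, sigma2, b, n):
    """Return (xi_0, xi_star) with m(xi_0) = 0 and m'(xi_star) = b/n."""
    xi0 = -2.0 * x1 / sigma2
    xi_star = (b - n * x1) / (n * sigma2)
    return xi0, xi_star


# Sample values chosen only for illustration.
xi0, xi_star = special_points(x1=-0.5, sigma2=2.0, b=3.0, n=10)
```

With these sample values, `cgf(xi0, -0.5, 2.0)` vanishes and `cgf_prime(xi_star, -0.5, 2.0)` equals \(b/n=0.3\) up to rounding.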

Proof of Lemma 4

Given the assigned values for each case, it is straightforward by substitution to verify that the equalities (27) and linear inequalities of the primal problem (31) are satisfied. Hence, it remains to verify the PSD conditions, \(M^{(c)},M^{(r)},M^{(u)}\succeq 0\), for each case. It is straightforward to verify these PSD conditions for Case 3 and Case 2:

(Case 3) The values of the primal variables are

so that \(M^{(c)}\), \(M^{(r)}\), and \(M^{(u)}\) are rank-1 PSD matrices.

(Case 2) Let \(y \equiv\frac{-b + \sqrt{b^{2} + 4 n \sigma_{X}^{2}}}{2 n \sigma_{X}^{2}}\) as in Lemma 3. The values of the primal variables are

so that \(M^{(c)}\), \(M^{(r)}\), and \(M^{(u)}\) are rank-1 PSD matrices.

For Case 1, the PSD conditions for the three matrices do not appear to be easily verified. To see why Case 1 is optimal when neither Case 2 nor Case 3 holds, we will outline steps to construct \(M^{(r)},M^{(u)},M^{(c)}\) of the optimal solution of the primal SDP, using the optimal solution of the dual SDP.

First, consider \(M^{(r)}\). By the complementary slackness condition, since \(Z^{(2)}\) is a rank-1 PSD matrix and \(m_{0}^{(r)} = f_{1} > 0\), we find that \(M^{(r)}\) is also a rank-1 PSD matrix such that

where \(\gamma\equiv Z^{(2)}_{1,1} / Z^{(2)}_{1,2}\).

What remains is to find \(M^{(u)}\) and \(M^{(c)}\). The values of the elements of \(M^{(u)}\) depend on those of \(M^{(c)}\) and \(M^{(r)}\) as we will see below. So, let us first derive the values of the elements of \(M^{(c)}\) that are constrained by the complementary slackness condition. Since \(Z^{(8)}>0\), by the complementary slackness condition, \(L^{(u,y)}=0\). So, we have

which together with \(m_{1}^{(r)} = -\gamma m_{0}^{(r)}\) implies that

This gives us

Now, let us derive the values of the elements of \(M^{(u)}\). Since \(Z^{(3)}\) is a rank-1 PSD matrix

by the complementary slackness condition, we have that \(M^{(u)}\) must be a PSD matrix satisfying

Solving the above equation, we can write \(M^{(u)}\) as follows:

What we have to show is that there exist \(m_{0,1}^{(c)}\) and \(m_{1,0}^{(c)}\) that guarantee the positive semidefiniteness of \(M^{(u)}\). By (40), we obtain that

The above equation implies

which allows us to write

This means that it is sufficient to show that there is a range of values for \(m_{1,0}^{(c)}\) to guarantee the positive semidefiniteness of \(M^{(u)}\) since the value of \(m_{0,1}^{(c)}\) can be determined from that of \(m_{1,0}^{(c)}\). Using (41) and the following inequalities that must be satisfied so that \(M^{(u)}\succeq 0\),

we can obtain the lower bound of \(m_{1,0}^{(c)}\):

Since \(L^{(u,x)}\geq 0\), we also have the upper bound of \(m_{1,0}^{(c)}\):

Therefore, to guarantee the positive semidefiniteness of \(M^{(u)}\), we obtain the condition:

which is satisfiable if and only if

which implies

Substituting the value of \(m_{0}^{(r)}\), we can obtain the following condition for (42) to hold:

Hence,

Notice that the range of \(\sigma_{X}^{2}\) in this case is the complement of the range of \(\sigma_{X}^{2}\) for the other cases. We can choose any \(m_{1,0}^{(c)}\) that satisfies \(L_{B}\le m_{1,0}^{(c)}\le U_{B}\), which guarantees \(M^{(u)}\succeq 0\). Specifically, we choose \(m_{1,0}^{(c)} = U_{B}\). This completes the assignment of the elements of \(M^{(u)}\).

It remains to show that the other elements of \(M^{(c)}\) can be set appropriately to guarantee the positive semidefiniteness of \(M^{(c)}\). Having determined the value of \(m_{1,0}^{(c)}=U_{B}\), the value of \(m_{0,1}^{(c)}\) follows from (41). Now, since \(L^{(c,y)}\geq 0\), the value of \(m_{0,1}^{(c)}\) must satisfy \(m_{0,1}^{(c)} \le b m_{0,0}^{(c)}\), which is satisfied under the following condition:

Inequality (43) is proved at the end of the proof of the lemma.

Now, what remains are the values of \(m_{0,2}^{(c)}\), \(m_{1,1}^{(c)}\), and \(m_{2,0}^{(c)}\), which can be set as follows to satisfy the positive semidefiniteness of \(M^{(c)}\) by the Schur complement:

It can be checked easily that \(L^{(c,x)}\geq 0\) holds with the above \(m_{2,0}^{(c)}\) because

This completes the assignment of all elements of \(M^{(c)}\).

The proof for Case 1 is completed by proving (43) holds when the following three conditions hold:

Recall that since \(x_{1}<0\), the first three terms of (43) are negative, and therefore the inequality holds if \(2(5n-1)x_{1}+4b\leq 0\). If \(2(5n-1)x_{1}+4b>0\), then \(4(b+nx_{1})>-2(3n-1)x_{1}>0\), so that \(b+nx_{1}>0\). Hence, (45) is reduced to

When \(2(5n-1)x_{1}+4b>0\), the left-hand side of (43) is bounded as follows:

Since \(x_{1}<0\) and \(b+nx_{1}>0\), it suffices to show that

is nonnegative for \(-\frac{2 b}{5 n-1} < x_{1} < 0\), where the first inequality follows from \(2(5n-1)x_{1}+4b>0\). Observe that

which together imply \(f(x_{1})>0\) for \(-\frac{2 b}{5 n-1} < x_{1} < 0\). □

Appendix C: Alternative proofs to Kingman’s and Daley’s

In this section we apply our techniques introduced in Sect. 2 to the SDPs formulated by Bertsimas and Natarajan [4]. This results in alternative proofs of the fact that the bounds derived from the SDPs formulated in [4] agree with Kingman’s bound and Daley’s bound on the expected waiting time, \(\mbox{\textsf{\textbf{E}}} [ W ]\), for a GI/GI/1 queue in steady state.

For completeness, we start by repeating relevant results from Bertsimas and Natarajan [4]:

Proposition 2

The objective value of the optimal solution to the following SDP gives an upper bound on \(\mbox{\textsf{\textbf{E}}} [ W ]\):

Proposition 3

The objective value of the optimal solution to the SDP in Proposition 2 with the following additional constraint gives an upper bound on \(\mbox{\textsf{\textbf{E}}} [ W ]\):

Notice that the notations in Propositions 2 and 3 are modified from [4] to be consistent with those used in this paper. In [20], we provide proofs of the propositions, using our notations.

Bertsimas and Natarajan [4] claim that the objective value of the optimal solution to the SDP in Proposition 2 agrees with Kingman’s bound because the SDP in Proposition 2 includes all of the constraints used to derive Kingman’s bound, and there is a feasible solution to the SDP in Proposition 2 whose objective value agrees with Kingman’s bound. They also claim that the objective value of the optimal solution to the SDP in Proposition 3 agrees with Daley’s bound for analogous reasons. This means that the optimality of the approach of Bertsimas and Natarajan [4] relies on the knowledge of the existing bounds.

In contrast, our approach in Sect. 2 can be used to find a bound without the knowledge of the existing bounds by the weak duality principle of SDP. In the following, we apply our approach to rediscover Kingman’s and Daley’s bounds, both to demonstrate the effectiveness of our approach beyond that presented in Sect. 2 and to give rigorous proofs of the optimality of the approach in [4]. We will see that finding feasible solutions of the dual SDPs is quite easy for the formulations in this section because a large portion of the dual variables are zero.

Proposition 4

There is a feasible solution to the dual problem of the SDP in Proposition 2 such that the objective value of the feasible solution is \(-(x_{2}-x_{1}^{2})/(2x_{1})\), which agrees with Kingman’s bound.

Proof

Following the standard formulation of the primal SDP in Appendix D, we rewrite the objective of the primal problem as a minimization, changing the sign of the objective function. We will prove that the objective value of a feasible solution to the dual problem is \((x_{2}-x_{1}^{2})/(2x_{1})\). We also rewrite the constraints using the following matrices:

Now, our primal problem is

Notice that the above constraints are of the form \(\mathcal{A} m \succeq \mathcal{B}\), as in Appendix D, where \(\mathcal{A}\) and \(\mathcal{B}\) are collections of matrices that are arranged with columns and rows, and \(m\) is a column vector. Analogous to the operation with a matrix and a vector, the expression \(\mathcal{A} m \succeq\mathcal{B}\) is understood as \(\sum_{j=1}^{6} \mathcal{A}_{i,j} m_{j} \succeq\mathcal{B}_{i}\) for each \(i\), where \(\mathcal{A}_{i,j}\) is the \((i,j)\) element of \(\mathcal{A}\), and \(\mathcal{B}_{i}\) is the \(i\)th element of \(\mathcal{B}\).
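The collection-times-vector operation described above can be sketched in a few lines of Python. The function name `apply_collection` and the small 2×2 example are hypothetical and chosen only to illustrate the notation; they are not the matrices used in the proof.

```python
def apply_collection(A, m):
    """Return, for each row i of the collection A, the matrix sum_j A[i][j] * m[j].

    A is a list of rows; each row is a list of equally sized matrices
    (nested lists), one per entry of the vector m.
    """
    results = []
    for row in A:
        n_rows, n_cols = len(row[0]), len(row[0][0])
        acc = [[0.0] * n_cols for _ in range(n_rows)]
        for A_ij, m_j in zip(row, m):
            for r in range(n_rows):
                for c in range(n_cols):
                    acc[r][c] += A_ij[r][c] * m_j
        results.append(acc)
    return results


# One row of three 2x2 indicator matrices assembles a Hankel-type matrix from m.
A_example = [[
    [[1, 0], [0, 0]],
    [[0, 1], [1, 0]],
    [[0, 0], [0, 1]],
]]
assembled = apply_collection(A_example, [2.0, 3.0, 5.0])
```

In this example the single row of the collection assembles the matrix with rows (2, 3) and (3, 5), so the constraint for that row would require this matrix minus the corresponding element of \(\mathcal{B}\) to be PSD.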

Hence, the corresponding dual SDP is:

It is straightforward to verify that the following assignment is feasible to the dual problem, and its objective value is \((x_{2}-x_{1}^{2})/(2x_{1})\):

□
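As a numerical illustration of the bound in Proposition 4, the following Python sketch evaluates \(-(x_{2}-x_{1}^{2})/(2x_{1})\) for an M/M/1 queue and compares it with the well-known exact mean waiting time in queue, \(\lambda/(\mu(\mu-\lambda))\). It assumes that \(X\) denotes the service time minus the interarrival time and that the two are independent; the function names and the rates are hypothetical.

```python
def kingman_bound(x1, x2):
    """Upper bound -(x2 - x1^2) / (2*x1) on E[W], for x1 < 0."""
    if x1 >= 0:
        raise ValueError("the bound requires x1 < 0")
    return -(x2 - x1 * x1) / (2.0 * x1)


def mm1_moments(lam, mu):
    """First two moments of X = S - A for independent exponential S (rate mu) and A (rate lam)."""
    x1 = 1.0 / mu - 1.0 / lam
    variance = 1.0 / mu ** 2 + 1.0 / lam ** 2  # variances of independent terms add
    return x1, variance + x1 * x1


def mm1_mean_wait(lam, mu):
    """Exact steady-state mean waiting time in queue for M/M/1."""
    return lam / (mu * (mu - lam))


x1, x2 = mm1_moments(lam=0.5, mu=1.0)
bound = kingman_bound(x1, x2)
exact = mm1_mean_wait(lam=0.5, mu=1.0)
```

For these rates the moments are \(x_{1}=-1\) and \(x_{2}=6\), the bound evaluates to 2.5, and the exact mean waiting time is 1, so the bound holds with some slack.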

Proposition 5

There is a feasible solution to the dual problem of the SDP with the additional constraint in Proposition 3 such that the objective value of the feasible solution is \(-(x_{2}-t_{2}(1-\rho)^{2})/(2x_{1})\), which agrees with Daley’s bound.

Proof

Again, we rewrite the objective of the primal problem as a minimization and prove that the objective value of a feasible solution to the dual problem is \((x_{2}-t_{2}(1-\rho)^{2})/(2x_{1})\). Using the matrices defined in the proof of Proposition 4, the primal problem can be expressed as follows:

Hence, its dual is:

It is straightforward to verify that the following assignment is feasible to the dual problem, and its objective value is \((x_{2}-t_{2}(1-\rho)^{2})/(2x_{1})\): \(Z^{(1)}=Z^{(2)}=Z^{(3)}=Z^{(4)}=0\), and \(Z^{(5)}=-1/(2x_{1})\). □
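The agreement with Daley’s bound can be checked by a short calculation. The sketch below assumes that \(X=S-T\) with independent service time \(S\) and interarrival time \(T\), that \(t_{1}\) and \(t_{2}\) are the first two moments of \(T\), and that \(\rho=\mbox{\textsf{\textbf{E}}}[S]/t_{1}\); the variances \(\sigma_{S}^{2}\) and \(\sigma_{T}^{2}\) are symbols introduced here for illustration.

\[
\begin{aligned}
x_{1} &= \rho t_{1} - t_{1} = -(1-\rho)\,t_{1},\\
x_{2} &= \sigma_{S}^{2} + \sigma_{T}^{2} + x_{1}^{2} = \sigma_{S}^{2} + \sigma_{T}^{2} + (1-\rho)^{2} t_{1}^{2},\\
x_{2} - t_{2}(1-\rho)^{2} &= \sigma_{S}^{2} + \sigma_{T}^{2} + (1-\rho)^{2} t_{1}^{2} - (1-\rho)^{2}\bigl(\sigma_{T}^{2} + t_{1}^{2}\bigr) = \sigma_{S}^{2} + \rho(2-\rho)\,\sigma_{T}^{2},\\
-\frac{x_{2} - t_{2}(1-\rho)^{2}}{2x_{1}} &= \frac{\sigma_{S}^{2} + \rho(2-\rho)\,\sigma_{T}^{2}}{2(1-\rho)\,t_{1}}.
\end{aligned}
\]

Under these assumptions, the last expression is the form in which Daley’s bound is commonly stated in terms of the two variances.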

Appendix D: A brief tutorial on SDP

Here we present a brief tutorial on SDP with a focus on building the dual SDP and the duality principles. The tutorial mainly relies on p. 71 of [3] (with modification for ease of exposition) and is included in this paper for self-containment.

Let the following primal SDP formulation be denoted as (Pr).

Then the dual of (Pr) is as follows:

Here, \(c\) and \(x\) are vectors of length \(n\), and the \(\mathcal{A}_{i}\)’s are vectors whose \(j\)th element, denoted by \(\mathcal{A}_{ij}\), is a matrix. In this notation, \(\mathcal{A}_{i} x\) is the matrix obtained as the linear combination \(\sum_{j=1}^{n}\mathcal{A}_{ij} x_{j}\) of the elements of \(\mathcal{A}_{i}\), whose dimension is the same as that of the matrices \(B_{i}\) and \(Z_{i}\). \(A\succeq 0\) denotes that the matrix \(A\) is positive semidefinite, i.e., its eigenvalues are nonnegative, while \(A\succ 0\) denotes that the matrix \(A\) is positive definite, i.e., its eigenvalues are positive.

A feasible solution of the primal (resp., dual) SDP is an assignment of \(x\) (resp., the \(Z_{i}\)’s) that satisfies the (in)equality conditions of the primal (resp., dual) SDP. A feasible solution of the primal (resp., dual) is optimal if no other feasible solution gives a lower (resp., higher) objective value. The weak duality principle of SDP states that the optimal objective value of the primal SDP is always at least that of the dual SDP.
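The weak duality principle can be seen in one line. The sketch below assumes that (Pr) has the standard form suggested by the notation above, namely minimizing \(c^{T}x\) subject to \(\mathcal{A}_{i}x\succeq B_{i}\) for \(i=1,\ldots,k\), and that the dual maximizes \(\sum_{i}\mathrm{Tr}(B_{i}Z_{i})\) subject to \(\sum_{i}\mathrm{Tr}(\mathcal{A}_{ij}Z_{i})=c_{j}\) for each \(j\) and \(Z_{i}\succeq 0\); the number of constraints \(k\) is a symbol introduced here. For any primal feasible \(x\) and dual feasible \(Z_{i}\)’s,

\[
c^{T}x - \sum_{i=1}^{k}\mathrm{Tr}(B_{i}Z_{i})
= \sum_{j=1}^{n}\sum_{i=1}^{k}\mathrm{Tr}(\mathcal{A}_{ij}Z_{i})\,x_{j} - \sum_{i=1}^{k}\mathrm{Tr}(B_{i}Z_{i})
= \sum_{i=1}^{k}\mathrm{Tr}\bigl((\mathcal{A}_{i}x - B_{i})\,Z_{i}\bigr) \ge 0,
\]

where the inequality holds because the trace of the product of two positive semidefinite matrices is nonnegative.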

If the objective value of the primal (resp., dual) is bounded below (resp., above) and it is strictly feasible, i.e., there exists a vector \(x\) such that \(\mathcal{A}_{i} x - B_{i} \succ0\) (resp., there exist \(Z_{i}\succ 0\)) for all \(i\), then the optimal value of the primal is equal to that of the dual. This is called the strong duality principle and, in this case, the complementary slackness condition

is the necessary and sufficient condition for the optimality of the primal with \(x^{*}\) and the dual with the \(Z_{i}^{*}\)’s.
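A toy instance may make strong duality and complementary slackness concrete. The Python sketch below assumes the standard primal form (minimize \(c^{T}x\) subject to \(\mathcal{A}_{i}x - B_{i}\succeq 0\)) and the standard statement of complementary slackness, \(Z_{i}^{*}(\mathcal{A}_{i}x^{*} - B_{i}) = 0\); the instance itself (a single scalar variable and one 2×2 constraint) and all names are hypothetical.

```python
def matmul(p, q):
    """Product of two matrices given as nested lists."""
    return [[sum(p[i][k] * q[k][j] for k in range(len(q))) for j in range(len(q[0]))]
            for i in range(len(p))]


def trace(p):
    """Trace of a square matrix given as nested lists."""
    return sum(p[i][i] for i in range(len(p)))


# Toy primal: minimize x subject to x*I - B being PSD, i.e., x >= largest eigenvalue of B.
IDENTITY = [[1.0, 0.0], [0.0, 1.0]]
B = [[0.0, 1.0], [1.0, 0.0]]  # eigenvalues are +1 and -1
x_star = 1.0                  # primal optimum

# Toy dual: maximize Tr(B Z) subject to Tr(I Z) = 1 and Z PSD.
Z_star = [[0.5, 0.5], [0.5, 0.5]]  # rank-1 matrix built from the eigenvector (1, 1)/sqrt(2)

slack = [[x_star * IDENTITY[i][j] - B[i][j] for j in range(2)] for i in range(2)]
primal_value = x_star
dual_value = trace(matmul(B, Z_star))
slackness_product = matmul(Z_star, slack)
```

In this instance the primal and dual objective values coincide at 1, and the product of the dual solution with the primal slack matrix is the zero matrix, as complementary slackness requires.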


Cite this article

Osogami, T., Raymond, R. Analysis of transient queues with semidefinite optimization. Queueing Syst 73, 195–234 (2013). https://doi.org/10.1007/s11134-012-9309-7


DOI: https://doi.org/10.1007/s11134-012-9309-7