Abstract

This paper examines the strength of association between the outcomes of National Research Foundation (NRF) peer-review-based rating mechanisms and a range of objective measures of researcher performance. The analysis covers 1932 scholars who have received an NRF rating or an NRF research chair. We find that, on average, scholars with higher NRF ratings record higher performance on research output and impact metrics. However, we also find anomalies in the probabilities of different NRF ratings when these are assessed against bibliometric performance measures, and a disproportionately large incidence of scholars with high peer-review-based ratings but low levels of recorded research output and impact. Moreover, we find strong cross-disciplinary differences in the effect that objective levels of performance have on the probability of achieving different NRF ratings. Finally, we report evidence that NRF peer review is less likely to reward multi-authored research output than single-authored output. Claims of a lack of bias in NRF peer review are thus difficult to sustain.

Notes

The literature assessing the validity of peer review is vast. For a recent comprehensive review, see Bornmann (2011). Earlier reviews of peer review in the context of grant evaluation can be found in Demicheli and Pietrantonj (2007) and Wessely (1998). For some of the evidence in support of peer review (not necessarily in relation to grant evaluation), see Goodman et al. (1994), Pierie et al. (1996), Bedeian (2003) and Shatz (2004). For critical views, see Eysenck and Eysenck (1992) and Frey (2003).

The 2012 Italian evaluation exercise and 2014 United Kingdom research assessments rely on both peer review and bibliometric indicators. The Australian research excellence exercise relies purely on bibliometrics. See the discussion in Abramo et al. (2011).

The impact on scholars can be substantial. While the average funding available per researcher in South Africa under NRF programs is approximately ZAR 20,000 per annum, researchers granted South African Research Chairs receive an annual budget allowance of ZAR 3 million.
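For scale, taking the two figures above at face value, a chair's annual budget allowance is some 150 times the average per-researcher funding under NRF programs:

```latex
\frac{\text{ZAR } 3\,000\,000}{\text{ZAR } 20\,000} = 150
```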

For a comprehensive list of the metrics, their construction and characteristics, see Rehn et al. (2007).

In what follows only indexes actually computed for the present study are discussed. There are certainly other indexes—for instance see the discussion in Rehn et al. (2007), as well as the overview in Bornmann and Daniel (2007), and Bornmann et al. (2008, 2009a). Reasons for our choice are outlined in the discussion below.
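As a point of reference for the h-index used throughout these notes, Hirsch (2005) defines it as the largest number h such that h of a scholar's papers have each received at least h citations. The sketch below computes it from a plain list of per-paper citation counts. The function name and input format are illustrative assumptions; the study's own data collection and cleaning steps are not reproduced.

```python
def h_index(citations: list[int]) -> int:
    """Largest h such that at least h papers have h or more citations each.

    Illustrative sketch: `citations` holds one (hypothetical) citation count per paper.
    """
    ranked = sorted(citations, reverse=True)  # most-cited paper first
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank  # the top `rank` papers all have at least `rank` citations
        else:
            break  # counts only fall from here on, so h cannot grow further
    return h


if __name__ == "__main__":
    print(h_index([10, 8, 5, 4, 3]))  # 4
```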

See also the discussion in Thor and Bornmann (2011).

The L-rating was discontinued in 2010. Candidates eligible in this category included black researchers, female researchers, researchers employed at higher education institutions that lacked a research environment, and previously established researchers who had returned to a research environment.

For instance, of the members of the Specialist Committees listed on the NRF website at the time of data collection, 50 % were from the University of Cape Town, the University of the Witwatersrand and the University of Pretoria; adding the Universities of Stellenbosch and KwaZulu-Natal raises the proportion to 71.43 %. By contrast, 4.76 % came from historically disadvantaged institutions.

In 2012 an additional set of chairs was announced. These were not included in the analysis.

Bosman et al. (2006) found the coverage of Google Scholar, Web of Science and Scopus to be generally comparable. Nonetheless, they report disciplinary variations, corroborated by Kousha and Thelwall (2007, 2008), who find that Google Scholar underreports the natural sciences, and by Bar-Ilan (2008), who finds variation within the natural sciences.

See the discussion in Archambault and Gagné (2004), Belew (2005), Derrick et al. (2010), García-Pérez (2010), Harzing (2007–2008, 2008), Kulkarni et al. (2009), Meho and Yang (2007), and Roediger (2006). While Jacsó (2005, 2006a, 2006b) reports that the social sciences and humanities are underreported in Google Scholar, larger-scale studies reverse this finding; see Bosman et al. (2006) and Kousha and Thelwall (2007). Further evidence comes from Nisonger (2004) and Butler (2006). Testa (2004) reports that ISI itself estimates that, of the 2000 new journals it reviews annually, only 10–12 % are selected for inclusion in the Web of Science.
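In absolute terms, Testa's figures imply that roughly 200 to 240 of the new journals reviewed each year are admitted:

```latex
0.10 \times 2000 = 200, \qquad 0.12 \times 2000 = 240
```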

The weights for the disciplinary categories in our study are as follows: biological 0.77; business 1.32; chemical 0.92; engineering 1.7; medical 0.625; physical 1.14; social 1.6.
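A minimal sketch of how such weights could be applied, assuming (the note does not say so explicitly) that the discipline-adjusted h-index is the raw h-index multiplied by the weight of the scholar's disciplinary category. The dictionary keys and the function name are hypothetical; the weight values are those listed above.

```python
# Discipline weights as listed in the note above.
DISCIPLINE_WEIGHTS = {
    "biological": 0.77,
    "business": 1.32,
    "chemical": 0.92,
    "engineering": 1.7,
    "medical": 0.625,
    "physical": 1.14,
    "social": 1.6,
}


def adjusted_h(raw_h: int, discipline: str) -> float:
    """Scale a raw h-index by its discipline weight (assumed multiplicative)."""
    try:
        weight = DISCIPLINE_WEIGHTS[discipline]
    except KeyError:
        raise ValueError(f"unknown discipline: {discipline!r}") from None
    return raw_h * weight


if __name__ == "__main__":
    # Under these weights a raw h-index of 8 counts for less in the
    # medical sciences than in the social sciences.
    print(adjusted_h(8, "medical"))  # 5.0
    print(adjusted_h(8, "social"))  # 12.8
```

Weights above 1 raise the adjusted index and weights below 1 lower it, so under this assumption the adjustment compresses differences that stem from field-specific citation practices.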

The authors-per-paper measure shows a negative (though very weak) correlation with all measures except citations per paper. Given these near-zero correlations, we infer that authors per paper does not systematically co-vary with the other output and impact measures.
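For readers who want to run this kind of check, a minimal Pearson correlation in plain Python follows. The note does not state which correlation coefficient was used, so Pearson's r is an assumption here, and the paired values in the usage lines are hypothetical rather than the study's data.

```python
import math


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation coefficient of two equal-length samples."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two samples of equal length >= 2")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Co-deviation and squared deviations from the sample means.
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation undefined for a constant sample")
    return sxy / math.sqrt(sxx * syy)


if __name__ == "__main__":
    # Hypothetical authors-per-paper values vs. a hypothetical output measure.
    authors_per_paper = [1.0, 2.0, 3.0, 4.0]
    output_measure = [3.0, 1.0, 1.0, 3.0]
    print(pearson(authors_per_paper, output_measure))  # 0.0
```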

The same patterns emerge for the medians of the measures. The second moment (variance) of the distributions is generally large across all categories, reflecting a wide range of measured output and of the impact of that output.
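The comparison of means, medians and spread across rating categories can be sketched with Python's standard `statistics` module. The function name and the sample values are hypothetical; the sample standard deviation (the square root of the variance with an n - 1 divisor) is shown as one possible measure of spread.

```python
import statistics


def summarise_by_rating(values_by_rating: dict[str, list[float]]) -> dict[str, dict[str, float]]:
    """Mean, median and sample standard deviation of one measure per rating category."""
    summary = {}
    for rating, values in values_by_rating.items():
        if len(values) < 2:
            raise ValueError(f"rating {rating!r} needs at least two observations")
        summary[rating] = {
            "mean": statistics.mean(values),
            "median": statistics.median(values),
            "stdev": statistics.stdev(values),  # square root of the sample variance
        }
    return summary


if __name__ == "__main__":
    # Hypothetical h-index values, not the study's data.
    data = {"A": [10, 20, 30], "C": [2, 4, 9]}
    for rating, stats in summarise_by_rating(data).items():
        print(rating, stats)
```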

While in general the implied probability of receiving a specified rating is invariant to whether the raw h-index or the discipline-adjusted h-index is used (the implied densities differ only marginally), significant differences do emerge for the A-rating: the discipline-adjusted h-index generates considerably lower probabilities of an A-rating than the raw measure. The reason is that the probability of receiving an A-rating at any given level of bibliometric performance is not invariant to discipline; see the discussion below.
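The note does not spell out the model behind the implied probabilities, so the following is only an illustrative sketch. It assumes an ordered-logit model in which the probability of each NRF rating (C lowest, A highest) depends on a single h-index value. The slope `beta` and the cutpoints are hypothetical numbers chosen for illustration, not estimates from the study. Feeding the raw and the discipline-adjusted h-index of the same scholar into such a function is one way to see how the two measures can imply different A-rating probabilities.

```python
import math


def logistic(z: float) -> float:
    """Standard logistic CDF."""
    return 1.0 / (1.0 + math.exp(-z))


def rating_probabilities(h, beta, cutpoints, labels):
    """Ordered-logit probabilities for ratings listed from lowest to highest.

    P(rating <= labels[j] | h) = logistic(cutpoints[j] - beta * h).
    """
    if len(labels) != len(cutpoints) + 1:
        raise ValueError("need exactly one more label than cutpoints")
    if any(lo >= hi for lo, hi in zip(cutpoints, cutpoints[1:])):
        raise ValueError("cutpoints must be strictly increasing")
    # Cumulative probabilities; the top category closes at 1.
    cumulative = [logistic(c - beta * h) for c in cutpoints] + [1.0]
    probs, previous = {}, 0.0
    for label, cum in zip(labels, cumulative):
        probs[label] = cum - previous  # mass between consecutive cutpoints
        previous = cum
    return probs


if __name__ == "__main__":
    # Hypothetical parameters: a higher h-index shifts mass towards A.
    labels = ("C", "B", "A")
    for h in (10, 40):
        p = rating_probabilities(h, beta=0.1, cutpoints=(2.0, 4.0), labels=labels)
        print(h, {k: round(v, 3) for k, v in p.items()})
```

With a positive `beta`, raising h lowers every cumulative probability and so moves probability mass into the top category, which is the qualitative pattern an "implied density" of the A-rating traces out.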

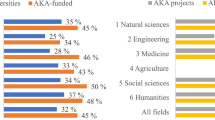

We cannot use the more disaggregated classifications considered by the NRF Specialist panels, because a number of those categories contain too few scholars. Some researchers were assigned to multiple categories: for instance, biochemists might be recorded in both the biological and the chemical sciences.
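Because some scholars sit in more than one broad category, category counts can sum to more than the number of scholars, and categories that are too small become visible once counted. A minimal sketch follows; the data, the function names and the `min_size` threshold are hypothetical, since the note does not state a cutoff.

```python
from collections import Counter


def category_sizes(assignments: dict[str, list[str]]) -> Counter:
    """Count scholars per category; a multi-category scholar counts once in each."""
    sizes = Counter()
    for categories in assignments.values():
        sizes.update(set(categories))  # set() guards against duplicate listings
    return sizes


def too_small(sizes: Counter, min_size: int) -> list[str]:
    """Categories whose membership falls below a (hypothetical) minimum size."""
    return sorted(cat for cat, n in sizes.items() if n < min_size)


if __name__ == "__main__":
    # Hypothetical scholars; s1 is a biochemist listed in two categories.
    assignments = {
        "s1": ["biological", "chemical"],
        "s2": ["chemical"],
        "s3": ["business"],
    }
    sizes = category_sizes(assignments)
    print(sum(sizes.values()), len(assignments))  # 4 3
    print(too_small(sizes, min_size=2))  # ['biological', 'business']
```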

Throughout, using the median rather than the mean as the measure of central tendency leaves our inferences unchanged.

It is worth recalling that, if anything, the way in which the h-index is compiled favours the social rather than the natural sciences. The cross-disciplinary performance differential is therefore, if anything, understated.

We report only the highest rating category, since this carries the greatest prestige and funding implications. Results for the remaining rating categories are available from the author.

Although we do not report the densities explicitly, for the B-rating the strongest probability response to a rising h-index again emerges for the physical sciences, followed by the social and chemical sciences, then engineering and the biological sciences, then the business sciences, and finally the medical sciences.

References

Abramo, G., & D’Angelo, C. A. (2011). Evaluating research: From informed peer review to bibliometrics. Scientometrics, 87(3), 499–514.

Abramo, G., D’Angelo, C. A., & Di Costa, F. (2011). National research assessment exercises: A comparison of peer review and bibliometrics rankings. Scientometrics, 89(3), 929–941.

Adler, N., Elmquist, M., & Norrgren, F. (2009). The challenge of managing boundary-spanning research activities: Experiences from the Swedish context. Research Policy, 38, 1136–1149.

Archambault, E., & Gagné, E. V. (2004). The use of bibliometrics in social sciences and Humanities. Montreal: Social Sciences and Humanities Research Council of Canada (SSHRCC).

Bar-Ilan, J. (2008). Which h-index?: A comparison of WoS, Scopus and Google Scholar. Scientometrics, 74(2), 257–271.

Batista, P. D., Campiteli, M. G., Kinouchi, O., & Martinez, A. S. (2006). Is it possible to compare researchers with different scientific interests? Scientometrics, 68(1), 179–189.

Bedeian, A. G. (2003). The manuscript review process: The proper role of authors, referees, and editors. Journal of Management Inquiry, 12(4), 331–338.

Belew, R. K. (2005). Scientific impact quantity and quality: Analysis of two sources of bibliographic data, arXiv:cs.IR/0504036 v1, 11 August 2005.

Benner, M., & Sandström, U. (2000). Institutionalizing the triple helix: research funding and norms in the academic system. Research Policy, 29, 291–301.

Bornmann, L. (2011). Scientific peer review. Annual Review of Information Science and Technology, 45, 199–245.

Bornmann, L., & Daniel, H.-D. (2005). Does the h-index for ranking of scientists really work? Scientometrics, 65(3), 391–392.

Bornmann, L., & Daniel, H.-D. (2007). What do we know about the h index? Journal of the American Society for Information Science and Technology, 58(9), 1381–1385.

Bornmann, L., Mutz, R., & Daniel, H.-D. (2008). Are there better indices for evaluation purposes than the h index? A comparison of nine different variants of the h index using data from biomedicine. Journal of the American Society for Information Science and Technology, 59(5), 830–837.

Bornmann, L., Mutz, R., Daniel, H.-D., Wallon, G., & Ledin, A. (2009a). Are there really two types of h index variants? A validation study by using molecular life sciences data. Research Evaluation, 18(3), 185–190.

Bornmann, L., Marx, W., Schier, H., Rahm, E., Thor, A., & Daniel, H.-D. (2009b). Convergent validity of bibliometric Google Scholar data in the field of chemistry. Citation counts for papers that were accepted by Angewandte Chemie International Edition or rejected but published elsewhere, using Google Scholar, Science Citation Index, Scopus, and Chemical Abstracts. Journal of Informetrics, 3(1), 27–35.

Bosman, J., Mourik, I. van, Rasch, M., Sieverts, E., & Verhoeff, H. (2006). Scopus reviewed and compared. The coverage and functionality of the citation database Scopus, including comparisons with Web of Science and Google Scholar. Utrecht: Utrecht University Library. Retrieved from http://igitur-archive.library.uu.nl/DARLIN/2006-1220-200432/Scopusdoorgelicht&vergeleken-translated.pdf.

Butler, L. (2006). RQF pilot study project: History and political science methodology for citation analysis, November 2006. Retrieved from http://www.chass.org.au/papers/bibliometrics/CHASS_Methodology.pdf.

Cronin, B., & Meho, L. (2006). Using the h-index to rank influential information scientists. Journal of the American Society for Information Science and Technology, 57(9), 1275–1278.

Debackere, K., & Glänzel, W. (2003). Using a bibliometric approach to support research policy making: The case of the Flemish BOF-key. Scientometrics, 59(2), 253–276.

Demicheli, V., & Pietrantonj, C. (2007). Peer review for improving the quality of grant applications. Cochrane Database of Systematic Reviews, 2, Art. No.: MR000003. doi:10.1002/14651858.MR000003.pub2.

Derrick, G. E., Sturk, H., Haynes, A. S., Chapman, S., & Hall, W. D. (2010). A cautionary bibliometric tale of two cities. Scientometrics, 84(2), 317–320.

Egghe, L. (2006). Theory and practice of the g-index. Scientometrics, 69(1), 131–152.

Egghe, L., & Rousseau, R. (2006). An informetric model for the Hirsch-index. Scientometrics, 69(1), 121–129.

Eysenck, H. J., & Eysenck, S. B. G. (1992). Peer review: Advice to referees and contributors. Personality and Individual Differences, 13(4), 393–399.

Falagas, M. E., Pitsouni, E. I., Malietzis, G. A., & Pappas, G. (2008). Comparison of PubMed, Scopus, Web of Science, and Google Scholar: Strengths and weaknesses. The FASEB Journal, 22, 338–342. doi:10.1096/fj.07-9492LSF

Frey, B. S. (2003). Publishing and prostitution? Choosing between one’s own ideas and academic success. Public Choice, 116(1–2), 205–223.

García-Aracil, A., Gracia, A. G., & Pérez-Marín, M. (2006). Analysis of the evaluation process of the research performance: An empirical case. Scientometrics, 67(2), 213–230.

García-Pérez, M. A. (2010). Accuracy and completeness of publication and citation records in the Web of Science, PsycINFO, and Google Scholar: A case study for the computation of h Indices in psychology. Journal of the American Society for Information Science and Technology, 61(10), 2070–2085.

Glänzel, W. (2006). On the opportunities and limitations of the H-index. Science Focus, 1(1), 10–11.

Goodman, S. N., Berlin, J., Fletcher, S. W., & Fletcher, R. H. (1994). Manuscript quality before and after peer review and editing at Annals of Internal Medicine. Annals of Internal Medicine, 121(1), 11–21.

Gray, J. E., Hamilton, M. C., Hauser, A., Janz, M. M., Peters, J. P., & Taggart, F. (2012). Scholarish: Google Scholar and its value to the sciences. Issues in Science and Technology Librarianship, 70(Summer). doi:10.5062/F4MK69T9.

Harzing, A.-W. (2007–2008). Google Scholar: A new data source for citation analysis. Retrieved from http://www.harzing.com/pop_gs.htm.

Harzing, A.-W. (2008). Reflections on the h-index. Retrieved from http://www.harzing.com/pop_hindex.htm.

Hirsch, J. E. (2005). An index to quantify an individual’s scientific research output, arXiv:physics/0508025 v5, 29 Sep 2006.

Horrobin, D. F. (1990). The philosophical basis for peer review and the suppression of innovation. Journal of the American Medical Association, 263(10), 1438–1441.

Iglesias, J. E., & Pecharromán, C. (2007). Scaling the h-index for different scientific ISI fields. Scientometrics, 73(3), 303–320.

Jacsó, P. (2005). Google Scholar: The pros and the cons. Online Information Review, 29(2), 208–214.

Jacsó, P. (2006a). Dubious hit counts and cuckoo’s eggs. Online Information Review, 30(2), 188–193.

Jacsó, P. (2006b). Deflated, inflated and phantom citation counts. Online Information Review, 30(3), 297–309.

Jacsó, P. (2010). Metadata mega mess in Google Scholar. Online Information Review, 34(1), 175–191.

Jin, B. (2007). The AR-index: Complementing the h-index. ISSI Newsletter, 3(1), 6.

Kulkarni, A. V., Aziz, B., Shams, I., & Busse, J. W. (2009). Comparisons of citations in Web of Science, Scopus, and Google Scholar for articles published in general medical journals. Journal of the American Medical Association, 302(10), 1092–1096.

Kousha, K., & Thelwall, M. (2007). Google Scholar citations and Google Web/URL citations: A multi-discipline exploratory analysis. Journal of the American Society for Information Science and Technology, 58(7), 1055–1065.

Kousha, K., & Thelwall, M. (2008). Sources of Google Scholar citations outside the Science Citation Index: A comparison between four science disciplines. Scientometrics, 74(2), 273–294.

Leydesdorff, L., & Etzkowitz, H. (1996). Emergence of a Triple-Helix of university–industry–government relations. Science and Public Policy, 23(5), 279–296.

Meho, L. I., & Yang, K. (2007). A new era in citation and bibliometric analyses: Web of Science, Scopus, and Google Scholar. Journal of the American Society for Information Science and Technology, 58(13), 2105–2125.

Moed, H. F. (2002). The impact-factors debate: The ISI’s uses and limits. Nature, 415, 731–732.

Moxam, H., & Anderson, J. (1992). Peer review. A view from the inside. Science and Technology Policy, 5(1), 7–15.

Nisonger, T. E. (2004). Citation autobiography: An investigation of ISI database coverage in determining author citedness. College & Research Libraries, 65(2), 152–163.

Pendlebury, D. A. (2008). Using bibliometrics in evaluating research. Philadelphia, PA: Research Department, Thomson Scientific.

Pendlebury, D. A. (2009). The use and misuse of journal metrics and other citation indicators. Archivum Immunologiae et Therapiae Experimentalis, 57(1), 1–11.

Pierie, J. P. E. N., Walvoort, H. C., & Overbeke, A. J. P. M. (1996). Readers’ evaluation of effect of peer review and editing on quality of articles in the Nederlands Tijdschrift voor Geneeskunde. Lancet, 348(9040), 1480–1483.

Rehn, C., Kronman, U., & Wadskog, D. (2007). Bibliometric indicators: Definitions and usage at Karolinska Institutet. Stockholm, Sweden: Karolinska Institutet University Library.

Roediger III, H. L. (2006). The h index in Science: A new measure of scholarly contribution. APS Observer: The Academic Observer, 19, 4.

Saad, G. (2006). Exploring the h-index at the author and journal levels using bibliometric data of productive consumer scholars and business-related journals respectively. Scientometrics, 69(1), 117–120.

Schreiber, M. (2008). To share the fame in a fair way, hm modifies h for multi-authored manuscripts. New Journal of Physics, 10, 040201-1–8.

Shatz, D. (2004). Peer review: A critical inquiry. Lanham, MD: Rowman and Littlefield.

Sidiropoulos, A., Katsaros, D., & Manolopoulos, Y. (2006). Generalized h-index for disclosing latent facts in citation networks, arXiv:cs.DL/0607066 v1, 13 Jul 2006.

Testa, J. (2004). The Thomson scientific journal selection process. Retrieved from http://scientific.thomson.com/free/essays/selectionofmaterial/journalselection/.

Thor, A., & Bornmann, L. (2011). The calculation of the single publication h index and related performance measures: A web application based on Google Scholar data. Online Information Review, 35(2), 291–300.

Van Raan, A. F. J. (2005). Fatal attraction: Conceptual and methodological problems in the ranking of universities by bibliometric methods. Scientometrics, 62(1), 133–143.

Van Raan, A. F. J. (2006). Comparison of the Hirsch-index with standard bibliometric indicators and with peer judgement for 147 chemistry research groups. Scientometrics, 67(3), 491–502.

Vaughan, L., & Shaw, D. (2008). A new look at evidence of scholarly citations in citation indexes and from web sources. Scientometrics, 74(2), 317–330.

Wessely, S. (1998). Peer review of grant applications: What do we know? Lancet, 352(9124), 301–305.

Zhang, C.-T. (2009). The e-index, complementing the h-index for excess citations. PLoS ONE, 4(5), e5429.

Acknowledgements

The author acknowledges the research support of Economic Research Southern Africa in completing this paper.

Cite this article

Fedderke, J.W. The objectivity of national research foundation peer review in South Africa assessed against bibliometric indexes. Scientometrics 97, 177–206 (2013). https://doi.org/10.1007/s11192-013-0981-0
