Abstract

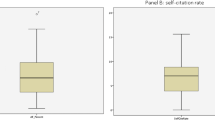

Faculty of 1000 (F1000) is a post-publication peer review website where experts evaluate and rate biomedical publications. F1000 reviewers also assign labels to each paper from a standard list of article types. This research examines the relationship between article types, citation counts and F1000 article factors (FFa). For this purpose, a random sample of F1000 medical articles from the years 2007 and 2008 was studied. In seven out of the nine cases, there were no significant differences between the article types in terms of citation counts and FFa scores. Nevertheless, citation counts and FFa scores were significantly different for two article types, “New finding” and “Changes clinical practice”: FFa scores value the appropriateness of medical research for clinical practice, whereas “New finding” articles are more highly cited. It seems that highlighting key features of medical articles alongside ratings by Faculty members of F1000 could help to reveal the hidden value of some medical papers.

Notes

The CPP/FCSm is an indicator that was developed by the Centre for Science and Technology Studies (CWTS) with the aim of normalizing citation counts across different fields. It has been renamed the crown indicator (see http://arxiv.org/pdf/1003.2167.pdf).

Cite this article

Mohammadi, E., Thelwall, M. Assessing non-standard article impact using F1000 labels. Scientometrics 97, 383–395 (2013). https://doi.org/10.1007/s11192-013-0993-9

DOI: https://doi.org/10.1007/s11192-013-0993-9