Abstract

The mean-based method may be the most popular linear method for field normalization of citation impact. However, its performance is relatively good but not ideal, and it is a special case of the general scaling method y = kx and of the more general affine method y = kx + b, which suggests that more effective linear methods may exist. Aiming to make the citation distribution of each field approximate a common reference distribution through scaling and affine transformations with unknown parameters k and b, we derived scaling and affine methods under separate unweighted and weighted optimization models for 236 Web of Science subject categories. The unweighted-optimization-based scaling and affine methods did not show an advantage over the mean-based method across the board, whereas the weighted-optimization-based affine method showed a decided advantage over the mean-based method along most parts of the distributions. At the same time, the trivial advantage of the weighted-optimization-based scaling method over the mean-based method indirectly validated the good normalization performance of the mean-based method. Based on these results, we conclude that the mean-based method is acceptable for general field normalization, but when a stronger normalization effect is required, the weighted-optimization-based affine method may be a better choice.

Notes

In this paper, "linear methods" include both the scaling method y = kx, which is a linear transformation under the rigorous mathematical definition, and the more general affine method y = kx + b, which is an affine transformation. We discuss this in detail later.

The position in a distribution can be described by the cumulative distribution function (CDF). The empirical CDF value for citation count c in a citation distribution is equal to the proportion of publications whose citation counts are less than or equal to c in this citation distribution.
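The definition above can be illustrated with a minimal Python sketch. The function name `empirical_cdf` and the ten-publication field are hypothetical and serve only as an illustration; they are not taken from the paper's data.

```python
def empirical_cdf(citations, c):
    """Proportion of publications whose citation count is <= c."""
    return sum(1 for x in citations if x <= c) / len(citations)


# Hypothetical field: five papers with 0 citations, four with 1, one with 2.
field = [0, 0, 0, 0, 0, 1, 1, 1, 1, 2]

cdf_at_zero = empirical_cdf(field, 0)  # 5 of 10 papers have <= 0 citations
cdf_at_one = empirical_cdf(field, 1)   # 9 of 10 papers have <= 1 citation
cdf_at_two = empirical_cdf(field, 2)   # all papers have <= 2 citations
```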

There is not even a general consensus about the distribution models of skewed citation distributions (Albarrán et al. 2011; Albarrán and Ruiz-Castillo 2011; Clauset et al. 2009; Franceschet 2011; Gupta et al. 2005; Lehmann et al. 2003; Newman 2005; Perc 2010; Peterson et al. 2010; Redner 1998, 2005; Seglen 1992; Tsallis and Albuquerque 2000; Vieira and Gomes 2010), let alone about the most effective linear method based on those models.

The CCDF value for citation count c in a citation distribution is equal to the proportion of articles in this citation distribution whose citation counts are no less than c.

The field's CCDF curve is a polyline formed by connecting the CCDF values of the distinct citation counts in the citation distribution. Unlike the curve of the reference distribution, the field's CCDF curve is not a fitted curve.

To better understand the concept of "distinct citation count in the citation distribution", we give a hypothetical example here. Suppose that in a citation distribution there are five publications with citation count zero, four publications with citation count one, and one publication with citation count two. Then there are three distinct citation counts, arranged in ascending order, in the citation distribution: zero, one and two. Citation counts zero, one and two are the first, second and third distinct citation counts respectively, that is, $x_1 = 0$, $x_2 = 1$ and $x_3 = 2$. According to the definition in footnote 4, the empirical CCDF values of the three distinct citation counts are $y_1 = 1.0$, $y_2 = 0.5$ and $y_3 = 0.1$.
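The hypothetical example can be reproduced with a short Python sketch. The function name `empirical_ccdf_points` is an assumption made for illustration; the data are the ten publications described in the example.

```python
from collections import Counter


def empirical_ccdf_points(citations):
    """Return (x_i, y_i) pairs: each distinct citation count in ascending
    order with the proportion of publications cited at least x_i times."""
    n = len(citations)
    counts = Counter(citations)
    remaining = n  # publications with citation count >= current x
    points = []
    for x in sorted(counts):
        points.append((x, remaining / n))
        remaining -= counts[x]
    return points


# Five papers with 0 citations, four with 1 citation, one with 2 citations.
field = [0] * 5 + [1] * 4 + [2] * 1
points = empirical_ccdf_points(field)
# points == [(0, 1.0), (1, 0.5), (2, 0.1)]
```

Connecting consecutive points with straight segments gives the polyline form of the field's CCDF curve.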

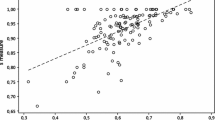

In the regression equation y = kx + b, x and y are values of two different scaling factors. If the intercept b is much smaller than the slope k, the regression equation can be approximately simplified to y = kx, which indicates y is approximately a multiple of x.

We do not plot the results for raw citations in Fig. 4 because the distributions of global top z% percentages under raw citations are much more unbalanced than under the three normalization methods. The steep slope of the percentage distribution under raw citations widens the range of the y coordinates, which would make the performance differences among the three normalization methods difficult to distinguish.

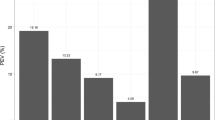

The absolute value of the translation term b in OPTA is small for every field (−1.6 ≤ b ≤ 2.1), so it has a trivial impact on the range of normalized citations.
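A minimal Python sketch may make the role of the translation term concrete. It assumes that the mean-based method divides each citation count by the field mean, so that it is the scaling method with k = 1/mean. The field data and the value of b are hypothetical (b is merely chosen inside the interval reported above), and the function names are illustrative only.

```python
from statistics import mean


def scaling(citations, k):
    """Scaling method y = k * x."""
    return [k * x for x in citations]


def affine(citations, k, b):
    """Affine method y = k * x + b."""
    return [k * x + b for x in citations]


field = [0, 0, 0, 0, 0, 1, 1, 1, 1, 2]  # hypothetical citation counts
k = 1 / mean(field)  # assumption: mean-based method as scaling with k = 1/mean
b = 0.1              # hypothetical translation term, within -1.6 <= b <= 2.1

scaled = scaling(field, k)
shifted = affine(field, k, b)

# The translation term moves both ends of the range by b and leaves its
# width unchanged.
width_scaled = max(scaled) - min(scaled)
width_shifted = max(shifted) - min(shifted)
```

With b = 0 the affine method reduces to the scaling method, which is why the scaling method, and with it the mean-based method, is a special case of the affine one.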

In a citation distribution, distinct citation counts are arranged in ascending order. For example, the order of citation count zero is 1, the order of citation count one is 2, and so on.

For example, minimizing the Kolmogorov–Smirnov distance (K–S statistic) between the field citation distributions and the reference distribution.
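As a rough illustration of such an objective, the sketch below computes the K–S distance between the empirical CDF of a set of normalized citation counts and a continuous reference CDF. The function names, the exponential reference curve and the data are all hypothetical stand-ins; the paper's own reference distribution is not reproduced here.

```python
import math


def ks_distance(values, reference_cdf):
    """Largest vertical gap between the empirical CDF of `values` and a
    continuous reference CDF."""
    ordered = sorted(values)
    n = len(ordered)
    distance = 0.0
    i = 0
    while i < n:
        x = ordered[i]
        j = i
        while j < n and ordered[j] == x:
            j += 1  # skip over ties
        ref = reference_cdf(x)
        # The empirical CDF jumps from i/n to j/n at x; check both sides.
        distance = max(distance, abs(i / n - ref), abs(j / n - ref))
        i = j
    return distance


def exponential_cdf(x):
    """Hypothetical reference distribution with unit mean."""
    return 1.0 - math.exp(-x) if x > 0 else 0.0


field = [0, 0, 0, 0, 0, 1, 1, 1, 1, 2]  # hypothetical citation counts
k = 1 / 0.6                             # hypothetical scaling factor (1 / mean)
d = ks_distance([k * x for x in field], exponential_cdf)
```

An optimization in this spirit would search over k (and b for the affine method) for the values that make `d` as small as possible.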

References

Abramo, G., Cicero, T., & D’Angelo, C. A. (2011). A field-standardized application of DEA to national-scale research assessment of universities. Journal of Informetrics, 5(4), 618–628.

Abramo, G., Cicero, T., & D’Angelo, C. A. (2012). Revisiting the scaling of citations for research evaluation. Journal of Informetrics, 6(4), 470–479.

Albarrán, P., Crespo, J. A., Ortuño, I., & Ruiz-Castillo, J. (2011). The skewness of science in 219 sub-fields and a number of aggregates. Scientometrics, 88(2), 385–397.

Albarrán, P., & Ruiz-Castillo, J. (2011). References made and citations received by scientific articles. Journal of the American Society for Information Science and Technology, 62(1), 40–49.

Bornmann, L. (2013). How to analyze percentile impact data meaningfully in bibliometrics: The statistical analysis of distributions, percentile rank classes and top-cited papers. Journal of the American Society for Information Science and Technology, 64(3), 587–595.

Bornmann, L., Mutz, R., Neuhaus, C., & Daniel, H.-D. (2008). Citation counts for research evaluation: Standards of good practice for analyzing bibliometric data and presenting and interpreting results. Ethics in Science and Environmental Politics, 8(1), 93–102.

Calver, M. C., & Bradley, J. S. (2009). Should we use the mean citations per paper to summarise a journal’s impact or to rank journals in the same field? Scientometrics, 81(3), 611–615.

Castellano, C., & Radicchi, F. (2009). On the fairness of using relative indicators for comparing citation performance in different disciplines. Archivum Immunologiae et Therapiae Experimentalis, 57(2), 85–90.

Clauset, A., Shalizi, C. R., & Newman, M. E. J. (2009). Power-law distributions in empirical data. SIAM Review, 51(4), 661–703.

Crespo, J. A., Li, Y., & Ruiz-Castillo, J. (2013). The measurement of the effect on citation inequality of differences in citation practices across scientific fields. PLoS ONE, 8(3), e58727.

Franceschet, M. (2011). The skewness of computer science. Information Processing and Management, 47(1), 117–124.

Garfield, E. (1979a). Citation indexing—Its theory and application in science, technology and humanities. New York: Wiley & Sons.

Garfield, E. (1979b). Is citation analysis a legitimate evaluation tool? Scientometrics, 1(4), 359–375.

Gupta, H. M., Campanha, J. R., & Pesce, R. A. G. (2005). Power-law distributions for the citation index of scientific publications and scientist. Brazilian Journal of Physics, 35(4A), 981–986.

Katz, J. S. (2000). Scale-independent indicators and research evaluation. Science and Public Policy, 27(1), 23–36.

Lee, G. J. (2010). Assessing publication performance of research units: Extentions through operational research and economic techniques. Scientometrics, 84(3), 717–734.

Lehmann, S., Lautrup, B., & Jackson, A. D. (2003). Citation networks in high energy physics. Physical Review E, 68(2), 026113.

Leydesdorff, L., & Opthof, T. (2010). Normalization at the field level: Fractional counting of citations. Journal of Informetrics, 4(4), 644–646.

Leydesdorff, L., & Opthof, T. (2011). Remaining problems with “New Crown Indicator” (MNCS) of the CWTS. Journal of Informetrics, 5(1), 224–225.

Li, Y., Radicchi, F., Castellano, C., & Ruiz-Castillo, J. (2013). Quantitative evaluation of alternative field normalization procedures. Journal of Informetrics, 7(3), 746–755.

Lundberg, J. (2007). Lifting the crown—Citation z-score. Journal of Informetrics, 1(2), 145–154.

Martin, B. R., & Irvine, J. (1983). Assessing basic research: Some partial indicators of scientific progress in radio astronomy. Research Policy, 12(2), 61–90.

MathWorks. (2014a). Exponential Models. http://www.mathworks.cn/cn/help/curvefit/exponential.html. Retrieved 5 May 2014.

MathWorks. (2014b). Gaussian Models. http://www.mathworks.cn/cn/help/curvefit/gaussian.html. Retrieved 5 May 2014.

MathWorks. (2014c). Rational Models. http://www.mathworks.cn/cn/help/curvefit/rational.html. Retrieved 5 May 2014.

McAllister, P. R., Narin, F., & Corrigan, J. G. (1983). Programmatic evaluation and comparison based on standardized citation scores. IEEE Transactions on Engineering Management, 30(4), 205–211.

Moed, H. F., Burger, W. J. M., Frankfort, J. G., & Van Raan, A. F. J. (1985). The use of bibliometric data for the measurement of university research performance. Research Policy, 14(3), 131–149.

Newman, M. E. J. (2005). Power laws, Pareto distributions and Zipf’s law. Contemporary Physics, 46(5), 323–351.

Perc, M. (2010). Zipf’s law and log-normal distributions in measures of scientific output across fields and institutions: 40 Years of Slovenia’s research as an example. Journal of Informetrics, 4(3), 358–364.

Peterson, G. J., Pressé, S., & Dill, K. A. (2010). Nonuniversal power law scaling in the probability distribution of scientific citations. Proceedings of the National Academy of Sciences of the United States of America, 107(37), 16023–16027.

Radicchi, F., & Castellano, C. (2011). Rescaling citations of publications in physics. Physical Review E, 83(4), 046116.

Radicchi, F., & Castellano, C. (2012). A reverse engineering approach to the suppression of citation biases reveals universal properties of citation distributions. PLoS ONE, 7(3), e33833.

Radicchi, F., Fortunato, S., & Castellano, C. (2008). Universality of citation distributions: Toward an objective measure of scientific impact. Proceedings of the National Academy of Sciences of the United States of America, 105(45), 17268–17272.

Redner, S. (1998). How popular is your paper? An empirical study of the citation distribution. European Physical Journal B, 4(2), 131–134.

Redner, S. (2005). Citation statistics from 110 years of Physical Review. Physics Today, 58(6), 49–54.

Schubert, A., & Braun, T. (1986). Relative indicators and relational charts for comparative assessment of publication output and citation impact. Scientometrics, 9(5–6), 281–291.

Seglen, P. O. (1992). The skewness of science. Journal of the American Society for Information Science, 43(9), 628–638.

Thompson, B. (1993). GRE percentile ranks cannot be added or averaged: A position paper exploring the scaling characteristics of percentile ranks, and the ethical and legal culpabilities created by adding percentile ranks in making “High-Stakes” admission decisions. Paper presented at the Annual Meeting of the Mid-South Educational Research Association, New Orleans, LA.

Tsallis, C., & de Albuquerque, M. P. (2000). Are citations of scientific papers a case of nonextensivity? European Physical Journal B, 13(4), 777–780.

van Eck, N. J., Waltman, L., van Raan, A. F. J., Klautz, R. J. M., & Peul, W. C. (2013). Citation analysis may severely underestimate the impact of clinical research as compared to basic research. PLoS ONE, 8(4), e62395.

van Raan, A. F. J. (2006). Statistical properties of bibliometric indicators: Research group indicator distributions and correlations. Journal of the American Society for Information Science and Technology, 57(3), 408–430.

van Raan, A. F. J., van Eck, N. J., van Leeuwen, T. N., Visser, M. S., & Waltman, L. (2010). The new set of bibliometric indicators of CWTS. Paper presented at the Eleventh International Conference on Science and Technology Indicators, Leiden.

Vieira, E. S., & Gomes, J. A. N. F. (2010). Citations to scientific articles: Its distribution and dependence on the article features. Journal of Informetrics, 4(1), 1–13.

Waltman, L., & van Eck, N. J. (2013a). Source normalized indicators of citation impact: An overview of different approaches and an empirical comparison. Scientometrics, 96(3), 699–716.

Waltman, L., & van Eck, N. J. (2013b). A systematic empirical comparison of different approaches for normalizing citation impact indicators. Journal of Informetrics, 7(4), 833–849.

Waltman, L., van Eck, N. J., van Leeuwen, T. N., Visser, M. S., & van Raan, A. F. J. (2011). Towards a new crown indicator: Some theoretical considerations. Journal of Informetrics, 5(1), 37–47.

Waltman, L., van Eck, N. J., & van Raan, A. F. J. (2012). Universality of citation distributions revisited. Journal of the American Society for Information Science and Technology, 63(1), 72–77.

Zhang, Z., Cheng, Y., & Liu, N. C. (2014). Comparison of the effect of mean-based method and z-score for field normalization of citations at the level of Web of Science subject categories. Scientometrics. doi:10.1007/s11192-014-1294-7.

Acknowledgments

This study is supported by a grant from the National Education Sciences Planning program during the 12th Five-Year period (No. CIA110141).

About this article

Cite this article

Zhang, Z., Cheng, Y. & Liu, N.C. Improving the normalization effect of mean-based method from the perspective of optimization: optimization-based linear methods and their performance. Scientometrics 102, 587–607 (2015). https://doi.org/10.1007/s11192-014-1398-0

DOI: https://doi.org/10.1007/s11192-014-1398-0