Abstract

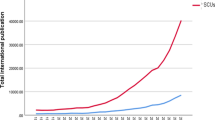

While peer review has been, and continues to be, an accepted approach to evaluation, science and technology (S&T) metrics have been shown to be more accurate and objectively independent evaluation tools. This article draws insights from an example of the use of S&T metrics to assess a national research policy and, subsequently, the achievements of universities under that policy. One of the main findings is that simply setting S&T metrics as objective indicators was accompanied by an increase in research outputs: productivity, impact, and collaboration. However, overall productivity remains very low relative to academic staff size, and achievements differ hugely among universities. Confidence in scientometric evaluation as a performance tool is increasing in universities, and a culture of its usefulness for research policy has spread widely. Surprisingly, the evaluation shows that although S&T metrics increased substantially, funds execution, measured as the rate of payments on the total budget, was less than 15 %, mainly because funding allocations rose unusually sharply compared with the period before the policy, an increase for which universities were not managerially prepared. Finally, a further evaluation should follow in the very short term to quantify the extent of the policy's impact revealed in this annual evaluation.

Notes

DH: Moroccan currency, 1 Euro = 11 DH, 1 US$ = 8 DH.

Acknowledgments

The author is grateful to the anonymous referees for their valuable comments leading to a significant improvement of the paper.

Cite this article

Bouabid, H. Science and technology metrics for research policy evaluation: some insights from a Moroccan experience. Scientometrics 101, 899–915 (2014). https://doi.org/10.1007/s11192-014-1407-3

DOI: https://doi.org/10.1007/s11192-014-1407-3