Abstract

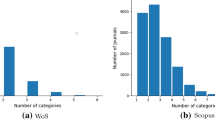

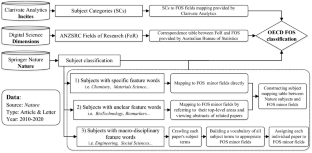

The classification of scientific literature into appropriate disciplines is an essential precondition of valid scientometric analysis and is significant for the practice of research assessment. In this paper, we compared the classification of publications in Nature based on three different approaches across three different systems. These were: Web of Science (WoS) subject categories (SCs) provided by InCites, which are based on the disciplinary affiliation of the majority of a paper’s references; the Fields of Research (FoR) classification provided by Dimensions, which is derived from machine learning techniques; and the subject classification provided by Springer Nature, which is based on author-selected subject terms in the publisher’s tagging system. The results show, first, that the single-category assignment in InCites is not appropriate for a large number of papers. Second, only 27% of papers share the same fields between the FoR classification in Dimensions and the subject classification in Springer Nature, revealing great inconsistency between these machine-determined and human-judged approaches. Being aware of the characteristics and limitations of the ways we categorize research publications is important to research management.
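The two comparisons summarized above can be illustrated with a minimal Python sketch, assuming simplified inputs. `majority_category` is a hypothetical simplification of a majority-of-references rule for single-category assignment, not the actual InCites procedure; it breaks ties alphabetically purely so the result is deterministic. `exact_match_share` computes the share of papers whose field sets are identical in two systems, assuming both systems' labels have already been mapped onto a common set of fields. All paper IDs, category names, and field labels are toy data.

```python
from collections import Counter


def majority_category(reference_categories):
    """Hypothetical majority-of-references rule: assign one category to a
    paper by majority vote over the categories of its cited references.

    Returns (category, share), where share is the fraction of references
    falling in the winning category. Ties are broken alphabetically."""
    if not reference_categories:
        return None, 0.0
    counts = Counter(reference_categories)
    top = max(counts.values())
    # Deterministic tie-break: alphabetically first among the tied categories.
    winner = min(cat for cat, n in counts.items() if n == top)
    return winner, top / len(reference_categories)


def exact_match_share(system_a, system_b):
    """Fraction of papers present in both mappings whose sets of fields are
    identical (order-insensitive) in the two systems."""
    common = system_a.keys() & system_b.keys()
    if not common:
        return 0.0
    same = sum(1 for pid in common if set(system_a[pid]) == set(system_b[pid]))
    return same / len(common)


# Toy paper whose references span three categories.
refs = ["Neurosciences"] * 5 + ["Economics"] * 4 + ["Psychology"]
print(majority_category(refs))  # ('Neurosciences', 0.5)

# Toy field assignments for three papers, already mapped to common labels.
fields_a = {"p1": ["Biology"], "p2": ["Physics", "Chemistry"], "p3": ["Medicine"]}
fields_b = {"p1": ["Biology"], "p2": ["Physics"], "p3": ["Biology"]}
print(round(exact_match_share(fields_a, fields_b), 3))  # 0.333
```

In the toy example the paper is assigned only to Neurosciences even though 40% of its references point to Economics, which is the kind of information a single-category assignment discards.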

Notes

The document types, article and letter, are defined on the Nature website. Both are peer-reviewed research papers published in Nature.

The ANZSRC FoR classification has now been updated to a 2020 version, which differs significantly from the 2008 version. However, since our data were exported from Dimensions, in which only FoR 2008 is available, we applied FoR 2008 in this study.

Authors are required to choose the most relevant subject categories (including the top-level subject areas, the second level subjects, and the more fine-grained levels of specific subject terms) when submitting their manuscripts to Springer Nature. These fine-grained subject terms are presented on the webpage of each paper.

References

Abramo, G., D’Angelo, C. A., & Zhang, L. (2018). A comparison of two approaches for measuring interdisciplinary research output: The disciplinary diversity of authors vs the disciplinary diversity of the reference list. Journal of Informetrics, 12(4), 1182–1193.

Ahlgren, P., Chen, Y., Colliander, C., et al. (2020). Enhancing direct citations: A comparison of relatedness measures for community detection in a large set of PubMed publications. Quantitative Science Studies, 1(2), 714–729.

Baharudin, B., Lee, L. H., & Khan, K. (2010). A review of machine learning algorithms for text-documents classification. Journal of Advances in Information Technology, 1, 4–20.

Ballesta, S., Shi, W., Conen, K. E., et al. (2020). Values encoded in orbitofrontal cortex are causally related to economic choices. Nature, 588(7838), 450–453.

Bornmann, L. (2018). Field classification of publications in Dimensions: A first case study testing its reliability and validity. Scientometrics, 117(1), 637–640.

Boyack, K. W., Newman, D., Duhon, R. J., et al. (2011). Clustering more than two million biomedical publications: Comparing the accuracies of nine text-based similarity approaches. PLoS ONE, 6(3), e18029.

Carley, S., Porter, A. L., Rafols, I., et al. (2017). Visualization of disciplinary profiles: Enhanced science overlay maps. Journal of Data and Information Science, 2(3), 68–111.

Chapman, H. N., Fromme, P., Barty, A., et al. (2011). Femtosecond X-ray protein nanocrystallography. Nature, 470(7332), 73–77.

Dehmamy, N., Milanlouei, S., & Barabási, A.-L. (2018). A structural transition in physical networks. Nature, 563(7733), 676–680.

Eykens, J., Guns, R., & Engels, T. C. E. (2019). Article level classification of publications in sociology: An experimental assessment of supervised machine learning approaches. In: Proceedings of the 17th International Conference on Scientometrics & Informetrics, Rome (Italy), 2–5 September, 738–743.

Glänzel, W., & Debackere, K. (2021). Various aspects of interdisciplinarity in research and how to quantify and measure those. Scientometrics. https://doi.org/10.1007/s11192-021-04133-4

Glänzel, W., & Schubert, A. (2003). A new classification scheme of science fields and subfields designed for scientometric evaluation purposes. Scientometrics, 56(3), 357–367.

Glänzel, W., Thijs, B., & Huang, Y. (2021). Improving the precision of subject assignment for disparity measurement in studies of interdisciplinary research. FEB Research Report MSI_2104, 1–12.

Gläser, J., Glänzel, W., & Scharnhorst, A. (2017). Same data—Different results? Towards a comparative approach to the identification of thematic structures in science. Scientometrics, 111(2), 981–998.

Gómez-Núñez, A. J., Batagelj, V., Vargas-Quesada, B., et al. (2014). Optimizing SCImago Journal & Country Rank classification by community detection. Journal of Informetrics, 8(2), 369–383.

Gómez-Núñez, A. J., Vargas-Quesada, B., & de Moya-Anegón, F. (2016). Updating the SCImago journal and country rank classification: A new approach using Ward’s clustering and alternative combination of citation measures. Journal of the Association for Information Science and Technology, 67(1), 178–190.

Haunschild, R., Schier, H., Marx, W., et al. (2018). Algorithmically generated subject categories based on citation relations: An empirical micro study using papers on overall water splitting. Journal of Informetrics, 12(2), 436–447.

Janssens, F., Zhang, L., De Moor, B., et al. (2009). Hybrid clustering for validation and improvement of subject-classification schemes. Information Processing & Management, 45(6), 683–702.

Kandimalla, B., Rohatgi, S., Wu, J., et al. (2021). Large scale subject category classification of scholarly papers with deep attentive neural networks. Frontiers in Research Metrics and Analytics, 5, 31.

Klavans, R., & Boyack, K. W. (2017). Which type of citation analysis generates the most accurate taxonomy of scientific and technical knowledge? Journal of the Association for Information Science and Technology, 68(4), 984–998.

Leydesdorff, L., & Bornmann, L. (2016). The operationalization of “fields” as WoS subject categories (WCs) in evaluative bibliometrics: The cases of “library and information science” and “science & technology studies.” Journal of the Association for Information Science and Technology, 67(3), 707–714.

Leydesdorff, L., & Rafols, I. (2009). A global map of science based on the ISI subject categories. Journal of the American Society for Information Science and Technology, 60(2), 348–362.

Liu, X., Glänzel, W., & De Moor, B. (2012). Optimal and hierarchical clustering of large-scale hybrid networks for scientific mapping. Scientometrics, 91(2), 473–493.

McGillivray, B., & Astell, M. (2019). The relationship between usage and citations in an open access mega-journal. Scientometrics, 121(2), 817–838.

Milojević, S. (2020). Practical method to reclassify Web of Science articles into unique subject categories and broad disciplines. Quantitative Science Studies, 1(1), 183–206.

Nam, S., Jeong, S., Kim, S.-K., et al. (2016). Structuralizing biomedical abstracts with discriminative linguistic features. Computers in Biology and Medicine, 79, 276–285.

Park, I.-U., Peacey, M. W., & Munafò, M. R. (2014). Modelling the effects of subjective and objective decision making in scientific peer review. Nature, 506(7486), 93–96.

Porter, A. L., & Rafols, I. (2009). Is science becoming more interdisciplinary? Measuring and mapping six research fields over time. Scientometrics, 81(3), 719–745.

Rafols, I., & Leydesdorff, L. (2009). Content-based and algorithmic classifications of journals: Perspectives on the dynamics of scientific communication and indexer effects. Journal of the American Society for Information Science and Technology, 60(9), 1823–1835.

Roach, N. T., Venkadesan, M., Rainbow, M. J., et al. (2013). Elastic energy storage in the shoulder and the evolution of high-speed throwing in Homo. Nature, 498(7455), 483–486.

Rutishauser, U., Ross, I. B., Mamelak, A. N., et al. (2010). Human memory strength is predicted by theta-frequency phase-locking of single neurons. Nature, 464(7290), 903–907.

Shu, F., Julien, C.-A., Zhang, L., et al. (2019). Comparing journal and paper level classifications of science. Journal of Informetrics, 13(1), 202–225.

Shu, F., Ma, Y., Qiu, J., et al. (2020). Classifications of science and their effects on bibliometric evaluations. Scientometrics, 125(3), 2727–2744.

Small, H. (1973). Co-citation in the scientific literature: A new measure of the relationship between two documents. Journal of the American Society for Information Science, 24(4), 265–269.

Szomszor, M., Adams, J., Pendlebury, D. A., et al. (2021). Data categorization: Understanding choices and outcomes. The Global Research Report from the Institute for Scientific Information.

Tannenbaum, C., Ellis, R. P., Eyssel, F., et al. (2019). Sex and gender analysis improves science and engineering. Nature, 575(7781), 137–146.

Thijs, B., Zhang, L., & Glänzel, W. (2015). Bibliographic coupling and hierarchical clustering for the validation and improvement of subject-classification schemes. Scientometrics, 105(3), 1453–1467.

Traag, V. A., Waltman, L., & van Eck, N. J. (2019). From Louvain to Leiden: Guaranteeing well-connected communities. Scientific Reports, 9(1), 5233.

Van Eck, N. J., Waltman, L., Van Raan, A. F. J., et al. (2013). Citation analysis may severely underestimate the impact of clinical research as compared to basic research. PLoS ONE, 8(4), e0062395.

Waltman, L., & van Eck, N. J. (2012). A new methodology for constructing a publication-level classification system of science. Journal of the American Society for Information Science and Technology, 63(12), 2378–2392.

Waltman, L., & Van Eck, N. J. (2013). Source normalized indicators of citation impact: An overview of different approaches and an empirical comparison. Scientometrics, 96(3), 699–716.

Waltman, L., & van Eck, N. J. (2019). Field normalization of scientometric indicators. In W. Glänzel, H. F. Moed, U. Schmoch, et al. (Eds.), Springer Handbook of Science and Technology Indicators (pp. 281–300). Springer.

Waltman, L., Van Eck, N. J., & Noyons, E. C. M. (2010). A unified approach to mapping and clustering of bibliometric networks. Journal of Informetrics, 4(4), 629–635.

Zhang, L., Janssens, F., Liang, L., et al. (2010). Journal cross-citation analysis for validation and improvement of journal-based subject classification in bibliometric research. Scientometrics, 82(3), 687–706.

Zhang, L., Rousseau, R., & Glänzel, W. (2016). Diversity of references as an indicator of the interdisciplinarity of journals: Taking similarity between subject fields into account. Journal of the Association for Information Science and Technology, 67(5), 1257–1265.

Zhang, L., Sun, B., Chinchilla-Rodríguez, Z., et al. (2018). Interdisciplinarity and collaboration: On the relationship between disciplinary diversity in departmental affiliations and reference lists. Scientometrics, 117(1), 271–291.

Zhang, L., Sun, B., Jiang, L., et al. (2021a). On the relationship between interdisciplinarity and impact: Distinct effects on academic and broader impact. Research Evaluation, 30(3), 256–268.

Zhang, L., Sun, B., Shu, F., et al. (2021b). Comparing paper level classifications across different methods and systems: An investigation on Nature publications. In: Proceedings of the 18th International Conference on Scientometrics and Informetrics, Leuven (Belgium), 12–15 July, 1319–1324.

Acknowledgements

The present study is an extended version of an article presented at the 18th International Conference on Scientometrics and Informetrics, Leuven (Belgium), 12–15 July 2021 (Zhang et al. 2021b). The authors would like to acknowledge support from the National Natural Science Foundation of China (Grant Nos. 71974150, 72004169, 72074029), the National Laboratory Centre for Library and Information Science at Wuhan University, and the project “Interdisciplinarity & Impact” (2019–2023) funded by the Flemish Government.

Ethics declarations

Conflict of interest

The first author (Lin Zhang) is the Co-Editor-in-Chief of Scientometrics.

About this article

Cite this article

Zhang, L., Sun, B., Shu, F. et al. Comparing paper level classifications across different methods and systems: an investigation of Nature publications. Scientometrics 127, 7633–7651 (2022). https://doi.org/10.1007/s11192-022-04352-3

DOI: https://doi.org/10.1007/s11192-022-04352-3