Abstract

As an essential feature of smart cyber-physical systems (CPSs), self-healing behaviors play a major role in maintaining the normality of CPSs in the presence of faults and uncertainties. To ensure their reliability, it is important to test whether self-healing behaviors can correctly heal faults under uncertainties. However, the autonomy of self-healing behaviors and the impact of uncertainties make such testing challenging. To this end, we devise a fragility-oriented testing approach, which comprises two novel algorithms: fragility-oriented testing (FOT) and uncertainty policy optimization (UPO). The two algorithms use the fragility obtained from test executions to learn the optimal policies for invoking operations and introducing uncertainties, respectively, so as to detect faults effectively. We evaluated their performance by comparing them against a coverage-oriented testing (COT) algorithm and a random uncertainty generation method (R). The evaluation results showed that the fault detection ability of FOT+UPO was significantly higher than that of FOT+R, COT+UPO, and COT+R in 73 out of 81 cases. In these 73 cases, FOT+UPO detected more than 70% of faults, while the others detected at most 17% of faults.

Notes

Though call, change, and signal event occurrences can all serve as triggers to model expected behaviors, only transitions triggered by call event occurrences can be activated from the outside. Change events and signal events belong to the internal behaviors of the system under test (SUT), which cannot be controlled for testing.

When a collision is avoided, the copter returns to the flight mode. Hence, no testing interface needs to be invoked to trigger \( \mathbb{t}_{12} \). When the flight mode is changed back, TM-Executor generates a corresponding change event to activate the transition. As this event comes from inside, we do not capture it in DFSM.

The distance function for the greater-than operator is \( dis(x > y) = \frac{y - x + k}{y - x + k + 1} \) when \( x \le y \), where \( k \) is an arbitrary positive value. Here, we set \( k = 1 \). More details are in Ali et al. (2013).
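As a rough illustration of this note, the following Python sketch evaluates the distance for the greater-than operator with k = 1. The function name `greater_distance` is hypothetical, and returning 0 when x > y (the constraint already holds) is an assumption that the note does not state; the note only defines the case x ≤ y.

```python
def greater_distance(x: float, y: float, k: float = 1.0) -> float:
    """Distance of the constraint x > y from being satisfied.

    For x <= y this follows the formula in the note:
        (y - x + k) / (y - x + k + 1)
    For x > y we return 0.0, which is an assumption of this sketch
    (the constraint is already satisfied); the note does not cover it.
    """
    if k <= 0:
        raise ValueError("k must be a positive value")
    if x > y:
        return 0.0  # assumed: no distance left when the constraint holds
    gap = y - x + k
    return gap / (gap + 1.0)


# With k = 1, equal operands give 1 / 2, and a gap of 2 gives 3 / 4.
print(greater_distance(5, 5))
print(greater_distance(3, 5))
```

Under this formula the value stays below 1 and grows as y moves further above x, so a larger value indicates a constraint that is further from being satisfied.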

References

Ali, S., Iqbal, M. Z., Arcuri, A., & Briand, L. C. (2013). Generating test data from OCL constraints with search techniques. IEEE Transactions on Software Engineering, 39(10), 1376–1402.

Anzai, Y. (2012). Pattern recognition and machine learning. New York: Elsevier.

Araiza-Illan, D., Pipe, A. G., & Eder, K. (2016). Intelligent agent-based stimulation for testing robotic software in human-robot interactions. In Proceedings of the 3rd Workshop on Model-Driven Robot Software Engineering. ACM.

Arcuri, A., & Briand, L. (2011). A practical guide for using statistical tests to assess randomized algorithms in software engineering. In 33rd International Conference on Software Engineering (ICSE) (pp. 1–10), IEEE.

Aronson, J. E., Liang, T.-P., & Turban, E. (2005). Decision support systems and intelligent systems. Pearson Prentice-Hall.

Arulkumaran, K., Deisenroth, M. P., Brundage, M., & Bharath, A. A. (2017). A brief survey of deep reinforcement learning. arXiv preprint arXiv:1708.05866.

Asadollah, S. A., Inam, R., & Hansson, H. (2015). A survey on testing for cyber physical system. In The 27th IFIP International Conference on Testing Software and Systems (pp. 194–207). Sharjah and Dubai: Springer.

Bures, T., Weyns, D., Berger, C., Biffl, S., Daun, M., Gabor, T., et al. (2015). Software engineering for smart cyber-physical systems--towards a research agenda: report on the First International Workshop on Software Engineering for Smart CPS. ACM SIGSOFT Software Engineering Notes, 40(6), 28–32.

de Vries, R. G., & Tretmans, J. (2000). On-the-fly conformance testing using SPIN. International Journal on Software Tools for Technology Transfer (STTT), 2(4), 382–393.

Demuth, B., & Wilke, C. (2009). Model and object verification by using Dresden OCL. In Proceedings of the Russian-German Workshop Innovation Information Technologies: Theory and Practice, Ufa, Russia (pp. 687–690). Citeseer.

Duan, Y., Chen, X., Houthooft, R., Schulman, J., & Abbeel, P. (2016). Benchmarking deep reinforcement learning for continuous control. In International Conference on Machine Learning (pp. 1329–1338).

Enoiu, E. P., Cauevic, A., Sundmark, D., & Pettersson, P. (2016). A controlled experiment in testing of safety-critical embedded software. In 2016 IEEE International Conference on Software Testing, Verification and Validation (ICST) (pp. 1–11). IEEE.

Esfahani, N., & Malek, S. (2013). Uncertainty in self-adaptive software systems. In Software Engineering for Self-Adaptive Systems II (pp. 214–238). Springer.

Fredericks, E. M., DeVries, B., & Cheng, B. H. (2014). Towards run-time adaptation of test cases for self-adaptive systems in the face of uncertainty. In Proceedings of the 9th International Symposium on Software Engineering for Adaptive and Self-Managing Systems (pp. 17–26). ACM.

Glynn, P. W., & Iglehart, D. L. (1989). Importance sampling for stochastic simulations. Management Science, 35(11), 1367–1392.

Grieskamp, W., Hierons, R. M., & Pretschner, A. (2011). Model-based testing in practice. In Dagstuhl Seminar Proceedings. Schloss Dagstuhl-Leibniz-Zentrum fuer Informatik.

Groce, A., Fern, A., Pinto, J., Bauer, T., Alipour, A., Erwig, M., et al. (2012). Lightweight automated testing with adaptation-based programming. In IEEE 23rd International Symposium on Software Reliability Engineering (ISSRE) (pp. 161–170). IEEE.

Hestenes, M. R., & Stiefel, E. (1952). Methods of conjugate gradients for solving linear systems. Journal of Research of the National Bureau of Standards, NIST, 49, 409–435.

Kaelbling, L. P., Littman, M. L., & Moore, A. W. (1996). Reinforcement learning: a survey. Journal of Artificial Intelligence Research, 4, 237–285.

Kakade, S., & Langford, J. (2002). Approximately optimal approximate reinforcement learning. In The Nineteenth International Conference on Machine Learning (ICML) (pp. 267–274). PMLR.

Larsen, K. G., Mikucionis, M., & Nielsen, B. (2004). Online testing of real-time systems using uppaal. In International Workshop on Formal Approaches to Software Testing (pp. 79–94). Springer.

Li, Y. (2017). Deep reinforcement learning: an overview. arXiv preprint arXiv:1701.07274.

Ma, T., Ali, S., Yue, T., & Elaasar, M. (2017). Fragility-oriented testing with model execution and reinforcement learning. In IFIP International Conference on Testing Software and Systems (pp. 3–20). Springer.

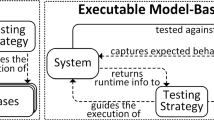

Ma, T., Ali, S., & Yue, T. (2018). Modeling foundations for executable model-based testing of self-healing cyber-physical systems. In International Journal on Software and Systems Modeling (SoSyM) (pp. 1–31). Berlin, Heidelberg: Springer.

Mooney, C. Z., Duval, R. D., & Duval, R. (1993). Bootstrapping: a nonparametric approach to statistical inference (No. 95). Newbury Park: Sage.

Ramirez, A. J., Jensen, A. C., & Cheng, B. H. (2012). A taxonomy of uncertainty for dynamically adaptive systems. In ICSE Workshop on Software Engineering for Adaptive and Self-Managing Systems (SEAMS) (pp. 99–108). IEEE.

Schulman, J., Levine, S., Abbeel, P., Jordan, M., & Moritz, P. (2015). Trust region policy optimization. In Proceedings of the 32nd International Conference on Machine Learning (ICML-15) (pp. 1889–1897). PMLR.

Schupp, S., Ábrahám, E., Chen, X., Makhlouf, I. B., Frehse, G., Sankaranarayanan, S., et al. (2015). Current challenges in the verification of hybrid systems. In International Workshop on Design, Modeling, and Evaluation of Cyber Physical Systems (pp. 8–24). Springer.

Sutton, R. S., & Barto, A. G. (1998). Reinforcement learning: an introduction (1st edn.). Cambridge: MIT Press.

Tatibouet, J. (2016). Moka–a simulation platform for Papyrus based on OMG specifications for executable UML. In 2016 EclipseCon Europe Conference. Ludwigsburg: OSGI.

Utting, M., Pretschner, A., & Legeard, B. (2012). A taxonomy of model-based testing approaches. Software Testing, Verification and Reliability, 22(5), 297–312.

Veanes, M., Roy, P., & Campbell, C. (2006). Online testing with reinforcement learning. In Formal Approaches to Software Testing and Runtime Verification (pp. 240–253).

Wohlin, C., Runeson, P., Höst, M., Ohlsson, M. C., Regnell, B., & Wesslén, A. (2012). Experimentation in software engineering (1st edn.). New York: Springer Science & Business Media.

Yang, W., Xu, C., Liu, Y., Cao, C., Ma, X., & Lu, J. (2014). Verifying self-adaptive applications suffering uncertainty. In Proceedings of the 29th ACM/IEEE international conference on Automated software engineering (pp. 199–210). ACM.

Yegnanarayana, B. (2009). Artificial neural networks (1st edn.). New Delhi: PHI Learning Pvt. Ltd.

Zhang, M., Selic, B., Ali, S., Yue, T., Okariz, O., & Norgren, R. (2015). Understanding uncertainty in cyber-physical systems: a conceptual model. In Modelling Foundations and Applications: 12th European Conference. ECMFA Springer.

Zhang, M., Ali, S., & Yue, T. (2017). Uncertainty-wise test case generation and minimization for cyber-physical systems: a multi-objective search-based approach. Simula. Technical report: https://www.simula.no/publications/uncertainty-based-test-case-generation-and-minimization-cyber-physical-systems-multi.

Zheng, X., & Julien, C. (2015). Verification and validation in cyber physical systems: research challenges and a way forward. In Proceedings of the First International Workshop on Software Engineering for Smart Cyber-Physical Systems (pp. 15–18). IEEE Press.

Funding

This research is funded by the Research Council of Norway (RCN) under the MBT4CPS project (grant no. 240013/O70). Tao Yue and Shaukat Ali are also supported by the RCN-funded Zen-Configurator project (grant no. 240024/F20), the RFF Hovedstaden-funded MBE-CR project (grant no. 239063), and the Certus SFI and EU Horizon 2020-funded U-Test project (grant no. 645463).

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Cite this article

Ma, T., Ali, S., Yue, T. et al. Testing self-healing cyber-physical systems under uncertainty: a fragility-oriented approach. Software Qual J 27, 615–649 (2019). https://doi.org/10.1007/s11219-018-9437-3
