Abstract

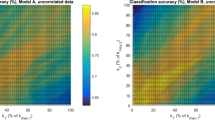

A general inductive Bayesian classification framework is considered, using a simultaneous predictive distribution for test items. We introduce a principle of generative supervised and semi-supervised classification based on marginalizing the joint posterior distribution of labels for all test items. The simultaneous and marginalized classifiers arise under different loss functions, while both jointly acknowledge all uncertainty about the labels of the test items and the generating probability measures of the classes. We illustrate, for data from multiple finite alphabets, that when training data are sparse such classifiers achieve higher correct classification rates than a standard marginal predictive classifier, which labels all test items independently. In the supervised case for multiple finite alphabets, the simultaneous and the marginal classifiers are proven to become equal under generalized exchangeability as the amount of training data increases. Hence, for finite sets of training data, the marginal classifier can be interpreted as an asymptotic approximation to the simultaneous classifier. It is also shown that such convergence is not guaranteed in the semi-supervised setting, where the marginal classifier does not provide a consistent approximation.
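The difference between labeling test items one at a time and labeling them jointly can be made concrete with a small supervised sketch. It rests on assumptions of our own, not the paper's exact model: each item is a tuple with one symbol per feature, features are independent within a class, each feature has a symmetric Dirichlet prior (`ALPHA = 1.0`), and every joint labeling is a priori equally likely. All function names and the toy data are hypothetical. The marginal classifier conditions each test item on the training data only; the simultaneous predictive probability of a labeling is computed by the chain rule, so later test items in a class also condition on earlier test items assigned to it. The marginalized classifier takes, for each item, the label with the largest marginal under the joint labeling posterior. Labelings are enumerated by brute force, so this only suits very small test sets.

```python
import itertools
import math
from collections import Counter

# Sketch assumptions (not the paper's exact model): items are tuples with one
# symbol per feature; feature j has a finite alphabet of size alphabet_sizes[j];
# within a class, features are independent, each with a symmetric
# Dirichlet(ALPHA) prior; every joint labeling is a priori equally likely.
ALPHA = 1.0


def class_stats(items, n_features):
    """Fresh per-feature symbol counts and the item count for one class."""
    counts = [Counter(item[j] for item in items) for j in range(n_features)]
    return counts, len(items)


def log_predictive(x, counts, n, alphabet_sizes):
    """Log posterior predictive probability of item x under one class."""
    return sum(
        math.log((counts[j][s] + ALPHA) / (n + alphabet_sizes[j] * ALPHA))
        for j, s in enumerate(x)
    )


def marginal_classifier(test_items, training, alphabet_sizes):
    """Label each test item independently, given the training data only."""
    stats = {c: class_stats(items, len(alphabet_sizes)) for c, items in training.items()}
    labels = []
    for x in test_items:
        scores = {c: log_predictive(x, counts, n, alphabet_sizes)
                  for c, (counts, n) in stats.items()}
        labels.append(max(scores, key=scores.get))
    return labels


def log_joint_predictive(test_items, labels, training, alphabet_sizes):
    """Log simultaneous predictive probability of one joint labeling."""
    total = 0.0
    for c, items in training.items():
        counts, n = class_stats(items, len(alphabet_sizes))
        for x, label in zip(test_items, labels):
            if label != c:
                continue
            total += log_predictive(x, counts, n, alphabet_sizes)
            # Chain rule: later test items in class c also condition on x.
            for j, s in enumerate(x):
                counts[j][s] += 1
            n += 1
    return total


def labeling_posterior(test_items, training, alphabet_sizes):
    """Posterior over all k**m joint labelings (brute force, tiny m only)."""
    labelings = list(itertools.product(list(training), repeat=len(test_items)))
    logs = [log_joint_predictive(test_items, lab, training, alphabet_sizes)
            for lab in labelings]
    top = max(logs)
    weights = [math.exp(v - top) for v in logs]
    z = sum(weights)
    return {lab: w / z for lab, w in zip(labelings, weights)}


def simultaneous_classifier(test_items, training, alphabet_sizes):
    """Jointly most probable labeling of all test items."""
    post = labeling_posterior(test_items, training, alphabet_sizes)
    return list(max(post, key=post.get))


def marginalized_classifier(test_items, training, alphabet_sizes):
    """Per-item label maximizing the marginal of the joint labeling posterior."""
    post = labeling_posterior(test_items, training, alphabet_sizes)
    labels = []
    for i in range(len(test_items)):
        marg = Counter()
        for lab, p in post.items():
            marg[lab[i]] += p
        labels.append(max(training, key=lambda c: marg[c]))
    return labels


if __name__ == "__main__":
    # Hypothetical toy data: one binary feature; class "B" is sparsely trained.
    training = {"A": [(0,)] * 6 + [(1,)] * 4, "B": [(0,), (1,)]}
    test = [(0,)] * 5
    print(marginal_classifier(test, training, [2]))      # ['A', 'A', 'A', 'A', 'A']
    print(simultaneous_classifier(test, training, [2]))  # ['B', 'B', 'B', 'B', 'B']
    print(marginalized_classifier(test, training, [2]))  # ['B', 'B', 'B', 'B', 'B']
```

In this toy case the marginal rule prefers A for every item because, in isolation, 7/12 > 2/4. Jointly, labeling all five items B has predictive probability 3/28 ≈ 0.107 against 11/104 ≈ 0.106 for all A, since the sparsely trained class B sharpens as test items are assigned to it. The example only shows that the rules can disagree; it says nothing about which labeling is correct, and the k**m enumeration would have to be replaced by a search or sampling strategy for realistic test sets.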




About this article

Cite this article

Corander, J., Cui, Y., Koski, T. et al. Have I seen you before? Principles of Bayesian predictive classification revisited. Stat Comput 23, 59–73 (2013). https://doi.org/10.1007/s11222-011-9291-7


DOI: https://doi.org/10.1007/s11222-011-9291-7