Abstract

Subordinators are infinitely divisible distributions on the positive half-line. They are often used as mixing distributions in Poisson mixtures. We show that appropriately scaled Poisson mixtures can approximate the mixing subordinator and we derive a rate of convergence in \(L^p\) for each \(p\in [1,\infty ]\). This includes the Kolmogorov and Wasserstein metrics as special cases. As an application, we develop an approach for approximate simulation of the underlying subordinator. In the interest of generality, we present our results in the context of more general mixtures, specifically those that can be represented as differences of randomly stopped Lévy processes. Particular focus is given to the case where the subordinator belongs to the class of tempered stable distributions.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Subordinators are a wide class of positive random variables. They correspond to the class of infinitely divisible distributions on the positive half-line. See Sato (1999) or Steutel and van Harn (2004) for many properties and examples. Subordinators have many uses and applications. They are often used as mixing distributions in the context of Poisson mixtures, which are common models for over-dispersed count data. We show that appropriately scaled Poisson mixtures can approximate the mixing subordinator and we derive a rate of convergence in \(L^p\) for each \(p\in [1,\infty ]\). When \(p=\infty \) this is the Kolmogorov metric and when \(p=1\) it is the Kantorovich or Wasserstein metric. As an application, we develop a methodology for approximate simulation of the underlying subordinator. In the interest of generality, we present our theoretical results in the context of more general mixtures. Specifically, those that can be represented as differences of randomly stopped Lévy processes.

We are particularly interested by the case where the mixing distribution is tempered stable (TS). TS distributions form a large class of models, which modify the tails of infinite variance stable distributions to make them lighter. This leads to more realistic models for many application areas. We are motivated by applications to finance, where TS subordinators are common models for stochastic volatility (Barndorff-Nielsen and Shephard 2001, 2003) and their bilateral versions are often used to model financial returns (Küchler and Tappe 2013, 2014). Tweedie distributions introduced in Tweedie (1984) are, perhaps, the earliest class of TS distributions to be studied. More general classes were introduced in Rosiński (2007) and Rosiński and Sinclair (2010). See also the monograph (Grabchak 2016) and the references therein. Poisson mixtures, where the mixing distribution is TS are called discrete TS distributions, see Grabchak (2018) and Grabchak (2022). In the special case where the mixing distribution belongs to the Tweedie class, they are called Poisson-Tweedie. See Baccini et al. (2016) for a recent overview.

Formally, a Poisson mixture can be described as follows. Let X be a subordinator and, independent of X, let \(\{Z(t):t\ge 0\}\) be a Poisson process with rate 1. The Poisson mixture is then given by Z(X). For a survey of Poisson mixtures see Karlis and Xekalaki (2005) or Sect. VI.7 of Steutel and van Harn (2004). We consider a more general bilateral situation, where \(X_+,X_-\) are independent subordinators and, independent of these, \(Z^+=\{Z^+(t):t\ge 0\}\) and \(Z^-=\{Z^-(t):t\ge 0\}\) are independent Lévy processes. Later we will allow these processes to be quite general, but, for the moment, assume that they are independent Poisson processes each with rate 1 and set

This gives a random variable whose support is concentrated on the lattice \(a\mathbb {Z}=\{0,\pm a,\pm 2a,\pm 3a,\dots \}\).

Distributions of this type are important in the context of high frequency finance. In practice, trades on an exchange cannot be for arbitrary amounts. There is a smallest price increment called the ‘tick size,’ and all prices changes must be in the form of an integer times the tick size. Thus, if a is the tick size, then price changes must take values on the lattice \(a\mathbb {Z}\). For this reason, distributions of the form (1) are often used to model high frequency price changes, see Barndorff-Nielsen et al. (2012) or Koopman et al. (2017). On the other hand, less frequent price changes are typically modeled using continuous distributions, often bilateral subordinators. The idea is that over larger time frames, the tick size does not matter (so long as it is relatively small). We explain the transition from discrete to continuous models, by showing that

Further, this result gives an approximate simulation method for simulating subordinators. The ability to simulate subordinators is particularly important as these distributions form the building blocks from which other distributions, including many multivariate distributions, can be simulated, see Xia and Grabchak (2022).

The purpose of this paper is two-folds. First, we prove that (2) holds and we derive a rate of convergence in \(L^p\) for each \(p\in [1,\infty ]\). In the interest of generality, we do not require \(Z^+\) and \(Z^-\) to be Poisson processes, but allow them to be more general Lévy processes. Second, we use this convergence to justify an approximate simulation method. We show that one can approximately simulate \( X^+-X^-\) by simulating \(a Z^+(X^+/a)- a Z^-(X^-/a)\) for a relatively small a and we derive approaches for simulating the latter. This is particularly important for TS distributions, where, with the exception of some relatively simple special cases (Hofert 2011; Grabchak 2021a), no exact simulation methods are known.

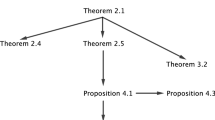

The rest of this paper is organized as follows. In Sect. 2 we recall some basic facts about infinitely divisible distributions and subordinators and in Sect. 3 we give our main theoretical results. In Sect. 4 we discuss Poisson mixtures more broadly and give our methodology for approximate simulation of the mixing distribution. In Sect. 5 we give a small scale simulation study. Proofs are postponed to Sect. 6. In Sect. 7 we consider extension of our results. These include an application to normal variance models, which are popular in financial applications.

Before proceeding we introduce some notation. We use the standard abbreviations: iid for independent and identically distributed, cdf for cumulative distribution function, pdf for probability density function, and pmf for probability mass function. We write \(\mathbb {R}\) to denote the set of real numbers and we write \({\mathfrak {B}}(\mathbb {R})\) to denote the class of Borel sets on \(\mathbb {R}\). We write \(\mathbb {C}\) to denote the set of complex numbers and, for \(z\in \mathbb {C}\), we write \(\Re z\) and \(\Im z\) to denote the real and imaginary parts of z, respectively. We write \(\wedge \) and \(\vee \) to denote the minimum and maximum, respectively. We write \(\textrm{Pois}(\lambda )\), U(a, b), N(0, 1), and \(\delta _a\) to denote, respectively, the Poison distribution with mean \(\lambda \), the uniform distribution on (a, b), the standard normal distribution, and the point mass at a. Further, we write \(\textrm{Ga}(\beta ,\zeta )\) to denote a gamma distribution with pdf

where \(\beta ,\zeta >0\) are parameters. For a set A we write \(1_A\) to denote the indicator function on A and we write \({:=}\) to denote a defining equality. If \(\mu \) is a probability distribution, we write \(X\sim \mu \) to denote that X is a random variable with distribution \(\mu \) and we write \(X_1,X_2,\dots {\mathop {\sim }\limits ^{\textrm{iid}}}\mu \) to denote that \(X_1,X_2,\dots \) are iid random variables with common distribution \(\mu \).

2 Background

In this paper we focus on infinitely divisible distributions with finite variation and no drift. A distribution \(\mu \) of this type has a characteristic function of the form

where

is the cumulant generating function and M is the Lévy measure satisfying

We write \(\mu =\textrm{ID}_0(M)\) to denote this distribution. For future reference, we note that

Associated with every infinitely divisible distribution \(\mu \) is a Lévy process \(\{Z(t):t\ge 0\}\), where \(Z(1)\sim \mu \). This process has independent and stationary increments. An important example is when \(M(\textrm{d}x) = \lambda \delta _1(\textrm{d}x)\). In this case, \(\textrm{ID}_0(M)\) is a Poisson distribution with mean \(\lambda \) and the associated Lévy process is a Poisson process with rate \(\lambda \). See Sato (1999) for many results about infinitely divisible distributions and their associated Lévy processes.

When \(M((-\infty ,0))=0\), the distribution \(\mu =\textrm{ID}_0(M)\) is called a subordinator. By a slight abuse of terminology, we also use this term to describe any random variable with this distribution. In this case, \(\mu ((-\infty ,0))=0\) and the associated Lévy process is increasing. When \(M((0,\infty ))=0\), the distribution \(\mu =\textrm{ID}_0(M)\) satisfies \(\mu ((0,\infty ))=0\) and the associated Lévy process is decreasing. In general, under our assumptions, we can decompose the Lévy measure M into two parts:

and

In this case, if \(X^-\sim \textrm{ID}_0(M^-)\) and \(X^+\sim \textrm{ID}_0(M^+)\) are independent and \(X=X^+-X^-\), then \(X\sim \textrm{ID}_0(M)\) and \(X^-,X^+\) are subordinators. Since X is the difference of two independent subordinators, we sometimes call it (or equivalently its distribution) a bilateral subordinator.

TS distributions form an important class of infinitely divisible distributions. They correspond to the case, where the Lévy measure is of the form

where \(\eta _{\pm }\ge 0\) with \(\eta _++\eta _->0\), \(\alpha \in (0,1)\), and \(g:\mathbb {R}\mapsto [0,\infty )\) is a bounded Borel function satisfying

We call g the tempering function and we denote the distribution \(\mu =\textrm{ID}_0(M)\) by \(\mu =\textrm{TS}_{\alpha }(g,\eta _-,\eta _+)\). We note that one can consider the more general case where \(\alpha \in (0,2)\). However, when \(\alpha \in [1,2)\), the corresponding distributions do not have finite variation and are, thus, beyond the scope of this paper.

When \(g\equiv 1\), the distribution \(\textrm{TS}_{\alpha }(g,\eta _-,\eta _+)\) reduces to an infinite variance stable distribution, see Samorodnitsky and Taqqu (1994). It is often assumed that \(\lim _{x\rightarrow \pm \infty }g(x)=0\), which leads to distributions that are similar to stable distributions in some central region, but with lighter (i.e., tempered) tails. This justifies the name and makes these distributions useful for many application areas, see Grabchak and Samorodnitsky (2010) for a discussion in the context of finance. Many facts about TS distributions can be found in Rosiński (2007), Rosiński and Sinclair (2010), Grabchak (2016), and the references therein.

3 Main results

Our main theoretical results are concerned with randomly stopped Lévy processes. For this reason, we generally work with two infinitely divisible distributions: \(\mu =\textrm{ID}_0(M)\) and \(\nu =\textrm{ID}_0(L)\). For us, \(\nu \) is the distribution of the Lévy process, while \(\mu \) is the distribution that governs the random time when the process is stopped. It is not the distribution of this random time, but it will be decomposed into two parts. The first will be the distribution of the time when we stop the positive jumps and second will be the distribution of the time when we stop the negative jumps. Throughout, we take

to denote generic random variables from distributions \(\mu \) and \(\nu \), respectively. At this point, we make no additional assumptions on \(\mu \), but for distribution \(\nu \), we assume, throughout, that

It can be shown that \(\gamma =\textrm{E}[Z]\).

We decompose M into \(M^+\) and \(M^-\) as in (5) and (6). Let \(X^-\sim \textrm{ID}_0(M^-)\) and \(X^+\sim \textrm{ID}_0(M^+)\) be independent random variables and note that \(X{\mathop {=}\limits ^{d}}X^+-X^-\). Independent of these, let \(Z^+=\{Z^+(t):t\ge 0\}\) and \(Z^-=\{Z^-(t):t\ge 0\}\) be independent Lévy processes with \(Z^+(1),Z^-(1)\sim \nu \). For \(a>0\) set

and let \(\mu _a\) be the distribution of \(Y_a\). A simple conditioning argument shows that the characteristic function of \(Y_a\) is given by

where \(C_\nu \) is the cumulant generating function of \(\nu \).

Proposition 1

We have

In the case where \(Z^-\) and \(Z^+\) are Poisson processes, versions of this result can be found in Klebanov and Slámova (2013), Grabchak (2018), and Proposition 6.5 of Steutel and van Harn (2004). Our goal is to characterize the rate of convergence in \(L^p\). Let F be the cdf of distribution \(\mu \) and let \(F_a\) be the cdf of distribution \(\mu _a\). Recall that, for \(p\in [1,\infty )\), the \(L^p\) distance between these distributions is given by

and for \(p=\infty \) it is given by

Since \( \left| F(x)-F_a(x)\right| \le 1\) for each \(x\in \mathbb {R}\), it is readily checked that for \(1\le p_1\le p_2<\infty \) we have

In this context, the \(L^\infty \) distance is sometimes called the Kolmogorov metric and the \(L^1\) distance is sometimes called the Kantorovich or the Wasserstein metric. Many results about these and related distances can be found in Gibbs and Su (2002), Bobkov (2016), Arras and Houdré (2019), and the references therein. We just note the following equivalent formulations of \(L^1\) distance. We have

where the infimum is taken over all possible joint distributions of X and \(Y_a\) and the supremum is taken over all continuous functions h satisfying the Lipschitz condition \(|h(x)-h(y)|\le |x-y|\) for \(x,y\in \mathbb {R}\).

Before stating our main results, we introduce several quantities. We do not, in general, assume these to be finite. Let

and let

It is well-known that if \(r_0<\infty \), then \(\mu \) has a pdf f satisfying

Further, by Corollary 25.8 in Sato (1999) we have

and it is readily checked that, when they are finite, \(m_1\ge \textrm{E}[|X|]\), \(m_2=\textrm{Var}(X)\), and \(\zeta _2=\textrm{Var}(Z)\). We now give our main result.

Theorem 1

Assume that \(r_0,m_1,\zeta _1,\zeta _2\) are all finite. For any \(a>0\),

and for any \(p\in [2,\infty )\) and any \(a>0\)

If, in addition, \(m_2<\infty \), then for any \(p\in [1,\infty )\) and any \(a>0\)

where

It is well-known that the \(L^1\) and \(L^\infty \) distances provide bounds on a number of other distances, including the Lévy, discrepancy, and Prokhorov metrics, see Gibbs and Su (2002). Thus, our bounds give rates of convergence for these distances as well. We now give a better rate of convergence for the case where \(\mu \) has a TS distribution.

Theorem 2

Let \(\mu =\textrm{TS}_{\alpha }(g,\eta _-,\eta _+)\) and assume that \(m_1,\zeta _1,\zeta _2\) are all finite. For any \(a>0\),

and for any \(p\in [2,\infty )\) and any \(a>0\)

If, in addition, \(m_2<\infty \), then for any \(p\in [1,\infty )\) and any \(a>0\)

In the proof we will see that we always have \(r_0<\infty \) in this case.

4 Poisson mixtures and simulation

Our main results imply that \(\mu _a \approx \mu \) when a is small, which suggests that we can approximately simulate from \(\mu \) by simulating from \(\mu _a\). In practice, simulation from \(\mu _a\) is often easier than simulation from \(\mu \). This is particularly true when the Lévy processes \(Z^+,Z^-\) are Poisson processes. In this case, the distribution of \(\mu _a\) is a scaled Poisson mixture. A general review of Poisson mixtures can be found in Karlis and Xekalaki (2005) or Sect. VI.7 of Steutel and van Harn (2004). Our discussion primarily extends results about discrete TS distributions given in Grabchak (2022).

Throughout this section we assume that \(M((-\infty ,0])=0\), which means that \(\mu =\textrm{ID}_0(M)\) is a subordinator. Further, we take \(L(\textrm{d}z) = \delta _1(\textrm{d}z)\). This means that \(\nu =\textrm{ID}_0(L)=\textrm{Pois}(1)\) and thus that the Lévy process \(Z^+=\{Z^+(t):t\ge 0\}\) with \(Z^+(1)\sim \nu \) is a Poisson process with rate 1 and that \(\gamma =1\). Let \(X\sim \textrm{ID}_0(M)\) be independent of \(Z^+\) and, for any \(a>0\), set

As before, the distribution of \(Y_a\) is denoted by \(\mu _a\). In this case, we sometimes write \(\mu _a=\textrm{PM}(M,a)\). Note that the support of this distribution is concentrated on \(\{0,a,2a,3a,\dots \}\).

Specializing (8) to the current situation shows that the characteristic function of \(Y_a\) is given by

Next, we introduce several useful quantities. These are closely related to the canonical sequence of discrete infinitely divisible distributions discussed in Steutel and van Harn (2004). For \(a>0\), let

and let

We now characterize the distribution \(\mu _a\) in terms of these quantities.

Proposition 2

-

1.

The characteristic function of \(\mu _a\) is given by

$$\begin{aligned} & {\hat{\mu }}_a(s) = \exp \left\{ \sum _{k=1}^{\infty } \frac{\ell _k^{(a)}}{k!}\left( e^{isa k} -1\right) \right\} \\ & = e^{-\ell _+^{(a)}} \exp \left\{ \sum _{k=1}^{\infty } \frac{\ell _k^{(a)}}{k!}e^{isa k} \right\} , \ \ s \in \mathbb {R}. \end{aligned}$$ -

2.

We have \(\mu _a=\textrm{ID}_0(M^{(a)})\), where the Lévy measure \(M^{(a)}\) is concentrated on \(\{a,2a,\ldots \}\) with

$$\begin{aligned} M^{(a)}(\{a k\}) = \frac{1}{k!} \ell _k^{(a)}, \quad k = 1,2,\ldots , \end{aligned}$$and \(M^{(a)} ((0, \infty )) = \ell _+^{(a)} < \infty \).

-

3.

Let \(p_k^{(a)} = P(Y_a = ak)\) for \(k = 0, 1, 2, \ldots \). We have \(p_0 = e^{- \ell _+^{(a)}}\) and

$$\begin{aligned} p_k^{(a)} = \frac{1}{k} \sum _{j=0}^{k-1} \frac{\ell _{k-j}^{(a)}}{(k-j-1)!} p_j^{(a)}, \quad k = 1,2,\ldots . \end{aligned}$$ -

5.

Let \(M_1^{(a)}\) be the probability measure on \(\{a,2a, \ldots \}\) with

$$\begin{aligned} M_1^{(a)}(\{ak\}) = \frac{M^{(a)}(\{ak\})}{M^{(a)}((0, \infty ))} = \frac{\ell _k^{(a)}}{k! \ell _+^{(a)}}, \quad k = 1,2,\ldots . \end{aligned}$$If \(N \sim \textrm{Pois}\left( \ell _+^{(a)}\right) \) and \(W_1, W_2, \ldots {\mathop {\sim }\limits ^{iid}} M_1^{(a)}\) are independent of N, then

$$\begin{aligned} Y_a{\mathop {=}\limits ^{d}}\sum _{i=1}^{N} W_i. \end{aligned}$$

Here, \(M^{(a)}\) is a discretization of M. Parts 3 and 4 can be used to simulate from \(\mu _a\). First we describe the algorithm suggested by Part 3. It is a version of the inverse transform method.

Algorithm 1: Simulation from \(\mu _a=\textrm{PM}(M,a)\).

1. Simulate \(U \sim U(0,1)\).

2. Evaluate \(Y = \min \left\{ m=0,1,2, \ldots | \sum _{k=0}^m p^{(a)}_k>U\right\} \) and return aY.

This method often works well. However, for heavier tailed distributions, we may need to calculate \(p^{(a)}_k\) for a large value of k, which can be inefficient. An alternate simulation method is suggested by Part 4.

Algorithm 2: Simulation from \(\mu _a=\textrm{PM}(M,a)\).

1. Simulate \(N \sim \textrm{Pois}(\ell _+^{(a)})\).

2. Simulate \(W_1, W_2, \ldots , W_N {\mathop {\sim }\limits ^{iid}} M_1^{(a)}\).

3. Return \(\sum _{i=1}^{N} W_i\).

To use Algorithm 2 we need a way to simulate from \(M_1^{(a)}\). Following ideas in Grabchak (2022) for discrete TS distributions, we can represent \(M_1^{(a)}\) as a mixture of scaled zero-truncated Poisson distributions. Let \(\textrm{Pois}_+(\lambda )\) denote the zero truncated Poisson distribution with parameter \(\lambda \) and pmf

We have

where

is a probability measure. Thus, we can simulate from \(M_1^{(a)}\) as follows.

Algorithm 3: Simulation from \(M_1^{(a)}\).

1. Simulate \(\lambda \sim M_2^{(a)}\).

2. Simulate \(W \sim \textrm{Pois}_+(\lambda )\) and return aW.

In this paper, to simulate from \(\textrm{Pois}_+(\lambda )\), we use the rztpois method in the actuar package for the statistical software R, see Dutang et al. (2008). Simulation from \(M_2^{(a)}\) depends on the structure of M. For certain discrete TS distributions and \(a=1\) two methods were given in Grabchak (2022). We now extend them to the case of general \(a>0\). For TS distributions, M is of the form (7) and, to ensure \(M((-\infty ,0])=0\), we take \(\eta _-=0\). It follows that, in this case,

Let

where \(K_a=\int _0^\infty (1-e^{-x}) g(xa)x^{-1-\alpha } \text {d}x= a^\alpha \ell _+^{(a)}/\eta _+\) is a normalizing constant. It is easily checked that, if \(Y\sim M_3^{(a)}\), then \(aY\sim M_2^{(a)}\). Two rejection sampling methods for simulating from \(M_3^{(a)}\) are given in Grabchak (2022). Toward this end, let \(C_g=\operatorname {ess\,sup}_{x>0} g(x)\) and set

Algorithm 4. Simulation from \(M_2^{(a)}\) for TS distributions.

1. Independently simulate \(U,U_1,U_2{\mathop {\sim }\limits ^{\textrm{iid}}}U(0,1)\) and set \(V=U_1^{1/(1-\alpha )}U_2^{-1/\alpha }\).

2. If \(U\le \varphi _1(V)\) return aV, otherwise go back to step 1.

On a given iteration, the probability of acceptance is \(K_a \alpha (1-\alpha )/C_g\). In some cases, we can increase the acceptance rate by using a different trial distribution. The second method works when there exist \(p,C,\zeta >0\) such that, for Lebesgue a.e. x,

In this case let

We get the following rejection sampling algorithm, where, on a given iteration, the probability of acceptance is \( \frac{K_a p\zeta ^\frac{1-\alpha }{p}}{C\Gamma \left( \frac{1-\alpha }{p}\right) } \).

Algorithm 5. Simulation from \(M_2^{(a)}\) for TS distributions when (9) holds.

1. Independently simulate \(U\sim U(0,1)\) and \(V\sim \textrm{Ga}((1-\alpha )/p,\zeta )\).

2. If \(U\le \varphi _2(V^{1/p})\) return \(aV^{1/p}\), otherwise go back to step 1.

Note that, in this section, the assumption that \(M((-\infty ,0])=0\) is without loss of generality. When it does not hold, we can decompose M into \(M^+\) and \(M^-\) as in (5) and (6). We can then independently simulate \(Y_{a,+}\sim \textrm{PM}(M^+,a)\) and \(Y_{a,-}\sim \textrm{PM}(M^-,a)\) and take

5 Simulations

In this section we give a small simulation study to illustrate our methodology for approximate simulation from a subordinator \(\mu \) by simulating from \(\mu _a\) with some small \(a>0\). We focus on two classes of TS distributions: the class of classical tempered stable (CTS) distributions and the class of TS distributions with power tempering (PT distributions). CTS distributions are, arguably, the best known and most commonly used TS distributions, see Küchler and Tappe (2013) for a survey. While exact simulation methods for CTS distributions are well-known, see e.g. Kawai and Masuda (2011) or Hofert (2011), we use them to illustrate our methodology because they are so popular. On the other hand, the only known simulation methods for PT distributions are either approximate or computationally intensive, see Grabchak (2019). For a discussion of PT distributions and their applications, see Grabchak (2016) or Grabchak (2021). In the symmetric case, methods to numerically evaluate pdfs, cdfs, and quantiles of CTS and PT distributions are given in the SymTS package for the statistical software R.

We begin with the class of CTS distributions. Distribution \(\textrm{TS}_{\alpha }(\eta _-,\eta _+,g)\) is CTS if

for some \(\ell _-,\ell _+>0\). It is often convenient to take \(\eta _-=c_-\ell _-^\alpha \) and \(\eta _+=c_+\ell _+^\alpha \), where \(c_-,c_+\ge 0\) with \(c_-+c_+>0\) are parameters. In this case we denote this distribution by CTS\(_\alpha (c_-,\ell _-,c_+,\ell _+)\). When \(c_-=0\) we get an important subset of the class of Tweedie distributions, see Tweedie (1984).

Our methodology for approximate simulation from \(\mu =\)CTS\(_\alpha (c_-,\ell _-,c_+,\ell _+)\) is as follows. First choose \(a>0\) small, then independently simulate \(Y_{a,+}\sim \textrm{PM}(M^+,a)\) and \(Y_{a,-}\sim \textrm{PM}(M^-,a)\), where \(M^+(\textrm{d}x) = c_+\ell _+^\alpha e^{-x/\ell _+}x^{-1-\alpha } 1_{[x>0]}\textrm{d}x\) and \(M^-(\textrm{d}x) = c_-\ell _-^\alpha e^{-x/\ell _-}x^{-1-\alpha } 1_{[x>0]}\textrm{d}x\). The random variable \(Y_a = Y_{a,+}-Y_{a,-}\) gives an approximate simulation from \(\mu \). We denote the distribution of \(Y_a\) by \(\mu _a\). We can simulate \(Y_{a,+}\) and \(Y_{a,-}\) using Algorithm 1 or Algorithm 2. In this paper, we use Algorithm 2, where we simulate from \(M_1^{(a)}\) using Algorithm 3. To simulate from \(M^{(a)}_2\) we can use Algorithm 4 or Algorithm 5. We select the one that has the larger probability of acceptance. Acceptance probabilities for several choices of the parameters are given in Table 1.

We now illustrate the performance of our methodology. For simplicity, we focus on the symmetric case. In this study we choose \(\alpha \in \{0.25, 0.5, 0.75\}\), \(c_-=c_+ = 1\), and \(\ell _-=\ell _+ = 0.5\). We take \(a \in \{0.5, 0.1, 0.01, 0.0001\}\) to demonstrate how \(\mu _a\) approaches \(\mu \). The results of our simulations are given in Fig. 1 and Table 2.

First, for each choice of the parameters and each value of a, we simulate 5000 observations. In Fig. 1 we give two types of diagnostic plots. First, we compare kernel density estimates (KDE) of our observations from \(\mu _a\) with the density of \(\mu \). We can see that, as a decreases, the KDE approaches the density of \(\mu \). Second, we present qq-plots and again we see that, as a decreases, the qq-plots approach the diagonal line.

Next, we perform formal goodness-of-fit testing using the Kolmogorov-Smirnov (KS) and Cramer-Von Mises (CVM) tests. Here, for each choice the parameters and of a, we simulate 100 samples each comprised of 5000 observations. For each sample we evaluate the p-values of the KS and the CVM tests. We then average these together over the 100 samples. Recall that under the null hypothesis, the p-value follows a U(0, 1) distribution. Thus, when averaging over many runs, we expect the average of the p-values to be close to 0.5. The results are given in Table 2. We can see that the means of the p-values get closer to 0.5 as a decreases. Taken together, the goodness-of-fit results given in Fig. 1 and Table 2 suggest that the approximation works well for \(a=10^{-4}\).

Diagnostic plots for CTS. In the plots on the left, the solid black line is the pdf of the CTS distribution. Overlaid are KDEs based on observations from \(\mu _a\) for several choices of a. The plots on the right give qq-plots based on samples from \(\mu _a\) for several choices of a. The solid line is the reference line \(y=x\)

We now turn to PT distributions. Distribution \(\textrm{TS}_{\alpha }(\eta _-,\eta _+,g)\) is PT if

where \(\ell _+,\ell _->0\). It is often convenient to take \(\eta _-=c_-(\alpha +\ell _-)(\alpha +\ell _-+1)\) and \(\eta _+=c_+(\alpha +\ell _+)(\alpha +\ell _++1)\), where \(c_-,c_+\ge 0\) with \(c_-+c_+>0\) are parameters. In this case, we denote this distribution by PT\(_\alpha (c_-,\ell _-,c_+,\ell _+)\). These distributions have finite means, but heavier tails than CTS distributions. If \(Y\sim \)PT\(_\alpha (c_-,\ell _-,c_+,\ell _+)\) with \(c_-,c_+>0\), then

For more on these distributions, see Grabchak (2016) or Grabchak (2021).

Our methodology for approximate simulation from \(\mu =\)PT\(_\alpha (c_-,\ell _-,c_+,\ell _+)\) is as follows. First choose \(a>0\) small, then independently simulate \(Y_{a,+}\sim \textrm{PM}(M^+,a)\) and \(Y_{a,-}\sim \textrm{PM}(M^-,a)\), where \(M^-(\textrm{d}x) = \eta _- g(-x) x^{-1-\alpha }1_{[x>0]}\textrm{d}x\) and \(M^+(\textrm{d}x) = \eta _+ g(x)x^{-1-\alpha } 1_{[x>0]}\textrm{d}x\). The random variable \(Y_a = Y_{a,+}-Y_{a,-}\) gives an approximate simulation from \(\mu \) and we denote the distribution of \(Y_a\) by \(\mu _a\). We can simulate \(Y_{a,+}\) and \(Y_{a,-}\) using Algorithm 1 or Algorithm 2. In this paper, we use Algorithm 2, where we simulate from \(M_1^{(a)}\) using Algorithm 3 and from \(M^{(a)}_2\) using Algorithm 4. Note that we cannot use Algorithm 5 since (9) does not hold in this case.

We now illustrate the performance of our methodology for PT distributions. We again focus on the symmetric case. In this study we choose \(\alpha \in \{0.25, 0.5, 0.75\}\), \(c_-=c_+ = 1\), and \(\ell _-=\ell _+ = 1\). We take \(a\in \{0.5, 0.1, 0.01, 0.0001\}\) to demonstrate how \(\mu _a\) converges to \(\mu \). The results of our simulations are given in Fig. 2 and Table 2.

Again, for each choice of the parameters and each value of a, we simulate 5000 observations. In Fig. 2 we give two types of diagnostic plots. First, we compare the KDE of our observations from \(\mu _a\) with the density of \(\mu \). We can see that, as a decreases, the KDE approaches the density of \(\mu \). Second, we present qq-plots and again we see that, as a decreases, the qq-plots approach the diagonal line.

Next, we perform formal goodness-of-fit testing. For each choice of the parameters and of a, we simulate 100 samples each comprised of 5000 observations. For each sample we evaluate the p-values of the KS and the CVM tests. We then average these together over the 100 samples. Again, when averaging over many runs, we expect the average of the p-values to be close to 0.5 under the null hypothesis. The results are given in Table 2. We can see that the means of the p-values get closer to 0.5 as a decreases. Taken together, the goodness-of-fit results given in Fig. 2 and Table 2 suggest that the approximation works well for \(a=10^{-4}\).

Diagnostic plots for PT. In the plots on the left, the solid black line is the pdf of the PT distribution. Overlaid are KDEs based on observations from \(\mu _a\) for several choices of a. The plots on the right give qq-plots based on samples from \(\mu _a\) for several choices of a. The solid line is the reference line \(y=x\)

6 Proofs

In this section we prove out theoretical results. We begin with several lemmas.

Lemma 1

Fix \(z\in \mathbb {C}\).

1. We have \(\left| e^{z}-1\right| \le |z|e^{|z|}\).

2. If \(\Re z\le 0\), then \(\left| e^{z}-1\right| \le 2\wedge |z|\).

3. If \(\Im z=0\), then for any \(n\in \{0,1,2,\dots \}\)

4. If \(z_1,z_2\in \mathbb {C}\) with \(\Re z_1,\Re z_2\le 0\), then

Proof

We have

Next, let \(z=-a+ib\), where \(a=-\Re z\) and \(b=\Im z\) and assume that \(-a=\Re z\le 0\). We have

and, by the triangle inequality,

The third part can be found in Sect. 26 of Billingsley (1995). We now turn to the fourth part. Without loss of generality assume that \(\Re z_1\le \Re z_2\le 0\) and note that

where the final inequality follows by the second part. \(\square \)

Lemma 2

For any \(s\in \mathbb {R}\) we have

Proof

By Lemma 1

Next, from the definition of \(C_\nu \) and \(\gamma \), we have

where the final inequality follows by Lemma 1. \(\square \)

Proof of Proposition 1

Our proof is based on showing the convergence of characteristic functions. By l’Hôpital’s rule

By Lemma 1

Thus, by dominated convergence

From here

by another application of dominated convergence. We just note that by Lemmas 1 and 2

From here (3) guarantees that dominated convergence holds. \(\square \)

We now recall several versions of Esseen’s smoothing lemma, see Bobkov (2016) for a survey of such results.

Lemma 3

For any \(T>0\), we have

and for any \(T>0\) and any \(p\in [1,\infty )\)

Further, for any \(p\in [2,\infty )\), if \(q = p/(p-1)\), then for any \(T>0\)

Proof

Here, (10) is a version of the classical Esseen’s smoothing lemma, see, e.g., page 538 in Feller (1971) or Theorem 1.5.2 in Ibragimov and Linnik (1971). We just use the fact that the essential supremum of the pdf of \(\mu \) is upper bounded by \(r_0/(2\pi )\). The formula in (11) is essentially Corollary 8.3 in Bobkov (2016), which it itself a version of Theorem 1.5.4 in Ibragimov and Linnik (1971). The constant \(4\pi \) is given in Ibragimov and Linnik (1971). To get the bound in (11), we apply the quotient rule and Minkowski’s inequality to the bound in Bobkov (2016). The formula in (12) is given in Corollary 7.2 of Bobkov (2016). It is a simple application of the classical Hausdorff-Young inequality, as given in, e.g., Proposition 2.2.16 of Grafakos (2004). \(\square \)

Lemma 4

1. For any \(s\in \mathbb {R}\), we have

and

2. For any \(a>0\), \(T>0\), and \(q\ge 1\), we have

and

Clearly, for the bounds in the above to be meaningful, we need the quantities \(r_0,m_1,m_2,\zeta _1\), and \(\zeta _2\) be finite, where appropriate. For ease of presentation, we do not include this assumption in the statement of the lemma. This should not cause any confusion as the bounds are trivial when these quantities are infinite. Our proof of the first part is based on Lemma 1. A different approach for getting related bounds is used in the proof of Theorem 4.1 in Arras and Houdré (2019). However, that approach seems to lead to sub-optimal bounds in this case.

Proof

By the first part of Lemma 1 and the fact that \(|e^{ixs}|=1\)

Combining Lemmas 1 and 2 with (4) gives

From here the first inequality follows. Turning to the second inequality, let

and

By dominated convergence, we have \(\hat{\mu }_a'(s) = A_a(s)\hat{\mu }_a(s)\) and \(\hat{\mu }'(s) = A(s)\hat{\mu }(s)\). It follows that

By dominated convergence,

and thus, for any \(s\in \mathbb {R}\)

and

where the last inequality follows from (4). Next, using Lemmas 1 and 2 and (4) gives

Putting everything together gives the first part. The second part follows immediately from the first, Minkowski’s inequality, and the fact that \(|\hat{\mu }(s)|\le 1\) for each \(s\in \mathbb {R}\). \(\square \)

Proof of Theorem 1

The proof follows by combining Lemmas 3 and 4. We illustrate the approach for the first bound only, as the rest can be verified in a similar way. By (10) for any \(T>0\)

where the second line follows by (13) with \(q=1\). Taking \(T=a^{-1/2}\) gives the result. \(\square \)

Proof of Theorem 2

First, fix \(\delta \in (0,1)\) and note that, since \(\lim _{x\rightarrow 0}g(x)=1\), there exists \(\beta >0\) such that for any \(x\in (-\beta ,\beta )\) we have \(g(x)\ge 1-\delta \). It follows that for \(|s|>1\)

where

Clearly \(|\hat{\mu }(s)|\) is integrable and, hence, \(r_0<\infty \) in this case.

Lemma 4 implies that for any \(q\ge 1\)

where the second line follows by Minkowski’s inequality and symmetry. Taking \(q=1\) and combining this with Lemma 3 gives

where we take \(T = a^{-1/(2-\alpha )} \left( \frac{A\gamma }{m_1\zeta _2}\right) ^{1/(2-\alpha )}\) in the final inequality. The other parts of the theorem can be shown in a similar way. \(\square \)

Proof of Proposition 2

The first part follows from the fact that

The second part follows immediately from the first. We now turn to the third part. We have

where \(\psi (s) = \textrm{E}[e^{-sX}]\) for \(s\ge 0\) is the Laplace transform of X and \(\psi ^{(k)}\) is the kth derivative of \(\psi \). Formal substitution shows that

From here we see that \(p_0^{(a)}=\psi (1/a)=e^{-\ell _+^{(a)}}\). Next, setting \(f(s) = \log \psi (s)\) we note that the kth derivative of f is given by

It follows that \(f^{(k)}(1/a) =(-a)^k \ell _k^{(a)}\). From standard results about derivatives of exponential functions, we get

see, e.g., Lemma 1 in Grabchak (2022). It follows that

as required. The fourth part follows by a standard conditioning argument. \(\square \)

7 Extensions

In this section we consider some extensions of our work. These results are not complete, but aim to suggest directions for future research.

7.1 Infinite \(r_0\)

The results in Theorem 1 assume that \(r_0=\int _{-\infty }^\infty |\hat{\mu }(s)|\textrm{d}s<\infty \), which implies that \(\mu \) has a bounded density. Here, we briefly discuss what happens if we allow \(r_0=\infty \). In this case, we no longer need \(|\hat{\mu }(s)|\) in the bounds in Lemma 4. While we can apply \(|\hat{\mu }(s)|\le 1\), we can get better bounds as follows. By (4), the fourth part of Lemma 1, and (14), we have for \(s\in \mathbb {R}\)

From here, by arguments as in the proof of Lemma 4, we get for \(s\in \mathbb {R}\)

It follows that for any \(a>0\), \(T>0\), and \(q\ge 1\), we have

and

Now, arguments as in the proof of Theorem 1 give the following.

Proposition 3

Assume that \(m_1,\zeta _1,\zeta _2\) are all finite. For any \(p\in [2,\infty )\) and any \(a>0\)

If, in addition, \(m_2<\infty \), then for any \(p\in [1,\infty )\) and any \(a>0\)

Here, for \(p\in [1,2)\), we get a slightly worse bound than in Theorem 1. We do not know if this due to an actually slower rate of convergence or if it is an artifact of the proof. We cannot give a bound for the \(L^\infty \) metric without assuming \(r_0<\infty \), as (10) requires this assumption. However, we can get a bound for the closely related Lévy metric, which metrizes weak convergence. Specifically, combining (15) with Theorem 3.2 in Bobkov (2016) leads to the rate \(\mathcal {O}\left( a^{1/3}\log (1/a)\right) \).

7.2 Normal variance mixtures

In this subsection we give rates of convergence for approximating normal variance mixtures. A normal variance mixture is the distribution of a random variable of the form \(\sqrt{X} W\), where \(W\sim N(0,1)\) and X is a positive random variable independent of W. Such distributions often arise in financial application, see, e.g., Sabino (2023), Bianchi and Tassinari (2024), and the references therein.

Throughout this section, let \(W\sim N(0,1)\) and let \(Z=\{Z_t:t\ge 0\}\) be a Lévy process with \(Z_1\sim \nu =\textrm{ID}_0(L)\). Assume that L is symmetric, i.e., that \(L(B)=L(-B)\) for each \(B\in {\mathfrak {B}}(\mathbb {R})\). This implies that \(\nu \) is also symmetric and that its cumulant generating function \(C_\nu \) is real. Assume further that

and set

Let \(X\sim \mu =\textrm{ID}_0(M)\) with \(M((-\infty ,0])=0\) and

Assume that X is independent of W and Z when appropriate. Let \(\mu ^*\) be the distribution of \(\sqrt{X} W\) and let \(\mu _a^*\) be the distribution of \(\sqrt{\frac{a}{\gamma ^*}} Z_{X/a}\). By a conditioning argument, it is easily checked that for any \(a>0\) the characteristic function of \(\mu ^*_{a}\) is

and the characteristic function of \(\mu ^*\) is

Proposition 4

We have

Proof

First note that the fact that L is symmetric and satisfies (16) implies that

Now, by Lemma 1

and

It follows that

and by dominated convergence

From here we get \(\lim _{a\downarrow 0}\hat{\mu }^*_a(s) = \hat{\mu }^*(s)\) for each \(s\in \mathbb {R}\) using another application of dominated convergence. To see that it holds note that by (4), Lemma 1, and (18), we have

which is integrable with respect to M. \(\square \)

Henceforth, assume that

Theorem 3

Let \(F_a\) be the cdf of \(\mu _a^*\) and let F be the cdf of \(\mu ^*\). Assume that \(r_0,m_1,\zeta _3\) are all finite. For any \(a>0\),

and for any \(p\in [2,\infty )\) and any \(a>0\)

For technical reasons, we are not able to get a rate of convergence for \(p\in [1,2)\).

Proof

By arguments similar to those in the proof of Lemma 4, we have

By the fourth part of Lemma 1 and (17), we have

It follows that, for any \(a>0\), \(T>0\), and \(q\ge 1\) we have

From here we proceed as in the proof of Theorem 1. \(\square \)

Data availability

No datasets were generated or analysed during the current study.

References

Arras, B., Houdré, C.: On Stein’s Method for Infinitely Divisible Laws with Finite First Moment. Springer International Publishing, Berlin (2019)

Baccini, A., Barabesi, L., Stracqualursi, L.: Random variate generation and connected computational issues for the Poisson-Tweedie distribution. Comput. Stat. 31, 729–748 (2016)

Barndorff-Nielsen, O.E., Pollard, D.G., Shephard, N.: Integer-valued Lévy processes and low latency financial econometrics. Quant. Financ. 12(4), 587–605 (2012)

Barndorff-Nielsen, O.E., Shephard, N.: Non-Gaussian Ornstein–Uhlenbeck-based models and some of their uses in financial economics. J. Roy. Stat. Soc. B 63(2), 167–241 (2001)

Barndorff-Nielsen, O.E., Shephard, N.: Integrated OU processes and non-Gaussian OU-based stochastic volatility models. Scand. J. Stat. 30(2), 277–295 (2003)

Bianchi, M.L., Tassinari, G.L.: Estimation for multivariate normal rapidly decreasing tempered stable distributions. J. Stat. Comput. Simul. 94(1), 103–125 (2024)

Billingsley, P.: Probability and Measure, 3rd edn. Wiley, New York (1995)

Bobkov, S.G.: Proximity of probability distributions in terms of Fourier–Stieltjes transforms. Russ. Math. Surv. 71(6), 1021 (2016)

Dutang, C., Goulet, V., Pigeon, M.: actuar: an R package for actuarial science. J. Stat. Softw. 25(7), 1–37 (2008)

Feller, W.: An Introduction to Probability Theory and Its Applications, 2nd edn. John Wiley & Sons Inc, New York (1971)

Gibbs, A.L., Su, F.E.: On choosing and bounding probability metrics. Int. Stat. Rev. 70(3), 419–435 (2002)

Grabchak, M.: Tempered Stable Distributions: Stochastic Models for Multiscale Processes. Springer, Cham, Switzerland (2016)

Grabchak, M.: Domains of attraction for positive and discrete tempered stable distributions. J. Appl. Probab. 55(4), 30–42 (2018)

Grabchak, M.: Rejection sampling for tempered Lévy processes. Stat. Comput. 29(3), 549–558 (2019)

Grabchak, M.: An exact method for simulating rapidly decreasing tempered stable distributions. Stat. Probab. Lett. 170, 109015 (2021)

Grabchak, M.: On the transition laws of p-tempered alpha-stable OU-processes. Comput. Stat. 36(2), 1415–1436 (2021)

Grabchak, M.: Discrete tempered stable distributions. Methodol. Comput. Appl. Probab. 24(3), 1877–1890 (2022)

Grabchak, M., Samorodnitsky, G.: Do financial returns have finite or infinite variance? A paradox and an explanation. Quant. Financ. 10(8), 883–893 (2010)

Grafakos, L.: Classical and Modern Fourier Analysis. Pearson Education Inc., Upper Saddle River (2004)

Hofert, M.: Sampling exponentially tilted stable distributions. ACM Trans. Model. Comput. Simul. (TOMACS) 22(1), 1–11 (2011)

Ibragimov, I.A., Linnik, Yu.V.: Independent and Stationary Sequences of Random Variables. Wolters-Noordhoff Publishing, Groningen (1971)

Karlis, D., Xekalaki, E.: Mixed Poisson distributions. Int. Stat. Rev. 73(1), 35–58 (2005)

Kawai, R., Masuda, H.: On simulation of tempered stable random variates. J. Comput. Appl. Math. 235, 2873–2887 (2011)

Klebanov, L.B., Slámova, L.: Integer valued stable random variables. Statist. Probab. Lett. 83(6), 1513–1519 (2013)

Koopman, S.J., Lit, R., Lucas, A.: Intraday stochastic volatility in discrete price changes: the dynamic Skellam model. J. Am. Stat. Assoc. 112(520), 1490–1503 (2017)

Küchler, U., Tappe, S.: Tempered stable distributions and processes. Stoch. Process. Their Appl. 123(12), 4256–4293 (2013)

Küchler, U., Tappe, S.: Exponential stock models driven by tempered stable processes. J. Econ. 181(1), 53–63 (2014)

Rosiński, J.: Tempering stable processes. Stoch. Process. Their Appl. 117(6), 677–707 (2007)

Rosiński, J., Sinclair, J.L.: Generalized tempered stable processes. Banach Center Publ. 90, 153–170 (2010)

Sabino, P.: Normal tempered stable processes and the pricing of energy derivatives. SIAM J. Financ. Math. 14(1), 99–126 (2023)

Samorodnitsky, G., Taqqu, M.S.: Stable Non-Gaussian Random Processes: Stochastic Models with Infinite Variance. Chapman & Hall, New York (1994)

Sato, K.: Lévy Processes and Infinitely Divisible Distributions. Cambidge University Press, Cambridge (1999)

Steutel, F.W., van Harn, K.: Infinite Divisibility of Probability Distributions on the Real Line. Marcel Dekker Inc, New York (2004)

Tweedie, M.C.K.: An index which distinguishes between some important exponential families. In J. K. Ghosh and J. Roy (eds.), Statistics: Applications and New Directions. Proceedings of the Indian Statistical Institute Golden Jubilee International Conference. Indian Statistical Institute, Calcutta, pp. 579–604 (1984)

Xia, Y., Grabchak, M.: Estimation and simulation for multivariate tempered stable distributions. J. Stat. Comput. Simul. 92(3), 451–475 (2022)

Acknowledgements

The authors wish to thank the anonymous referee, whose comments led to an improvement in the presentation of the paper.

Funding

Open access funding provided by the Carolinas Consortium.

Author information

Authors and Affiliations

Contributions

Both authors contributed to the theoretical parts of the paper. M.G. wrote most of the text and S.S. performed the simulations and prepared the tables and figures. All authors reviewed the manuscript.

Corresponding author

Ethics declarations

Heading

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Grabchak, M., Saba, S. On approximations of subordinators in \(L^p\) and the simulation of tempered stable distributions. Stat Comput 35, 54 (2025). https://doi.org/10.1007/s11222-025-10586-x

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s11222-025-10586-x