Abstract

Deep learning is a computing-intensive and time-consuming task. Training a sophisticated model within a reasonable time requires far more computing resources than a single machine can provide. Typically, GPU clusters are required to reduce the training time of a deep learning model from days to hours. However, building large dedicated GPU clusters is not always feasible, and can be cost-ineffective, for most organizations because of the cost of purchase, operation and maintenance, while such systems are not fully utilized all the time. Volunteer computing can address this problem, as it provides additional computing resources at little or no cost. This work presents a hybrid cluster and volunteer computing platform that scales out GPU clusters into volunteer computing for distributed deep learning. Machine owners contribute unused computing resources on their computers to extend the capability of the GPU cluster. The challenge is to seamlessly reconcile the differences between GPU cluster and volunteer computing systems so as to ensure scalability transparency, while performance is another major concern. We validate the proposed work with two well-known sample cases. The results show that our hybrid platform is used efficiently, achieving sub-linear speedup.

Notes

Enabling Grids for E-sciencE (EGEE) has become part of the European Grid Infrastructure (EGI) [4].

References

(2017) Amazon elastic compute cloud. https://aws.amazon.com/ec2/. Accessed 17 Mar 2017

(2017) HTCondor high throughput computing. https://research.cs.wisc.edu/htcondor/. Accessed 17 Mar 2017

(2017) Openstack open source cloud computing software. https://www.openstack.org/. Accessed 17 Mar 2017

(2017) A short history of EGI. https://www.egi.eu/about/a-short-history-of-egi/. Accessed 17 Mar 2017

Shilov A (2017) Discrete desktop GPU market trends Q2 2016: AMD grabs market share, but NVIDIA remains on top. http://www.anandtech.com/show/10613/discrete-desktop-gpu-market-trends-q2-2016-amd-grabs-market-share-but-nvidia-remains-on-top. Accessed 17 Mar 2017

(2018) GPU-accelerated applications. https://www.nvidia.com/content/gpu-applications/PDF/gpu-applications-catalog.pdf. Accessed 20 Mar 2017

(2018) Multihost BOINC. http://boinc.berkeley.edu/trac/wiki/MultiHost. Accessed 20 Mar 2017

Abadi M, Barham P, Chen J, Chen Z, Davis A, Dean J, Devin M, Ghemawat S, Irving G, Isard M, Kudlur M, Levenberg J, Monga R, Moore S, Murray DG, Steiner B, Tucker P, Vasudevan V, Warden P, Wicke M, Yu Y, Zheng X (2016) TensorFlow: a system for large-scale machine learning. In: Proceedings of the 12th USENIX Conference on Operating Systems Design and Implementation, USENIX Association, OSDI’16, pp 265–283

Altintas I, Berkley C, Jaeger E, Jones M, Ludäscher B, Mock S (2004) Kepler: towards a grid-enabled system for scientific workflows. In: Proceedings of the Workflow in Grid Systems Workshop in The Tenth Global Grid Forum (GGF-10)

Anderson DP (2004) BOINC: a system for public-resource computing and storage. In: Proceedings of the 5th IEEE/ACM International Workshop on Grid Computing (Grid), pp 4–10

Anderson DP, Fedak G (2006) The computational and storage potential of volunteer computing. In: Cluster Computing and the Grid, 2006. CCGRID 06. Sixth IEEE International Symposium, pp 73–80

Aydin S, Samet R, Bay OF (2017) Real-time parallel image processing applications on multicore CPUs with OpenMP and GPGPU with CUDA. J Supercomput. https://doi.org/10.1007/s11227-017-2168-6

Burp (2017) BURP: the big and ugly rendering project. http://burp.renderfarming.net/. Accessed 20 Mar 2017

Cappello F, Djilali S, Fedak G, Herault T, Magniette F, Néri V, Lodygensky O (2005) Computing on large-scale distributed systems: XtremWeb architecture, programming models, security, tests and convergence with grid. Future Gener Comput Syst 21(3):417–437

Chen J, Monga R, Bengio S, Jozefowicz R (2016) Revisiting distributed synchronous SGD. In: Proceedings of the ICLR Workshop

Chilimbi T, Suzue Y, Apacible J, Kalyanaraman K (2014) Project Adam: building an efficient and scalable deep learning training system. In: Proceedings of the 11th USENIX Conference on Operating Systems Design and Implementation, OSDI’14, pp 571–582

Coates A, Huval B, Wang T, Wu DJ, Catanzaro BC, Ng AY (2013) Deep learning with COTS HPC systems. In: Proceedings of the 30th International Conference on Machine Learning (ICML)

CondorB (2017) Condor-B: BOINC/condor integration. http://boinc.berkeley.edu/trac/wiki/CondorBoinc. Accessed 20 Mar 2017

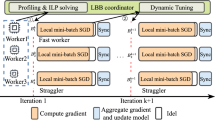

Cong G, Bhardwaj O (2017) A hierarchical, bulk-synchronous stochastic gradient descent algorithm for deep-learning applications on GPU clusters. In: 2017 16th IEEE International Conference on Machine Learning and Applications (ICMLA), pp 818–821

Cui H, Zhang H, Ganger GR, Gibbons PB, Xing EP (2016) GeePS: scalable deep learning on distributed GPUs with a GPU-specialized parameter server. In: Proceedings of the Eleventh European Conference on Computer Systems (EuroSys)

Dean J, Corrado G, Monga R, Chen K, Devin M, Le QV, Mao MZ, Ranzato M, Senior AW, Tucker PA, Yang K, Ng AY (2012) Large scale distributed deep networks. In: Conference on Neural Information Processing Systems (NIPS), pp 1232–1240

Deng J, Dong W, Socher R, Li LJ, Li K, Fei-Fei L (2009) ImageNet: a large-scale hierarchical image database. In: Computer Vision and Pattern Recognition. IEEE Conference on CVPR 2009

Desell T (2017) Large scale evolution of convolutional neural networks using volunteer computing. In: Proceedings of the Genetic and Evolutionary Computation Conference Companion, ACM, New York, NY, USA, GECCO ’17, pp 127–128, https://doi.org/10.1145/3067695.3076002

Docking (2017) Docking@home. http://docking.cis.udel.edu/. Accessed 20 Mar 2017

Farkas Z, Kacsuk P, Balaton Z, Gombás G (2010) Interoperability of BOINC and EGEE. Future Gener Comput Syst 26(8):1092–1103

Gawehn E, Hiss JA, Schneider G (2016) Deep learning in drug discovery. Mol Inf 35(1):3–14

Guerrero GD, Imbernón B, Pérez-Sánchez H, Sanz F, García JM, Cecilia JM (2014) A performance/cost evaluation for a GPU-based drug discovery application on volunteer computing. BioMed Res Int 2014. https://doi.org/10.1155/2014/474219

Gupta S, Zhang W, Wang F (2017) Model accuracy and runtime tradeoff in distributed deep learning: a systematic study. In: Proceedings of the 26th International Joint Conference on Artificial Intelligence, IJCAI’17, pp 4854–4858

Hannun AY, Case C, Casper J, Catanzaro B, Diamos G, Elsen E, Prenger R, Satheesh S, Sengupta S, Coates A, Ng AY (2014) Deep speech: scaling up end-to-end speech recognition. arxiv:1412.5567

Iandola FN, Moskewicz MW, Ashraf K, Keutzer K (2016) FireCaffe: near-linear acceleration of deep neural network training on compute clusters. In: The 29th IEEE International Conference on Computer Vision and Pattern Recognition (CVPR)

Javadi B, Kondo D, Vincent JM, Anderson DP (2009) Mining for statistical models of availability in large-scale distributed systems: an empirical study of SETI@home. In: 2009 IEEE International Symposium on Modeling, Analysis Simulation of Computer and Telecommunication Systems

Javadi B, Kondo D, Vincent JM, Anderson DP (2011) Discovering statistical models of availability in large distributed systems: an empirical study of SETI@home. IEEE Trans Parallel Distrib Syst 22(11):1896–1903

Jia Y, Shelhamer E, Donahue J, Karayev S, Long J, Girshick R, Guadarrama S, Darrell T (2014) Caffe: convolutional architecture for fast feature embedding. In: Proceedings of the 22nd ACM International Conference on Multimedia, pp 675–678

Jin PH, Yuan Q, Iandola F, Keutzer K (2016) How to scale distributed deep learning? In: NIPS Workshop on Machine Learning Systems

Kacsuk P, Farkas Z, Fedak G (2008) Towards making BOINC and EGEE interoperable. In: eScience, 2008. IEEE Fourth International Conference on eScience ’08, pp 478–484

Kijsipongse E, Assawamekin N (2014) Improving the communication performance of distributed animation rendering using bittorrent file system. J Syst Softw 97(C):178–191

Kijsipongse E, U-ruekolan S (2013) Scaling HPC clusters with volunteer computing for data intensive applications. In: Computer Science and Software Engineering (JCSSE), 2013 10th International Joint Conference, pp 138–142

Kondo D, Fedak G, Cappello F, Chien AA, Casanova H (2007) Characterizing resource availability in enterprise desktop grids. Future Gen Comput Syst 23(7):888–903

Konečný J, McMahan HB, Yu FX, Richtárik P, Suresh AT, Bacon D (2016) Federated learning: strategies for improving communication efficiency. In: NIPS Workshop on Private Multi-Party Machine Learning

Korambath P, Wang J, Kumar A, Hochstein L, Schott B, Graybill RB, Baldea M, Davis J (2014) Deploying Kepler workflows as services on a cloud infrastructure for smart manufacturing. In: Proceedings of the International Conference on Computational Science, ICCS 2014, pp 2254–2259

Kovács J, Marosi AC, Visegrádi A, Farkas Z, Kacsuk P, Lovas R (2015) Boosting gLite with cloud augmented volunteer computing. Future Gen Comput Syst 43(C):12–23

Krizhevsky A, Sutskever I, Hinton GE (2012) ImageNet classification with deep convolutional neural networks. In: Pereira F, Burges CJC, Bottou L, Weinberger KQ (eds) Advances in neural information processing systems, pp 1097–1105

Lee K, Son M (2017) DeepSpotCloud: leveraging cross-region GPU spot instances for deep learning. In: 2017 IEEE 10th International Conference on Cloud Computing (CLOUD), pp 98–105

Li M, Andersen DG, Park JW, Smola AJ, Ahmed A, Josifovski V, Long J, Shekita EJ, Su BY (2014) Scaling distributed machine learning with the parameter server. In: Proceedings of the 11th USENIX Conference on Operating Systems Design and Implementation, OSDI’14, pp 583–598

Lin M, Chen Q, Yan S (2014) Network in network. In: Proceedings of the International Conference on Learning Representations (ICLR)

Ludäscher B, Altintas I, Berkley C, Higgins D, Jaeger E, Jones M, Lee EA, Tao J, Zhao Y (2006) Scientific workflow management and the Kepler system: research articles. Concurr Comput Pract Exp 18(10):1039–1065

Moritz P, Nishihara R, Stoica I, Jordan MI (2016) SparkNet: training deep networks in Spark. In: International Conference on Learning Representations (ICLR)

Myers DS, Bazinet AL, Cummings MP (2007) Expanding the reach of grid computing: combining globus- and BOINC-based systems. In: Zomaya AY, Talbi EG (eds) Grid computing for bioinformatics and computational biology. Wiley, New York, pp 71–85

SETI (2017) SETI@home. http://setiathome.berkeley.edu/. Accessed 20 Mar 2017

Shehab M, Al-Ayyoub M, Jararweh Y, Jarrah M (2017) Accelerating compute-intensive image segmentation algorithms using GPUs. J Supercomput 73(5):1929–1951

Shrivastava D, Chaudhury S, Jayadeva D (2017) A data and model-parallel, distributed and scalable framework for training of deep networks in Apache Spark. https://arxiv.org/abs/1708.05840

Szegedy C, Liu W, Jia Y, Sermanet P, Reed SE, Anguelov D, Erhan D, Vanhoucke V, Rabinovich A (2015) Going deeper with convolutions. In: IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2015

Torque (2017) Torque resource manager. http://www.adaptivecomputing.com/products/open-source/torque/. Accessed 20 Mar 2017

Urbah E, Kacsuk P, Farkas Z, Fedak G, Kecskemeti G, Lodygensky O, Marosi A, Balaton Z, Caillat G, Gombas G, Kornafeld A, Kovacs J, He H, Lovas R (2009) EDGeS: bridging EGEE to BOINC and XtremWeb. J Grid Comput 7:335–354

Vouzis PD, Sahinidis NV (2011) GPU-BLAST: using graphics processors to accelerate protein sequence alignment. Bioinformatics 27(2):182–188

Wang J, Altintas I (2012) Early cloud experiences with the Kepler scientific workflow system. In: Proceedings of the International Conference on Computational Science, ICCS 2012, pp 1630–1634

Wang Y, Zhang L, Ren Y, Zhang W (2017) Nexus: bringing efficient and scalable training to deep learning frameworks. In: 2017 IEEE 25th International Symposium on Modeling, Analysis, and Simulation of Computer and Telecommunication Systems (MASCOTS), pp 12–21

Wingstrom J, Casanova H (2008) Probabilistic allocation of tasks on desktop grids. In: Parallel and Distributed Processing, 2008. IPDPS 2008. IEEE International Symposium

Zhang W, Gupta S, Lian X, Liu J (2016) Staleness-aware async-SGD for distributed deep learning. In: Proceedings of the Twenty-Fifth International Joint Conference on Artificial Intelligence (IJCAI), pp 2350–2356

Acknowledgements

The authors acknowledge the National e-Science Infrastructure Consortium for providing partial computing resources that have contributed to the research results reported within this paper.

Cite this article

Kijsipongse, E., Piyatumrong, A. & U-ruekolan, S. A hybrid GPU cluster and volunteer computing platform for scalable deep learning. J Supercomput 74, 3236–3263 (2018). https://doi.org/10.1007/s11227-018-2375-9

DOI: https://doi.org/10.1007/s11227-018-2375-9