Abstract

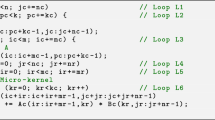

General matrix–matrix multiplication (GEMM) is a core building block for implementing the Basic Linear Algebra Subprograms (BLAS). This paper presents a methodology for automatically producing GEMM kernels, written with AVX-512 intrinsic functions, that are tuned for the Intel Xeon Phi processor code-named Knights Landing (KNL) and for Intel Skylake-SP processors. The latest manycore processors have complex levels of parallelism and deep cache hierarchies, so it is not easy to find the best combination of optimization techniques for a given application. Our approach produces matrix multiplication kernels through heuristic auto-tuning: it generates multiple candidate kernels and selects the fastest ones through performance tests. The tuning parameters include the sizes of the block matrices for registers and caches, the prefetch distances, and the loop unrolling depth. We also investigate parameters for multithreaded execution, such as which loops to parallelize and the optimal number of threads for each of those loops. Finally, we present a method that reduces the parameter search space based on our previous research results.
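The generate-and-measure loop described in the abstract can be illustrated with a minimal sketch. This is not the paper's implementation: it is a pure-Python stand-in in which the only tuning parameters are three block sizes, whereas the actual kernels use AVX-512 intrinsics and also tune prefetch distances, unrolling depth, and threading. The names `blocked_gemm`, `autotune`, `mb`, `nb`, and `kb` are hypothetical and chosen only for illustration.

```python
import itertools
import time


def naive_gemm(a, b, n):
    """Reference C = A * B for n x n matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def blocked_gemm(a, b, n, mb, nb, kb):
    """C = A * B computed block by block (hypothetical block sizes mb, nb, kb)."""
    c = [[0.0] * n for _ in range(n)]
    for i0 in range(0, n, mb):
        for k0 in range(0, n, kb):
            for j0 in range(0, n, nb):
                # Multiply one mb x kb block of A by one kb x nb block of B.
                for i in range(i0, min(i0 + mb, n)):
                    row_c, row_a = c[i], a[i]
                    for k in range(k0, min(k0 + kb, n)):
                        aik, row_b = row_a[k], b[k]
                        for j in range(j0, min(j0 + nb, n)):
                            row_c[j] += aik * row_b[j]
    return c


def autotune(n, block_sizes, repeats=3):
    """Time every (mb, nb, kb) candidate; return the fastest and its time."""
    a = [[float(i + j) for j in range(n)] for i in range(n)]
    b = [[float(i - j) for j in range(n)] for i in range(n)]
    best = None
    for mb, nb, kb in itertools.product(block_sizes, repeat=3):
        elapsed = float("inf")
        for _ in range(repeats):
            # Keep the minimum over several runs to reduce timing noise.
            start = time.perf_counter()
            blocked_gemm(a, b, n, mb, nb, kb)
            elapsed = min(elapsed, time.perf_counter() - start)
        if best is None or elapsed < best[1]:
            best = ((mb, nb, kb), elapsed)
    return best


if __name__ == "__main__":
    config, seconds = autotune(n=48, block_sizes=(8, 16, 48))
    print("fastest block sizes (mb, nb, kb):", config, "time:", seconds)
```

The exhaustive `itertools.product` search grows quickly with the number of parameters, which is why a method for pruning the search space, as the abstract mentions, matters in practice; this sketch makes no attempt at such pruning.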

Acknowledgements

The work was supported by the Next-Generation Information Computing Development Program through the National Research Foundation of Korea (NRF) funded by the Korea government (MSIT) (NRF-2015M3C4A7065662).

Cite this article

Lim, R., Lee, Y., Kim, R. et al. Auto-tuning GEMM kernels on the Intel KNL and Intel Skylake-SP processors. J Supercomput 75, 7895–7908 (2019). https://doi.org/10.1007/s11227-018-2702-1

DOI: https://doi.org/10.1007/s11227-018-2702-1