Abstract

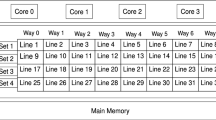

Although the shared last-level cache (SLLC) occupies a significant portion of the die area of a multicore CPU, more than 59% of SLLC cache blocks are not reused during their lifetime. If these useless blocks can be filtered out of the SLLC, its size can be reduced without sacrificing performance. To this end, we classify the reuse of cache blocks into temporal and spatial reuse and analyze it further using reuse interval and reuse count. Our experiments show that most spatially reused cache blocks are reused only once, with a short reuse interval, so managing them in the SLLC is inefficient. In this paper, we propose adding a small cache, called Filter Cache, to the SLLC; it not only checks for temporal reuse but also prevents spatially reused blocks from entering the SLLC. As a result, the SLLC holds no data for non-reused or spatially reused blocks, which dramatically reduces its size. Through detailed simulation on the PARSEC benchmarks, we show that our SLLC design with Filter Cache achieves performance comparable to a conventional SLLC while using only 24.21% of the SLLC area across a variety of workloads. This is achieved through the faster access and high reuse rate of the small SLLC with Filter Cache.
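The two metrics named in the abstract, reuse count and reuse interval, can be illustrated on a toy access trace. The sketch below is not the authors' methodology: it assumes a trace is a plain list of block addresses, that a block's reuse count is the number of accesses after its first, and that a reuse interval is the distance in trace positions between consecutive accesses to the same block. It also treats the whole trace as a single lifetime per block and ignores evictions. The function names `reuse_stats` and `non_reused_fraction` are hypothetical.

```python
from collections import defaultdict


def reuse_stats(trace):
    """Map each block to (reuse_count, reuse_intervals) for a list of block addresses.

    Assumption: the whole trace is one lifetime per block (no evictions modeled).
    """
    last_seen = {}                  # block -> position of its most recent access
    counts = defaultdict(int)       # block -> number of accesses after the first
    intervals = defaultdict(list)   # block -> gaps between consecutive accesses
    for position, block in enumerate(trace):
        if block in last_seen:
            counts[block] += 1
            intervals[block].append(position - last_seen[block])
        last_seen[block] = position
    return {block: (counts[block], intervals[block]) for block in last_seen}


def non_reused_fraction(stats):
    """Fraction of distinct blocks that were never accessed a second time."""
    if not stats:
        return 0.0
    never_reused = sum(1 for count, _ in stats.values() if count == 0)
    return never_reused / len(stats)


# Toy trace: block 0xA is reused twice, the other three blocks never.
trace = [0xA, 0xB, 0xA, 0xC, 0xD, 0xA]
stats = reuse_stats(trace)
print(stats[0xA])                  # (2, [2, 3])
print(non_reused_fraction(stats))  # 0.75
```

In this toy trace three of the four distinct blocks are never reused, which is the kind of block the abstract argues should be kept out of the SLLC.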

Acknowledgements

The author(s) disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: This research was supported by Basic Science Research Program through the National Research Foundation of Korea (NRF) and funded by the Ministry of Science, ICT and Future Planning (NRF-2017R1A2B2009641). This research was also supported by the MSIP (Ministry of Science, ICT and Future Planning), Korea, under the ITRC (Information Technology Research Center) support program (IITP-2019-2015-0-00363) supervised by the IITP (Institute for Information & Communications Technology Promotion). This research was supported by Korea University.

Cite this article

Bae, H.J., Choi, L. Filter cache: filtering useless cache blocks for a small but efficient shared last-level cache. J Supercomput 76, 7521–7544 (2020). https://doi.org/10.1007/s11227-020-03177-2

DOI: https://doi.org/10.1007/s11227-020-03177-2