Abstract

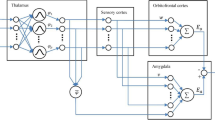

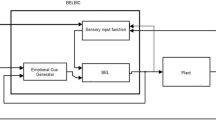

Biologically inspired controllers have shown considerable success in several applications, particularly in situations involving disturbances and uncertainties in the system dynamics. In recent years, a growing body of work on the emotional learning that occurs in the human brain has given rise to new theories and applications in control engineering, among which the BELBIC (Brain Emotional Learning-Based Intelligent Controller) stands out. However, designing and commissioning this type of controller remains a major challenge for researchers, because it requires defining the characteristic input signals of the system (stimuli), which can vary from one application to another. This work presents a methodology for constructing architectures for BELBIC stimulus signals based on DRL (Deep Reinforcement Learning) techniques. DRL makes it possible to extract characteristic patterns from system dynamics that may be high-dimensional and nonlinear, as is the case in most problems involving real-world dynamic systems. The resulting controller model is validated on an inverted pendulum system, demonstrating a new approach to BELBIC architectures that achieves greater generalization across applications and provides a viable alternative to the traditional models in use.
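For readers unfamiliar with how the stimulus signals enter BELBIC, the sketch below shows the controller's core learning loop in the form commonly stated in the BELBIC literature: amygdala outputs `A_i = S_i * V_i`, orbitofrontal outputs `O_i = S_i * W_i`, model output `MO = sum(A_i) - sum(O_i)`, and the updates `dV_i = alpha * S_i * max(0, REW - sum(A_j))` and `dW_i = beta * S_i * (MO - REW)`. It is a minimal sketch under stated assumptions, not the authors' implementation: in this work the stimuli are produced by DRL networks, whereas here the stimulus vector `s` and the reward `rew` are plain inputs. The class name, method names, and the learning rates `alpha` and `beta` are hypothetical, and the thalamic pathway of the underlying brain model is omitted.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class BELBIC:
    """Minimal BELBIC core: amygdala (V) and orbitofrontal (W) gains.

    Hypothetical sketch; the stimulus vector s and reward rew are supplied
    by the caller (in the article, the stimuli come from DRL networks).
    """
    n_inputs: int
    alpha: float = 0.1  # amygdala learning rate (assumed value)
    beta: float = 0.05  # orbitofrontal learning rate (assumed value)
    v: List[float] = field(default_factory=list)  # amygdala gains V_i
    w: List[float] = field(default_factory=list)  # orbitofrontal gains W_i

    def __post_init__(self) -> None:
        # Start from zero gains unless initial gains are given; copy to avoid aliasing.
        self.v = list(self.v) if self.v else [0.0] * self.n_inputs
        self.w = list(self.w) if self.w else [0.0] * self.n_inputs
        if len(self.v) != self.n_inputs or len(self.w) != self.n_inputs:
            raise ValueError("gain vectors must have n_inputs entries")

    def _amygdala(self, s: List[float]) -> float:
        # sum_i A_i with A_i = S_i * V_i
        return sum(si * vi for si, vi in zip(s, self.v))

    def _orbitofrontal(self, s: List[float]) -> float:
        # sum_i O_i with O_i = S_i * W_i
        return sum(si * wi for si, wi in zip(s, self.w))

    def output(self, s: List[float]) -> float:
        # Model output MO = sum_i A_i - sum_i O_i, used as the control signal
        return self._amygdala(s) - self._orbitofrontal(s)

    def step(self, s: List[float], rew: float) -> float:
        """Return MO for stimulus s, then adapt V and W using reward rew."""
        a_sum = self._amygdala(s)
        mo = a_sum - self._orbitofrontal(s)
        # dV_i = alpha * S_i * max(0, REW - sum_j A_j): amygdala learning stops
        # once its output reaches the reward, so the amygdala never unlearns.
        amyg_err = max(0.0, rew - a_sum)
        # dW_i = beta * S_i * (MO - REW): the orbitofrontal cortex corrects
        # the mismatch between the model output and the reward.
        ofc_err = mo - rew
        for i, si in enumerate(s):
            self.v[i] += self.alpha * si * amyg_err
            self.w[i] += self.beta * si * ofc_err
        return mo


# Usage: one control period with a two-element stimulus (illustrative values).
ctrl = BELBIC(n_inputs=2)
u = ctrl.step([0.5, -0.2], rew=1.0)
print(u, ctrl.v, ctrl.w)
```

Calling `step` once per control period returns the control signal from the current gains and only then adapts them, so the output never depends on the update it triggers. In the architecture described above, a stimulus generator (here left out) would sit in front of `s`.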

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

About this article

Cite this article

Silva, J., Aquino, R., Ferreira, A. et al. Deep brain emotional learning-based intelligent controller applied to an inverted pendulum system. J Supercomput 78, 8346–8366 (2022). https://doi.org/10.1007/s11227-021-04200-w

DOI: https://doi.org/10.1007/s11227-021-04200-w