Abstract

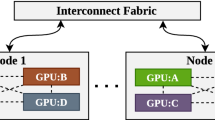

Synchronous algorithms with data parallelism have been successfully used to accelerate the distributed training of deep neural networks (DNNs). However, a common shortcoming of synchronous methods is wasted computation, caused both by workers with different performance waiting for one another and by the communication delay at each synchronization. To alleviate this drawback, we propose a novel method, free local stochastic gradient descent (FLSGD) with parallel synchronization, which eliminates the waiting and communication overhead. Specifically, distributed DNN training is first modeled as a pipeline consisting of three components: dataset partition, local SGD, and parameter updating. Then, a novel adaptive batch size and dataset partition method based on the computational performance of each node is employed to eliminate waiting time by keeping the load of distributed DNN training balanced. Local SGD and parameter updating (including gradient synchronization) are run in parallel, which hides the communication cost through a one-step gradient delay, and the resulting staleness problem is remedied by an appropriate approximation. To the best of our knowledge, this is the first work to address both load balancing and communication overhead in distributed training. Extensive experiments with four state-of-the-art DNN models on two image classification datasets (CIFAR10 and CIFAR100) demonstrate that FLSGD outperforms the synchronous methods.
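The abstract names two mechanisms without giving their formulas: splitting data and batch sizes according to each node's computational performance, and overlapping gradient synchronization with local computation through a one-step gradient delay. The toy sketch below illustrates both under our own assumptions and is not the authors' implementation. The per-worker speeds (`throughputs`), the proportional split, the least-squares objective, and the helper names `proportional_batch_sizes` and `delayed_parallel_sgd` are all hypothetical. Workers are simulated sequentially in one process, one gradient is averaged per synchronization (no multi-step local updates), and the paper's staleness approximation is not reproduced; the plain delayed update is used instead.

```python
"""Toy sketch of two ideas from the FLSGD abstract; NOT the authors' code.

Hypothetical assumptions made for illustration only:
- `throughputs` holds each worker's measured speed (samples per second);
- shards and per-step batch sizes are split in proportion to that speed;
- the averaged gradient from step t-1 is applied at step t (one-step delay),
  so its all-reduce could overlap with step t's local computation;
- workers are simulated sequentially; no real communication happens, and the
  paper's staleness approximation is not reproduced.
"""
from typing import List

import numpy as np


def proportional_batch_sizes(throughputs: List[float], total: int) -> List[int]:
    """Split `total` samples across workers in proportion to their throughput."""
    speed_sum = sum(throughputs)
    raw = [total * t / speed_sum for t in throughputs]
    sizes = [int(r) for r in raw]  # floor of each ideal share
    # Give the leftover samples to the workers with the largest fractional part.
    leftover = total - sum(sizes)
    order = sorted(range(len(raw)), key=lambda i: raw[i] - sizes[i], reverse=True)
    for i in order[:leftover]:
        sizes[i] += 1
    return sizes


def delayed_parallel_sgd(X: np.ndarray, y: np.ndarray, throughputs: List[float],
                         global_batch: int = 64, lr: float = 0.05,
                         steps: int = 500, seed: int = 0) -> np.ndarray:
    """Least-squares SGD with load-balanced batches and a one-step gradient delay."""
    rng = np.random.default_rng(seed)
    w = np.zeros(X.shape[1])
    # Dataset partition: contiguous shards sized by throughput.
    bounds = np.cumsum([0] + proportional_batch_sizes(throughputs, len(X)))
    shards = [np.arange(bounds[k], bounds[k + 1]) for k in range(len(throughputs))]
    # Adaptive batch size: faster workers process more samples per step.
    batch_sizes = proportional_batch_sizes(throughputs, global_batch)
    in_flight = None  # averaged gradient whose synchronization is "in progress"
    for _ in range(steps):
        grad_sum = np.zeros_like(w)
        for shard, b in zip(shards, batch_sizes):
            idx = rng.choice(shard, size=b, replace=False)
            residual = X[idx] @ w - y[idx]
            grad_sum += X[idx].T @ residual  # sum of per-sample gradients of 0.5 * r**2
        current = grad_sum / global_batch  # sample-weighted average over all workers
        if in_flight is not None:
            w = w - lr * in_flight  # apply the previous step's (stale) gradient
        in_flight = current
    return w


if __name__ == "__main__":
    data_rng = np.random.default_rng(1)
    X = data_rng.normal(size=(1200, 5))
    w_true = data_rng.normal(size=5)
    w = delayed_parallel_sgd(X, X @ w_true, throughputs=[1.0, 2.0, 1.0])
    print("max abs error:", np.abs(w - w_true).max())
```

Summing per-sample gradients across workers and dividing by the global batch size keeps each update equal to the average over one global mini-batch, even though faster workers process more samples per step. In a real system the delayed gradient would be produced by an asynchronous all-reduce running while the next local step computes; here that overlap is only mimicked by applying `in_flight` one iteration late.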

Change history

29 March 2022

A Correction to this paper has been published: https://doi.org/10.1007/s11227-022-04440-4

Funding

This work is supported by the Key Program of National Science Foundation of China (Grant No. 61836006), and the 111 Project under grant B21044.

Additional information

The original online version of this article was revised: The Funding information section was missing. Information on Fig. 6 was corrected.

About this article

Cite this article

Ye, Q., Zhou, Y., Shi, M. et al. FLSGD: free local SGD with parallel synchronization. J Supercomput 78, 12410–12433 (2022). https://doi.org/10.1007/s11227-021-04267-5

DOI: https://doi.org/10.1007/s11227-021-04267-5