Abstract

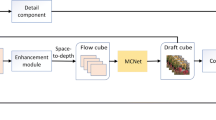

The video super-resolution (VSR) task is to generate a high-resolution (HR) frame from the corresponding low-resolution (LR) frame and multiple neighboring frames. An important step in VSR is fusing the features of the reference frame with those of the supporting frames. Existing VSR methods do not take full advantage of the information provided by distant neighboring frames and usually fuse this information in a single stage. In this paper, we propose a deep fusion video super-resolution network based on temporal grouping. We divide the input sequence into groups according to different frame rates to provide more accurate supplementary information, and our method aggregates temporal-spatial information at different fusion stages. First, we group the input sequence; the temporal-spatial information is then extracted and fused hierarchically, and these groups are used to recover the information lost in the reference frame. Second, we integrate the information within each group to generate group-wise features and then perform multi-stage fusion. In this way, the information of the reference frame is fully utilized, resulting in better recovery of video details. Finally, an upsampling module generates the HR frames. We conduct comprehensive comparative experiments on the Vid4, SPMC-11 and Vimeo-90K-T datasets, and the results show that the proposed method performs favorably against state-of-the-art methods.
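The grouping step can be illustrated with a small sketch. It assumes an odd-length input window (for example, 7 LR frames) whose centre frame is the reference, and it assumes that each group pairs the reference frame with its two neighbors at the same temporal distance d, so that group d corresponds to sampling the sequence at 1/d of the original frame rate. The abstract does not give the exact grouping rule, so `temporal_groups` and `group_frames` are hypothetical names, and the intra-group and multi-stage fusion, which are learned network modules, are not modelled here.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def temporal_groups(num_frames: int) -> List[List[int]]:
    """Return frame-rate groups for an odd-length window centred on the reference.

    Hypothetical helper (assumed scheme): group d, for d = 1 .. num_frames // 2,
    holds the reference (centre) frame and its two neighbours at temporal
    distance d, i.e. the window sub-sampled at 1/d of the original frame rate.
    """
    if num_frames < 3 or num_frames % 2 == 0:
        raise ValueError("expected an odd window of at least 3 frames")
    ref = num_frames // 2  # index of the reference frame
    return [[ref - d, ref, ref + d] for d in range(1, ref + 1)]


def group_frames(frames: Sequence[T]) -> List[List[T]]:
    """Apply temporal_groups to an actual list of frames (or frame features)."""
    return [[frames[i] for i in group] for group in temporal_groups(len(frames))]


if __name__ == "__main__":
    window = [f"LR_{t}" for t in range(-3, 4)]  # 7 LR frames, LR_0 is the reference
    for d, group in enumerate(group_frames(window), start=1):
        print(f"rate 1/{d}: {group}")
```

For a 7-frame window this sketch yields the groups (2, 3, 4), (1, 3, 5) and (0, 3, 6). In this assumed scheme every group shares the reference frame, so each group-wise feature can be related back to it, while the more distant groups cover larger temporal offsets.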

Acknowledgements

This work was supported by the National Natural Science Foundation of China (Grant No. 61703196), the Key Science Foundation of Zhangzhou City (Grant Nos. ZZ2019ZD11 and ZZ2021J23) and the Natural Science Foundation of Fujian Province (Grant Nos. 2020J01813 and 2020J01821).

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Chen, Z., Yang, W. & Yang, J. Deeply feature fused video super-resolution network using temporal grouping. J Supercomput 78, 8999–9016 (2022). https://doi.org/10.1007/s11227-021-04299-x

DOI: https://doi.org/10.1007/s11227-021-04299-x