Abstract

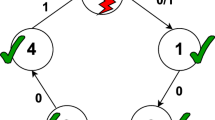

Fault tolerance techniques are essential on exascale high-performance computing (HPC) systems, but they face many challenges, such as a higher probability of failure, more complex types of faults, and greater difficulty in failure detection. In this paper, we design the Fail-Lagging model to describe process-level failures in HPC. The model does not distinguish whether a failed process has crashed or is merely slow; instead, it accommodates the behaviors a process may exhibit under various failures, such as crashing, slowing down, and recovering. Failure detection in the Fail-Lagging model is implemented by local detection and global decision among processes, both of which depend on a robust and efficient communication topology. Robust means that failed processes do not easily break the connectivity of the topology, and efficient means that the time complexity of collective communication over the topology is as low as possible. For this purpose, we design a torus-tree topology for failure detection that remains scalable even with an extremely large number of processes. The Fail-Lagging model supports common fault tolerance methods such as rollback, replication, redundancy, and algorithm-based fault tolerance, and it is particularly well suited to efficient forward recovery. We demonstrate with large-scale experiments that the torus-tree failure detection algorithm is robust and efficient, and we apply fault tolerance based on the Fail-Lagging model to iterative computation, enabling applications to react to faults in a timely manner.
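As a rough illustration of the two-phase idea summarized above (local detection followed by a global decision), the following Python sketch simulates one detection round. It is not the paper's algorithm: a simple ring stands in for the torus-tree topology, which the abstract does not specify, and the global decision is modeled as a plain set union rather than a collective operation. All function names, the heartbeat table `last_seen`, and the `timeout` value are hypothetical.

```python
from typing import Dict, List, Set


def ring_neighbors(rank: int, size: int) -> List[int]:
    """Placeholder topology (assumption): each rank observes its ring neighbours."""
    return sorted({(rank - 1) % size, (rank + 1) % size} - {rank})


def local_detect(rank: int, last_seen: Dict[int, float], now: float,
                 timeout: float, size: int) -> Set[int]:
    """Local detection: suspect any observed neighbour that has been silent too long.

    A crashed process and a slow process look the same here, which mirrors
    the 'lagging' notion of not distinguishing the two.
    """
    return {n for n in ring_neighbors(rank, size)
            if now - last_seen[n] > timeout}


def global_decide(local_suspects: Dict[int, Set[int]]) -> Set[int]:
    """Global decision (simplified): union of all local suspicion sets."""
    decided: Set[int] = set()
    for suspects in local_suspects.values():
        decided |= suspects
    return decided


if __name__ == "__main__":
    size, now, timeout = 8, 10.0, 2.0
    last_seen = {r: now for r in range(size)}
    last_seen[3] = 4.0  # hypothetical: rank 3 crashed at t = 4
    last_seen[6] = 7.5  # hypothetical: rank 6 is running slowly
    # Only responsive ranks can run detection and report their suspicions.
    responsive = [r for r in range(size) if now - last_seen[r] <= timeout]
    reports = {r: local_detect(r, last_seen, now, timeout, size)
               for r in responsive}
    print(sorted(global_decide(reports)))
```

In this toy run, ranks 3 and 6 are both reported as lagging, even though one has crashed and the other is only slow. A real implementation would replace the union with a collective operation over a fault-tolerant topology.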

Acknowledgements

The authors would like to thank Jianfeng Zheng for interesting discussions related to this work. This work is partially supported by Key-Area Research and Development of Guangdong Province (No. 2021B0101190003) and by the Guangdong Province Key Laboratory of Computational Science at Sun Yat-sen University (No. 2020B1212060032).

Cite this article

Ye, Y., Zhang, Y. & Ye, W. Failure detection algorithm for Fail-Lagging model applied to HPC. J Supercomput 78, 14009–14033 (2022). https://doi.org/10.1007/s11227-022-04347-0

DOI: https://doi.org/10.1007/s11227-022-04347-0