Abstract

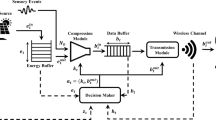

We investigate an energy-harvesting IoT device transmitting delay- and jitter-sensitive data over a wireless fading channel. The device's sensing module injects captured event packets into a transmission buffer and relies on the random supply of energy harvested from the environment to transmit them. Given the limited harvested energy, our goal is to compute optimal transmission control policies that decide how many packets should be transmitted from the head of the buffer in each discrete timeslot, such that a long-run criterion involving the average delay/jitter is either minimized or never exceeds a pre-specified threshold. We realistically assume that no advance knowledge is available about the random processes underlying the channel variations, captured events, or harvested energy dynamics. Instead, we use a suite of Q-learning-based techniques from reinforcement learning theory to optimize the transmission policy in a model-free fashion. In particular, we develop three Q-learning algorithms: a constrained Markov decision process (CMDP)-based algorithm for optimizing energy consumption under a delay constraint; an MDP-based algorithm for minimizing the average delay under the limitations imposed by the energy harvesting process; and a variance-penalized MDP-based algorithm for minimizing a linear combination of delay and delay variation. Extensive numerical results are presented for performance evaluation.
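To make the model-free setting concrete, the following is a minimal sketch of tabular Q-learning for packet transmission control. It is not the paper's algorithm: it uses a discounted cost rather than a long-run average criterion, omits the delay constraint and the variance penalty, and takes the buffer backlog as a rough proxy for delay. The capacities, energy costs, arrival and harvesting distributions, and all function names are hypothetical choices made for illustration only.

```python
import random

# All numbers below are illustrative assumptions, not values from the paper.
B_MAX, E_MAX = 5, 5            # buffer capacity (packets), battery capacity (units)
ENERGY_PER_PACKET = (1, 2)     # energy per packet in channel state 0 (good) / 1 (bad)
GAMMA, ALPHA, EPS = 0.95, 0.1, 0.1   # discount factor, learning rate, exploration rate

def feasible_actions(state):
    """Packets that can be sent: limited by the backlog and the stored energy."""
    b, e, h = state
    return range(min(b, e // ENERGY_PER_PACKET[h]) + 1)

def step(state, a, rng):
    """Simulated environment; the learner never inspects these dynamics."""
    b, e, h = state
    arrivals = rng.choice((0, 1, 2))   # hypothetical event arrivals
    harvest = rng.choice((0, 1, 2))    # hypothetical harvested energy
    overflow = max(0, b - a + arrivals - B_MAX)
    cost = b + 10 * overflow           # backlog (delay proxy) plus drop penalty
    b_next = min(B_MAX, b - a + arrivals)
    e_next = min(E_MAX, e - a * ENERGY_PER_PACKET[h] + harvest)
    h_next = rng.randrange(len(ENERGY_PER_PACKET))  # i.i.d. channel, for simplicity
    return (b_next, e_next, h_next), cost

def q_learning(steps=200_000, seed=0):
    """Epsilon-greedy tabular Q-learning that minimizes the discounted cost."""
    rng = random.Random(seed)
    Q = {}                             # (state, action) -> estimated cost-to-go
    state = (0, 0, 0)
    for _ in range(steps):
        acts = list(feasible_actions(state))
        if rng.random() < EPS:
            a = rng.choice(acts)       # explore
        else:
            a = min(acts, key=lambda x: Q.get((state, x), 0.0))  # exploit
        nxt, cost = step(state, a, rng)
        best_next = min(Q.get((nxt, x), 0.0) for x in feasible_actions(nxt))
        old = Q.get((state, a), 0.0)
        Q[(state, a)] = old + ALPHA * (cost + GAMMA * best_next - old)
        state = nxt
    return Q

def greedy_action(Q, state):
    """Number of packets the learned policy sends in the given state."""
    return min(feasible_actions(state), key=lambda x: Q.get((state, x), 0.0))
```

Calling `Q = q_learning()` and then `greedy_action(Q, (b, e, h))` returns the number of packets the learned policy would send for backlog `b`, battery level `e`, and channel state `h`. Because the update uses only observed transitions and costs, no model of the channel, arrival, or harvesting processes is needed.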

Funding

No funding was received to assist with the preparation of this manuscript.

Ethics declarations

Conflict of interest

All authors declare that they have no conflicts of interest relevant to the content of this article.

Data availability

Data sharing is not applicable, as no new data were generated.

Cite this article

Malekijou, H., Hakami, V., Javan, N.T. et al. Q-learning-based algorithms for dynamic transmission control in IoT equipment. J Supercomput 79, 75–108 (2023). https://doi.org/10.1007/s11227-022-04643-9

DOI: https://doi.org/10.1007/s11227-022-04643-9