Abstract

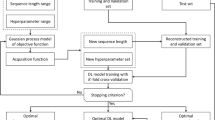

Sanitary sewer overflows caused by excessive rainfall-derived infiltration and inflow are a major challenge currently faced by municipal administrations; the ability to predict the wastewater state of a sanitary sewer system accurately and in advance is therefore especially important. In this paper, we present the Sparse Autoencoder-based Bidirectional Long Short-Term Memory (SAE-BLSTM) network model, which combines a Sparse Autoencoder (SAE) with a Bidirectional Long Short-Term Memory (BLSTM) network to predict the wastewater flow rate in a sanitary sewer system. The model consists of three segments: data preprocessing, the SAE network, and the BLSTM network. The SAE reduces the dimensionality of the high-dimensional original input features and extracts sparse latent features from them. The latent features extracted by the SAE hidden layer are concatenated with smoothed historical wastewater flow rate features to form an augmented historical feature vector that supports more accurate prediction of the wastewater flow rate. This augmented feature vector is fed to the BLSTM network to predict the future wastewater flow rate. The model thus combines two capabilities: the SAE's low-dimensional nonlinear representation of the original input features and the BLSTM's time-series prediction of the wastewater flow rate. We conducted extensive experiments on the SAE-BLSTM model using real-world hydrological time-series datasets, with advanced SVM, FCN, GRU, LSTM, and BLSTM models as comparison baselines. The experimental results show that the proposed SAE-BLSTM model consistently outperforms these baselines. Specifically, we used a three-month period of our dataset to train and test the SAE-BLSTM model, which yielded the lowest RMSE (242.55) and MAE (179.05) and the highest R² (0.99626) among the compared models.
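The abstract describes the pipeline only in prose. The dependency-free Python sketch below illustrates, under stated assumptions, the data-side steps around the two networks and the reported metrics. It does not implement the SAE or BLSTM themselves. The KL-divergence sparsity penalty is the form commonly used to train sparse autoencoders and is assumed here, since the abstract does not state the SAE loss; the trailing moving average is a hypothetical choice of smoother; the sparsity target, window length, and look-back length are placeholders; and all function names are hypothetical. RMSE, MAE, and R² follow their standard definitions.

```python
import math
from typing import List, Sequence, Tuple


def kl_sparsity_penalty(mean_activations: Sequence[float], rho: float = 0.05) -> float:
    """Sum of KL(rho || rho_hat_j) over hidden units (assumed SAE sparsity term).

    mean_activations are the average activations rho_hat_j of each hidden unit
    over a batch; they must lie strictly inside (0, 1), e.g. sigmoid outputs.
    The target rho = 0.05 is an illustrative placeholder, not the paper's value.
    """
    total = 0.0
    for rho_hat in mean_activations:
        total += rho * math.log(rho / rho_hat)
        total += (1.0 - rho) * math.log((1.0 - rho) / (1.0 - rho_hat))
    return total


def moving_average(series: Sequence[float], window: int = 3) -> List[float]:
    """Trailing moving average: a hypothetical stand-in for the smoothing step."""
    smoothed = []
    for t in range(len(series)):
        chunk = series[max(0, t - window + 1) : t + 1]
        smoothed.append(sum(chunk) / len(chunk))
    return smoothed


def augment(latent: Sequence[Sequence[float]], smoothed_flow: Sequence[float]) -> List[List[float]]:
    """Append each time step's smoothed flow value to that step's SAE latent code."""
    if len(latent) != len(smoothed_flow):
        raise ValueError("latent codes and flow series must be aligned in time")
    return [list(z) + [q] for z, q in zip(latent, smoothed_flow)]


def make_windows(
    features: Sequence[Sequence[float]], target: Sequence[float], lookback: int
) -> Tuple[List[List[List[float]]], List[float]]:
    """Pair the previous `lookback` feature vectors with the next flow value."""
    inputs, labels = [], []
    for t in range(lookback, len(features)):
        inputs.append([list(f) for f in features[t - lookback : t]])
        labels.append(target[t])
    return inputs, labels


def rmse(y: Sequence[float], y_hat: Sequence[float]) -> float:
    """Root mean squared error."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(y, y_hat)) / len(y))


def mae(y: Sequence[float], y_hat: Sequence[float]) -> float:
    """Mean absolute error."""
    return sum(abs(a - b) for a, b in zip(y, y_hat)) / len(y)


def r2(y: Sequence[float], y_hat: Sequence[float]) -> float:
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_y = sum(y) / len(y)
    ss_res = sum((a - b) ** 2 for a, b in zip(y, y_hat))
    ss_tot = sum((a - mean_y) ** 2 for a in y)
    if ss_tot == 0.0:
        raise ValueError("R^2 is undefined for a constant target series")
    return 1.0 - ss_res / ss_tot
```

In a full model, `latent` would come from the trained SAE hidden layer, and each element of `inputs` returned by `make_windows` would be one input sequence for the BLSTM; the smoother and the three numeric settings would be replaced by whatever the paper actually uses.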

Data availability

Not applicable.

Code availability

Not applicable.

Acknowledgements

This research was supported by the Chung-Ang University Young Scientist Scholarship in 2021, by Korea Environment Industry & Technology Institute (KEITI) through Public Technology Program based on Environmental Policy (Project No: 2018000700005) funded by Korea Ministry of Environment (MOE), and by National Research Foundation of Korea (NRF) grant funded by the Korea government (MSIT) (No. 2021R1A2C1009735).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Huang, J., Yang, S., Li, J. et al. Prediction model of sparse autoencoder-based bidirectional LSTM for wastewater flow rate. J Supercomput 79, 4412–4435 (2023). https://doi.org/10.1007/s11227-022-04827-3

DOI: https://doi.org/10.1007/s11227-022-04827-3