Abstract

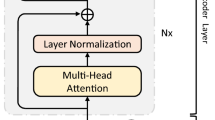

Remaining useful life (RUL) prediction is critical for monitoring the condition of industrial equipment, and accurate predictions give decision-makers actionable information. Based on the predicted results, preventive maintenance can be scheduled to avoid sudden equipment failure. However, as Industry 4.0 technologies develop, the amount of data collected by sensors is increasing rapidly. Existing RUL prediction methods are increasingly unable to cope with complex industrial equipment data, and deep learning methods have come to the fore. Against this background, this paper proposes a transformer-based model with a multi-layer encoder–decoder structure to extract domain-invariant features. In the traditional transformer structure, the decoder obtains only a single piece of information, from the last layer of the encoder; this paper uses an integrated layer-cross decoding strategy to compensate for this limitation. Building on the encoder–decoder cross-connection, each decoder layer is simultaneously provided with global-view information from the final encoder layer, which improves the model’s performance. The validity and superiority of the proposed method are evaluated through several experiments on the publicly available C-MAPSS dataset provided by NASA. The results show that the proposed method achieves higher prediction accuracy than other network architectures and state-of-the-art approaches.

Availability of data and materials

Not applicable.

Funding

The research was supported in part by the Sichuan Science and Technology Program (No. 2022NSFSC0459), the Open Research Fund of Key Laboratory of Advanced Manufacturing Technology of the Ministry of Education in China (No. GZUAMT2021KF05) and the Building Program Fund of Scientific Research Platform of YiBin Vocational And Technical College (No. YBZY21KYPT-03).

Author information

Contributions

P.G. and Q.L. contributed to conceptualization, methodology, formal analysis, investigation, and writing (review and editing); S.Y. contributed to review and editing; J.-Y.X. and X.T. contributed to methodology; C.G. contributed to project administration. All authors read and approved the final manuscript.

Ethics declarations

Ethical Approval

Not applicable.

Conflict of interest

All authors declare that they have no conflict of interest.

About this article

Cite this article

Guo, P., Liu, Q., Yu, S. et al. A transformer with layer-cross decoding for remaining useful life prediction. J Supercomput 79, 11558–11584 (2023). https://doi.org/10.1007/s11227-023-05126-1

DOI: https://doi.org/10.1007/s11227-023-05126-1