Abstract

Matrix inversion is time-consuming, and many works focus on accelerating the inversion of a single large matrix on GPUs. However, parallelizing the inversion of a large number of small matrices has received little attention. This problem arises widely in computer science, for example in accelerating cryptographic and image processing algorithms. In this paper, we propose a Revised In-Place Inversion algorithm for inverting a large number of small matrices on the CUDA platform. It adopts a more refined parallelization scheme and outperforms other algorithms, achieving a speedup of up to 20.9572 times over the batched matrix inverse kernel in cuBLAS. Additionally, we found that each GPU device has an upper bound on the input data size, beyond which performance degrades. Based on this finding, we propose the Saturation Size Curve, which is used to divide the matrices into batches and improve the algorithm's performance. Experimental results show that this strategy improves the algorithm's performance by a factor of 1.75 and effectively alleviates the performance degradation.
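
To make the two ideas in the abstract concrete, the following is a minimal CPU sketch in Python, not the authors' CUDA kernel. It inverts each small matrix in place using textbook Gauss-Jordan elimination with partial pivoting, and processes the matrices in fixed-size chunks. The names `invert_in_place`, `invert_batch`, and `chunk_size` are hypothetical; `chunk_size` merely stands in for a batch size that, in the paper, would be read off the Saturation Size Curve for a given device.

```python
from typing import List

Matrix = List[List[float]]


def invert_in_place(a: Matrix) -> None:
    """Invert a small square matrix in place (Gauss-Jordan, partial pivoting)."""
    n = len(a)
    swaps = []  # row chosen as pivot at each step, needed to undo the swaps
    for k in range(n):
        # Pick the row with the largest entry in column k as the pivot row.
        p = max(range(k, n), key=lambda r: abs(a[r][k]))
        if a[p][k] == 0.0:
            raise ValueError("matrix is singular")
        a[k], a[p] = a[p], a[k]
        swaps.append(p)

        # Scale the pivot row; the pivot slot is reused to hold the inverse.
        pivot = a[k][k]
        a[k][k] = 1.0
        for j in range(n):
            a[k][j] /= pivot

        # Eliminate column k from every other row, again reusing the slot.
        for i in range(n):
            if i != k:
                factor = a[i][k]
                a[i][k] = 0.0
                for j in range(n):
                    a[i][j] -= factor * a[k][j]

    # Row swaps on the input become column swaps on the inverse,
    # applied in reverse order.
    for k in reversed(range(n)):
        p = swaps[k]
        if p != k:
            for row in a:
                row[k], row[p] = row[p], row[k]


def invert_batch(mats: List[Matrix], chunk_size: int) -> None:
    """Invert all matrices in place, one chunk at a time.

    Assumption: on a GPU, each chunk would correspond to one kernel launch
    whose input size stays below the device's saturation size.
    """
    for start in range(0, len(mats), chunk_size):
        for m in mats[start:start + chunk_size]:
            invert_in_place(m)


if __name__ == "__main__":
    batch = [[[4.0, 7.0], [2.0, 6.0]], [[0.0, 1.0], [2.0, 0.0]]]
    invert_batch(batch, chunk_size=1)
    print(batch)
```

In this sketch the inner loop over a chunk is sequential; the point is only that no auxiliary identity matrix is allocated and that the batch is split before processing, which are the two properties the abstract emphasizes.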

Data availability

All data sets used in this paper were randomly generated with MATLAB, and no specific restrictions apply to them.

References

Milanov E (2009) The RSA algorithm. RSA Laboratories, pp 1–11

Syafalni I, Reynaldi DM, Munir R, Adiono T, Sutisna N, Mulyawan R (2022) Complexity analysis of encoding in CKKS-fully homomorphic encryption algorithm. In: 2022 International Symposium on Electronics and Smart Devices (ISESD), pp 1–5

Richards D, Abdelgawad A, Yelamarthi K (2018) How does encryption influence timing in IoT? In: 2018 IEEE Global Conference on Internet of Things (GCIoT), pp 1–5

Anaya E, Patel J, Shah P, Shah V, Cheng Y (2020) A performance study on cryptographic algorithms for IoT devices. In: Proceedings of the Tenth ACM Conference on Data and Application Security and Privacy. CODASPY ’20, pp 159–161. Association for Computing Machinery, New York

Lee W, Kim M, Park J (2021) Speed-up of the matrix computation on the ridge regression. In: KSII Transactions on Internet & Information Systems, vol 15, no 10

Shakeel N, Mehmood T, et al (2023) Inverse matrix problem in regression for high-dimensional data sets. Math Probl Eng 2023

Abdi H, et al (2007) The method of least squares. In: Encyclopedia of Measurement and Statistics. Thousand Oaks

Darabi A, Bagheri M, Gharehpetian GB (2019) Highly accurate directional overcurrent coordination via combination of Rosen’s gradient projection-complex method with GA-PSO algorithm. IEEE Syst J 14(1):1171–1182

Wang Y, Wan R, Yang W, Li H, Chau L-P, Kot A (2022) Low-light image enhancement with normalizing flow. In: Proceedings of the AAAI Conference on Artificial Intelligence, vol 36, pp 2604–2612

Herbreteau S, Kervrann C (2022) Dct2net: an interpretable shallow CNN for image denoising. IEEE Trans Image Process 31:4292–4305

Zhou M, Huang J, Fang Y, Fu X, Liu A (2022) Pan-sharpening with customized transformer and invertible neural network. In: Proceedings of the AAAI Conference on Artificial Intelligence, vol 36, pp 3553–3561

Yan M, Chen Y, Chen Y, Zeng G, Hu X, Du J (2022) A lightweight weakly supervised learning segmentation algorithm for imbalanced image based on rotation density peaks. Knowl Based Syst 244:108513. https://doi.org/10.1016/j.knosys.2022.108513 (ISSN 0950-7051)

Wei T, Wang X, Li X, Zhu S (2022) Fuzzy subspace clustering noisy image segmentation algorithm with adaptive local variance & non-local information and mean membership linking. Eng Appl Artif Intell 110:104672

Tanaka Y, Eldar YC, Ortega A, Cheung G (2020) Sampling signals on graphs: from theory to applications. IEEE Signal Process Mag 37(6):14–30

Kumar MA, Chari KM (2019) Noise reduction using modified wiener filter in digital hearing aid for speech signal enhancement. J Intell Syst 29(1):1360–1378

Stankovic L, Mandic DP, Dakovic M, Kisil I, Sejdic E, Constantinides AG (2019) Understanding the basis of graph signal processing via an intuitive example-driven approach [lecture notes]. IEEE Signal Process Mag 36(6):133–145

Althoen SC, Mclaughlin R (1987) Gauss–Jordan reduction: a brief history. Am Math Mon 94(2):130–142

Strassen V (1969) Gaussian elimination is not optimal. Numer Math 13(4):354–356

Bailey DH, Gerguson HR (1988) A Strassen–Newton algorithm for high-speed parallelizable matrix inversion. In: Conference on High Performance Networking and Computing: Proceedings of the 1988 ACM/IEEE Conference on Supercomputing, vol 12, pp 419–424

Coppersmith D, Winograd S (1982) On the asymptotic complexity of matrix multiplication. SIAM J Comput 11(3):472–492

Press WH, Flannery BP, Teukolsky SA, Vetterling WT (1992) Lu decomposition and its applications. In: Numerical Recipes in FORTRAN: The Art of Scientific Computing, pp 34–42

Burian A, Takala J, Ylinen M (2003) A fixed-point implementation of matrix inversion using Cholesky decomposition. In: 2003 46th Midwest Symposium on Circuits and Systems, vol 3, pp 1431–1434. IEEE

Press W, Teukolsky S, Vetterling W, Flannery B (2007) Section 2.10. QR decomposition. In: Numerical Recipes: The Art of Scientific Computing, vol 3

Gu M, Eisenstat SC (1996) Efficient algorithms for computing a strong rank-revealing QR factorization. SIAM J Sci Comput 17(4):848–869

DasGupta D et al (2013) In-place matrix inversion by modified Gauss–Jordan algorithm. Appl Math 4(10):1392–1396

Ries F, De Marco T, Guerrieri R (2011) Triangular matrix inversion on heterogeneous multicore systems. IEEE Trans Parallel Distrib Syst 23(1):177–184

Ries F, De Marco T, Zivieri M, Guerrieri R (2009) Triangular matrix inversion on graphics processing unit. In: Proceedings of the Conference on High Performance Computing Networking, Storage and Analysis, pp 1–10. IEEE

Sharma G, Agarwala A, Bhattacharya B (2013) A fast parallel Gauss Jordan algorithm for matrix inversion using CUDA. Comput Struct 128:31–37

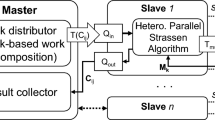

Yu D, He S, Huang Y, Yu G, Yang L (2015) A fast parallel matrix inversion algorithm based on heterogeneous multicore architectures. In: 2015 IEEE Global Conference on Signal and Information Processing (GlobalSIP), pp 903–907. IEEE

Evstigneev NM, Ryabkov OI, Tsatsorin EA (2018) On the inversion of multiple matrices on GPU in batched mode. Supercomput Front Innov 5(2):23–42

NVIDIA: cuBLAS Documentation. https://docs.nvidia.com/cuda/cublas/index.html. Accessed 17 March 2023

Abdelfattah A, Haidar A, Tomov S, Dongarra J (2017) Factorization and inversion of a million matrices using GPUs: challenges and countermeasures. Procedia Comput Sci 108:606–615

Cavicchioli R, Capodieci N, Bertogna M (2017) Memory interference characterization between CPU cores and integrated gpus in mixed-criticality platforms. In: 2017 22nd IEEE International Conference on Emerging Technologies and Factory Automation (ETFA), pp 1–10. IEEE

Jeong D, Park J, Kim J (2022) Demand MemCpy: overlapping of computation and data transfer for heterogeneous computing. IEEE Access 10:79925–79938

Tatsugi Y, Nukada A (2022) Accelerating data transfer between host and device using idle GPU. In: Proceedings of the 14th Workshop on General Purpose Processing Using GPU, pp 1–6

Rocher-González J, Gran EG, Reinemo S-A, Skeie T, Escudero-Sahuquillo J, García PJ, Flor FJQ (2022) Adaptive routing in infiniband hardware. In: 2022 22nd IEEE International Symposium on Cluster, Cloud and Internet Computing (CCGrid), pp 463–472. IEEE

Pfister GF (2001) An introduction to the infiniband architecture. High performance mass storage and parallel I/O 42(617–632):102

Wadekar A, Swapnil S, Lohani RB (2011) Design and implementation of a universal DMA controller. In: ICWET ’11, pp 1189–1190. Association for Computing Machinery, New York

Wang Z, Wang Z, Liao J, Chen C, Yang Y, Dong B, Chen W, Chen W, Lei M, Guo W, Chen R, Peng Y, Yu Z (2021) CNN-DMA: a predictable and scalable direct memory access engine for convolutional neural network with sliding-window filtering. In: Proceedings of the 2021 on Great Lakes Symposium on VLSI. GLSVLSI ’21, pp 115–121. Association for Computing Machinery, New York

Kobayashi R, Fujita N, Yamaguchi Y, Boku T (2019) OpenCL-enabled high performance direct memory access for GPU-FPGA cooperative computation. In: Proceedings of the HPC Asia 2019 Workshops, pp 6–9

Skejić E, Demirović D, Begić D (2020) Evaluation of perlin noise using nvidia cuda pla. Elektrotehniski Vestnik 87(5):260–266

Corporation, N.: CUDA Toolkit Documentation. https://docs.nvidia.com/cuda/index.html. Accessed March 2023

Funding

This work was supported by the National Natural Science Foundation of China (No. 61673186, No. 61972010) and the Natural Science Foundation of Fujian Province, China (No. 2021J01317). We sincerely acknowledge this support.

Author information

Contributions

XJ designed the main algorithm, drafted the manuscript, analyzed and interpreted the experiments, and prepared the figures. YC, WF, YZ, and JD critically revised the manuscript for important intellectual content.

Ethics declarations

Conflict of interest

The authors have no conflicts of interest that might be perceived to influence the results or discussion reported in this paper.

Ethical approval

Ethical approval is not applicable to this paper.

About this article

Cite this article

Xuebin, J., Yewang, C., Wentao, F. et al. Fast algorithm for parallel solving inversion of large scale small matrices based on GPU. J Supercomput 79, 18313–18339 (2023). https://doi.org/10.1007/s11227-023-05336-7

DOI: https://doi.org/10.1007/s11227-023-05336-7