Abstract

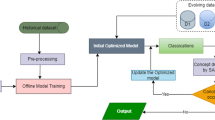

The use of data science for decision-making has grown significantly in recent years. With the increasing use of spatial and temporal data streaming applications, this growth has brought a significant learning challenge known as concept drift, which can degrade the models used in these applications. This work introduces BOASWIN-XGBoost (Bayesian Optimized Adaptive Sliding Window and XGBoost), a model for handling concept drift. It is designed explicitly for classifying streaming data and comprises three main procedures: pre-processing, concept drift detection, and classification. The model uses the Bayesian-Optimized Adaptive Sliding Window (BOASWIN) method to detect concept drift in the streaming data and an optimized XGBoost (eXtreme Gradient Boosting) model for classification. The XGBoost hyperparameters are tuned with BO-TPE (Bayesian Optimization with Tree-structured Parzen Estimator) to improve the classifier's performance. The proposed approach was evaluated on seven streaming datasets: Agrawal_a, Agrawal_g, SEA_a, SEA_g, Hyperplane, Phishing, and Weather. In simulation, the model achieves accuracies of 70.83%, 71.02%, 76.76%, 76.96%, 84.26%, 95.53%, and 78.35% on these datasets, respectively, demonstrating its effectiveness in handling concept drift and classifying streaming data.
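The general idea of sliding-window drift detection can be illustrated with a minimal sketch. The abstract does not specify BOASWIN's detection rule, so everything here is an assumption made for illustration: the class name `SlidingWindowDriftDetector`, the fixed `window_size`, the `drop_threshold`, and the rule itself (flag drift when windowed accuracy falls well below a reference accuracy). The sketch is not the authors' method; it omits the Bayesian optimization of the window parameters and replaces the XGBoost classifier with a synthetic stream of correct/incorrect prediction outcomes.

```python
from collections import deque


class SlidingWindowDriftDetector:
    """Hypothetical accuracy-drop detector over a fixed-size sliding window."""

    def __init__(self, window_size=50, drop_threshold=0.25):
        self.window_size = window_size        # assumed value, not from the paper
        self.drop_threshold = drop_threshold  # assumed value, not from the paper
        self.recent = deque(maxlen=window_size)  # 1 = correct, 0 = wrong
        self.reference_accuracy = None

    def update(self, correct):
        """Record one prediction outcome; return True if drift is flagged."""
        self.recent.append(1 if correct else 0)
        if len(self.recent) < self.window_size:
            return False  # not enough evidence yet
        accuracy = sum(self.recent) / self.window_size
        if self.reference_accuracy is None:
            # First full window after start or reset defines the reference.
            self.reference_accuracy = accuracy
            return False
        if self.reference_accuracy - accuracy > self.drop_threshold:
            # Accuracy dropped sharply: flag drift and start over, which is
            # where a real system would retrain or adapt its classifier.
            self.recent.clear()
            self.reference_accuracy = None
            return True
        return False


def simulated_outcomes(n=400, drift_at=200):
    """Synthetic outcomes: 90% correct before the drift, 50% after it."""
    for i in range(n):
        if i < drift_at:
            yield i % 10 != 0
        else:
            yield i % 2 == 0


detector = SlidingWindowDriftDetector()
drift_points = [
    i for i, ok in enumerate(simulated_outcomes()) if detector.update(ok)
]
print("Drift flagged at stream positions:", drift_points)
```

In a pipeline of the kind the abstract describes, the outcome fed to `update` would come from comparing the classifier's prediction with the true label of each arriving sample, and the window size and threshold would be tuned rather than fixed by hand.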

Availability of data and materials

The datasets generated and analyzed during the current study are available from the corresponding author upon reasonable request.

Funding

No funding.

Author information

Contributions

AA wrote the manuscript and performed all the analysis; HYC supervised the study. Both authors reviewed the manuscript.

Ethics declarations

Conflict of interest

No conflict of interest.

About this article

Cite this article

Angbera, A., Chan, H.Y. An adaptive XGBoost-based optimized sliding window for concept drift handling in non-stationary spatiotemporal data streams classifications. J Supercomput 80, 7781–7811 (2024). https://doi.org/10.1007/s11227-023-05729-8

DOI: https://doi.org/10.1007/s11227-023-05729-8