Abstract

Capturing clear images in underwater environments is a major challenge in marine engineering. Image quality depends on many factors, including climate, environment, and human factors, but the two most important causes of degradation are the fogging effect caused by scattering and the color cast caused by wavelength-dependent attenuation of light as it propagates through water. Clear underwater images are fundamental to applications such as marine biological research, autonomous underwater robotics, and environmental monitoring, where precise visual data substantially improve analysis. Recently, deep learning has shown impressive performance on underwater image enhancement. We propose a deep learning model that infers a degradation model and improves image dynamic range through a network-guided underwater image enhancement architecture with multicolor space embedding and convolutional medium transmission, addressing the limited dynamic range and brightness of underwater images. Quantitative and qualitative results show that our network performs relatively well on the Underwater Image Enhancement Benchmark (UIEB) [7] dataset compared with other recent methods. We expect the method to be applicable to different types of underwater work and environments and to reduce the degradation problems that often occur in underwater images.

1 Introduction

Over the years, many scholars have studied underwater imaging, and underwater images are increasingly used in fields such as marine biology, underwater robotics, and environmental monitoring. Past research can be classified into three major directions: enhancement methods based on imaging equipment, conventional algorithm-based methods, and deep learning-based methods. The algorithm-based methods are further divided into two sub-categories: underwater image enhancement and underwater image restoration.

The light received by an underwater camera comes not only from light reflected directly by the object, but also from light produced by random reflection and backscattering during propagation. These processes disperse the beam into a uniform background light. Adding artificial light sources to imaging devices is a widely known way to improve visibility, so many hardware platforms and imaging devices have been designed to improve the quality of underwater images. For example, Wang et al. [1] proposed a method based on the spatial correlation of the triangular range-intensity profile, which achieves 3D super-resolution range-gated imaging and improves imaging quality and resolution. Compared with costly equipment, digital image processing in software is more convenient and practical. Pizer et al. [2] introduced adaptive histogram equalization (AHE), which performs histogram equalization locally around each pixel, limits the local histogram, and uses different region sizes and masks to enhance image contrast. He et al. [3] proposed a dehazing algorithm based on the dark channel prior, which has been widely used in underwater image processing. It estimates the global atmospheric light from dark channel pixels and then calculates the transmission of the image. The transmission is used to estimate the effect of scattered light, allowing better removal of fog and scatter.

As neural network architectures matured, scholars found them well suited to image processing, and underwater image enhancement became a popular research topic. Li et al. [4] proposed combining RGB and depth information through feature extraction, improving the efficiency of target detection with interactive fusion and self-adaptive guided attention. Guo et al. [5] trained a generative adversarial network (GAN) that combines multi-scale feature extraction and dense connections to improve the quality of underwater images. Li et al. [6] proposed a deep learning-based color classifier that achieves better color correction than earlier methods on many underwater datasets. Water-Net, the convolutional neural network (CNN) model proposed by Li et al. [7], is a gated fusion network that aims to improve the visibility and quality of underwater images. It is based on the observation that underwater images suffer from color cast and blurring and need to be enhanced for better visual quality. Most existing underwater image enhancement methods use a single mode, which is difficult to adapt to different underwater environments and scenes. The predicted confidence maps help to identify foreground objects and background regions in an image and to handle image details better. We build on the concepts behind the network architectures of Ucolor [8] and Water-Net [7] and explore whether further modifications can significantly improve underwater image enhancement.

Based on the medium transmission-guided multicolor space embedding algorithm proposed by Li et al. [8], we propose an algorithm that embeds the extracted feature information into the multicolor space before applying gamma correction and histogram equalization (HE) to improve image quality. The aim is to capture the color distribution information in the image, enhance the image in the multicolor space, and convert the enhanced image back to the original color space. Following the CNN architecture concept of [7], we change the channels of the medium transmission map and use the medium transmission channel to post-process the enhanced image, further reducing noise and distortion in the underwater image. Figure 1 demonstrates the results of our method.

In this research, we propose a deep learning model that infers a degradation model to further improve image dynamic range through a network-guided underwater image enhancement architecture with multicolor space embedding and convolutional medium transmission, addressing the limited dynamic range and brightness of underwater images.

2 Related works

Only by understanding the principles of image formation can we design improved methods effectively. As the propagation distance increases, the light reaching the camera decreases, so defogging needs to calculate the transmittance according to the intensity of the atmospheric light. This helps to restore images covered by fog and to improve image quality and clarity. The image formation model (IFM) is:

\[I^{c} \left( x \right) = J^{c} \left( x \right)T\left( x \right) + A^{c} \left( {1 - T\left( x \right)} \right)\]

where \(I^{c}\) is the observed intensity, \(J^{c}\) is the scene radiance, \(A^{c}\) is the homogeneous ambient or background light, and \(T\left( x \right)\) is the medium transmission, indicating the fraction of light that reaches the camera. Most current methods use the IFM as a starting point: they estimate the transmittance at each pixel and then use the estimate to remove the haze.
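As a minimal sketch of how the IFM is used, the NumPy example below degrades a synthetic scene and then inverts the model, assuming the common form I = J·T + A·(1 − T) with a known transmission and background light. The function names, the floor `t_min`, and the background light values are illustrative assumptions, not part of the paper.

```python
import numpy as np

def degrade(J, T, A):
    """Apply the image formation model: I = J * T + A * (1 - T).

    J: scene radiance, shape (H, W, 3), values in [0, 1]
    T: medium transmission, shape (H, W), values in (0, 1]
    A: background light per color channel, shape (3,)
    """
    T3 = T[..., None]  # broadcast the transmission over the color channels
    return J * T3 + A * (1.0 - T3)

def restore(I, T, A, t_min=0.1):
    """Invert the model for J; t_min is an assumed floor that avoids dividing by ~0."""
    T3 = np.maximum(T, t_min)[..., None]
    return (I - A) / T3 + A

rng = np.random.default_rng(0)
J = rng.uniform(0.0, 1.0, size=(4, 4, 3))   # synthetic scene radiance
T = rng.uniform(0.3, 1.0, size=(4, 4))      # synthetic transmission map
A = np.array([0.1, 0.6, 0.7])               # hypothetical bluish-green background light
I = degrade(J, T, A)
J_hat = restore(I, T, A)
```

In practice neither T nor A is known, which is why the methods reviewed below concentrate on estimating them.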

Underwater images have gradually been applied in various fields. Extensive research in the past has been summarized and classified into three major directions: hardware-based methods, conventional improvement methods, and deep learning-based methods.

2.1 Hardware-based methods

Underwater light propagation is affected by many factors, causing problems such as absorption, scattering, and the loss of certain wavelengths [9]. As depth increases, visibility decays rapidly. The light received by the camera has three main components. The first is the direct component, the portion of light reflected from the surface of the object directly to the camera. The second is the forward-scatter component, the portion of light that is randomly reflected during propagation and finally reaches the camera. The third is the backscatter component, the portion of light that is scattered after encountering particles and eventually reaches the camera. These scattering processes disperse the light beam into a uniform background light. Adding artificial light sources to imaging devices is a widely known way to improve visibility, so many hardware platforms and imaging devices have been designed to improve the quality of underwater images. For example, Wang et al. [1] proposed a method based on the spatial correlation of the triangular range-intensity profile, which achieves 3D super-resolution range-gated imaging and improves imaging quality and resolution. Jaffe [10] discussed how structured lighting systems can collect images faster than scanning single-beam systems and effectively enhance the contrast of underwater imaging systems. Foresti et al. [11] proposed a vision-based method that uses autonomous underwater vehicles for visual inspection of submarine structures: high-resolution cameras capture images of the structures, and image processing and analysis detect anomalies. Although artificial light sources increase visibility, they also bring problems. The additional lighting makes the equipment inconvenient to carry, and the complex underwater environment causes uneven lighting. Both reduce image quality, which motivates algorithm-based underwater image enhancement methods.

2.2 Conventional improvement methods

Underwater image enhancement algorithms: An underwater image can be viewed as a linear combination of the three light components described in Sect. 2.1. Both forward scattering and backscattering blur the image and strongly affect underwater imaging. Image enhancement methods give underwater images better contrast, and researchers have proposed many such algorithms in recent years. Pizer et al. [2] introduced adaptive histogram equalization (AHE), which performs histogram equalization locally around each pixel, limits the local histogram, and uses different region sizes and masks to enhance image contrast. Reza proposed contrast-limited adaptive histogram equalization (CLAHE) for real-time image enhancement. Compared with traditional methods, this algorithm uses techniques such as tiling and linear interpolation to reduce over-enhancement and noise amplification. Ancuti et al. proposed a fusion-based method that fuses different versions of an image or video to generate a better-quality result, combining techniques such as the wavelet transform, the Laplacian pyramid, and machine learning methods. Their experiments demonstrate that the method can enhance the quality of underwater images and videos, although the results are still affected by artificial lighting sources.

Underwater image restoration methods: Because general single-image dehazing techniques have great limitations on underwater images, researchers have begun to develop dedicated enhancement and restoration methods for underwater images. The starting point is the dark channel prior dehazing algorithm proposed by He et al. [3], which has been widely used in underwater image processing. It estimates the global atmospheric light from dark channel pixels and then calculates the transmission of the image. The transmission is used to estimate the effect of scattered light, allowing better removal of fog and scatter. However, the method of He et al. [3] may fail when the brightness of the object is similar to that of the background. Tan studied a deep learning method for estimating weather visibility from a single image, which is widely used in traffic safety, weather forecasting, and other fields and provides a reference for related studies. Because red wavelengths are easily absorbed underwater, Drews et al. [12] proposed an underwater dark channel prior (UDCP) that considers only the color information of the blue and green channels. In another line of work, depth estimation is first performed on the image to obtain the depth of each pixel, and a restoration network (Restoration Net) is then trained jointly on the estimated depth map and the original image to learn a model that restores the sharpness and contrast of the image. Li et al. [13] proposed a dehazing enhancement method for underwater images based on minimum information loss and a histogram distribution prior. Specifically, the method decomposes the underwater image into fog-based data and background-based data by minimizing information loss, and then removes the fog using prior knowledge of the histogram distribution. This method effectively removes fog, enhances the contrast and clarity of underwater images, and achieves better results than earlier methods, giving it practical value and application prospects.
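The dark channel prior pipeline described above can be sketched in NumPy as follows. This is an illustrative simplification, not the implementation of [3] or [12]: the 15 × 15 patch and the factor `omega = 0.95` follow the commonly cited settings of the dark channel prior, the atmospheric light is taken as the per-channel maximum over the brightest dark-channel pixels, and all function names are assumptions. Passing `channels=(1, 2)` restricts the prior to the green and blue channels, in the spirit of the underwater variant.

```python
import numpy as np

def min_filter(img2d, patch):
    """Minimum over a patch x patch window (edge-padded); naive loops for clarity."""
    r = patch // 2
    padded = np.pad(img2d, r, mode="edge")
    out = np.empty_like(img2d)
    H, W = img2d.shape
    for i in range(H):
        for j in range(W):
            out[i, j] = padded[i:i + patch, j:j + patch].min()
    return out

def dark_channel(img, patch=15, channels=(0, 1, 2)):
    """Per-pixel minimum over the chosen channels, then over a local patch."""
    return min_filter(img[..., list(channels)].min(axis=2), patch)

def estimate_atmospheric_light(img, dark, top=0.001):
    """Simplified estimate: per-channel maximum over the brightest dark-channel pixels."""
    n = max(1, int(dark.size * top))
    idx = np.argsort(dark.ravel())[-n:]
    return img.reshape(-1, 3)[idx].max(axis=0)

def estimate_transmission(img, A, patch=15, omega=0.95, channels=(0, 1, 2)):
    """t(x) = 1 - omega * dark_channel(I / A)."""
    return 1.0 - omega * dark_channel(img / A, patch, channels)

rng = np.random.default_rng(0)
img = rng.uniform(0.05, 1.0, size=(8, 8, 3))        # synthetic RGB image in [0, 1]
dark = dark_channel(img, patch=3)
A = estimate_atmospheric_light(img, dark)
t = estimate_transmission(img, A, patch=3)
dark_gb = dark_channel(img, patch=3, channels=(1, 2))  # green/blue only
```

The estimated transmission can then be plugged into the image formation model of Sect. 2 to recover the scene radiance.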

2.3 Deep learning-based methods

LeNet-5, proposed by LeCun et al. [14], laid a solid foundation for modern machine learning. As neural network architectures matured, scholars found them well suited to image processing, and underwater image enhancement became a popular research topic. This focus is driven by the unique challenges posed by underwater environments, such as color distortion, haze, and noise, which traditional methods often fail to address adequately. Li et al. [4] proposed combining RGB and depth information through feature extraction, improving the efficiency of target detection with interactive fusion and self-adaptive guided attention. Guo et al. [5] trained a generative adversarial network (GAN) that combines multi-scale feature extraction and dense connections to improve the quality of underwater images. Li et al. [6] proposed a deep learning-based color classifier that achieves better color correction than earlier methods on many underwater datasets. Li et al. [15] proposed UWCNN, an underwater image enhancement convolutional neural network (CNN) based on underwater scene priors, which feeds the prior characteristics of underwater scenes into a deep neural network to restore and enhance the image. To make the output clearer, Li et al. [8] proposed Ucolor, a network architecture that combines medium transmission with multiple color spaces to dehaze and enhance underwater images. Compared with traditional underwater image enhancement methods, it achieves better dehazing and enhancement and can adapt to different underwater environments. Kai et al. [16] proposed MTUR-Net, a restoration method based on the medium transmission map. The method uses a neural network to reconstruct the input underwater image and estimates the medium transmission map through supervised learning during reconstruction, thereby optimizing the restoration result. Compared with traditional restoration methods, it handles various underwater environments better and provides more accurate and adaptive restoration results. Water-Net, the CNN model proposed by Li et al. [7], is a gated fusion network that aims to improve the visibility and quality of underwater images. It is based on the observation that underwater images suffer from color cast and blurring and need to be enhanced for better visual quality. Most existing underwater image enhancement methods use a single mode, which is difficult to adapt to different underwater environments and scenes. The predicted confidence maps help to identify foreground objects and background regions in an image and to handle image details better.

More recently, state-of-the-art techniques such as Hclr-net by Zhou et al. [17] and the generalized physical knowledge-guided dynamic model by Mu et al. [18] have pushed the boundaries of underwater image enhancement. Hclr-net [17] introduces hybrid contrastive learning regularization with locally randomized perturbations, enhancing contrast and reducing noise in underwater images. This method is particularly effective in scenarios with significant image degradation, outperforming many traditional and deep learning-based models. Mu et al. [18] proposed a dynamic model guided by physical underwater knowledge, specifically addressing light absorption and scattering in varying underwater environments. By incorporating physical properties into the learning process, the model dynamically adapts to changes in water conditions, offering more accurate and consistent image restoration than previous methods. These newer approaches build on the strengths of deep learning and physical modeling, making them more robust in handling the complexity of underwater environments. Compared with earlier methods such as the GAN-based approach [5] and Water-Net [7], the methods of Zhou et al. [17] and Mu et al. [18] offer significant improvements in robustness and adaptability across different underwater scenarios. Our proposed method shares a similar motivation: it uses multicolor space embedding and medium transmission to enhance underwater image quality while addressing issues of dynamic range and color degradation.

3 Materials and proposed method

3.1 Network architecture

In this study, we draw on concepts from the Ucolor model [8], but develop a unique network architecture that integrates multicolor space embedding with media transport guidance for enhanced underwater image quality. This network, distinct from Ucolor [8], specifically tailors feature extraction to suit the varying lighting conditions and noise present in underwater environments.

Adapted from [8]

Diagram showing the overall procedure of the proposed approach

Adapted from [8]

Residual-enhancement module architecture

Adapted from [8]

Channel-attention module architecture

Adapted from [8]

The structure diagram of the medium transmission network. The medium transmission diagram passes through CONV filter 128, CONV filter 256, and CONV filter 512, then decreases back to 128, and then outputs the result through CONV filter 1

The main components of the Ucolor [8] network include a residual-enhancement module, a channel-attention module, and a medium transmission guidance module. All Conv blocks use 3 × 3 kernels. Downsampling is done by max pooling, and upsampling is done by bilinear interpolation (Fig. 2).

3.2 Color space encoder

To obtain more diverse features, the CIE L*a*b* and Y’CbCr color spaces are used in addition to the RGB color space. The CIE L*a*b* color space is a perceptually uniform color space mainly used for analyzing color differences as perceived by humans. It consists of three channels: lightness (L*) and two chromatic channels (a* and b*). The Y’CbCr color space, mainly used for color representation and compression of digital images and video, consists of a luma channel (Y’) and two chroma channels (Cb and Cr). Each color space has its own characteristics in different applications (Figs. 3, 4 and 5).
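To illustrate what encoding the same image in a second color space involves, the sketch below converts RGB to Y’CbCr and back with NumPy. It assumes the full-range BT.601 matrix (the JPEG/JFIF convention) and inputs in [0, 1]; the paper does not state which Y’CbCr variant it uses, and the CIE L*a*b* conversion is omitted for brevity.

```python
import numpy as np

# Full-range BT.601 coefficients (an assumption; the paper does not name a variant).
RGB_TO_YCBCR = np.array([
    [0.299, 0.587, 0.114],          # Y'
    [-0.168736, -0.331264, 0.5],    # Cb
    [0.5, -0.418688, -0.081312],    # Cr
])
OFFSET = np.array([0.0, 0.5, 0.5])  # centers Cb and Cr at 0.5 for inputs in [0, 1]

def rgb_to_ycbcr(rgb):
    """rgb: array of shape (..., 3) with values in [0, 1]."""
    return rgb @ RGB_TO_YCBCR.T + OFFSET

def ycbcr_to_rgb(ycc):
    """Inverse transform back to RGB."""
    return (ycc - OFFSET) @ np.linalg.inv(RGB_TO_YCBCR).T

rng = np.random.default_rng(0)
image = rng.uniform(0.0, 1.0, size=(4, 4, 3))
ycc = rgb_to_ycbcr(image)
# Stacking the encodings along the channel axis gives the network several views of one image.
stacked = np.concatenate([image, ycc], axis=-1)
```

With a third encoding such as CIE L*a*b* appended in the same way, the stacked input would have nine channels.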

The role of the residual-enhancement module is to strengthen the feature extraction ability of the deep residual network and to reduce the vanishing gradient problem, thereby improving the performance of the model. It consists of two convolutional layers and a skip connection: the first convolutional layer extracts features, the second weights and scales them, and the skip connection adds the original input to the feature map, achieving feature reuse and residual learning. In this way, local and global features in the image can be learned effectively and then weighted and fused to achieve better image enhancement.

Our model acts as a pivotal component in the overall network, specifically targeting the common challenges in underwater image enhancement, such as color cast, haze, and contrast loss. By integrating the transmission guidance module, the model identifies and corrects areas with substantial image degradation, ensuring consistent visibility and color balance. This feature is crucial for practical underwater applications where environmental factors vary significantly.

3.3 Residual-enhancement module

The input passes through a ReLU-activated convolutional layer to produce x, which then passes through two CONV/ReLU layers and one CONV layer without activation to produce F(x). The output is x + F(x). This process is repeated twice.
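The data flow just described can be sketched with a naive NumPy convolution. This is an illustration under assumptions rather than the authors' implementation: the channel widths, the random initialization, and the reading that only the residual unit (not the first convolution) is repeated twice are all choices made here for the example.

```python
import numpy as np

def conv3x3(x, w, b):
    """'Same' 3x3 convolution. x: (C_in, H, W), w: (C_out, C_in, 3, 3), b: (C_out,)."""
    _, H, W = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))  # zero padding keeps H x W
    out = np.zeros((w.shape[0], H, W))
    for di in range(3):
        for dj in range(3):
            # contribution of kernel tap (di, dj) to every output pixel
            out += np.einsum("oc,chw->ohw", w[:, :, di, dj], xp[:, di:di + H, dj:dj + W])
    return out + b[:, None, None]

def relu(x):
    return np.maximum(x, 0.0)

def residual_enhancement(inp, params):
    """Head conv + ReLU gives x; each unit computes F(x) and returns x + F(x)."""
    x = relu(conv3x3(inp, *params["head"]))
    for (w1, b1), (w2, b2), (w3, b3) in params["units"]:  # two units (assumed reading)
        f = relu(conv3x3(x, w1, b1))
        f = relu(conv3x3(f, w2, b2))
        f = conv3x3(f, w3, b3)  # last conv has no activation
        x = x + f               # skip connection
    return x

def make_params(c_in, c, rng, scale=0.1):
    """Hypothetical random initialization, for demonstration only."""
    def conv(ci, co):
        return rng.normal(0.0, scale, (co, ci, 3, 3)), np.zeros(co)
    return {"head": conv(c_in, c), "units": [[conv(c, c) for _ in range(3)] for _ in range(2)]}

rng = np.random.default_rng(0)
params = make_params(3, 8, rng)
features = residual_enhancement(rng.uniform(size=(3, 6, 6)), params)
```

Because each unit adds its input back to F(x), a unit whose weights are all zero reduces to the identity, which is what makes such blocks easy to optimize.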

3.4 Channel-attention module

The features are compressed into a 1 × 1 × N vector by global average pooling and passed through fully connected (FC) layers whose weights are learned together with the other parameters. ReLU and Sigmoid activations are used to select the most representative features and generate a feature score. The final result is obtained by pixel-wise multiplication of this feature score with the original features.

To capture the interdependence between channel features from different color spaces, the features on different channels are weighted to strengthen useful information and suppress useless or redundant information. This process can be seen as modeling the correlation between feature channels and adjusting them.

As mentioned in Sect. 3.2, inputs are encoded into separate channels in different color spaces. As in Ucolor [8], to investigate the contribution of different feature channels to the prediction accuracy and to see which channels are more significant, Squeeze-and-Excitation network modules are incorporated at the end of the encoder network. This is called the channel-attention module.

Let the input feature vector be \(U = \left[ {u_{1} ,u_{2} , \ldots ,u_{N} } \right] \in {\mathbb{R}}^{H \times W \times N}\), where N is the number of feature channels and H and W are the height and width of each feature map. N in our network is 3*3 = 9. We first squeeze all spatial information by performing global average pooling on each feature channel to obtain the channel descriptor \(z = \left[ {z_{1} ,z_{2} , \ldots ,z_{N} } \right] \in {\mathbb{R}}^{N \times 1}\). The \(k\)-th entry of \(z\) can be expressed as:

\[z_{k} = \frac{1}{H \times W}\sum\limits_{i = 1}^{H} {\sum\limits_{j = 1}^{W} {u_{k} \left( {i,j} \right)} }\]

where \(u_{k} \left( {i,j} \right)\) is the value of the \(k\)-th feature map of U at position \(\left( {i,j} \right)\), H and W are the height and width of each feature map, N is the number of feature channels, \(k = 1, \ldots ,N\), and z is the channel descriptor.

The simple gating mechanism is as follows:

\[s = \sigma \left( {W_{2} \delta \left( {W_{1} z} \right)} \right)\]

where δ is the ReLU function and σ is the Sigmoid function. This gating mechanism is implemented with two fully connected (FC) layers with output sizes N/r and N, respectively. The weights of these two FC layers are \(W_{1} \in {\mathbb{R}}^{{\frac{N}{r} \times N}}\) and \(W_{2} \in {\mathbb{R}}^{{N \times \frac{N}{r}}}\), where r = 16 is used for dimensionality reduction. Finally, the output feature vector \(V \in {\mathbb{R}}^{H \times W \times N}\) is computed by rescaling the input feature vector U with the Sigmoid output vector s:

\[V = s \otimes U\]

where \(\otimes\) represents pixel-wise multiplication.
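The squeeze, gating, and rescaling steps of the channel-attention module can be sketched in a few lines of NumPy. The feature size N = 32 and the random weights are hypothetical values chosen so that N/r is an integer for r = 16; biases are omitted for brevity.

```python
import numpy as np

def channel_attention(U, W1, W2):
    """Squeeze-and-excitation style channel attention.

    U:  input features, shape (H, W, N)
    W1: first FC layer weights, shape (N // r, N)
    W2: second FC layer weights, shape (N, N // r)
    """
    z = U.mean(axis=(0, 1))                    # squeeze: global average pooling, shape (N,)
    hidden = np.maximum(W1 @ z, 0.0)           # delta: ReLU
    s = 1.0 / (1.0 + np.exp(-(W2 @ hidden)))   # sigma: Sigmoid, one score per channel
    return U * s                               # rescale each channel (broadcast over H, W)

rng = np.random.default_rng(0)
H, W, N, r = 5, 5, 32, 16                      # hypothetical sizes for the demo
U = rng.uniform(size=(H, W, N))
W1 = rng.normal(0.0, 0.1, size=(N // r, N))
W2 = rng.normal(0.0, 0.1, size=(N, N // r))
V = channel_attention(U, W1, W2)
```

Since every score lies in (0, 1), the module can only attenuate channels relative to each other; it never amplifies a feature beyond its input value.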

3.5 Medium transmission network

The medium transmission map is considered to play an important role in image restoration. Medium transmission describes the portion of light that remains after absorption and scattering on the way from the light source to the camera, and it is the main cause of color distortion and illumination change in underwater images. In previous work [8], the medium transmission map was not specially processed. In this paper, we use this concept to design a network that outputs an enhanced result from the transmission map.

The design of the neural network follows the way the residual-enhancement module processes the multicolor space encoding, and we compared different numbers of convolutional layers, numbers of convolutional kernels, and activation parameters. The last convolutional layer has one output channel, the same as the input map and the enhanced map. These convolutional layers apply a nonlinear transformation to the input, improving contrast and detail and removing some noise and distortion.

3.6 Media transport guidance module

The transmission map \(T \in {\mathbb{R}}^{H \times W}\) generated by the medium transmission network is integrated at the beginning of the decoder network to evaluate the importance of each location in a feature map. The more degraded a pixel is (the higher its \(\overline{{\text{T(x)}}}\) value), the more weight it is assigned, because that pixel requires more attention. Suppose the input feature vector is \(V \in {\mathbb{R}}^{C \times H \times W}\) and the output feature vector is \(W \in {\mathbb{R}}^{C \times H \times W}\). The process is described as follows:

\[W = V \oplus \left( {V \otimes \overline{T} } \right)\]

where \(\oplus\) represents pixel-wise addition. The medium transmission map T was obtained using the generalized dark channel prior algorithm [19], which is:

where \(\tilde{T}\) denotes the estimated transmission map, c is an individual color channel, and Ω(x) denotes a 15 × 15 local pixel patch centered at x.
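The guidance step of this section, in which more degraded pixels receive more weight, can be sketched as a residual re-weighting of the features. The example assumes the form V + V·T̄ and takes T̄ = 1 − T (a reversed transmission map, as in Ucolor-style guidance); both are assumptions for illustration, and the function name is hypothetical.

```python
import numpy as np

def mt_guidance(V, T_bar):
    """Re-weight features by a per-pixel guidance map.

    V:     features, shape (C, H, W)
    T_bar: guidance weights in [0, 1], shape (H, W); larger means more degraded
    """
    return V + V * T_bar[None, :, :]  # pixel-wise multiply, then pixel-wise add

rng = np.random.default_rng(0)
V = rng.uniform(size=(8, 4, 4))
T = rng.uniform(0.2, 1.0, size=(4, 4))  # synthetic transmission map
T_bar = 1.0 - T                         # assumed reversal: low transmission -> high weight
W_out = mt_guidance(V, T_bar)
```

Pixels with full transmission pass through unchanged, while pixels with low transmission are amplified by up to a factor of two.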

3.7 Loss function

Our loss is influenced by the use of perceptual loss in computer vision tasks such as super-resolution and style transfer [20], where it better preserves the visual appearance and structural details of the image. The core idea of perceptual loss is to use a pretrained convolutional neural network to extract features from the output image and compare them with the corresponding features of the target image to calculate the loss. We set the loss function \(L_{f}\) as a linear combination of the mean-squared error (MSE) loss \(L_{MSE}\) and the perceptual loss \(L_{per}\):

\[L_{f} = \lambda_{1} L_{MSE} + \lambda_{2} L_{per}\]

where \(\lambda_{1}\) and \(\lambda_{2}\) are hyperparameters. The MSE loss \(L_{MSE}\) measures the per-pixel difference between the output feature map \(\hat{y}\) and the ground truth feature map \(y\), with \(\hat{y}\), \(y \in {\mathbb{R}}^{C \times H \times W}\), using the Euclidean distance \(\left\| {\hat{y} - y} \right\|_{2}\):

\[L_{MSE} = \frac{1}{CHW}\left\| {\hat{y} - y} \right\|_{2}^{2}\]

where C indexes the {r, g, b} channels, and H and W are the height and width of each feature map.

On the other hand, rather than using a per-pixel loss as the training objective, \(L_{per}\) encourages the output image \(\hat{y}\) to have feature representations and structure as similar as possible to those of the target image \(y\). This is done by first passing both the result \(\hat{y}\) and the ground truth \(y\) through the jth layer of a pretrained network ϕ. The perceptual loss is the squared and averaged Euclidean distance between these two outputs:

\[L_{per} = \frac{1}{CHW}\left\| {\phi_{j} \left( {\hat{y}} \right) - \phi_{j} \left( y \right)} \right\|_{2}^{2}\]

where C, H, and W here denote the number of channels, height, and width of the feature map, and \(\phi_{j} \left( x \right)\) is the activation of the jth layer of the network ϕ. Here, ϕ is the VGG-19 network pretrained on the ImageNet dataset [21], and the jth layer is relu5_4 of VGG-19.
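A minimal NumPy sketch of the combined loss follows. The real perceptual term uses VGG-19 relu5_4 features; to keep the example self-contained, `toy_phi` is a hypothetical stand-in (2 × 2 average pooling followed by a shifted ReLU) and is not a substitute for a pretrained network. The default weights are the values λ1 = 5 and λ2 = 0.05 reported in Sect. 4.1.

```python
import numpy as np

def mse_loss(y_hat, y):
    """Mean of squared per-pixel differences over all C * H * W entries."""
    return np.mean((y_hat - y) ** 2)

def perceptual_loss(y_hat, y, phi):
    """Mean squared difference between feature maps phi(y_hat) and phi(y)."""
    return np.mean((phi(y_hat) - phi(y)) ** 2)

def total_loss(y_hat, y, phi, lam1=5.0, lam2=0.05):
    """Linear combination of the MSE loss and the perceptual loss."""
    return lam1 * mse_loss(y_hat, y) + lam2 * perceptual_loss(y_hat, y, phi)

def toy_phi(img):
    """Hypothetical feature extractor standing in for VGG-19 relu5_4."""
    c, h, w = img.shape
    cropped = img[:, : h // 2 * 2, : w // 2 * 2]
    pooled = cropped.reshape(c, h // 2, 2, w // 2, 2).mean(axis=(2, 4))
    return np.maximum(pooled - 0.5, 0.0)

rng = np.random.default_rng(0)
y = rng.uniform(size=(3, 8, 8))   # synthetic reference image, (C, H, W)
y_hat = y + 0.1                   # synthetic prediction with a constant offset
loss = total_loss(y_hat, y, toy_phi)
```

The large ratio between the two weights reflects that the perceptual term acts as a regularizer on top of the pixel-wise term rather than as the main objective.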

4 Experiments and discussion

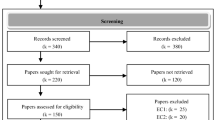

4.1 Training and validation

Training datasets: We trained the network on the UIEB dataset [7] together with the synthetic image dataset [15]. Li et al. [7] built UIEB from 950 real underwater photographs, of which 890 have reference images and 60 are challenging images, covering a variety of underwater scenes and a wide range of seawater degradation [15]. Underwater images do not have a true ground truth for comparison, so the most likely clean image can only be generated by simulation. We refer to these generated images as reference images or reference data. To evaluate the images objectively, 12 image enhancement methods were used to generate candidate reference images: nine underwater image enhancement methods (fusion-based, two-step-based, Retinex-based, UDCP [12], regression-based, GDCP [19], Red Channel, histogram prior, and blurriness-based), two image dehazing methods (DCP [3] and MSCNN), and one commercial application for enhancing underwater images (Dive+ [22]). The 12 enhancement results for each image were compared to select the reference, and the 890 images with references are used as the basis for training.

Choice of hyperparameters: The hyperparameters largely follow the original implementation. Training uses random crops of 128 × 128, a batch size of 16, and the Adam optimizer [23] with β1 = 0.9 and β2 = 0.99. The learning rate is 0.0001, and the network is trained for 50 epochs.

The loss function weights the per-pixel loss by λ1 and the perceptual loss by λ2. We use λ1 = 5 and λ2 = 0.05, the best weights found in past model experiments.

4.2 Experiment settings

Datasets: We used the following datasets for experiment and comparison:

UIEB-90 [7]: The remaining 90 pairs of images in the UIEB [7] dataset.

UIEB-60 [7]: 60 underwater images from the UIEB [7] dataset which are deemed more challenging and do not have corresponding reference images.

SQUID [24]: 16 images taken from the SQUID [24] dataset, which contains 57 underwater image pairs taken at various dive sites in Israel. As with UIEB-60, these data do not have corresponding ground truths or reference images.

Color Check 7 [25]: Images taken by seven different cameras, used to evaluate the robustness and accuracy of underwater color correction.

Compared methods: We compared against previous underwater image enhancement and restoration methods: Peng et al. [26], UWCNN [15], UcycleGAN [6], Ucolor [8], and Dive+ [22]. Their results on the dataset with reference data (UIEB-90 [7]) and on the datasets without reference data (UIEB-60 [7] and SQUID [24]) are compared with the experimental results of this paper.

Evaluation Metrics: For the UIEB-90 [7] dataset, which has reference data, we use the following metrics for objective evaluation: mean squared error (MSE), peak signal-to-noise ratio (PSNR), and structural similarity index measure (SSIM). These quantify the visual quality of the results against the reference data. An MSE closer to 0, a higher PSNR, and an SSIM closer to 1 indicate that the image is closer to the reference.
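The three full-reference metrics can be sketched in NumPy as follows, assuming images are floating-point arrays scaled to [0, 1]. Note that `global_ssim` is a simplification: it evaluates the SSIM formula once over the whole image, whereas the standard SSIM averages the same expression over local windows, so its values will not match library implementations exactly. All function names are illustrative.

```python
import numpy as np

def mse(result, reference):
    """Mean squared error; 0 means the images are identical."""
    result = np.asarray(result, dtype=np.float64)
    reference = np.asarray(reference, dtype=np.float64)
    return float(np.mean((result - reference) ** 2))

def psnr(result, reference, peak=1.0):
    """Peak signal-to-noise ratio in dB; higher is better."""
    err = mse(result, reference)
    if err == 0.0:
        return float("inf")
    return float(10.0 * np.log10(peak ** 2 / err))

def global_ssim(result, reference, peak=1.0):
    """Single-window SSIM over the whole image (simplified, see lead-in)."""
    x = np.asarray(result, dtype=np.float64).ravel()
    y = np.asarray(reference, dtype=np.float64).ravel()
    c1, c2 = (0.01 * peak) ** 2, (0.03 * peak) ** 2
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = np.mean((x - mu_x) * (y - mu_y))
    num = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    den = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return float(num / den)
```

For images in [0, 1], an MSE of 0.01 corresponds to a PSNR of 20 dB, which gives a sense of scale for the values reported in the quantitative evaluation.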

Datasets without ground truth data, such as UIEB-60 [7] and SQUID [24], are evaluated with two no-reference metrics. Underwater Image Quality Measurement (UIQM) [27] evaluates underwater image degradation based on image characteristics including brightness, contrast, saturation, aspect, hue, blur, and noise. The concept of attribute metrics comes from the human visual system (HVS), and the image quality is evaluated as the weighted sum of these features. Underwater Color Image Quality Evaluation (UCIQE) [28] computes features such as hue, luminance contrast, and average saturation in the standard color difference space CIE L*a*b* and is used to measure the quality of underwater images that suffer from problems such as blurring, low contrast, and severe color casts.
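The weighted-sum structure of both no-reference metrics can be sketched as follows. The component scores (colorfulness, sharpness, and contrast measures for UIQM; chroma standard deviation, luminance contrast, and mean saturation for UCIQE) are assumed to be computed elsewhere. The default coefficients are the values commonly quoted from [27] and [28] and should be checked against those papers before use. The function names are hypothetical.

```python
def uiqm_from_components(uicm, uism, uiconm, weights=(0.0282, 0.2953, 3.5753)):
    """Weighted sum of colorfulness, sharpness, and contrast measures."""
    c1, c2, c3 = weights
    return c1 * uicm + c2 * uism + c3 * uiconm

def uciqe_from_components(sigma_c, con_l, mu_s, weights=(0.4680, 0.2745, 0.2576)):
    """Weighted sum of chroma spread, luminance contrast, and mean saturation."""
    c1, c2, c3 = weights
    return c1 * sigma_c + c2 * con_l + c3 * mu_s
```

Because both scores are linear in their components, a method can rank highly on them by boosting contrast or saturation alone, which is one reason to read them alongside the full-reference metrics.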

Recent advancements in underwater image enhancement, such as Hclr-net by Zhou et al. [17] and the generalized physical knowledge-guided dynamic model by Mu et al. [18], provide promising new methods for underwater image restoration. These methods incorporate contrastive learning regularization and dynamic physical models to handle underwater image degradation more effectively. Comparing our proposed method with these state-of-the-art approaches will further validate its performance in handling complex underwater environments. Future work could include experimental comparisons with these models to highlight our method’s relative strengths and weaknesses in terms of robustness and generalization.

As noted in the training settings, the hyperparameters are chosen with reference to Ucolor [8]: the random crop size is 128 × 128, the batch size is 16, the ADAM optimizer parameters \({\upbeta }_{1}\) and \({\upbeta }_{2}\) are set to 0.9 and 0.99, the learning rate is 0.0001, and training runs for 50 epochs. The loss weight \({\uplambda }_{1}\) (per-pixel loss) is set to 5, and \({\uplambda }_{2}\) (perceptual loss) is set to 0.05.

4.3 Qualitative evaluation

We visually compare our experimental results with those of different enhancement and restoration methods in Figs. 6, 7, 8, and 9.

Figure 6 shows that, compared with other methods, our method clearly removes blue and green color casts and yields relatively clear overall visibility, without the halo and color cast that appear in the result of [8] in Fig. 6 (D). It also restores colors close to those of the actual objects. The method in [6] has the most serious color cast; in Fig. 6 (A, B, C) its colors are too bright and show color artifacts, so our method is clearly better in terms of color reproduction and detail preservation. The result of [8] is the closest to ours, but careful observation shows that it still cannot eliminate the green color cast, and our result is also slightly brighter. The results of [6] look strongly turbid and are clearly inferior to ours.

To demonstrate the effectiveness of our proposed method, we also tested it on the UIEB-60 [7] and SQUID [24] datasets, as shown in Figs. 7 and 8. For the turbid original images in Fig. 7, all compared methods leave a serious color cast and cannot overcome the uneven background and green-gray areas. Our method filters out the green color cast and, unlike [8], does not produce unnatural artifacts in the background, while the details of the image are fully presented. In Fig. 7 (A, B, C, D), the color, brightness, and contrast of our results are visually comfortable and pleasant.

For the SQUID [24] dataset, Fig. 8A clearly shows the visual effect of our restored image. Compared with [8], whose result is the closest to ours, our method does not produce the halos that appear in some areas of the reef in the middle of the image, and there are no artifacts in the seawater and sand background. Figure 8B shows a serious color shift in the seawater, which our method avoids. Our method also presents the color and texture details of the sand and the coral reefs better than [8], which shows that it is effective and practical.

4.4 Quantitative evaluation

Table 1 shows that our method achieves a lower MSE and a higher PSNR and SSIM (0.010, 20.93, and 0.877, respectively) than the earlier methods listed above. Compared with Ucolor [8], our method improves significantly on all three metrics. These objective results demonstrate the effectiveness of our method for underwater image restoration.

Table 1 thus shows better full-reference evaluation scores for our method. However, its UCIQE [28] and UIQM [27] scores in Table 2 are not the best.

4.5 Ablation study

We further examine the significance of each color space in the encoder network. Since the two color spaces CIE L*a*b* and Y’CbCr share the same structure of one luma/lightness channel and two chroma channels, the question arises whether one of them is redundant for the model. To analyze this, we ran training again with two versions of our network, w/o Y’CbCr and w/o CIE L*a*b* (the model without Y’CbCr and without CIE L*a*b*, respectively), on the UIEB-90 [7] dataset. The hyperparameters for training were kept the same. MSE, PSNR, and SSIM metrics were used for quantitative comparisons.
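To make the ablation concrete, the sketch below converts an RGB image to Y'CbCr and shows how one color space can be switched off. It is illustrative only: it uses the full-range BT.601 conversion for values in [0, 1], stacks the color spaces along the channel axis (the actual network may feed each color space to a separate encoder path), and omits the CIE L*a*b* conversion for brevity. The names `rgb_to_ycbcr` and `embed_color_spaces` are hypothetical.

```python
import numpy as np

def rgb_to_ycbcr(rgb):
    """Full-range BT.601 RGB -> Y'CbCr for float images in [0, 1]."""
    rgb = np.asarray(rgb, dtype=np.float64)
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    y = 0.299 * r + 0.587 * g + 0.114 * b   # luma
    cb = 0.5 + (b - y) / 1.772              # blue-difference chroma
    cr = 0.5 + (r - y) / 1.402              # red-difference chroma
    return np.stack([y, cb, cr], axis=-1)

def embed_color_spaces(rgb, use_ycbcr=True):
    """Stack the selected color spaces along the channel axis.

    use_ycbcr=False corresponds to the "w/o Y'CbCr" ablation variant.
    """
    channels = [np.asarray(rgb, dtype=np.float64)]
    if use_ycbcr:
        channels.append(rgb_to_ycbcr(rgb))
    return np.concatenate(channels, axis=-1)
```

A neutral gray pixel maps to Y' equal to its gray level and to Cb = Cr = 0.5, which illustrates the one-luma, two-chroma layout that Y'CbCr shares with CIE L*a*b*.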

As can be seen in Table 3, despite using the same training hyperparameters, neither the w/o Y’CbCr nor the w/o CIE L*a*b* version performs as well quantitatively as the original model. By combining both color spaces, Y’CbCr and CIE L*a*b*, for feature encoding, the original model achieves better learning results thanks to its more diverse feature representation.

4.6 Failure cases

Despite relatively good performance on the examples above, the enhancement method works well only if the dynamic range of the input image is below a certain threshold. If the image already has acceptable contrast and brightness distribution, further enhancement is unnecessary: it introduces noise, and the result does not look natural, as shown in Fig. 10.

5 Conclusion

Our proposed approach uses deep learning models and convolutional neural networks to infer the medium transmission model, and it improves upon [3]. We encode features in the Y′CbCr, RGB, and CIE L*a*b* color spaces and pass them through a residual enhancement module that learns the local and global features of the image, which are then weighted and fused. We also design a convolutional network that extracts the characteristics of the medium transmission image. Through the medium transmission guidance module, it estimates the propagation distortion model so that the image can be repaired pixel by pixel, improving its quality and clarity. An attention mechanism is then used to highlight the important features. The redesigned model also shows improved quantitative and qualitative results. By monitoring the learning process and using quantitative metrics, we show how different perceptual loss weights can greatly affect the results and the training. The effectiveness and robustness of our underwater restoration method are demonstrated on different datasets, and the method can be adapted to different types of underwater environments and constraints. The results also demonstrate the importance of medium transmission images for image restoration.

Data availability

No datasets were generated or analyzed during the current study.

References

Wang Y, Song W, Fortino G, Qi L-Z, Zhang W, Liotta A (2019) An experimental-based review of image enhancement and image restoration methods for underwater imaging. IEEE Access 7:140233–140251

Pizer SM, Amburn EP, Austin JD, Cromartie R, Geselowitz A, Greer T, ter Haar Romeny B, Zimmerman JB, Zuiderveld K (1987) Adaptive histogram equalization and its variations. Comput Vision Graph Image Process 39(3):355–368. https://doi.org/10.1016/S0734-189X(87)80186-X

He K, Sun J, Tang X (2011) Single image haze removal using dark channel prior. IEEE Trans Pattern Anal Mach Intell 33(12):2341–2353

Li C et al (2021) ASIF-net: attention steered interweave fusion network for RGB-D salient object detection. IEEE Trans Cybernetics 51(1):88–100

Huang G et al (2022) Convolutional Networks with dense connectivity. IEEE Trans Pattern Anal Mach Intell 44(12):8704–8716

Li C, Guo J, Guo C (2018) Emerging from water: underwater image color correction based on weakly supervised color transfer. IEEE Signal Process Lett 25(3):323–327

Li C et al (2020) An underwater image enhancement benchmark dataset and beyond. IEEE Trans Image Process 29:4376–4389

Li C et al (2021) Underwater Image enhancement via medium transmission-guided multi-color space embedding. IEEE Trans Image Process 30:4985–5000

Han M et al (2020) A review on intelligence dehazing and color restoration for underwater images. IEEE Trans Syst Man Cybernetics Syst 50(5):1820–1832

Jaffe JS (2010) Enhanced extended range underwater imaging via structured illumination. Opt Express 18:12328–12340

Foresti GL, Gentili S, Zampato M (1998) A vision-based system for autonomous underwater vehicle navigation. In: IEEE Oceanic Engineering Society. OCEANS’98. Conference Proceedings (Cat. No.98CH36259), Nice, France, vol 1, pp 195–199

Drews PL, Nascimento ER, Botelho SS, Campos MFM (2016) Underwater depth estimation and image restoration based on single images. IEEE Comput Graph Appl 36(2):24–35

Li C-Y, Guo J-C, Cong R-M, Pang Y-W, Wang B (2016) Underwater image enhancement by dehazing with minimum information loss and histogram distribution prior. IEEE Trans Image Process 25(12):5664–5677. https://doi.org/10.1109/TIP.2016.2612882

Lecun Y, Bottou L, Bengio Y, Haffner P (1998) Gradient-based learning applied to document recognition. Proceed IEEE 86(11):2278–2324. https://doi.org/10.1109/5.726791

Li C, Anwar S, Porikli F (2020) Underwater scene prior inspired deep underwater image and video enhancement. Pattern Recognit 98:107038–107049

Yan K et al (2022) Medium transmission map matters for learning to restore real-world underwater images. Appl Sci. https://doi.org/10.3390/app12115420

Zhou J, Sun J, Li C, Jiang Q, Zhou M, Lam K-M, Zhang W, Fu X (2024) Hclr-net: Hybrid contrastive learning regularization with locally randomized perturbation for underwater image enhancement. Int J Comput Vision. https://doi.org/10.1007/s11263-024-01987

Mu P, Xu H, Liu Z, Wang Z, Chan S, Bai C (2023) A generalized physical-knowledge-guided dynamic model for underwater image enhancement. In: ACM International Conference on Multimedia, pp 7111–7120

Peng Y-T, Cao K, Cosman PC (2018) Generalization of the dark channel prior for single image restoration. IEEE Trans Image Process 27(6):2856–2868. https://doi.org/10.1109/TIP.2018.2813092

Johnson J, Alahi A, Fei-Fei L (2016) Perceptual losses for real-time style transfer and super-resolution. In: European Conference on Computer Vision. Springer, pp 694–711

Deng J, Dong W, Socher R, Li L-J, Li K, Fei-Fei L (2009) ImageNet: a large-scale hierarchical image database. In: IEEE Conference on Computer Vision and Pattern Recognition, pp 248–255

Dive+ website. https://dive.plus/

Kingma DP, Ba JL (2015) Adam: a method for stochastic optimization. In: International Conference on Learning Representations

Berman D, Levy D, Avidan S, Treibitz T (2021) SQUID: stereo quantitative underwater image dataset. Zenodo. https://doi.org/10.5281/zenodo.5744037

Ancuti CO, Ancuti C, De Vleeschouwer C, Bekaert P (2017) Color balance and fusion for underwater image enhancement. IEEE Trans Image Process 27:379–393

Peng Y-T, Cosman PC (2017) Underwater image restoration based on image blurriness and light absorption. IEEE Trans Image Process 26(4):1579–1594. https://doi.org/10.1109/TIP.2017.2663846

Panetta K, Gao C, Agaian S (2016) Human-visual-system-inspired underwater image quality measures. IEEE J Oceanic Eng 41(3):541–551. https://doi.org/10.1109/JOE.2015.2469915

Yang M, Sowmya A (2015) An underwater color image quality evaluation metric. IEEE Trans Image Process 24(12):6062–6071. https://doi.org/10.1109/TIP.2015.2491020

Contributions

These authors contributed equally to this work.

Ethics declarations

Conflict of interest

The authors declare no competing interests.

About this article

Cite this article

Hu, KJ., Pan, YT., Jiang, LW. et al. A robust underwater image enhancement algorithm. J Supercomput 81, 244 (2025). https://doi.org/10.1007/s11227-024-06719-0
