Abstract

Detecting defects in hexagonal barbed wire is a crucial part of building structure safety monitoring. Traditional manual inspection of hexagonal barbed wire is slow and inefficient, and existing deep learning models such as YOLOv5 and YOLOv8 fail to balance detection accuracy and speed for this task. This paper therefore proposes YOLOv8s-GSW, a hexagonal barbed wire defect detection model based on YOLOv8s. The model replaces part of the convolutions in the backbone network with GSConv to improve detection speed, and replaces the C2f blocks in the feature extraction network with the VoV-GSCSP structure, which further improves detection speed while balancing detection accuracy. In addition, the WIoUv3 loss function replaces the original CIoU loss function, which accelerates network convergence and improves detection accuracy. Experimental results show that YOLOv8s-GSW achieves mAP@0.5 and mAP@0.5:0.95 scores of 94.32% and 45.70%, respectively, exceeding those of YOLOv8s by 3.26% and 2.75%. The improved model also reduces model size by 10.97%. YOLOv8s-GSW therefore detects hexagonal barbed wire defects more effectively while meeting industrial requirements.
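The two reported metrics differ only in how strictly a predicted box must overlap the ground truth. The sketch below illustrates this, assuming the common COCO-style convention in which mAP@0.5 uses a single IoU threshold of 0.5 and mAP@0.5:0.95 averages over thresholds from 0.5 to 0.95 in steps of 0.05. The evaluation protocol actually used in the paper is not shown in this section, and the box coordinates and helper names here are hypothetical.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap region (zero if the boxes are disjoint).
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


# Assumed COCO-style thresholds: 0.50, 0.55, ..., 0.95.
THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]


def matched_thresholds(pred, truth):
    """Thresholds at which the prediction counts as a true positive."""
    score = iou(pred, truth)
    return [t for t in THRESHOLDS if score >= t]


# Hypothetical ground-truth breakpoint box and a slightly shifted prediction.
truth = (10, 10, 50, 50)
pred = (14, 12, 52, 50)

print(f"IoU = {iou(pred, truth):.3f}")
print("Counts as a hit at thresholds:", matched_thresholds(pred, truth))
```

In this example the prediction counts as correct at the 0.5 threshold but not at 0.85 and above. A looser match of this kind contributes to mAP@0.5 while being penalized under the stricter thresholds, which is why the mAP@0.5:0.95 score is typically much lower than the mAP@0.5 score for the same model.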

Data availability

The data cannot be made publicly available upon publication because they contain sensitive personal information. The data that support the findings of this study are available from the authors upon reasonable request.

Funding

This work was supported in part by the Program for Innovative Research Team in University of Tianjin under Grant TD13-5036, and in part by the Tianjin Science and Technology Popularization Project under Grant 22KPXMRC00090.

Author information

Contributions

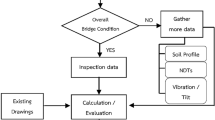

S and L completed the main part of the manuscript, T drew Figures 1-3, and H drew Figures 5-7. S, L, T, and H reviewed the manuscript.

Ethics declarations

Conflict of interest

The authors declare no conflict of interest.

About this article

Cite this article

Song, L., Lu, S., Tong, Y. et al. YOLOv8s-GSW: a real-time detection model for hexagonal barbed wire breakpoints. J Supercomput 81, 222 (2025). https://doi.org/10.1007/s11227-024-06738-x

DOI: https://doi.org/10.1007/s11227-024-06738-x