Abstract

This paper presents an image descriptor that improves image recognition and retrieval by combining deep learning features with handcrafted features. The method integrates high-level semantic features extracted with InceptionResNet-V2 with color and texture features to form a comprehensive representation of image content. The descriptor is evaluated through extensive experiments on six benchmark datasets for single-label classification (Corel-1K, VS, OT, QT, SUN-397, and ILSVRC-2012) and on two for multi-label classification (COCO and NUS-WIDE), achieving high performance throughout. The results indicate that the proposed method is versatile and robust across single-label recognition, multi-label recognition, and image retrieval, and that it outperforms several state-of-the-art methods. This work advances image representation and is broadly applicable across computer vision domains.
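The general idea of fusing deep and handcrafted features can be illustrated with a minimal sketch. It is not the authors' implementation: the abstract does not say which color or texture descriptors are used or how the parts are combined. The sketch assumes a per-channel color histogram, a simplified 4-neighbour local binary pattern for texture, and plain concatenation of L2-normalized blocks. The short vectors passed as `deep_features` are hypothetical placeholders standing in for InceptionResNet-V2 embeddings, and all function names are illustrative.

```python
import math

def l2_normalize(vec):
    """Scale a vector to unit Euclidean length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm > 0 else list(vec)

def color_histogram(image, bins=4):
    """Per-channel histogram of an RGB image given as rows of (r, g, b) tuples in 0..255."""
    hist = [0.0] * (3 * bins)
    for row in image:
        for pixel in row:
            for channel, value in enumerate(pixel):
                hist[channel * bins + min(value * bins // 256, bins - 1)] += 1.0
    return hist

def texture_histogram(image):
    """Histogram of 4-neighbour binary codes on grey levels (a simplified LBP, assumed here)."""
    grey = [[sum(pixel) / 3.0 for pixel in row] for row in image]
    hist = [0.0] * 16
    for y in range(1, len(grey) - 1):
        for x in range(1, len(grey[0]) - 1):
            centre = grey[y][x]
            neighbours = (grey[y - 1][x], grey[y][x + 1], grey[y + 1][x], grey[y][x - 1])
            code = sum(1 << i for i, n in enumerate(neighbours) if n >= centre)
            hist[code] += 1.0
    return hist

def fuse(deep_features, image):
    """Concatenate L2-normalized deep, color, and texture blocks into one unit-length descriptor."""
    fused = []
    for block in (deep_features, color_histogram(image), texture_histogram(image)):
        fused.extend(l2_normalize(block))
    return l2_normalize(fused)

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a > 0 and norm_b > 0 else 0.0

# Toy 4x4 images; the deep vectors below are made-up placeholders, not real embeddings.
red = [[(255, 0, 0)] * 4 for _ in range(4)]
blue = [[(0, 0, 255)] * 4 for _ in range(4)]

database = {
    "red": fuse([0.9, 0.1, 0.0], red),
    "blue": fuse([0.0, 0.2, 0.8], blue),
}
query = fuse([0.8, 0.2, 0.0], red)

# Retrieval: rank database entries by similarity to the query, best match first.
ranked = sorted(
    ((name, cosine_similarity(query, desc)) for name, desc in database.items()),
    key=lambda item: item[1],
    reverse=True,
)
print(ranked)
```

Normalizing each block before concatenation keeps a long deep feature vector from dominating the shorter handcrafted histograms. This is one common design choice among several; weighted fusion or a learned projection would be alternatives.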

Data availability statement

The data that support the findings of this study are available from the corresponding author upon reasonable request.

References

Liu Q, Zhou T, Cai Z, Yuan Y, Xu M, Qin J, Ma W (2023) Turning backdoors for efficient privacy protection against image retrieval violations. Inf Process Manage 60(5):103471

Giveki D (2021) Improving the performance of convolutional neural networks for image classification. Opt Memory Neural Netw 30:51–66

Rastegar H, Giveki D (2023) Designing a new deep convolutional neural network for content-based image retrieval with relevance feedback. Comput Electr Eng 106:108593

Giveki D, Dalvand O, Rastegar H (2022) Introducing a new dataset (Iranian Plate) for Iranian license plate recognition. J Mach Vis Image Process 9(2):81–95

Xu X, Bao X, Lu X, Zhang R, Chen X, Lu G (2023) An end-to-end deep generative approach with meta-learning optimization for zero-shot object classification. Inf Process Manage 60(2):103233

Rastegar H, Giveki D (2023) Designing a new deep convolutional neural network for skin lesion recognition. Multimedia Tools and Applications 82(12):18907–18923

Giveki D (2024) Human action recognition using an optical flow-gated recurrent neural network. Int J Multimed Inf Retr 13(3):29

Giveki D, Soltanshahi MA, Rastegar H (2024) Shape classification using a new shape descriptor and multi-view learning. Displays 82:102636

Anami BS, Sagarnal CV (2023) A fusion of hand-crafted features and deep neural network for indoor scene classification. Malays J Comput Sci 36(2):193–207

Gkelios S, Sophokleous A, Plakias S, Boutalis Y, Chatzichristofis SA (2021) Deep convolutional features for image retrieval. Expert Syst Appl 177:114940

Ma W, Zhou T, Qin J, Xiang X, Tan Y, Cai Z (2023) Adaptive multi-feature fusion via cross-entropy normalization for effective image retrieval. Inf Process Manage 60(1):103119

Gupta S, Sharma K, Dinesh DA, Thenkanidiyoor V (2021) Visual semantic-based representation learning using deep CNNs for scene recognition. ACM Trans Multimed Comput, Commun Appl (TOMM) 17(2):1–24

Dong R, Liu M, Li F (2019) Multilayer convolutional feature aggregation algorithm for image retrieval. Math Probl Eng 2019:9794202

Zhang Z, Xie Y, Zhang W, Tian Q (2019) Effective image retrieval via multilinear multi-index fusion. IEEE Trans Multimed 21(11):2878–2890

Cao B, Araujo A, & Sim J (2020) Unifying deep local and global features for image search. In Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part XX 16 (pp. 726–743). Springer International Publishing

Reena MR, Ameer PM (2022) A content-based image retrieval system for the diagnosis of lymphoma using blood micrographs: an incorporation of deep learning with a traditional learning approach. Comput Biol Med 145:105463

Pathak D, Raju USN (2021) Content-based image retrieval using feature-fusion of GroupNormalized-Inception-Darknet-53 features and handcraft features. Optik 246:167754

Singh D, Mathew J, Agarwal M, Govind M (2023) DLIRIR: deep learning based improved reverse image retrieval. Eng Appl Artif Intell 126:106833

Zhang N, Shamey R, Xiang J, Pan R, Gao W (2022) A novel image retrieval strategy based on transfer learning and hand-crafted features for wool fabric. Expert Syst Appl 191:116229

Wang Y, Haq NF, Cai J, Kalia S, Lui H, Wang ZJ, Lee TK (2022) Multi-channel content based image retrieval method for skin diseases using similarity network fusion and deep community analysis. Biomed Signal Process Control 78:103893

Wang C, Peng G, De Baets B (2022) Embedding metric learning into an extreme learning machine for scene recognition. Expert Syst Appl 203:117505

Liu S, Tian G, Xu Y (2019) A novel scene classification model combining ResNet based transfer learning and data augmentation with a filter. Neurocomputing 338:191–206

Ji J, Zhang T, Jiang L, Zhong W, Xiong H (2019) Combining multilevel features for remote sensing image scene classification with attention model. IEEE Geosci Remote Sens Lett 17(9):1647–1651

Yu D, Guo H, Xu Q, Lu J, Zhao C, Lin Y (2020) Hierarchical attention and bilinear fusion for remote sensing image scene classification. IEEE J Sel Top Appl Earth Observations Remote Sens 13:6372–6383

Zheng L, Yang Y, Tian Q (2017) SIFT meets CNN: a decade survey of instance retrieval. IEEE Trans Pattern Anal Mach Intell 40(5):1224–1244

Wan J, Wang D, Hoi SCH, Wu P, Zhu J, Zhang Y, and Li J (2014) Deep learning for content-based image retrieval: a comprehensive study. In Proceedings of the 22nd ACM International Conference on Multimedia (pp. 157–166)

Monroy R, Lutz S, Chalasani T, Smolic A (2018) Salnet360: Saliency maps for omni-directional images with cnn. Signal Process: Image Commun 69:26–34

Lai S, Jin L, Yang W (2017) Toward high-performance online hccr: a cnn approach with dropdistortion, path signature and spatial stochastic max-pooling. Pattern Recogn Lett 89:60–66

Razavian AS, Sullivan J, Carlsson S, Maki A (2016) Visual instance retrieval with deep convolutional networks. ITE Trans Media Technol Appl 4(3):251–258

Noh H, Araujo A, Sim J, Weyand T, & Han B (2017) Large-scale image retrieval with attentive deep local features. In Proceedings of the IEEE International Conference on Computer Vision (pp. 3456–3465)

Mei S, Min W, Duan H, Jiang S (2019) Instance-level object retrieval via deep region CNN. Multimed Tools Appl 78:13247–13261

Zheng L, Yang Y, Tian Q (2018) Sift meets cnn: a decade survey of instance retrieval. IEEE Trans Pattern Anal Mach Intell 40(5):1224–1244

Mohamed O, Mohammed O, Brahim A, et al (2017) Content-based image retrieval using convolutional neural networks. In 1st International Conference on Real Time Intelligent Systems (pp. 463–476). Springer

Rian Z, Christanti V and Hendryli J (2019) Content-based image retrieval using convolutional neural networks. In 2019 IEEE International Conference on Signals and Systems (ICSigSys) (pp. 1–7). IEEE

Sitaula C, Shahi TB, Marzbanrad F and Aryal J (2023) Recent advances in scene image representation and classification. Multimed Tools Appl 1–28

Zhao H, Zhou W, Hou X, Zhu H (2020) Double attention for multi-label image classification. IEEE Access 8:225539–225550

Zhou F, Huang S, Xing Y (2021) Deep semantic dictionary learning for multi-label image classification. Proc AAAI Conf Artif Intell 35(4):3572–3580

Yan Z, Liu W, Wen S, Yang Y (2019) Multi-label image classification by feature attention network. IEEE Access 7:98005–98013

Zhu K, and Wu J (2021) Residual attention: a simple but effective method for multi-label recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision (pp. 184–193)

Chen T, Wang W, Pu T, Qin J, Yang Z, Liu J, Lin L (2024) Dynamic correlation learning and regularization for multi-label confidence calibration. IEEE Trans Image Process 33(4811):4823

Gangwar A, González-Castro V, Alegre E, Fidalgo E, Martínez-Mendoza A (2024) DeepHSAR: Semi-supervised fine-grained learning for multi-label human sexual activity recognition. Inf Process Manage 61(5):103800

Wang Z, Fang Z, Li D, Yang H, Du W (2021) Semantic supplementary network with prior information for multi-label image classification. IEEE Trans Circuits Syst Video Technol 32(4):1848–1859

Zhang J, Wu Q, Shen C, Zhang J, Lu J (2018) Multilabel image classification with regional latent semantic dependencies. IEEE Trans Multimed 20(10):2801–2813

Liang J, Xu F, Yu S (2022) A multi-scale semantic attention representation for multi-label image recognition with graph networks. Neurocomputing 491:14–23

Chen T, Lin L, Chen R, Hui X, Wu H (2020) Knowledge-guided multi-label few-shot learning for general image recognition. IEEE Trans Pattern Anal Mach Intell 44(3):1371–1384

Singh IP, Ghorbel E, Oyedotun O, Aouada D (2024) Multi-label image classification using adaptive graph convolutional networks: from a single domain to multiple domains. Comput Vis Image Underst 247:104062

Qu X, Che H, Huang J, Xu L, Zheng X (2023) Multi-layered semantic representation network for multi-label image classification. Int J Mach Learn Cybern 14(10):3427–3435

Wang X, Li Y, Luo T, Guo Y, Fu Y, and Xue X (2021) Distance restricted transformer encoder for multi-label classification. In 2021 IEEE International Conference on Multimedia and Expo (ICME) (pp. 1–6). IEEE.

Lanchantin J, Wang T, Ordonez V, and Qi Y (2021) General multi-label image classification with transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (pp. 16478–16488)

Zhou W, Dou P, Su T, Hu H, Zheng Z (2023) Feature learning network with transformer for multi-label image classification. Pattern Recogn 136:109203

Dao SD, Zhao H, Phung D, Cai J (2023) Contrastively enforcing distinctiveness for multi-label image classification. Neurocomputing 555:126605

Wang JZ, Li J, Wiederhold G (2001) SIMPLIcity: semantics-sensitive integrated matching for picture libraries. IEEE Trans Pattern Anal Mach Intell 23(9):947–963

Vogel J, & Schiele B (2004) Natural scene retrieval based on a semantic modeling step. In Image and Video Retrieval: 3rd International Conference, CIVR 2004, Dublin, Ireland, July 21-23, 2004. Proceedings 3 (pp. 207-215). Springer Berlin Heidelberg

Oliva A, Torralba A (2001) Modeling the shape of the scene: a holistic representation of the spatial envelope. Int J Comput Vision 42:145–175

Quattoni A, and Torralba A (2009) Recognizing indoor scenes. In 2009 IEEE Conference on Computer Vision and Pattern Recognition (pp. 413–420)

Xiao J, Hays J, Ehinger KA, Oliva A, and Torralba A (2010) Sun database: large-scale scene recognition from abbey to zoo. In 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (pp. 3485–3492). IEEE

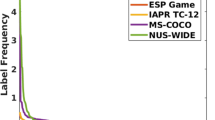

Lin TY, Maire M, Belongie S, Hays J, Perona P, Ramanan D, ... and Zitnick CL (2014) Microsoft coco: common objects in context. In Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, September 6–12, Proceedings, Part V 13 (pp. 740–755). Springer International Publishing

Chua TS, Tang J, Hong R, Li H, Luo Z, and Zheng Y (2009) Nus-wide: a real-world web image database from national university of singapore. In Proceedings of the ACM International Conference on Image and Video Retrieval (pp. 1–9)

Deng J, Dong W, Socher R, Li LJ, Li K, and Fei-Fei L (2009) Imagenet: a large-scale hierarchical image database. In 2009 IEEE Conference on Computer Vision and Pattern Recognition (pp. 248–255)

Vieira GS, Fonseca AU, Sousa NM, Felix JP, Soares F (2023) A novel content-based image retrieval system with feature descriptor integration and accuracy noise reduction. Expert Syst Appl 232:120774

Chen ZM, Wei XS, Wang P, and Guo Y (2019) Multi-label image recognition with graph convolutional networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (pp. 5177–5186)

Kumar S, Jain A, Kumar Agarwal A, Rani S, Ghimire A (2021) [Retracted] object-based image retrieval using the u-net-based neural network. Comput Intell Neurosci 2021(1):4395646

Howard AG, Zhu M, Chen B, Kalenichenko D, Wang W, Weyand T, ... and Adam H (2017) Mobilenets: efficient convolutional neural networks for mobile vision applications. arXiv preprint arXiv:1704.04861

Huang G, Liu Z, Van Der Maaten L, and Weinberger KQ (2017) Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (pp. 4700–4708)

Zoph B, Vasudevan V, Shlens J, and Le QV (2018) Learning transferable architectures for scalable image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (pp. 8697–8710).

Szegedy C, Ioffe S, Vanhoucke V, and Alemi A (2017) Inception-v4, inception-resnet and the impact of residual connections on learning. In Proceedings of the AAAI Conference on Artificial Intelligence 31(1)

Szegedy C, Vanhoucke V, Ioffe S, Shlens J, and Wojna Z (2016) Rethinking the inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (pp. 2818–2826)

Fran C (2017) Deep learning with depth wise separable convolutions. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR)

Irtaza A, Adnan SM, Ahmed KT, Jaffar A, Khan A, Javed A, Mahmood MT (2018) An ensemble based evolutionary approach to the class imbalance problem with applications in CBIR. Appl Sci 8(4):495

Ahmed KT, Ummesafi S, Iqbal A (2019) Content based image retrieval using image features information fusion. Inf Fus 51:76–99

Sharif U, Mehmood Z, Mahmood T, Javid MA, Rehman A, Saba T (2019) Scene analysis and search using local features and support vector machine for effective content-based image retrieval. Artif Intell Rev 52:901–925

Bibi R, Mehmood Z, Yousaf RM, Saba T, Sardaraz M, Rehman A (2020) Query-by-visual-search: multimodal framework for content-based image retrieval. J Ambient Intell Humaniz Comput 11:5629–5648

Mohammed MA, Oraibi ZA and Hussain MA (2023) Content based image retrieval using fine-tuned deep features with transfer learning. In 2023 2nd International Conference on Computer System, Information Technology, and Electrical Engineering (COSITE) (pp. 108–113)

Kumar S, Pal AK, Varish N, Nurhidayat I, Eldin SM, Sahoo SK (2023) A hierarchical approach based CBIR scheme using shape, texture, and color for accelerating retrieval process. J King Saud Univ-Comput Inf Sci 35(7):101609

Salih SF, Abdulla AA (2023) An effective bi-layer content-based image retrieval technique. J Supercomput 79(2):2308–2331

Xie GS, Zhang XY, Yan S, Liu CL (2015) Hybrid CNN and dictionary-based models for scene recognition and domain adaptation. IEEE Trans Circuits Syst Video Technol 27(6):1263–1274

Cheng X, Lu J, Feng J, Yuan B, Zhou J (2018) Scene recognition with objectness. Pattern Recogn 74:474–487

Dixit M, Li Y, Vasconcelos N (2019) Semantic fisher scores for task transfer: using objects to classify scenes. IEEE Trans Pattern Anal Mach Intell 42(12):3102–3118

Tang P, Wang H, Kwong S (2017) G-MS2F: GoogLeNet based multi-stage feature fusion of deep CNN for scene recognition. Neurocomputing 225:188–197

Wang C, Peng G, De Baets B (2020) Deep feature fusion through adaptive discriminative metric learning for scene recognition. Inf Fus 63:1–12

Gupta S, Dileep AD, Thenkanidiyoor V (2021) Recognition of varying size scene images using semantic analysis of deep activation maps. Mach Vis Appl 32(2):52

Lin C, Lee F, Cai J, Chen H, Chen Q (2021) Global and graph encoded local discriminative region representation for scene recognition. Comput Model Eng Sci 128(3):985–1006

Sitaula C, Xiang Y, Aryal S, Lu X (2021) Scene image representation by foreground, background and hybrid features. Expert Syst Appl 182:115285

Wang C, Peng G, De Baets B (2022) Joint global metric learning and local manifold preservation for scene recognition. Inf Sci 610:938–956

Song C, and Ma X (2023) Srrm: Semantic region relation model for indoor scene recognition. In 2023 International Joint Conference on Neural Networks (IJCNN) (pp. 01–08). IEEE

Liu M, Yu Y, Ji Z, Han J, Zhang Z (2024) Tolerant self-distillation for image classification. Neural Netw 174:106215

Brock A, De S, Smith SL, and Simonyan K (2021) High-performance large-scale image recognition without normalization. In International Conference on Machine Learning (pp. 1059–1071). PMLR

Zhai X, Kolesnikov A, Houlsby N, and Beyer L (2022) Scaling vision transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (pp. 12104–12113)

Wang W, Xie E, Li X, Fan DP, Song K, Liang D, Shao L (2022) Pvt v2: improved baselines with pyramid vision transformer. Comput Visual Media 8(3):415–424

Wortsman M, Ilharco G, Gadre SY, Roelofs R, Gontijo-Lopes R, Morcos AS, ... & Schmidt L (2022) Model soups: averaging weights of multiple fine-tuned models improves accuracy without increasing inference time. In Proceedings of the 39th International Conference on Machine Learning, Baltimore, Maryland

Liu Z, Mao H, Wu CY, Feichtenhofer C, Darrell T, and Xie S (2022) A convnet for the 2020s. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (pp. 11976–11986)

Touvron H, Cord M, and Jégou H (2022) Deit iii: revenge of the vit. In European Conference on Computer Vision (pp. 516–533). Cham: Springer Nature Switzerland

Dehghani M, Djolonga J, Mustafa B, Padlewski P, Heek J, Gilmer J, ... and Houlsby N (2023) Scaling vision transformers to 22 billion parameters. In International Conference on Machine Learning (pp. 7480–7512). PMLR

Singh M, Duval Q, Alwala KV, Fan H, Aggarwal V, Adcock A, ... and Misra I (2023b) The effectiveness of MAE pre-pretraining for billion-scale pretraining. In Proceedings of the IEEE/CVF International Conference on Computer Vision (pp. 5484–5494)

Liu S, Chen T, Chen X, Chen X, Xiao Q, Wu B, ... and Wang Z (2023) More convnets in the 2020s: Scaling up kernels beyond 51x51 using sparsity. In: International Conference on Learning Representations (ICLR)

Sicre R, Zhang H, Dejasmin J, Daaloul C, Ayache S, and Artières T (2023) DP-Net: learning discriminative parts for image recognition. In 2023 IEEE international conference on image processing (ICIP) (pp. 1230–1234). IEEE

Kim D, Heo B, and Han D (2024) DenseNets reloaded: paradigm shift beyond ResNets and ViTs. arXiv preprint arXiv:2403.19588

Li S, Wang Z, Liu Z, Tan C, Lin H, Wu D, ... and Li SZ (2023) Moganet: multi-order gated aggregation network. In The 12th International Conference on Learning Representations

Fein-Ashley J, Feng E, and Pham M (2024) HVT: a comprehensive vision framework for learning in non-euclidean space. arXiv preprint arXiv:2409.16897

Srivastava S, and Sharma G (2024) OmniVec2. a novel transformer based network for large scale multimodal and multitask learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (pp. 27412–27424)

Guo H, Zheng K, Fan X, Yu H, and Wang S (2019) Visual attention consistency under image transforms for multi-label image classification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (pp. 729–739)

Funding

No funding was received for conducting this study.

Author information

Contributions

Davar Giveki contributed to conceptualization, methodology, software, supervision, writing, review, and editing. Sajad Esfandyari contributed to software, writing, review, and editing.

Ethics declarations

Conflict of interest

The authors declare no competing interests.

About this article

Cite this article

Giveki, D., Esfandyari, S. Semantic image representation for image recognition and retrieval using multilayer variational auto-encoder, InceptionNet and low-level image features. J Supercomput 81, 346 (2025). https://doi.org/10.1007/s11227-024-06792-5

DOI: https://doi.org/10.1007/s11227-024-06792-5