Abstract

Many current embedded systems comprise heterogeneous computing components including quite powerful GPUs, which enables their application across diverse sectors. This study demonstrates the efficient execution of a medium-sized self-supervised audio spectrogram transformer (SSAST) model on a low-power system-on-chip (SoC). Through comprehensive evaluation, including real time inference scenarios, we show that GPUs outperform multi-core CPUs in inference processes. Optimization techniques such as adjusting batch size, model compilation with TensorRT, and reducing data precision significantly enhance inference time, energy consumption, and memory usage. In particular, negligible accuracy degradation is observed, with post-training quantization to 8-bit integers showing less than 1% loss. This research underscores the feasibility of deploying transformer neural networks on low-power embedded devices, ensuring efficiency in time, energy, and memory, while maintaining the accuracy of the results.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

In recent years, the field of artificial intelligence (AI) has witnessed unprecedented advancements that have permeated various sectors of our daily lives. From natural language processing to computer vision, AI-powered technologies have demonstrated remarkable capabilities in tasks such as language translation, image recognition, and autonomous decision-making [1,2,3]. These accomplishments have been primarily driven by developing deep learning models, among which convolutional neural networks (CNNs) and Transformers have emerged as outstanding architectures [4, 5].

However, the surge in AI capabilities is not confined to traditional computing environments. One of the most exciting frontiers in AI deployment lies in integrating these cutting-edge models into GPU-based embedded systems. These embedded systems, characterized by their compact size and resource constraints, are commonly found in applications ranging from autonomous vehicles and smart appliances to healthcare devices and industrial automation [6,7,8]. Incorporating AI models into these systems can enhance their functionality, enabling real time data analysis, predictive maintenance, and intelligent decision-making, while operating within the confines of limited computational resources. It is essential to recognize the accelerating pace of AI adoption across various sectors. The healthcare industry benefits from AI-powered diagnostics and drug discovery, while the automotive sector harnesses AI for autonomous driving and safety systems. Additionally, the Internet of Things (IoT) ecosystem relies on AI to process sensor data and enable intelligent automation [9]. These applications underscore the urgency of optimizing AI models for embedded systems, as doing so can unlock new possibilities and drive innovation in these domains.

Usually, the main factor taken into account in the field of neural networks is the quality of the result of the inference process, that is, the main goal is to improve their accuracy. Another factor that is usually of interest to optimize is the efficiency with which the inference is carried out, especially if it is necessary to carry it out in real time. Additionally, if the process is to be performed on an embedded system, it is also very important to reduce the size of the model and the memory used during inference, since these types of devices tend to have much smaller memory than more powerful discrete GPUs. Finally, in many applications using embedded devices, it is also important to reduce energy consumption, as this has a direct impact on battery life.

This paper presents a comprehensive evaluation of a Transformer in the context of GPU-based embedded systems. We assess its performance, resource utilization, and real time capabilities, shedding light on the practicality of its deployment in such environments.

Specifically, we evaluate a self-supervised audio transformer (SSAST), which is a vision transformer (ViT) model applied to keyword spotting. We perform the evaluation of the inference process on a low-power NVIDIA Jetson Orin Nano SoC, which includes a six-core ARM CPU and an NVIDIA Ampere GPU. We apply several optimization techniques to accelerate inference and compare the inference time, computational cost, and energy consumption of different versions of the model. Experimental results show that by using NVIDA’s TensorRT framework, choosing an appropriate batch size, and using half floating point data precision, we can significantly reduce inference time and energy consumption, while maintaining low memory cost.

The main contributions of this paper are as follows:

-

Demonstrate the feasibility of running the inference process of a medium-size Vision Transformer on a low-power embedded system. Specifically, we run the inference process of an SSAST model on the CPU and GPU of a low-power Jetson Orin Nano board.

-

Optimize the inference process using various techniques such as modifying the batch size, compiling the model, or reducing the data precision.

-

Evaluate and compare the computational, space, and energy efficiency of different versions of the SSAST model in different scenarios, including real time processing of single samples and batch processing of multiple samples.

The rest of the paper is structured as follows. Section 2 summarizes the related work. Section 3 briefly describes the experimental environment, dataset and model versions. Section 4 analyses the experimental results. Finally, Sect. 5 summarizes the main conclusions of the paper.

2 Deep learning algorithms in keyword spotting

Keyword spotting (KWS) detects particular words from a learned vocabulary in an audio signal containing speech. The development of different solutions to this problem has enabled real time voice interaction with many devices in recent years, forcing KWS algorithms to become more lightweight and computationally efficient. This enables real time interaction, while working persistently on those devices, such as smartphones, IoT, or wearable devices, where computational resources and power supply are limited.

Deep learning algorithms have become state-of-the-art techniques for KWS tasks such as wake word detection and speech command recognition. As classic approaches, CNN [10] [11] and RNN [12] [13] architectures were used and proved to be effective. Then, Vision Transformer (ViT) [14] based models such as audio spectrogram transformer [15] (AST) or Keyword Transformer [16] (KWT) were introduced as a more innovative architecture. These models rely on splitting audio spectrograms into patches and applying self-attention mechanisms between those patches to get a deeper understanding of the information patterns that can be recognized in the audio. These architectures proved that they could perform better than CNNs and RNNs in some computer vision and audio recognition tasks. Nevertheless, transformer-based architectures only outperform other classical deep learning techniques when the amount of training data is enough to allow them to generalize properly.

SSAST, [17], was introduced to tackle its predecessor’s, the Audio Spectrogram Transformer (AST), data inefficiency. SSAST is pretrained with an extensive unlabeled corpus of audio and speech data, enabling the model to generate audio representations that can be fine-tuned with smaller labeled datasets for specific downstream tasks. In this work, we fine-tuned the pretrained SSAST with the Speech Commands Dataset [18] for keyword spotting.

3 GPU-based experimental environment

We used two devices to perform the training and inference experiments, including very different CPUs and GPUs. For training and fine tuning, we used a server containing 2 Intel Xeon Gold 6126 at 2.6GHz processors, each with 12 cores and a total of 64 GB of memory. The server also contained a high-performance Tesla V100 GPU with the Volta architecture consisting of 5120 CUDA cores, 640 Tensor cores and a total memory of 32 GB.

To perform the inference experiments we use both the CPU and GPU of a Jetson Orin Nano developer kit [19]. This particular system consists of a quad-core ARM Cortex A78AE processor (or CPU), a battery-saving ARM Cortex A78AE shadow dual-core, and an NVIDIA Ampere GPU with 1024 CUDA cores and 32 Tensor cores with a total me of 8 GB of memory shared between the CPU and GPU. The main features of the GPUs that include the experimental devices can be seen in Table 1.

The two GPUs represent very different types of device with different targets. While the Ampere GPU is a low-power device that can be used in embedded systems to reduce power consumption, the Tesla V100 is a high-performance GPU that provides massive data parallelism with thousands of cores, suitable for use in HPC nodes.

The Jetson module, in which the experiments were conducted, was pre-installed with the JetPack 5.1.2 release. This version encompasses Jetson Linux 35.4.1 BSP featuring Linux Kernel 5.10 and an Ubuntu 20.04 derived root file system.

The application has been developed and containerized inside a Docker image to ensure reproducibility in all Jetson Orin Nano modules running on top of JetPack 5.1.2. Important version dependencies, such as specific versions for the module and intermediate dependencies for the libraries, are included in the Docker image, being the most important dependencies: PyTorch 2.0.0, TorchVision 0.14.1, TorchAudio 0.13.1, Torch-TensorRT 1.1.0 and PyTorch Quantization 2.1.3. These dependencies require special wheels for building on the Jetson Orin Nano.

3.1 Dataset

To test the models’ performance in Keyword Spotting task, we used Google Speech Commands, a well-known and widely adopted dataset, as a benchmark for this task [18]. The dataset consists of 105,829 utterances of 35 words. Each sample is a one-second audio clip encoded as linear 16-bit single-channel Pulse Code Modulation (PCM) values with 16kHz sample rate. 2,618 different speakers recorded the corpus. The dataset is divided into an approximate 80:10:10 ratio for training, validation, and test. 1000 samples from the test split are randomly selected to perform the inference experiments.

3.2 SSAST model detail

SSAST architecture has three versions varying in the dimensions of the attention heads and the embedding size, which can be seen in Table 2.

The Tiny model has almost 15 times fewer parameters than the Base version and gets only a 0.8% penalty in terms of accuracy; this makes the Tiny version obtain the best trade-off between the accuracy and number of parameters. Therefore, to get the best possible performance baseline, we ran our transformer-based model tests using the Tiny version of the SSAST architecture.

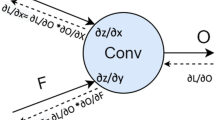

3.3 Model compilation and inference acceleration

The field of deep learning has seen continuous evolution, with the development of tools and frameworks aimed at improving model efficiency and deployment. An exemplary contribution to this evolution is Torch-TensorRT ,Footnote 1 a tool that seamlessly integrates the strengths of PyTorch Footnote 2 and NVIDIA’s TensorRT Footnote 3 to enable accelerated deep learning inference. This integration leverages TensorRT’s optimization capabilities to significantly improve the speed of deep neural network inference on NVIDIA GPUs within the PyTorch ecosystem. This streamlined solution empowers researchers and practitioners in real-world applications, particularly in scenarios, where low latency and high throughput are critical. Its value lies in its ability to leverage the performance optimisations offered by TensorRT, thus advancing the practical implementation of deep learning models.

Techniques to reduce computational load without compromising accuracy and enhance runtime efficiency have become pivotal in the realm of deep learning. TensorRT accelerates deep learning model speed through precision calibration, dynamic tensor memory optimization, and layer fusion techniques, capitalizing on NVIDIA GPU hardware capabilities. One notable strength lies in its implementation of precision-optimized kernels that utilize specialized hardware features to expedite mathematical operations. Furthermore, TensorRT supports concurrent execution and efficient batch processing, enabling the simultaneous processing of multiple inputs. The tool incorporates a range of optimizations, including kernel auto-tuning and layer-wise optimization, tailoring the inference process for specific model architectures and hardware configurations.

In terms of flexible compilation processes that optimize model performance, users can select precision modes such as float32 and float16 during the compilation phase based on application requirements. float32 precision ensures high accuracy, while float16 enables faster inference by using the floating point format of half-precision. In addition, TensorRT’s post-training quantization compiles the model to int8 precision, reducing the precision of weights and activations to 8 bits. This strategy has been shown to minimize memory requirements and accelerate inference speed in CNNs and traditional NNs, and the current focus is on implementing, perfecting, and developing these strategies in attention-based models. Due to the limited resource availability of the Jetson Orin Nano and the limited version packages compatible with the JetPack version, some acceleration strategies could not be executed.

The rigorous evaluation of the Jetson Orin Nano module capabilities of Vision Transformer models involved extensive testing, including normal non-compiled models running on CPU and GPU architectures, as well as compiled models using TensorRT with various precision modes. In particular, the use of Vision Transformer models required preparatory steps to account for their dynamic shape output of attention heads, due to variable input information length, including recording expected shapes and then freezing the model prior to compilation to ensure compatibility with TensorRT’s optimization pipeline. Importantly, integration of post-training quantization in int8 precision required a beforehand calibration step to adjust the floating point weights to the int8 scale to ensure optimal model performance within the specified precision.

A common effect of reducing precision and performing quantization on weights is the loss of accuracy. Techniques such as Quantization Aware Training, where the model is trained or fine-tuned during some epochs with additional layers that mimic the quantified behavior, aim to reduce this performance loss. However, while performing the experiments, an accuracy benchmark was also carried out to measure this effect on Post Training Quantization.

4 Experimental study

4.1 Experimental setup

To assess the efficiency of model inference in the embedded device, three experiments were conducted to compare the performance of the original, non-compiled model with the compiled model in different input scenarios. The first experiment, which we call dummy, involved a dummy input inference test, which employed random data simulating the input size and data type, without recording the output. The audio sample after applying the spectrogram results in an image of shape \((batch\_size, 128, 128)\). This simulation aimed to evaluate the raw inference speed and resource utilization of the models in the absence of output-related computations. The second experiment, which we call sample, used a single tensor of data corresponding to one sample included in the dataset, to mimic a scenario, where real-world inputs are processed individually. This test evaluated the efficiency of each model in handling single-input and batched instances, without changing data. Lastly, the third experiment, which we call iter, used the entire test dataset compromising the 1000 utterances, iterating over the data reflecting a more comprehensive and realistic workload on batched-oriented processing.

In the dummy and sample experiments, 100 iterations are executed for each model, and time, memory, and power are recorded for each of the iterations, then averaged over the batch size to obtain the mean and variance per sample for every case. On the iter experiment, an entire iteration over the 1000 utterances of the dataset is performed, averaged on the number of executions, and batch size of the execution to obtain the results per sample.

The next listings show the main steps performed on each experiment involving both the CPU and GPU.

The figures and data presented in the next sections will comment on the dummy experiment, concluding with a graphical comparison between the three experiments.

4.2 Inference performance

In the first study, an inference performance evaluation was performed using the CUDA event API to record inference time during model execution. The CUDA event API includes calls to create and destroy events, record events, and compute the elapsed time in milliseconds between two recorded events. This value has a resolution of approximately \(500\,\text {ns}\).

For a comprehensive evaluation, we extended the experiments to investigate the impact of different batch sizes on model inference when the experiments were conducted on the CPU and GPU of the Jetson Orin Nano board. The GPU, powered by CUDA and Tensor cores, demonstrates its inherent advantage in parallel processing, significantly accelerating inference speed compared to CPU execution, as seen in Fig. 1. The bar plot showcases the mean and variance of 100 iterations of model inference on a dummy input with different batch sizes, each iteration being a single execution of a specific batch size, measuring the execution time of the model. CPUs, designed for general-purpose computing, often struggle to efficiently handle numerous repetitive floating point multiplications inherent in deep learning models due to their serial processing characteristics. GPUs, on the other hand, have remarkable acceleration capabilities that make them ideal for the computational demands of deep learning tasks due to the provided parallelism by the architecture. The results of the experiments show a significant acceleration when using GPUs, especially for larger batch sizes. In particular, the GPU performed the inference six times faster than the CPU when using a batch size of 1 and the speedup increased to an impressive thirty-two when using a batch of size 16.

An additional study was conducted to explore the impact of batch size on inference time on the GPU, consisting of experiments with batch sizes ranging from 1 to 256, highlighting the inherent relationship between batch processing and overall inference efficiency. Torch models without any accelerated compilation are tested, that is, just the CUDA implementation on GPU by making use of the PyTorch package pytorch.cuda, which adds support for CUDA Tensor types. Results can be seen in Fig. 2. A discernible trend of decreasing inference times is evident as the batch size increases. These results suggest that larger batch sizes lead to more efficient use of computational resources, parallelizing the inference in the GPU, resulting in a decrease in inference time.

The use of TensorRT for model compilation results in significant improvements in inference acceleration, as can be seen in the following figures. Figure 3 depicts the comparison between the raw and compiled models and the batch size.

The improvement is particularly evident in the torch half-precision compilation, which shows a remarkable twofold acceleration compared to non-compiled models. This increased efficiency is due to TensorRT’s ability to utilize optimized precision kernels, resulting in faster computation with reduced numerical precision. Integer quantization did not provide the expected acceleration because, as mentioned earlier, some strategies could not be implemented due to memory constraints and library versions.

In addition, the compiled models show a significant decrease in the standard deviation of inference times, indicating improved consistency and reliability of performance, as seen by the error bar, which is almost unnoticeable at larger batch sizes. Half compile provides a consistent twofold reduction in inference time, whereas the other compilation strategies fail to provide any noticeable inference acceleration at larger batch sizes.

4.3 Memory performance

Memory utilization also is an important feature to look at, since it is limited in Edge devices. If a single process takes the whole shared memory, other tasks could be affected or even interrupted, so a study was made on how the batch size and the compilation affect resource utilization. The PyTorch CUDA package support for CUDA tensor types has the utility to measure memory allocated during the execution of the script.

Reducing the inference time provokes an increase in memory utilization, as seen in Fig. 4. Increasing the batch size further than 32 does not lead to any noticeable improvement, while greatly increasing the memory requirements and preventing other processes from using the hardware.

Increasing the batch size, which is a common strategy for optimizing GPU utilization, reduces inference times, while demonstrating a moderate increase in memory allocation on the compiled models. Figure 5 depicts the comparison of the memory requirements between uncompiled and compiled versions of the model, with different precision modes. The compiled models are more efficient in balancing computational speed and memory utilization compared to their non-compiled counterparts, and the half-precision compiled model exhibits reduced memory utilization on higher batch sizes. This showcases the overall effectiveness of TensorRT in accelerating inference and achieving more consistent and resource-efficient model execution.

4.4 Energy performance

The increasing number of Internet of Things (IoT) devices at the network’s edge requires energy-efficient solutions to support extended operation, particularly in resource-constrained environments. Low-power devices are necessary to reduce energy consumption, while maintaining computational capabilities, ensuring seamless functionality without undue strain on power resources. Using hardware platforms like the Jetson Orin Nano, which has a power limit of 15 W, is a strategic way to achieve optimal performance within power constraints. Therefore, it is critical to understand and optimize power consumption in Edge, IoT, and Embedded systems to facilitate the adoption and sustainability of connected devices in modern electronic ecosystems.

To quantify the power consumption of the inference process in W, we leverage the pmlib framework [20]. This tool allows us to aggregate instantaneous power readings from the internal power monitoring sensors of the Jetson Orin Nano [21]. The readings are collected at a sampling frequency of 10 Hz and are subsequently averaged over the duration of the inference. This process involves utilizing three real time power sensors, each providing insights into the power consumption of distinct power domains on the platform: the main module with all its components, the GPU, and the CPU. We present the energy consumption of the main module in J by multiplying the power consumption by the respective device’s time utilization during the inference process.

The recorded time was obtained for the whole inference cycle, and normalized for a single sample.

Observing Fig. 6, we can conclude that longer inference times and resource utilization associated with CPU execution directly translate into increased power consumption, which is a challenge in energy-constrained environments. This phenomenon underscores the critical importance of leveraging GPU acceleration to reduce power consumption.

The energy performance analysis results provide insights into the relationship between model compilation, inference speed, and energy consumption. This reduction in energy consumption is directly correlated with the observed acceleration in inference speed. The energy consumption for GPU models was 6 times lower for a batch size of 1 and 10 times lower for a batch size of 16 compared to CPU-compiled models. By increasing the batch size, we speed up the time by making better use of GPU resources, but this improved use stresses those resources and implies an increase in GPU power consumption per unit of time.

Next, a comparison is made between non-compiled and compiled models to assess the impact on the energy efficiency of inference on accelerated models and precision modes. Figure 7 shows the results obtained for the power consumption study.

Notably, when comparing batch sizes for the CUDA implementation with and without compilation, a similar effect on results was observed as in the timing study, with a more pronounced reduction in energy consumption than an acceleration in inference speed. However, it is important to note that the energy efficiency gains achieved with compiled models did not show a proportional reduction with increasing batch size. This study suggests that the benefits of model compilation on energy consumption are more nuanced and influenced by factors beyond simple batch processing efficiency. This could be due to the intensive acceleration and resource utilization behind the scenes of TensorRT compilation.

4.5 Experiments comparison

In this subsection we compare the results obtained with the three experiments described in section 4.1. The results of the dummy experiments showed the raw inference efficiency of the GPU, where all dummy samples are transferred before the inference process starts, and no additional transfer or computation is performed on the GPU or CPU. In the sample experiments, we need to add the cost of transferring the output from the GPU and computing the loss in CPU after inference for each batch, and computing the accuracy after inference for all batches. Finally, in the iter experiments, as we use different samples in each batch, we have to add the cost of transferring each of them to the GPU before performing the inference.

The iter experiments allow us to compare the accuracy of the different models used in our experiments with the accuracy of the baseline Tiny version. While, the baseline version has an accuracy of 97.2% on the original test dataset, the model achieves an accuracy of 96% on our reduced dataset. The compiled models with float32 and float16 floating point precision do not reduce the accuracy of the base model at all, while the post-training quantization in int8 reduces the accuracy by less than \(1\%\).

In terms of inference time, Fig. 8 compares the results of the three experiments with the raw, non-compiled CUDA execution of the model. In this case there is little difference between the times of the three experiments. In all three cases the variance of the inference time decreases as we increase the batch size and is larger in the case of the iter experiment due to the effect of parsing and transferring the whole dataset using small batches.

In Fig. 9 the same comparison of inference time is performed with the compiled TensorRT model using half-precision, which is the fastest version of the model. In this case the effects of copying new data in and out of the GPU in the iter experiment are more noticeable. Note that the copy time does not decrease when compiling the model, while the inference time decreases significantly.

On the other hand, the iter experiment has a significantly higher power consumption than the other two experiments, even using the non-compiled CUDA version of the model, when the inference time is very similar, as can be seen in Fig. 10. This could be due to the effect of loading and preprocessing new samples on the CPU before transferring each batch from the CPU to the GPU.

The difference in power consumption between the three experiments increases when we use the TensorRT versions of the model with half-precision, as can be seen in Fig. 11. Using the compiled version reduces the power consumption of the iter model with respect to the non-compiled version for small batches, but increases it when we use large batches. On the contrary, in the case of the other two experiments, the compiled versions clearly reduce the power consumption of the non-compiled versions for all batch sizes.

5 Conclusions

In recent years, the power and flexibility of embedded systems has increased enormously. On the one hand, this has extended their range of applications to all kinds of areas, including healthcare, automotive and Industry 4.0. On the other hand, today’s devices can combine multi-core processors, powerful GPUs, FPGAs or application-specific components for neural network acceleration.

In this paper, we demonstrate that it is possible to efficiently run a medium-sized neural network on a low-power SoC. Specifically, we perform the inference process of a vision transformer SSAST model with six million parameters on a Jetson Orin Nano SoC. We have evaluated and compared different scenarios, including the real time inference of individual dummy or real samples and the batched processing of multiple samples.

Experimental results confirm that the GPU is much more efficient than the multi-core CPU in performing the inference process. We have used different techniques to speed up the inference process and also to reduce its space and energy cost. Firstly, by increasing the batch size it is possible to reduce the time and energy cost of processing very significantly, although at the cost of increasing the spatial cost. By choosing an appropriate batch size, it is possible to optimize all three parameters. In our case the best trade-off proved to be 16.

On the other hand, the use of compiled models with tools such as TensorRT can greatly accelerate the inference process on NVIDIA GPUs compared to non-compiled versions of the same models, while reducing the energy and spatial cost. Finally, reducing the data precision by using half-precision floating point elements further reduces the inference time and energy cost.

Results show that there is no accuracy decrease in floating point precision compilations, while in the post-training quantization compilation in 8 bit integers reduces the accuracy less than 1%.

In short, the appropriate use of various optimization techniques allows the transformer neural network inference process to be performed efficiently in terms of time, energy and memory on low-power embedded devices. It maintains the accuracy of the results and opens up the possibility of using them in a wide range of environments and applications.

For future work, we plan to explore the capabilities offered by the new JetPack 6.1, which provides support for the latest versions of TensorRT. This will enable the efficient compilation of attention-based models, which are becoming increasingly essential in various AI applications. We also propose conducting quantization aware training (QAT) to evaluate the potential for compensating for the minimal precision loss typically observed with quantization, aiming to maintain model accuracy while benefiting from the reduced computational load.

Furthermore, we will investigate the performance gains of executing models directly in C/C++ using TensorRT, potentially incorporating more optimized libraries like JAX and XLA. This approach could yield even greater acceleration and efficiency in edge AI deployments. Additionally, we consider the possibility of leveraging more powerful edge devices, such as the Jetson Xavier, which offers enhanced processing capabilities for demanding AI tasks.

Lastly, we plan to extend our experiments by compiling and measuring the execution of Large Language Models (LLMs) on edge devices. This will be facilitated by using TensorRT-LLM, a specialized tool that optimizes LLM deployment on resource-constrained environments, further broadening the scope and impact of our research in edge AI.

Availability of data and materials

No additional data or materials available.

References

Deng L, Liu Y (2018) Deep learning in natural language processing. Springer

He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 770–778 (2016)

Schwarting W, Alonso-Mora J, Rus D (2018) Planning and decision-making for autonomous vehicles. Annual Rev Control, Robot Auto Syst 1(1):187–210

O’shea, K., Nash, R.: An introduction to convolutional neural networks. arXiv preprint arXiv:1511.08458 (2015)

Dosovitskiy, A.: An image is worth 16x16 words: Transformers for image recognition at scale. arXiv preprint arXiv:2010.11929 (2020)

An S, Tuncel Y, Basaklar T, Ogras UY (2023) A Survey of Embedded Machine Learning for Smart and Sustainable Healthcare Applications. Springer

Silva RL, Canciglieri Junior O, Rudek M (2022) A road map for planning-deploying machine vision artifacts in the context of industry 4.0. Journal of Industrial and Production Engineering 39(3):167–180

de Sousa, F.L.M., da Silva, M.J., de Meira Santos, R.C.C., Silva, M.C., Oliveira, R.A.R.: Deep-Learning-Based Embedded ADAS System. IEEE (2021)

Singh R, Gill SS (2023) Edge AI: a survey. Internet of Things and Cyber-Phys Syst 3:71–92

Aafaq, N., Saleem, M., Khan, J.T., Abbasi, I.H.: Convolutional neural networks for deep spoken keyword spotting. In: 2023 3rd International Conference on Artificial Intelligence (ICAI), pp. 170–175 (2023). 10.1109/ICAI58407.2023.10136648

Sainath, T.N., Parada, C.: Convolutional neural networks for small-footprint keyword spotting. In: Proc. Interspeech 2015, pp. 1478–1482 (2015). 10.21437/Interspeech.2015-352

Chen, G., Parada, C., Sainath, T.N.: Query-by-example keyword spotting using long short-term memory networks. In: 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 5236–5240 (2015). 10.1109/ICASSP.2015.7178970

Fernández S, Graves A, Schmidhuber J (2007) An application of recurrent neural networks to discriminative keyword spotting. In: de Sá JM, Alexandre LA, Duch W, Mandic D (eds) Artificial Neural Networks - ICANN 2007. Springer, Berlin, Heidelberg, pp 220–229

Dosovitskiy, A., Beyer, L., Kolesnikov, A., Weissenborn, D., Zhai, X., Unterthiner, T., Dehghani, M., Minderer, M., Heigold, G., Gelly, S., Uszkoreit, J., Houlsby, N.: An image is worth 16x16 words: Transformers for image recognition at scale. CoRR arxiv:abs/2010.11929 (2020)

Gong, Y., Chung, Y., Glass, J.R.: AST: Audio Spectrogram Transformer. CoRR arxiv:abs/2104.01778 (2021)

Berg, A., O’Connor, M., Cruz, M.T.: Keyword Transformer: A Self-Attention Model for Keyword Spotting. ISCA (2021). 10.21437/interspeech.2021-1286

Gong, Y., Lai, C.-I.J., Chung, Y.-A., Glass, J.: SSAST: Self-Supervised Audio Spectrogram Transformer (2022)

Warden, P.: Speech commands: A dataset for limited-vocabulary speech recognition. arXiv preprint arXiv:1804.03209 (2018)

NVIDIA: NVIDIA Jetson Orin Nano Developer Kit User Guide. https://manuals.plus/nvidia/jetson-orin-nano-developer-kit-manual (April 2023)

Barrachina, S., Barreda, M., Catalán, S., Dolz, M.F., Fabregat, G., Mayo, R., Quintana-Ortí, E.: An integrated framework for power-performance analysis of parallel scientific workloads. Energy, 114–119 (2013)

NVIDIA: NVIDIA Jetson Linux Developer Guide. Release 35.4.1. (2023). https://docs.nvidia.com/jetson/archives/r35.4.1/DeveloperGuide/

Funding

Open Access funding provided thanks to the CRUE-CSIC agreement with Springer Nature. This work has been supported by the Spanish Ministry of Science and Innovation under projects PID2020-113656RB-C21 and PID2022-137048OA-C43 funded by MCIN/AEI/10.13039/501100011033 and by “ERDF A way of making Europe" as well as TED2021-131401B-C21 and TED2021-131401A-C22.

Author information

Authors and Affiliations

Contributions

All authors contributed equally to this work.

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Martin-Salinas, I., Badia, J.M., Valls, O. et al. Evaluating and accelerating vision transformers on GPU-based embedded edge AI systems. J Supercomput 81, 349 (2025). https://doi.org/10.1007/s11227-024-06807-1

Accepted:

Published:

DOI: https://doi.org/10.1007/s11227-024-06807-1