Abstract

Quantum computing holds significant promise for solving complex problems, but simulating quantum circuits on classical computers remains essential due to the current limitations of quantum hardware. Efficient simulation is crucial for the development and validation of quantum algorithms and quantum computers. This paper explores and compares various strategies to leverage different levels of parallelism to accelerate the contraction of tensor networks representing large quantum circuits. We propose a new parallel multistage algorithm based on communities. The original tensor network is partitioned into several communities, which are then contracted in parallel. The pairs of tensors of the resulting network can be contracted in parallel using a GPU. We use the Girvan–Newman algorithm to obtain the communities and the contraction plans. We compare the new algorithm with two other parallelisation strategies: one based on contracting all the pairs of tensors in the GPU and another one that uses slicing to cut some indexes of the tensor network and then MPI processes to contract the resulting slices in parallel. The new parallel algorithm gets the best results with different well-known quantum circuits with a high degree of entanglement, including random quantum circuits. In conclusion, the results show that the main factor that limits the simulation is the space cost. However, the parallel multistage algorithm manages to reduce the cost of sequential simulation for circuits with a high number of qubits and allows simulating larger circuits.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Quantum computing can have a huge social and economic impact in areas such as finance, agriculture, pharmacology, materials science, energy, and computer and telecommunications security [1, 2]. Nowadays, we are in the noisy intermediate-scale quantum (NISQ) era [3]. We face an enormous technological challenge to create and adequately control the millions of qubits that may be required to achieve the impact mentioned. However, even with today’s quantum computers, we already have algorithms for solving optimisation problems, quantum simulation or quantum machine learning that are being applied to real small-scale problems [4, 5].

However, given the significant shortage of quantum computers, it is essential to develop scalable and efficient quantum simulators on classical computers to design and test new quantum algorithms or to evaluate the reliability of current quantum computers [6, 7]. The main problem with these simulators is scalability, since a small increase in the number of qubits, the number of gates, or the depth of the quantum circuits can lead to an exponential increase in the spatial and temporal cost of the simulation.

In fact, the main factor limiting the possibility of simulating large quantum circuits is the enormous spatial cost involved in representing their complete state. For example, storing the complete state of 50 qubits as single-precision complex numbers requires \(2^{50} \times 8\) bytes, that is, about 9 PB of memory.

In some cases, one possible solution to reduce the simulation cost is to use tensor networks to represent quantum circuits [8, 9]. This makes it possible to simulate them by contracting the tensors that make up the network and taking advantage of their well-known properties and operations, which have been studied for decades. A fundamental aspect that determines the cost of contraction is the order in which different pairs of tensors are contracted. Choosing an optimal order can reduce this cost by several orders of magnitude, but it is an NP-hard problem. Parallelising the contraction process can also significantly reduce the cost.

Two levels of parallelism can be used to carry out the contraction process. First, we can parallelise the contraction of each pair of tensors. Second, we can simultaneously contract different groups of tensor pairs. This paper explores and compares different strategies to leverage both levels of parallelism in order to accelerate the contraction process and to be able to contract larger circuits. In the following, we present a new parallel algorithm that allows different groups of tensors to be contracted in parallel. This algorithm has three stages. The first stage starts by applying a community detection method to partition the tensor network into a set of sub-tensor networks with a low degree of connection between them. It then contracts in parallel the networks associated with the different communities. The last stage contracts the tensor network built from the contracted communities. We have also implemented a variant of this algorithm that can contract the final tensor network in parallel using different strategies. We have used the well-known Girvan–Newman algorithm to detect the communities in the tensor network and also to define efficient plans to contract each of the sub-tensor networks [10, 11].

We compare the behaviour of the proposed parallel algorithm with the sequential version using the Girvan–Newman method and with two different parallelisation strategies to contract the network. The first strategy defines an efficient plan for determining the order in which the tensor network will be reduced, and then uses the GPU to contract in parallel each pair of tensors. The second strategy uses a slicing strategy to cut some indices of the tensor network, uses MPI processes to contract in parallel the resulting sliced networks, and combines the results of these contractions [6, 12]. All parallel algorithms have been implemented and tested using the QXTools simulation environment [13]. This package uses the Julia language and relies on other packages to generate and manipulate tensors, find efficient contraction plans and slicing indexes, or run simulations on GPUs or large clusters of computers .Footnote 1 The QXTools framework was selected for its unique advantages, such as being open-source and extensible, which facilitates customisation and scalability. Julia combines a high level of abstraction with computational efficiency, enabling the use of advanced parallelisation techniques that exploit both CPU and GPU resources effectively. Additionally, QXTools integrate state-of-the-art libraries for tensor operations, providing robust tools for optimising contraction plans and executing simulations across diverse hardware configurations. These features make it particularly well-suited for tensor network-based simulations, where performance and flexibility are paramount.

We evaluate the spatial and temporal performance of the new multistage parallel algorithm on a multicore multiprocessor including a high-end GPU with three types of circuits with different characteristics in terms of size, connectivity and entanglement: circuits associated with the quantum Fourier transform (QFT) algorithm, random quantum circuits (RQC) and Greenberger–Horne–Zeilinger (GHZ) circuits. We also assess the impact of the number of communities used on the cost of the different stages of the algorithm. We further evaluate the performance of a hybrid variant of this algorithm that uses the GPU to perform the contractions of each pair of tensors during its third stage.

Finally, we compare the performance of the proposed algorithm with two parallel methods implemented in the QXTools environment. One uses the GPU to contract each pair of tensors and another applies slicing to the original network and uses MPI processes to contract in parallel each sliced network.

To ensure reproducibility, the code used in this work has been made publicly available in a repository .Footnote 2

The main contributions of this work are the following:

-

Design, implementation and evaluation of a new multistage parallel algorithm for contracting tensor networks and simulating quantum circuits. The algorithm uses a community detection method to partition the network and then contracts the sub-tensor networks in parallel.

-

Implementation and evaluation of a hybrid variant of the proposed algorithm that uses a GPU to contract each pair of tensors in parallel during the final stage of the method.

-

Evaluation and comparison of the performance of the proposed algorithm with other two algorithms based on different parallelisation strategies. One that uses the GPU to contract in parallel each pair of tensors of the original tensor network. Another strategy that uses slicing to generate several smaller tensor networks and then contracts them in parallel using MPI processes.

-

Evaluation and comparison of the different parallel algorithms using tensor networks associated with three well-known types of quantum circuits. These include the random quantum circuits (RQC) designed by Google to demonstrate quantum supremacy.

-

The newly proposed multistage parallel algorithm significantly speeds up the contraction process, outperforming other parallelisation strategies and allowing larger circuits to be simulated.

The remainder of the paper is structured as follows. In Sect. 2, we introduce the main related work. Section 3 presents the background that supports our algorithms. In Sect. 4, we describe the parallel algorithms. Section 5 studies and analyses the experimental results. Finally, in Sect. 6, the main conclusions drawn are summarised.

2 Related work

Many quantum circuit simulators are available for classical computers [14]. Their main challenge is that as the number of qubits increases, the temporal and spatial cost of the simulation rapidly escalates. To overcome this problem, most simulators employ some form of parallelisation strategy.

Since the publication of Markov and Shi’s paper in 2008 [9], which introduced the use of tensor network contraction to simulate quantum circuits, many simulators have adopted this technique to represent and operate quantum circuits. These simulators have often been used to address the problem of amplitude sampling in random quantum circuits (RQC). In particular, they have been applied to the large-scale Sycamore-53 circuits used by Google in their demonstration of quantum supremacy.

Google used qFlex for CPU simulations [6] and TAL-SH for GPU simulations [15]. The latter has evolved and improved, resulting in the qExaTN simulator [16]. The Jet simulator combines slicing and parallelism techniques, using asynchronous tasks to avoid redundant computation of contractions and reduce simulation time on both shared memory multiprocessors and GPUs [17]. These computational accelerators are also used in the AC-QPD simulator Footnote 3 with RQC circuits, and the techniques developed have been integrated into the widely used CoTenGra library .Footnote 4

In this work, we have used a community detection algorithm in graphs to partition the tensor network into several sub-tensor networks and contract each of them in parallel. Different community detection methods can be found in the literature, as they can be applied in very different areas such as social networks, transportation networks or telecommunication networks. Among these detection methods, those based on hierarchical clustering, modularity maximisation, statistical inference or clique detection in graphs stand out [18]. In this work, we have relied on a method developed by Girvan and Newman (GN) based on the centrality measure called edge betweenness [10]. This method produces high quality communities with tensor networks compared to other detection methods such as Louvain, the Leiden method [19], or the spin-glass method [20], among others, included in the NetworkX Footnote 5 or python-igraph Footnote 6 libraries.

Another reason for choosing the GN method is that while it detects the communities, it can also generate a contraction plan that defines the order in which the tensors are contracted. The version of the method implemented in [21] was compared in [11] with other contraction ordering methods using a variety of tensor networks, including those associated with random circuits (RQC) and the quantum approximate optimisation algorithm (QAOA).

3 Background

3.1 Tensor networks and quantum circuits

Tensors are the natural generalisation of vectors and matrices. A tensor of rank r is defined as an element \(d_1 \times \cdots \times d_r\) existing in the space \(\mathbb {C}^{d_1,\cdots ,d_r}\). Thus a vector with d complex elements (dimension d) can be considered as an object in the space \(\mathbb {C}^d\) and is therefore a tensor of rank 1, while a matrix with dimensions \(n \times m\) can be considered as an object in the space \(\mathbb {C}^{n \times m}\) and is therefore a tensor of rank 2. A tensor can be represented as a geometric shape with edges associated with its indices and a rank equal to the number of edges. Thus a tensor network is a graph where the vertices are tensors and the edges are the indices connecting them.

Quantum circuits can be easily represented by tensor networks, where the gates correspond to tensors and the indices correspond to the qubits that connect these gates. In this case, the dimension of all indices is 2, since they can only take the values 0 and 1.

The fundamental operation used with tensor matrices is contraction, which corresponds to a tensor product of two tensors followed by a trace over the indices they share. An example is shown graphically in Fig. 1, where the contraction between two rank 3 tensors sharing 2 indices is shown.

In 2008, Markov and Shi laid the foundations for using tensor networks to simulate quantum circuits [9]. For the simulation, it is sufficient to contract all its tensors in pairs until a single tensor is obtained. If the edges corresponding to the input and output qubits of the circuit are kept open, a unitary matrix representing its full functionality is computed. If the input edges are closed with specific qubit values, a complex vector of size \(2^n\) is computed, where n is the number of qubits. This vector contains probability amplitudes for all possible output states of the circuit. Markov and Shi showed that any quantum circuit of polynomial size (number of gates) with logarithmic treewidth can be simulated deterministically in polynomial time. The treewidth of a graph G is equal to the minimum width among all possible tree decompositions of the graph.

The time and space cost of reducing (contracting) the network to a single tensor strongly depends on the order in which the tensors are contracted. Given a tensor network, determining the optimal contraction order is an NP-hard problem [22]. For this reason, various heuristics have been defined to determine the contraction order of the tensors in a way that approximates the optimal order.

3.2 Methods for ordering contractions

The aim of the ordering methods is to approximate the optimal contraction order that reduces its temporal and spatial cost, i.e. to construct an efficient tensor network contraction plan. Both costs depend exponentially on the rank of the tensors involved in the pairwise contractions performed. In particular, the rank of the largest tensor obtained in the contraction process is a reliable indicator of the cost of contracting the entire network [9].

There are several categories of methods for performing tensor contractions. The most important methods, directly inspired by the results of Markov and Shi, are based on finding a tree decomposition of the linear graph associated with the tensor network. One of the early algorithms introduced to determine the order was the tree decomposition minimum fill-in method [23]. Other methods include FlowCutter [24], and Tamaki [25, 26].

One heuristic on which we have based our parallel algorithm relies on the method proposed by Gray and Courtis in [11]. An order or contraction plan for the tensor network is obtained by applying the Girvan–Newman procedure [10] for community detection, focusing on edges with high betweenness centrality.

3.3 Graph communities

Modelling a quantum circuit as a complex network based on graphs allows us to approach the simulation problem using well-established tools in graph theory, which are also used in other fields such as the study of social networks. In this analogy, the vertices of a graph represent websites, while the edges represent links or connections between sites. The study of protein interactions is another example: proteins would be at the nodes of the graph, while common metabolic functions would act as edges. Many other applications can be found in [27].

Another notable observation from empirical studies of these real-world applications is the presence of distinct internal structures known as communities or clusters [28], as we can see in the Fig. 2. These communities exhibit cohesive behaviour or denser internal links between their members compared to links with members outside the community, similar to membership of a particular club [29]. Detecting these communities by identifying specific patterns that reveal hidden internal structures is compelling because of the wide range of applications in different fields.

The lack of a rigorous definition of the concept of communities leads to inaccuracies and ambiguities in obtaining them, creating a group of algorithms with different approaches to the same goal [28]. Therefore, it is necessary to define certain criteria or metrics that allow us to evaluate the quality of the communities that form a partition of the graph. One of the most commonly used metrics to assess the suitability of disjoint communities, i.e. where nodes can only belong to one of the communities in which the graph is partitioned, is modularity [30]. Modularity compares the number of connections (lines) within communities to the number of connections between communities. If most of the connections are within communities, the modularity will be high, indicating that the division into communities is of high quality. If there are many links between different communities, the modularity will be low, indicating that the division is of low quality. A high modularity indicates that the communities are well-defined and clearly separated, while a lower value indicates that the communities lack clarity and are not well separated.

Several methods have been proposed to obtain communities that aim to maximise modularity, such as extreme optimisation methods [31], spectral theory [32], simulated annealing [33], or greedy algorithms such as the Louvain method [34].

3.4 Betwenness centrality

Another key metric we will use to detect communities is centrality. This is usually associated with nodes and describes in some way their position or “importance” within the graph. Centrality measures are often classified as dynamic, based on the flow through the node, and topological, based on the structure of the network.

In this paper we will focus on the measure known in the literature as betweenness centrality. It is based on the idea that a node is “more central” if the greatest number of shortest paths between any two nodes in the network pass through it. If such nodes were removed from the complex network, the communication of the remaining components would be hindered and the network would tend to become disconnected in communities.

Let G be a connected, undirected, and unweighted graph with a set of nodes V. We define \(\sigma (s, t)\) as the number of shortest paths between nodes s and t, and \(\sigma (s, t | v)\) as the number of shortest paths between s and t that pass through node v. Additionally, if \(s = t\), then \(\sigma (s, t) = 1\), and if \(s = v\) or \(t = v\), then \(\sigma (s, t | v) = 0\). The betweenness centrality of v is defined as:

Typically, this expression is adjusted with a normalisation factor to account for uncomputed paths.

If we consider edges instead of nodes, we obtain the equivalent measure known in the literature as edge betweenness centrality, which we will use in our algorithms.

3.5 Girvan–Newman algorithm

To select the most suitable community detection algorithm, we compared the performance and the quality of the communities generated by several algorithms discussed in Sect. 2. Some of these algorithms allow us to specify the desired number of communities, even if they do not yield the highest quality based on metrics such as modularity. Other algorithms, such as FastGreedy [35], only provide the number of communities that maximises the modularity metric. The quality of the communities obtained largely depends on the type of circuit, and for a given number of qubits, it is similar across most methods. Ultimately, we chose the Girvan–Newman (GN) algorithm because, as highlighted in [11], this method produces a contraction tree that approximates the optimal solution in terms of spatial cost. Moreover, it allows us to select the number of communities that ensures the contraction process can be executed within the available memory. In many cases, selecting the number of communities that maximises modularity results in a failure of the contraction process due to insufficient memory.

The GN algorithm is a widely used method for detecting communities in networks [10]. The GN algorithm works by removing edges from the graph in descending order of betweenness centrality (1) until unconnected components corresponding to communities are formed. As edges are further removed from these components, smaller and smaller communities are formed, producing what is known as a dendrogram.

If we continue the process until we obtain communities with a single node, the resulting list corresponds to a contraction plan for the tensor network associated with the graph. To contract the network, we simply have to go through the list in reverse order, contracting the pairs of tensors connected by the edges one by one.

3.6 Slicing tensor networks

The slicing technique is a strategy for reducing the size of tensor networks and thus allowing larger networks to be contracted [36]. This strategy consists of cutting one of the indices of the network and generating several sub-tensor networks by setting the value of the cut ends to the possible values of the index. In the case where the tensor network represents a quantum circuit, these values are set to \(|0\rangle\) or \(|1\rangle\). After slicing, each sub-tensor network is contracted and the result of the contraction of the whole network can be obtained as the sum of the results of the sub-tensor networks. The strategy can be applied to any number of indices by exponentially increasing the number of sub-tensor networks to be contracted. The slicing technique has two fundamental advantages. First, the size of the contracted tensors is smaller, thus reducing the spatial cost of contraction. Second, the contraction of the different sub-networks can be performed in parallel [11].

While slicing facilitates the simulation of larger circuits by reducing the need for memory and computational resources, it also introduces complexities in its implementation, such as the correct division and combination of sub-tensor networks, and can introduce additional overheads that affect overall efficiency. There are several techniques for performing slicing on a circuit, each with its own approach and complexity [12, 37, 38]. Specifically, QXTools uses Shutski’s tree-trimming method [39] to select efficient indices of a network to slice, attempting to reduce the treewidth of the resulting sub-tensor networks.

4 Parallel contraction methods for tensor networks

We can use different levels of parallelism to contract a tensor network. At the finest level of parallelism, we can perform each contraction of a pair of tensors in parallel. At a coarser-grained level, we can contract several pairs of tensors or several larger groups of tensors in parallel. Our proposed multistage algorithm can use the second level of parallelism or a combination of both levels. We will also describe other parallelisation strategies implemented in the QXTools framework and compare their experimental behaviour.

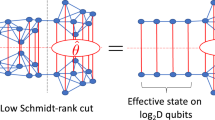

4.1 Multistage parallel algorithm

The proposed multistage parallel algorithm, that we call ComPar, is based on partitioning the tensor network associated with the circuit, contracting the resulting partitions in parallel, reducing each of them to a tensor, and finally contracting these tensors. A good partitioning of the network should try to find the partitions with fewer indices connecting them, so that the tensors obtained from each partition have a rank as small as possible. A promising strategy to approach this stage of the algorithm is to obtain communities in the graph associated with the tensor network as partitions. By definition, these algorithms aim to find groups of nodes in the graph that have strong internal connections and weak connections with nodes outside their group.

Therefore, the multistage algorithm can be summarised as follows:

The total cost of this algorithm depends significantly on the rank of the tensors generated in the different stages. In order to reduce the rank of the tensors that may be generated after stage 2, it is important to obtain communities with the lowest possible degree of interconnection in stage 1. In the experiments, we used a widely known method for community detection in graphs: the GN algorithm. However, we could use any community detection method in the first stage of the algorithm, such as those discussed in Sect. 3.3.

There are other aspects to consider in this algorithm. On the one hand, the contraction order applied in stages 2 and 3 can be obtained using any heuristic, such as the GN algorithm (which can also be used to obtain the communities) or others discussed in Sect. 3.2. On the other hand, the degree of parallelism of the algorithm is determined by the number of communities we obtain in stage 1. In general, more communities increase the total number of sub-circuits that can be contracted in parallel. So, if the system has enough resources, these sub-circuits can be contracted in parallel, reducing the execution time of stage 2. However, obtaining a large number of communities can significantly increase the cost of stage 3. Despite obtaining high-quality communities, the range of tensors to be contracted in the final stage is usually much larger than in stage 2, and in highly entangled circuits they can be connected by many indices. Since the cost of contraction increases exponentially with such a range, the final stage can be the bottleneck for achieving high performance in the parallel algorithm.

We could reduce the cost of stage 3 by using some strategy to parallelise it. For example, some pairs of tensors of the final network could be contracted in parallel. However, as this network has very few tensor, the number of pairs of tensors that could be contracted in parallel is small (i.e. two to four pairs). Experimental results show that the speedup achieved with this strategy is very limited. In the next section we will see an alternative strategy to parallelise the contraction of the final network.

4.2 Parallel tensor contraction on GPU

Another strategy to parallelise network contraction is to apply a contraction plan and contract each pair of tensors in parallel. Contraction of a pair of tensors usually involves reordering the elements of matrices, taking into account the indices over which they are being contracted, followed by multiplication of the resulting matrices. Matrix multiplication is a fundamental operation in numerical linear algebra, and as such there are very efficient sequential and parallel implementations on various platforms: processor clusters, multicore processors and GPUs. There are libraries containing efficient tensor contraction algorithms, such as ITensor [40], TBLIS [41] and cuTensor [42].

The QXTools framework implements this strategy of parallelisation on the GPU. It is implemented in the TensorOperations.jl package, which allows efficient tensor operations to be performed. This software provides support for managing CuArray objects when used together with the CUDA.jl [43] and cuTENSOR.jl packages, providing a high level interface to use NVidia’s cuTENSOR library.Footnote 7 This allows for very efficient contraction on the GPU based on a CUDA C implementation.

This parallelisation strategy can be applied to contract each pair of tensors of the network, but it can also be used to contract only the tensors of the network obtained after the second stage of the multistage algorithm described in the previous section. This can be very useful, as the contraction of this final network is usually the bottleneck to obtain high-performance on large circuits. In this way, we use a hybrid algorithm that combines the two levels of parallelism described in Sect. 4, and that we call ComPar_gpu.

4.3 Parallel algorithm using slicing

The main goal of using slicing on tensor networks is to reduce the spatial cost and to be able to contract larger circuits. However, since we obtain independent tensor networks, it is possible to contract them in parallel. Obviously, if we use slicing to get a large number of smaller networks, even though contracting each of them may have a much smaller memory impact, contracting them all in parallel may increase the spatial cost of the original contraction. However, we can combine the benefits of contracting larger networks and speeding up the contraction by exploiting parallelism if we use a distributed memory multiprocessor and contract different sliced networks or groups of networks on different processors.

Here is an example of the main steps to use QXTools to perform the slicing of a tensor network and then to contract the different sub-tensor networks in parallel. First, we use the following code to create the circuit and generate the simulation files needed to run the simulation. We call this strategy ParSli.

In this example, we create an RQC circuit of size \(7 \times 7\) and depth 16, and we use 42 as the seed to generate the random structure of the circuit. Using the parameter final_h, we can also specify whether or not to add a final layer of Hadamard gates. Next, we specify that we only want to compute the amplitude associated with 1 output bitstring in a circuit with 49 qubits. QXTools provides the function output_params_dict which will create a dictionary for the output. This function indicates the number of qubits in the circuit to be simulated and the desired number of outputs to generate (i.e number of bitstring samples or number of amplitudes to compute). Finally, we generate the files needed to run the simulation. In this case, we choose to slice 3 indices and set a timeout of 10s for the GN algorithm to obtain the contraction plan. QXTools use the Shutski’s tree-trimming method to select efficient indices to slice [39]. It is a greedy algorithm that removes the indices of the tensor network trying to reduce the treewidth of the sliced networks.

The above example generates three files that describe the calculations and data required to run the simulation. The file rqc_7_7_16.yaml is a YAML parameter file containing information about the bitstrings whose amplitudes are to be obtained. The file rqc_7_7_16.qx is a DSL file containing instructions describing the tensor operations involved in running the simulation. Finally, the file rqc_7_7_16.jld2 is a data file containing the numerical values of the initial tensors of the tensor network. A domain specific language (DSL) has been defined to allow a better separation of concerns between components.

Next we want to run the simulation in parallel. The mpiexecjl script can be used to start Julia on several MPI processes.Footnote 8 Depending on the MPI version, we can run these processes on different nodes of a cluster, or on different cores of a multiprocessor, using shared memory as the communication mechanism.

The following command launches 8 MPI processes to perform the simulation using the Julia script qxrun.jl. Each process contracts one of the 8 sub-tensor networks generated by cutting 3 indices of the original network and obtains the amplitude associated with the bitstring on its sub-tensor. All the results are then added to obtain the amplitude for the original tensor network. Examples of input files and Julia scripts to perform simulations with different types of circuits can be found in the documentation of the QXContext package Footnote 9

QXTools include the flowcutter and minimum fill-in methods to define the contraction plans when generating the .qx file. We have added new methods such as Girvan–Newman algorithm, used in this paper, Tamaki [25], and others [26, 44].

5 Experimental results and discussion

5.1 Hardware and software setup

Table 1 summarises the main features of the CPU and GPU of the server used in the experiments.

To evaluate the proposed parallel algorithms, we have used three well-known types of quantum circuits. In all cases, the contraction were performed by closing the input and output rates of the circuit. That is, we obtained the probability amplitude of a given quantum state.

Random quantum circuits (RQCs) are a type of circuit developed by Google specifically for quantum supremacy experiments [38, 45]. They are circuits defined by a matrix of qubits (rows x columns) and a depth level given by the number of layers of one- or two-qubit gates. The gates of a qubit are chosen at random from the set \(\textrm{T}\), \(\mathrm {\sqrt{X}}\) and \(\mathrm {\sqrt{Y}}\). Normally 2-qubit gates are \(\textrm{CZ}\) and connect pairs of qubits in various patterns. These circuits were specifically designed to be very difficult to simulate with classical computers.

The quantum Fourier transform (QFT) is the quantum analogue of the classical discrete Fourier transform (DFT). This circuit is an important component of many other quantum algorithms, such as Shor’s integer factorisation algorithm, which can be used to break current standard classical encryption protocols.

Finally, Greenberger–Horne–Zeilinger (GHZ) circuits produce entangled states of a particular type. Their construction is very simple and they have a very regular structure. A \(\textrm{H}\) gate is applied to the first qubit, and then \(\textrm{CNOT}\) gates are applied between successive pairs of qubits: (0,1), (1,2), and so on.

For our experiments, the circuits were constructed using the methods contained in QXZoo of the QXTools package [13], with slight modifications in some cases. The arguments used to construct the RQC circuits specify the number of rows, columns, depth of the quantum gates and the seed used to select the gates.

5.2 Results of the multistage algorithm

In this section, we examine the computational performance of the parallel multistage algorithm (ComPar) for the three circuits under consideration. This analysis will focus on assessing how the algorithm handles contraction time and memory requirements compared to a sequential algorithm. By comparing the results across the different circuit types, we aim to identify the strengths and potential limitations of the algorithm in different scenarios.

We have used the GN algorithm and the minimum fill-in to obtain the contraction plan in the second and third stage of the parallel algorithm, respectively, although any other of the sequential algorithm referenced in this article in Sect. 3.2 can be employed. Also, it is important to note that all plots use a logarithmic scale, so small line spacing may imply large differences in time or space cost.

Figure 3a allows us to compare the contraction time of the parallel multistage algorithm with the GN sequential algorithm described in Sect. 3.5 using QFT-type circuits with different numbers of qubits. The contraction time is less than one second up to circuits of 20 qubits in both algorithms, so the differences between them are in the order of tenths or hundredths of a second, and they remain very similar in cost up to 40 qubits. The parallel algorithm is the fastest in all cases, but achieves modest advantages over the sequential GN algorithm, with speedups ranging between 1.1 and 1.7. This behaviour can be understood, at least in part, by analysing the costs of the three stages of the parallel algorithm, also shown in the figure. Firstly, we can see that the sequential detection of the communities to be contracted can, in many cases, involve a significant cost, which is difficult to compensate for by a possible acceleration in stage 2. However, the most rapidly increasing cost, which eventually dominates the total time of the parallel algorithm in QFT circuits, is that of stage 3, i. e. the cost of contracting the final network of communities. Although this network contains very few tensors, their ranks are much higher than those of the tensors in the communities, which ultimately causes them to dominate the total cost.

The spatial cost, shown in Fig. 3b, has a similar behaviour to the temporal one for the two compared algorithms, and we can confirm that the main factor limiting the size of the circuits we can simulate is the memory needed when we increase the number of qubits.

To better understand the limits of the speedup achieved by the parallel algorithm in this type of circuit, we can analyse how varying the number of communities defined in stage 1 affects the execution time of the different stages. Figure 4 shows the execution time of each one of the stages and the total contraction time for a QFT circuit of 36 qubits when we vary the number of communities. The cost of using the GN method to obtain the communities is constant and, in the case of QFT-type circuits, represents between 12% and 28% of the total cost. The time required to contract the communities defined in parallel in stage 2 can be very variable. In theory, the more communities we define, the greater the degree of parallelism. With enough cores, this can significantly reduce the time required for this stage. However, the cost of contracting different communities can be very different and the workload can be very unbalanced, so we are not optimising the use of resources. In the example shown in the figure, the cost is acceptable in 2 communities, increases in the range 3 to 5, and drops sharply when we go from 6 to 10 communities. From here the cost grows with slight ups and downs, so that the final figure represents a window of communities where the cost of contraction is optimal. For more than 13 communities, the time grows exponentially and the spatial cost prevents the completion of stage 3.

Figure 4 also shows that the time required to contract the network of communities in stage 3 tends to increase as the number of defined communities grows, which can offset the potential gains achieved in stage 2 and lead to an overall increase in the total time of the contraction algorithm. As mentioned above, the parallelism of contracting different groups of tensors in stage 3 is very limited and depends very much on the circuit and the method used to contract the final network of communities.

The total cost of contracting the tensor network in parallel depends strongly on the number of communities and also the methods used to contract the communities in stages 2 and 3. In any case, there is a range of values of the number of communities that gives the minimum time. In the example shown in Fig. 4, this optimal number is given when we use 8 communities and obtain a total parallel contraction time of 28.01s.

In all the cases analysed for the QFT- and RQC-type circuits, the number of communities that produce the minimum global time is always between 2 and 13. This means that the optimal degree of parallelism is not very high, which limits the speedup we can achieve in stage 2 of the algorithm.

Figures 5a and 5b show the contraction time and spatial cost in RQC-type circuits applying multistage and GN algorithms. The relative behaviour of the two algorithms with respect to QFT circuits is very different. The temporal and spatial cost of all algorithms depends on the number and rank of the contracted tensors, and these are related to the size of the circuits and the degree of qubit entanglement. The higher spatial cost of the algorithms for QFT circuits means that we can only simulate circuits with up to 41 qubits, whereas in the case of RQC circuits the parallel algorithm allows us to simulate circuits with \(12 \times 12 = 144\) qubits and a depth of 16 levels. While the multistage algorithm offers modest improvements in contraction time over the sequential GN algorithm, the size of the circuits that can be contracted remains significantly constrained by memory limitations. For highly entangled circuits like QFT, increasing the number of qubits leads to an exponential growth in tensor rank during the third stage of the algorithm, which becomes the dominant factor in spatial cost. With an increase in available memory from 256 GB to, for instance, 1 TB, it would be possible to simulate QFT circuits with 2 to 3 additional qubits. In contrast, for RQC circuits, the multistage algorithm shows a more notable improvement, enabling the contraction of circuits with up to 144 qubits, compared to the 121-qubit limit achievable with GN. While expanding memory would further extend these capabilities, scalability remains fundamentally constrained by the exponential growth in memory requirements for such simulations.

Analysing the behaviour of the three phases of the parallel algorithm in Fig. 5a, we observe that in the case of RQC circuits, the cost of obtaining communities has a significantly higher weight compared to QFT circuits. In addition, it can be seen that the cost of contracting the community network increases rapidly for larger circuits, eventually dominating the other two stages. In general, the parallel algorithm does outperform the sequential GN algorithm, with speedups between 1.2 and 6.5.

Figure 5b shows the spatial cost in RQC circuits. As with the QFT circuits, the sequential algorithm is in all cases the one with the highest space cost. This means that we cannot use it to contract circuits with more than 121 qubits. The parallel algorithm has a lower space cost and can handle up to 144 qubits.

The behaviour of the algorithms is very different in the case of GHZ circuits. Figure 6a shows that the times of the sequential and parallel algorithms grow much more slowly and allow a much higher number of qubits to be reached without exhausting the available memory and reaching the maximum allowed by the tool: 999 qubits. Moreover, in this case, the parallel algorithm speeds up the sequential reference algorithm considerably. Specifically, it achieves speedups of up to 31 on the larger circuit. The key is to use the optimal number of communities to partition the circuit and contract in parallel. Theoretically, the optimal number corresponds to the communities with the highest modularity, i.e. those with the lowest degree of interconnection between them. Whereas the modularity of the communities used for QFT and RQC circuits is consistently below 0.9 and often falls below 0.5, for GHZ circuits it is almost always above 0.94. In GHZ circuits, even though we divide them into a large number of communities, the resulting network can be contracted in stage 3 without exhausting the available memory. This enables us to utilise a large number of cores during the parallel contraction in stage 2, significantly speeding up the process. In particular, the optimal number of communities increases from 21 with 100 qubits up to 66 with 999 qubits.

Another advantage of being able to use the optimal number of communities is that we can use a faster algorithm to obtain them, so that stage 1 of the parallel algorithm weighs much less than in the case of QFT and RQC circuits. In the case of these two types of circuits, with the GN algorithm we can choose the number of communities that allows us to complete the final contraction without exhausting the memory. For GHZ circuits, we use a greedy algorithm that tries to maximise the modularity of the decomposition and stops when it cannot improve this parameter [35]. Its cost is \(O(mlog^{2}n)\), which can be very small for sparse graphs with n nodes and m edges such as those associated with GHZ circuits. For example, the time to obtain the communities for a circuit with 999 qubits, \(n=3995\) nodes and \(m=3994\) edges is 0.016 seconds.

Figure 6b shows that the memory cost of algorithms using GHZ circuits is between one and two orders of magnitude lower than that of QFT and RQC circuits. This allows us to significantly increase the size of the circuits we can contract. This also applies to the multistage parallel algorithm. Even when using a large number of communities, the memory consumed during stage 3 is very small (2.8 MB), and stage 2, which consumes the most memory in this type of circuit, has a lower spatial cost than the sequential algorithm (381 MB vs. 799 MB).

The low spatial and temporal cost of the parallel algorithm with GHZ circuits is due to the fact that GHZ circuits can be very efficiently partitioned into communities with a minimal connection level. This results in a network of communities with a treewidth of 1, regardless of the number of qubits. In addition, the network associated with the communities obtained during stage 1 also has a constant treewidth of 2, which perfectly balances the load during stage 2. The rank of the contracted tensors in stages 2 and 3 is very small, which implies a contraction time and space cost smaller than that of the QFT and RQC circuits.

5.3 Parallel contraction on GPU

We have used the QXTools environment to implement two algorithms that exploit the level of parallelism based on the contraction of each tensor pair using the GPU. Specifically, the GN_gpu algorithm uses the GN method to obtain a contraction plan and contracts all tensor pairs on the GPU. The ComPar_gpu algorithm implements the multistage scheme described in Sect. 4.1 and uses the GPU in the third stage to contract the tensor pairs of the network obtained from the communities. This last algorithm thus combines the two levels of parallelism described in Sect. 4. In the second stage, it contracts the communities in parallel on the multicore CPU and in the third stage it performs each contraction in parallel on the GPU.

Figures 7a and b enable us to compare the performance of the two algorithms, as well as the corresponding variants that use the CPU for contracting each pair of tensors. Thus, GN_cpu is a sequential algorithm, while ComPar_cpu is the multistage algorithm described in Sect. 4.1. Figure 7a shows the contraction time of the four algorithms with QFT-type circuits, whereas Fig. 7b shows the results with RQC circuits with depth 16 and a variable number of qubits. Note that the first figure uses a linear scale on the vertical axis, while most figures use a logarithmic scale.

The relative performance of the four algorithms is similar for both types of circuit. In general, algorithms based on community-level parallelism are faster than those based on contraction-level parallelism.

On the other hand, we observe that for both variants of the GN and ComPar algorithms (with CPU and GPU), the execution times are nearly identical until the largest circuits are reached. In both cases, this behaviour is due to the fact that the majority of the execution time is spent on tasks other than tensor network contraction on the GPU or CPU. These tasks are common to both variants of each algorithm and require the same amount of time in both instances, resulting in nearly identical total execution times. It is only with larger circuits that the cost of these contractions begins to dominate the total time, making the difference between using the CPU or GPU more noticeable. In this scenario, for both algorithms and circuit types, performing each contraction in parallel on the GPU significantly accelerates the process compared to sequential execution on the CPU.

In the two variants of the ComPar algorithm, the common tasks correspond to stages 1 and 2 described in Sect. 4.1. The results shown in Figs. 3a and 5a confirm that the time for stage 3, where the GPU is used, is dominant only for the larger circuits. For the two variants of the GN algorithm, the common times include converting the initial circuit format into a tensor network, obtaining the contraction plan using the GN algorithm and obtaining the computation tree.

In Tables 2, 3, 4 and 5, we can see the time and percentage dedicated to the main steps of the algorithm for both variants of the GN and ComPar algorithms in a 30-qubit QFT circuit. The figures also include information about the memory allocated to run each step. These are sorted by time, not by order of application.

Tables 2 and 3 show that the total time of both variants of the GN algorithm is similar. This is due to the fact that both variants spend more than 90% of the time in the common processes. The tensor network contraction takes only 916 ms on the CPU and 172 ms on the GPU, demonstrating the large speedup achieved by using a parallel algorithm to perform the contractions on the GPU.

The behaviour of both variants of the ComPar algorithm is similar to those of the GN algorithm, since almost all the execution time of both is devoted to the first two stages of the algorithm, which are common. As we can see in Tables 4 and 5, the contraction of the tensor network during the third stage (i.e. Final contraction) represents a small percentage of the total time for both variants. However, if we focus only on this third stage, performing the contraction on the GPU is 4.33 times faster than performing it on the CPU, going from 1.25s to 0.289 ms.

5.4 Parallel slicing algorithm

This section analyses the performance of applying the slicing technique and contracting the various partitioned circuits in parallel. The algorithm starts by applying the GN method to obtain a contraction plan for the initial tensor network. Then it applies the tree-trimming method to select the best indices to slice, and finally contracts the sub-circuits. The first two steps are performed sequentially, and we compare the time necessary to perform the contraction of the sub-circuits both sequentially and in parallel. The time to obtain the contraction plan is independent of the number of indices that we want to slice. In [39], the authors show that the execution time of the heuristic to get the best indices to slice grows with the number of indices and tends to stabilise when we slice more than 10 indices.

We used an RQC circuit of size \(7 \times 7\) and depth 32 and divided it by cutting from 0 to 6 indexes generating from 1 to 64 different sub-circuits. For the parallel version, that we call ParSli, we create one MPI process per sliced sub-circuit. Figure 8 shows that the sequential time increases very slowly with a few slices, and only grows exponentially when 6 indices are sliced. Specifically, the sequential contraction of the original circuit takes 248s. Cutting one index and sequentially contracting the two resulting circuits only increases the time to 259s. The time doubles to 561s if we cut 5 indices and contract the resulting 32 circuits, and then almost doubles again to 1059s with a further cut. This behaviour suggests that slicing not only reduces the spatial cost of contraction, but often also reduces the temporal cost of contracting the sliced circuits, since the treewidth of these circuits in many cased decreases with each slice.

The results in the figure also show that we can contract all the sliced circuits in parallel without exhausting the server’s memory. Furthermore, we significantly accelerate the contraction of the original circuit, achieving up to a 4.79-fold speedup by slicing 4 indices and contracting the resulting 16 circuits in parallel. If we slice more indices we still accelerate the original contraction process but with a worse speedup.

5.5 Comparison of the parallel algorithms

Finally, we compare the performance of the different parallel algorithms using two circuits associated with different algorithms. Table 6 shows the speedup of the four parallel algorithms evaluated compared to the sequential CPU-based algorithm using the Girvan–Newman method to obtain the contraction plan (GN_cpu). The table includes two speedups per algorithm and circuit. The first speedup (Total) is obtained using the total execution time from reading the circuit in its original format to the end of the contraction process. This speedup includes all the initial sequential processes, such as obtaining the contraction plan, obtaining the communities or performing the slicing, depending on the algorithm. We also include the speedup of the contraction process (Contraction), which is the parallelised phase of the sequential algorithm.

For each test, the most optimal case is shown. For example, in the two variants of the ComPar algorithm, we have chosen the number of communities that gives the fastest execution time, which could be different if we use the CPU or the GPU during the third stage of the algorithm. In the ParSli algorithm, the number of indices to be cut that gives the minimum execution time of the whole algorithm does not have to coincide with the number of indices that gives the shortest contraction time. For example, in the case of the RQC-6-6-32 circuit, the shortest total time is obtained by cutting 2 indices, while the shortest contraction time is obtained by cutting 4 indices.

Obviously, the speedup of the contraction process is always greater than the speedup of the whole algorithm, since we are excluding the initial sequential tasks, which can account for a large percentage of the total execution time. We will use the speedup of the contraction process to evaluate the best parallelisation strategy. The best strategy depends on the circuit and can also vary with the size of the circuits. In the case of the QFT30 circuit, it is difficult to achieve large speedups for the contraction process as it takes less than one second in the CPU. We can see that in the case of the QFT30 circuit, the greatest speedup in contraction is obtained with the GN_gpu algorithm. The two variants of the ComPar algorithm obtain similar speedups with 8 communities. In this case, it is faster to contract the tensor network in the third stage sequentially on the CPU than on the GPU. This could happen if the contraction time in the CPU is small and the speedup obtained with the GPU does not compensate for the time to transfer the tensors between the CPU and the GPU.

The results are very different in the case of the RQC-6-6-32 circuit as the sequential contraction time is much larger (74.7s). In this case the best speedup by far is obtained with the ComPar_gpu algorithm. It is more than 44 times faster than the sequential algorithm, while the ComPar_cpu only is 3.66 times faster. In this case the algorithm ComPar_gpu can better leverage the two levels of parallelism employed during its second and third stages. The best case for the ComPar_gpu algorithm happens when we use 32 communities during its second stage, while the best case for the ComPar_cpu happens when we use 13 communities. Than means that the first version of the algorithm can use more than twice cores than the second version to accelerate the contraction of the communities. Besides, the ComPar_gpu can additionally accelerate the third stage of the algorithm by using the GPU with a large enough tensor network. Regarding the ParSli algorithm, it also gets a quite large speedup (5.93), as the time to sequentially contract the sub-circuits after slicing does not grow very fast and we can get the fastest parallel time when we slice 4 indexes and use 16 cores to contract the sub-circuits in parallel.

6 Conclusions

This paper analyses and compares various strategies for exploiting different levels of parallelism to facilitate the simulation of quantum circuits and accelerate the contraction of larger circuits based on tensor network. Different groups of tensors can be contracted in parallel, but we can also use a parallel algorithm to contract a pair of tensors.

We also introduce a new parallel multistage algorithm based on community detection to optimise the simulation of quantum circuits on classical computers. Using the Girvan–Newman algorithm, we partition tensor networks into communities, which can then be contracted in parallel. The resulting tensor network can be then contracted sequentially or we can use a parallel algorithm to contract each tensor pair in the GPU.

This new algorithm has been tested alongside two other parallel algorithms: one that uses the GPU to contract each pair of tensors, and another that uses slicing and MPI processes to contract the resulting sub-tensor networks. These algorithms were evaluated and compared on various quantum circuits, including QFT and RQC, known for their high levels of entanglement, to assess their performance.

Our experiments show that the main limitation to efficiently contract large circuits is the large spatial cost of contraction algorithms. When contracting circuits that can fit in the available memory, the contraction time can be relatively small when only the amplitude associated with one output state is to be calculated. This makes it difficult to achieve large speedups if we include the time spent initial tasks performed sequentially, such as calculating a good contraction plan, obtaining the communities or selecting the best indices to cut when applying slicing. Obviously this problem will be greatly reduced if compute many amplitudes, as the initial tasks would have to be performed only once. Nevertheless, experimental results computing only one amplitude show that the proposed multistage algorithm reduces the contraction time of the sequential version and can allow to contract circuits of higher dimension. When comparing different parallelisation strategies, using the GPU to contract each pair of tensors offers significant speedups depending on circuit. When combined with the parallel contraction of communities in the multistage algorithm, it achieves speeudps larger than 44 when applied to e.g. RQC circuits.

The proposed algorithm based on community detection also shows that the community detection-based approach offers large advantages in scenarios where the quantum circuits naturally exhibit well-defined communities, such as the GHZ circuits. It also shows significant improvements in simulation efficiency and scalability, even for highly entangled circuits such as QFT and RQC circuits.

Future work could explore refinements to the algorithm, such as incorporating more sophisticated community detection methods or extending its application to a wider range of quantum circuits. These developments, although incremental, could contribute to more efficient simulations and help support ongoing research in quantum computing. Additionally, we aim to adapt the multistage and ParSli algorithms to distributed memory systems and investigate multi-GPU setups, enabling simulations of significantly larger circuits while improving scalability and performance.

Data Availability

Codes are publicly available in the repository https://github.com/alfred-miquel/Multistage_contraction

Notes

References

National Academies of Sciences, Engineering, and Medicine (2019) Quantum computing: progress and prospects. The National Academies Press, Washington. https://doi.org/10.17226/25196

Bobier J-F, Langione M, Tao E, Gourevitch A (2021) What happens when ‘if’ turns to ‘when ’in quantum computing? Boston Consulting Group

Preskill J (2018) Quantum computing in the NISQ era and beyond. Quantum 2:79

Nielsen MA, Chuang IL (2010) Quantum computation and quantum information. Cambridge University Press, New York

Combarro EF, González-Castillo S, Di Meglio A (2023) A practical guide to quantum machine learning and quantum optimization: hands-on approach to modern quantum algorithms. Packt Publishing Ltd, Birmingham

Villalonga B, Boixo S, Nelson B, Henze C, Rieffel E, Biswas R, Mandrà S (2019) A flexible high-performance simulator for verifying and benchmarking quantum circuits implemented on real hardware. NPJ Quant Inf 5(1):86

Xu X, Benjamin S, Sun J, Yuan X, Zhang P (2023) A herculean task: classical simulation of quantum computers. arXiv preprint arXiv:2302.08880

Orús R (2014) A practical introduction to tensor networks: matrix product states and projected entangled pair states. Ann Phys 349:117–158

Markov IL, Shi Y (2008) Simulating quantum computation by contracting tensor networks. SIAM J Comput 38(3):963–981

Girvan M, Newman ME (2002) Community structure in social and biological networks. Proc Natl Acad Sci 99(12):7821–7826

Gray J, Kourtis S (2021) Hyper-optimized tensor network contraction. Quantum 5:410

Zhao Y-Q, Li R-G, Jiang J-Z et al (2021) Simulation of quantum computing on classical supercomputers with tensor-network edge cutting. Phys Rev A 104(3):032603

Brennan J, Allalen M, Brayford D, Hanley K, Iapichino L, O’Riordan LJ, Doyle M, Moran N (2021) Tensor network circuit simulation at exascale. In: 2021 IEEE/ACM second international workshop on quantum computing software (QCS). IEEE, pp 20–26

Quantiki (2023) List of QC simulators. https://quantiki.org/wiki/list-qc-simulators

Lyakh DI (2015) An efficient tensor transpose algorithm for multicore cpu, intel xeon phi, and nvidia tesla gpu. Comput Phys Commun 189:84–91

Lyakh DI, Nguyen T, Claudino D, Dumitrescu E, McCaskey AJ (2022) ExaTN: scalable GPU-accelerated high-performance processing of general tensor networks at exascale. Front Appl Math Stat 8:838601

Vincent T, O’Riordan LJ, Andrenkov M, Brown J, Killoran N, Qi H, Dhand I (2022) Jet: fast quantum circuit simulations with parallel task-based tensor-network contraction. Quantum 6:709

Jin D, Yu Z, Jiao P, Pan S, He D, Wu J, Philip SY, Zhang W (2021) A survey of community detection approaches: From statistical modeling to deep learning. IEEE Trans Knowl Data Eng 35(2):1149–1170

Traag V, Waltman L, Van Eck N (2019) From Louvain to Leiden: guaranteeing well-connected communities. Sci Rep 9:5233. https://doi.org/10.1038/s41598-019-41695-

Traag VA, Bruggeman J (2009) Community detection in networks with positive and negative links. Phys Rev E 80(3):036115

Kourtis S, Chamon C, Mucciolo E, Ruckenstein A (2019) Fast counting with tensor networks. SciPost Phys 7(5):060

Chi-Chung L, Sadayappan P, Wenger R (1997) On optimizing a class of multi-dimensional loops with reduction for parallel execution. Parallel Processing Letters 7(02):157–168

Bodlaender HL, Koster AM (2010) Treewidth computations I. upper bounds. Inf Comput 208(3):259–275

Hamann M, Strasser B (2018) Graph bisection with pareto optimization. ACM J Exp Algorithmics. https://doi.org/10.1145/3173045

Tamaki H (2019) Positive-instance driven dynamic programming for treewidth. J Comb Optim 37(4):1283–1311. https://doi.org/10.1007/s10878-018-0353-z

Dell H, Komusiewicz C, Talmon N, Weller M (2018) The PACE 2017 Parameterized Algorithms and Computational Experiments Challenge: The Second Iteration. In: Lokshtanov D, Nishimura N (eds) 12th international symposium on parameterized and exact computation (IPEC 2017). Leibniz international proceedings in informatics (LIPIcs), vol 89. Schloss Dagstuhl–Leibniz-Zentrum fuer Informatik, Dagstuhl, Germany, pp 30–13012. https://doi.org/10.4230/LIPIcs.IPEC.2017.30

Gleiser PM, Danon L (2003) Community structure in jazz. Adv Complex Syst 6(04):565–573

Fortunato S (2010) Community detection in graphs. Phys Rep 486(3–5):75–174

Malliaros FD, Vazirgiannis M (2013) Clustering and community detection in directed networks: a survey. Phys Rep 533(4):95–142

Newman ME, Girvan M (2004) Finding and evaluating community structure in networks. Phys Rev E 69(2):026113

Duch J, Arenas A (2005) Community detection in complex networks using extremal optimization. Phys Rev E 72(2):027104

Newman ME (2013) Spectral methods for community detection and graph partitioning. Phys Rev E 88(4):042822

Guimera R, Sales-Pardo M, Amaral LAN (2004) Modularity from fluctuations in random graphs and complex networks. Phys Rev E 70(2):025101

Blondel VD, Guillaume J-L, Lambiotte R, Lefebvre E (2008) Fast unfolding of communities in large networks. J Stat Mech Theory Exp 2008(10):10008. https://doi.org/10.1088/1742-5468/2008/10/P10008

Clauset A, Newman ME, Moore C (2004) Finding community structure in very large networks. Phys Rev E Stat Nonlinear Soft Matter Phys 70(6):066111

Huang C, Zhang F, Newman M, Ni X, Ding D, Cai J, Gao X, Wang T, Wu F, Zhang G et al (2021) Efficient parallelization of tensor network contraction for simulating quantum computation. Nat Comput Sci 1(9):578–587

Lykov D, Schutski R, Galda A, Vinokur V, Alexeev Y (2022) Tensor network quantum simulator with step-dependent parallelization. In: 2022 IEEE International Conference on Quantum Computing and Engineering (QCE). IEEE, pp 582–593

Villalonga B, Lyakh D, Boixo S, Neven H, Humble TS, Biswas R, Rieffel EG, Ho A, Mandrà S (2020) Establishing the quantum supremacy frontier with a 281 pflop/s simulation. Quant Sci Technol 5(3):034003

Schutski R, Khakhulin T, Oseledets I, Kolmakov D (2020) Simple heuristics for efficient parallel tensor contraction and quantum circuit simulation. Phys Rev A 102(6):062614

Fishman M, White SR, Stoudenmire EM (2022) The ITensor software library for tensor network calculations. SciPost Phys Codebases 4(1.5):1. https://doi.org/10.21468/SciPostPhysCodeb.4

Matthews DA (2018) High-performance tensor contraction without transposition. SIAM J Sci Comput 40(1):1–24

NVIDIA (2024) cuTENSOR: A high-performance CUDA library for tensor primitives. https://docs.nvidia.com/cuda/cutensor

Besard T, Foket C, De Sutter B (2018) Effective extensible programming: unleashing Julia on GPUs. IEEE Trans Parallel Distrib Syst 30(4):827–841

Pastor A, Badia JM, Castillo MI (2023) Efficient quantum circuit simulation using tensor networks. In: Quantum information in Spain (ICE-8): Santiago de Compostela, 29th May to 1st June, Spain

Boixo S, Isakov SV, Smelyanskiy VN, Babbush R, Ding N, Jiang Z, Bremner MJ, Martinis JM, Neven H (2018) Characterizing quantum supremacy in near-term devices. Nat Phys 14(6):595–600

Funding

Open Access funding provided thanks to the CRUE-CSIC agreement with Springer Nature. This work has been supported by the Spanish Ministry of Science and Innovation under project PID2020-113656RB-C21 funded by MCIN/AEI/10.13039/501100011033.

Author information

Authors and Affiliations

Contributions

A.M.P. implemented the codes and executed all the experiments on the computing platforms. J.M.B. and M.C. supervised the work and helped to conceive the codes and experiments. All authors discussed the results and contributed to the final version of the manuscript.

Corresponding author

Ethics declarations

Ethical approval

Not applicable.

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Pastor, A.M., Badia, J.M. & Castillo, M. A community detection-based parallel algorithm for quantum circuit simulation using tensor networks. J Supercomput 81, 450 (2025). https://doi.org/10.1007/s11227-025-06918-3

Accepted:

Published:

DOI: https://doi.org/10.1007/s11227-025-06918-3