Abstract

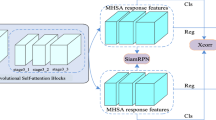

Airborne visual tracking is pivotal to the autonomy and intelligence of micro aerial vehicles (MAVs). In practice, however, MAVs frequently face viewpoint changes and interference from similar objects. Moreover, because of their small size and light weight, MAVs have limited onboard computational resources, which constrains algorithm complexity and degrades tracking performance. To address these issues, we propose a robust and lightweight tracking model, the self-adaptive dynamic template Siamese network (SiamSDT). Built on two key designs, a temporal attention mechanism and a Self-adaptive Template Fusion module, SiamSDT adapts to appearance variations during tracking. Specifically, the temporal attention mechanism integrates historical information sequentially, retaining pertinent information while reducing storage and computational cost. The Self-adaptive Template Fusion module dynamically adjusts the fusion ratio of each template through a similarity matrix, further improving the model’s adaptability and resistance to interference. To cope with limited onboard resources, we also propose a deployment solution tailored to heterogeneous ZYNQ platforms, in which an FPGA-based accelerator speeds up inference through pipelining, data reuse, ping-pong operation, and array partitioning. We evaluated SiamSDT on the OTB and UAV123 datasets. On UAV123, SiamSDT achieves a 4.8% increase in precision and a 1.2% increase in success rate over the baseline algorithm without any increase in parameters. Hardware simulation experiments show that our deployment scheme significantly reduces inference latency with an acceptable decrease in tracking performance.
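As a rough illustration of how a similarity matrix can set per-template fusion ratios, the sketch below fuses a small set of template feature vectors. It is not the SiamSDT module itself: the cosine similarity, the mean-similarity scoring rule, the softmax with a `temperature` parameter, and all function names are assumptions made for this example, and real templates would be convolutional feature maps rather than short lists.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def similarity_matrix(templates):
    """Pairwise cosine similarities between all templates."""
    return [[cosine(a, b) for b in templates] for a in templates]


def fusion_ratios(sim, temperature=1.0):
    """Softmax over each template's mean similarity to the other templates.

    Assumed scoring rule: a template that agrees with the others gets a
    larger ratio, while an outlier (e.g. a distractor) gets a smaller one.
    """
    n = len(sim)
    if n == 1:
        return [1.0]
    # Exclude the diagonal (self-similarity) from each row's mean.
    scores = [(sum(row) - row[i]) / (n - 1) for i, row in enumerate(sim)]
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp((s - top) / temperature) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_templates(templates, temperature=1.0):
    """Return the ratio-weighted sum of the templates and the ratios used."""
    ratios = fusion_ratios(similarity_matrix(templates), temperature)
    dim = len(templates[0])
    fused = [sum(r * t[d] for r, t in zip(ratios, templates)) for d in range(dim)]
    return fused, ratios


# Two mutually consistent templates and one dissimilar template.
templates = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
fused, ratios = fuse_templates(templates)
print(ratios)
print(fused)
```

In this toy input the third template resembles neither of the others, so it receives the smallest ratio. A lower `temperature` would sharpen that preference, and a higher one would move the ratios toward a plain average.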

Data availability

All experiments were conducted using publicly available datasets.

Acknowledgements

This work was supported by the National Natural Science Foundation of China (Grant Nos. 62271166 and 62401177).

Funding

This work was supported by the National Natural Science Foundation of China (Grant Nos. 62271166 and 62401177).

Author information

Contributions

Conceptualization, J.L. and H.L.; methodology, H.L., Y.Z. and R.W.; software, Y.Z.; validation, Y.Z., J.W. and R.W.; formal analysis, Y.Z. and H.L.; writing—original draft preparation, Y.Z. and J.W.; writing—review and editing, Y.Z., H.L. and J.L.; funding acquisition, J.L. All authors have read and agreed to the published version of the manuscript.

Ethics declarations

Conflict of interest

The authors have no conflicts of interest to declare that are relevant to the content of this article.

Ethics approval and consent to participate

Not applicable.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Zhang, Y., Wen, J., Wu, R. et al. SiamSDT: a self-adaptive dynamic template Siamese network for airborne visual tracking of MAVs on heterogeneous FPGA-SoC. J Supercomput 81, 481 (2025). https://doi.org/10.1007/s11227-025-06928-1

DOI: https://doi.org/10.1007/s11227-025-06928-1