Abstract

As the central organ of human cognition and behavior, the brain is critical to daily functioning. Brain tumors disrupt normal activities and require accurate diagnosis and intervention. This study presents an approach for detecting tumors in brain images. Its main goal is to determine, with high performance, whether a tumor is present. The proposed method was tested on the open-source Br35H brain magnetic resonance imaging (MRI) dataset. The proposed model, M-C&M-BL, integrates a Convolutional Neural Network (CNN) for image feature extraction with a Bidirectional Long Short-Term Memory (BiLSTM) network for sequential data processing. Accuracy (Acc), F1 Score (F1), Precision (Pre), Recall (Rec), Specificity (Spe), and Matthews Correlation Coefficient (MCC) were used for performance evaluation. The proposed model achieved 99.33% Acc and 99.35% F1, outperforming CNN-based models such as BMRI-Net (98.69% Acc, 98.33% F1) and AlexNet (98.79% Acc, 98.82% F1). It also performed competitively against MobileNetV2, which achieved a slightly higher Acc of 99.67%. This approach has significant potential for integration into clinical decision support systems, web and mobile diagnostic platforms, and hospital picture archiving and communication systems (PACS), where it could aid early diagnosis, improve diagnostic accuracy, and reduce evaluation time. However, challenges such as ensuring privacy, achieving generalizability across diverse datasets, and addressing infrastructure constraints must be resolved for seamless deployment. This study highlights the feasibility of combining deep learning architectures to advance AI-driven tools in healthcare, with the aim of improving clinical workflows and patient outcomes.



1 Introduction

Brain tumor is a highly aggressive disease that affects both children and adults and accounts for the majority of primary tumors of the central nervous system. Approximately 11,700 people are diagnosed with a brain tumor each year. These tumors are categorized as benign, malignant, or pituitary. Early and accurate diagnosis is critical to increasing the life expectancy of patients with brain tumors, and MRI has proven to be the most effective method for detecting such diseases. However, the large volume of image data generated by MRI scans makes manual review by radiologists challenging and prone to errors [1,2,3]. Advances in imaging technology have enabled radiologists, neurologists, and surgeons to improve the accuracy of tumor detection, and automated analysis tools help overcome the complexities associated with brain tumors, leading to more accurate diagnoses and treatment planning. The type of tumor is a key factor in determining the most appropriate treatment plan for each patient. When the tumor is malignant, surgery, chemotherapy, and radiation therapy are essential to the patient's recovery. With new technologies, it is now possible to remove all or part of the tumor surgically and to use additional treatments such as radiotherapy or chemotherapy to prevent recurrence. It is therefore of paramount importance to detect the tumor as early as possible using technologies such as MRI, which facilitates the subsequent planning of the patient's treatment. Evolving artificial intelligence technologies, such as image processing and deep learning, are of significant value in this regard. The primary objective of this study is to develop a novel approach for tumor classification from MRI images using a set of deep learning algorithms.
For this purpose, the Br35H [4] dataset, an open-source and balanced collection of brain MRI images containing both tumor and non-tumor cases, was used. A deep architecture called M-C&M-BL was created, and its ability to detect tumor-containing images in this dataset was tested. The name M-C&M-BL stands for “Multi-CNN and Multi-BiLSTM”: the hybrid architecture integrates multiple CNN layers for spatial feature extraction with multiple BiLSTM layers for sequential dependency modeling. This combination enhances the model’s ability to capture both local spatial features and long-range dependencies within the data, making it well suited to brain MRI classification. CNNs extract spatial features from images and learn the local edges, textures, and patterns needed for tumor detection. BiLSTM networks, in turn, capture sequential relationships and enrich the CNN feature maps with contextual information. The M-C&M-BL architecture therefore takes both spatial details and sequential relationships into account when characterizing the location and shape of the tumor. In addition, this hybrid structure shows higher accuracy and stronger generalization than models based only on CNN or LSTM. CNN feature extraction reduces the influence of image noise, while the contextual processing of the BiLSTM reduces false positive (FP) and false negative (FN) predictions and improves overall performance. The M-C&M-BL model was evaluated with Acc, F1, Pre, Rec, Spe, and MCC.

Deep learning models face several technical challenges, including high computational costs during training, particularly when processing large datasets, and the risk of overfitting with limited data, which can hinder generalization to unseen cases. Balancing model performance with computational efficiency remains a critical issue in this field. From an engineering perspective, there is a need for scalable and robust architectures capable of efficiently handling large-scale MRI datasets while ensuring seamless integration with real-world healthcare systems and adhering to strict data privacy regulations. On the medical front, early and accurate diagnosis is crucial for improving patient outcomes, especially for aggressive diseases like brain tumors. However, manual review processes often struggle to detect subtle features in MRI images, leading to potential misdiagnoses or delays, which underscores the importance of developing reliable and automated AI-driven diagnostic tools.

The contributions of this study are listed below:

- The results obtained with single-layer and triple-layer deep learning models, CNN and BiLSTM, applied to publicly available brain tumor MRI images were investigated.
- A hybrid model, M-C&M-BL, combining triple-layer CNN and triple-layer BiLSTM architectures, was developed, and its performance was compared with that of the single-layer and triple-layer models on the same dataset.
- Hyperparameter optimization was conducted for all models (single-layer and triple-layer CNN and BiLSTM, and M-C&M-BL) to enhance performance.
- The effects of callbacks such as early stopping and learning rate reduction were investigated to prevent overfitting and improve the robustness of the models.

This study evaluates the impact of single-layer, triple-layer, and hybrid deep learning models on brain tumor classification performance. Figure 1 depicts the study's flowchart.

As illustrated in Fig. 1, the open-source, balanced brain tumor MRI images are first preprocessed and split into training and test sets. They are then classified with the M-C&M-BL model, and performance is evaluated with Acc, F1, Pre, Rec, Spe, and MCC.

BiLSTM is a powerful model often used to learn long-term dependencies in sequential data. In brain MRI data, where the regional features of an image can be considered in a particular order, understanding this sequential structure can be important. In particular, the feature maps extracted by a CNN can be converted into a sequential format and processed by a BiLSTM. The BiLSTM then complements the local and regional features learned by the CNN by modeling the relationships between these ordered features. For example, it can learn the structural relationship of a tumor to neighboring regions or the regional spread of a particular pathology. This approach yields a more comprehensive classification by considering not only the visual structure of the tumor but also the spatial and sequential relationships of that structure. The combination of CNN's visual feature extraction and BiLSTM's ability to learn sequential dependencies is therefore expected to be particularly effective for understanding complex structural relationships in brain MRI data. Single-layer models are suited to learning basic features, whereas triple-layer models represent complex structures more effectively and increase the generalization capacity of deep learning architectures. Using single-layer and triple-layer models separately, and combining them in the proposed hybrid, allows both basic features and complex structures to be learned, which supports strong classification performance.
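The conversion of a CNN feature map into a sequence can be sketched in plain Python as follows. This is an illustration under an assumption: each row of an H × W × C feature map is treated as one time step, giving H vectors of length W × C. That is what a Keras `TimeDistributed(Flatten())` layer produces from a (batch, height, width, channels) tensor, because `TimeDistributed` treats axis 1 as the time axis. The function name and toy values are hypothetical.

```python
from typing import List

# A feature map indexed as fmap[row][col][channel] (height x width x channels).
FeatureMap = List[List[List[float]]]


def feature_map_to_sequence(fmap: FeatureMap) -> List[List[float]]:
    """Treat each row of an H x W x C feature map as one time step.

    Returns H vectors of length W * C, i.e. a (time_steps, features) sequence
    that a BiLSTM can read top-to-bottom (forward) and bottom-to-top (backward).
    """
    sequence = []
    for row in fmap:  # one time step per feature-map row
        step = [value for pixel in row for value in pixel]  # flatten W x C
        sequence.append(step)
    return sequence


# Toy 2 x 3 x 2 feature map (hypothetical values).
fmap = [
    [[1, 2], [3, 4], [5, 6]],
    [[7, 8], [9, 10], [11, 12]],
]
seq = feature_map_to_sequence(fmap)
print(len(seq), len(seq[0]))  # 2 6
print(seq[0])                 # [1, 2, 3, 4, 5, 6]
```

Reading rows as time steps is only one choice. Columns, or patches in a scan order, would also yield a sequence. The row convention is used here because it matches the Keras wrapper behavior described above.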

The remainder of this paper is organized as follows. Sect. 2 reviews the literature. Sect. 3 describes the data source, the CNN and BiLSTM deep learning methods used throughout the study, and the metrics used to evaluate the models built with them. Section 4 describes the M-C&M-BL model. Section 5 presents the experimental settings and results. Section 6 presents a comprehensive evaluation of the overall results, together with a comparison with the existing literature.

2 Literature Review

Brain tumors have been studied in many different disciplines, from genetics [5] to image processing [6, 7]. In particular, artificial intelligence and deep learning methods play an important role in brain tumor diagnosis and classification. Deep learning, with its ability to learn complex data structures, enables more accurate and faster diagnosis of brain tumors.

This section discusses the artificial intelligence and deep learning techniques used in previous brain tumor studies. The datasets, methods, and performance metrics such as Acc, Pre, and F1 are compared, and the contributions of AI-based approaches to tumor classification and image analysis are evaluated. Previous studies have investigated classification with multiple tumor segmentation in brain MR and CT images [5,6,7]. To detect different tumor types, they have also investigated multi-class [8] or binary classification, reporting that these methods reduce uncertainty in reaching an accurate diagnosis. These AI-based approaches have not only improved classification accuracy but also provided innovative solutions in data processing and model optimization. In this study, a new hybrid method for binary tumor classification is developed using a publicly available, open-source, balanced dataset. This section presents studies conducted on MRI images of patients with suspected brain tumors, mainly on the selected dataset.

Asif et al. proposed a new CNN-based architecture, BMRI-Net, and obtained an Acc of 98.33% and an F1 of 98.33% on the Br35H [4] dataset with fivefold cross-validation [9]. Khushi et al. compared different transfer learning methods with the SGD, RMSProp, and Adam optimizers after an 80:20 holdout split of the Br35H [4] brain MRI dataset used in this study. They obtained an Acc of 98.79% and an F1 of 98.82% with AlexNet and the SGD optimizer [10]. Ata et al. proposed a method that classifies brain tumor MRIs with a CNN after extracting a grey-level co-occurrence matrix. On the Br35H [4] dataset, it obtained an Acc of 98.22% and an F1 of 98% [11]. Sarkar et al. built CNN classification models on a dataset [12] comprising glioma, meningioma, and pituitary tumors and achieved an Acc of 91.3% [13]. In another study on the same dataset, the CNN-based model proposed by Sultan et al. achieved an Acc of 96.08% with an F1 of 97.3% [14]. The model proposed by Haq et al. comprises 16 layers, three of which are convolutional, along with other layers including a max pooling layer, a ReLU layer, and a fully connected layer. On the same dataset, this CNN-based architecture achieved 97.86% Acc [15]. Naseer et al. achieved an Acc of 98.8% with their proposed 12-layer CNN on the Br35H [4] dataset, using a 90:10 holdout training–test split [16]. Kang et al. achieved an Acc of 98.67% with their proposed architecture (DenseNet121 + ResNet101 + NasNet), using transfer learning on the Br35H [4] dataset with an 80:20 holdout training–test split [17]. Jeyaraj and Nadar obtained an F1 of 97.41% on the Br35H [4] dataset with their proposed denoising deep adversarial network [18]. Papageorgiou achieved 99.10% Acc with low-complexity CNN models [19]. Amran et al.
removed five layers of GoogLeNet and hybridized it with a 14-layer CNN model, obtaining an Acc of 99.51% and an F1 of 98.50% [20]. Gómez-Guzmán et al. combined three distinct datasets, including Br35H [4], and evaluated a CNN and various transfer learning methods (EfficientNet, Inception, InceptionResNet, MobileNet, ResNet, and Xception). The highest Acc, 0.9712, was obtained with InceptionV3 [21]. Bashkandi et al. optimized the MRI images with a meta-heuristic algorithm, the particle swarm optimizer, and then classified them with a CNN, achieving 97.09% Acc and 92.97% F1 [22]. In a comprehensive comparative study of the EfficientNetB4, VGG-16, EfficientNetV2B1, MobileNet, and ResNet50 architectures, Kalra et al. obtained the highest Acc, 99.67%, with MobileNetV2 [23]. Islam et al. obtained 98.5% Acc and 98% F1 on the Br35H [4] dataset with their proposed 10-layer model of four convolutional layers, four max pooling layers, and subsequent Flatten and Dense layers [24]. On the Br35H [4] dataset, Dua et al. obtained 98.84% Acc with a model called BTD-CNN, which incorporates image enhancement and a CNN [25]. Zacharaki et al. investigated classification methods for distinguishing different types of brain tumors. When distinguishing high-grade from low-grade gliomas, they achieved an Acc of 96.2% using a Support Vector Machine (SVM) with recursive feature elimination [26]. El-Dahshan et al. extracted features from MRI images using the discrete wavelet transform (DWT) and reduced them to more basic features using principal component analysis (PCA). In the classification stage, they used the k-Nearest Neighbor (KNN) method to classify the images as normal or abnormal with 98% Acc [27]. For the detection of brain glioma tumors, Ertosun and Rubin performed CNN classification on The Cancer Genome Atlas digital pathology image dataset [28] and achieved an Acc of 96% [29].
The 8-layer CNN proposed by Mohsen et al. achieved an Acc of 98% in classifying 66 real brain MRI images with glioblastoma, sarcoma, and metastatic bronchogenic carcinoma tumors, collected from the Harvard Medical School website [30] [31]. Mahesha proposed a new CNN model for identifying brain tumor images, evaluated it on the Br35H dataset [4], and compared it with the state-of-the-art VGG-16 method. The model achieved 97.2% Acc [32]. Choudhury et al. achieved an average Acc of 96.08% and an F1 of 97.3% with their proposed CNN-based tumor detection model [33]. Bharargava [34] reported 98.50% Acc and 98.35% F1 with an InceptionV3-infused CNN for brain cancer detection on the Br35H [4] dataset. Mohanty et al. built a model consisting of four CNN layers and a soft attention mechanism on the Figshare brain tumor dataset [12] and achieved a class-based Acc of 97.1% [35]. Ghosal et al., also using the Figshare dataset [12], presented an automatic tool for brain tumor classification from MRI data in which image slices were fed to a CNN-based Squeeze-and-Excitation ResNet model. The model achieved an Acc of 89.93% without data augmentation and an average of 93.83% with data augmentation. Nassar et al. proposed a new system based on an ensemble of GoogleNet, AlexNet, SqueezeNet, ShuffleNet, and NasNet-Mobile with majority voting on a dataset of 3064 T1-weighted contrast-enhanced brain MRI images [12] from 233 patients [36]. The system achieved 99.31% Acc and 98.90% F1 [37]. Sajol and Hasan compared seven state-of-the-art models that use transformers, or mimic their self-attention process, for brain tumor classification. They obtained 96.90% Acc and 96.64% F1 with ResNet50 [38] on the Figshare dataset [12].
Arumugan et al. proposed a crossover smell agent optimized multi-layer perceptron (CSA-MLP) for the accurate detection and classification of tumor cells in the Figshare MRI image dataset [12], achieving 98.56% Acc, 98.34% F1, 98.64% Pre, and 98.45% Rec [39]. Reddy et al. used a dataset of 7,023 MRI images [4, 12] divided into four classes: glioma, meningioma, pituitary, and no tumor. They created models using ResNet50, EfficientNet-B0, MobileNetV2, and Fine-Tuned Vision Transformer (FTVT) models, obtaining 96.50% Acc with ResNet50 and 96.87% Acc with FTVT-b32. They also reported 98.6% Acc and 98.6% F1 for their proposed FTVT-b32 model [40]. Wang et al. presented a new computer-aided diagnosis method for brain tumor classification called RanMerFormer, using a merge of the brain tumor dataset [41], the Br35H dataset [4], and the SARTAJ MRI data [42]. They achieved 98.86% Acc, 98.87% Pre, 98.46% Spe, and 99.39% Spe [43]. Nutt et al. proposed a new optimization approach called PSCS, combined with deep learning, for brain tumor classification on the Kent Ridge repository gene expression dataset [44], and Yoshi and Aziz scored 98.7% Acc and 97.98% F1 [45]. Vankdothu et al. proposed a hybrid CNN-LSTM method for brain tumor detection on an open-source dataset [46], obtaining 92% Acc, 96% Pre, and 98.5% Rec [47]. Sandiya et al. developed a Particle Swarm Optimized Kernel Extreme Learning Machine (PSO-KELM) model to increase the classification Acc of deep learning architectures such as InceptionV3 and DenseNet201. Using two different datasets, they obtained 97.97% Acc and 98.02% F1 on the first [46] and 98.21% Acc and 98.33% F1 on the second [12] [48].

Accordingly, the literature contains studies based on a variety of methods, among which CNN-based techniques, transfer learning strategies, and hybrid approaches are prominent. Asif et al. [9] proposed a CNN-based architecture called BMRI-Net. Although CNN-based, BMRI-Net has limited depth. It also uses a single architecture without a hybrid component, which prevents the model from processing spatial and temporal features simultaneously. Ata et al. [11], Sarkar et al. [13], Islam et al. [24], Choudhury et al. [33], and Haq et al. [15] similarly performed tumor detection using single-layer CNNs. Because these studies use only one layer, performance may degrade on complex datasets. Deeper architectures are needed for richer feature extraction and higher classification accuracy. In addition, these models may not be robust enough to noise or data diversity. Multi-layer CNN models have also been used: Sultan et al. [14] presented an approach with 16 layers, Naseer et al. [16] with 12 layers, Mohsen et al. [31] with 8 layers, and Ertosun and Rubin [29] with 19 layers. Although these models are successful in extracting deep features, they cannot take time-sequential features into account. Bashkandi et al. [22] combined CNN with particle swarm optimization (PSO), which is powerful for hyperparameter optimization. However, the approach lacks a component for time-sequential data processing. Mohanty et al. [35] combined a CNN with a soft attention mechanism, which allows the model to focus on important features, but time-sequential data processing was again not considered. This is likely to be a limitation, especially when analyzing time-varying tumor features.
Among the hybrid approaches, Amran et al. [20] combined GoogLeNet with a 14-layer CNN. This combination is powerful but contains no structure for processing time-sequential features. Vankdothu et al. [47] used a combination of CNN and LSTM for tumor detection. This combination is effective for time-sequential data, but the use of a single layer may be insufficient to analyze complex relationships across different datasets. Sandiya et al. [48] presented a PSO-KELM hybrid structure, which was optimized for a limited dataset and has not been tested on large datasets. Dua et al. [25] created the BTD-CNN architecture, Arumugan et al. [39] developed the CSA-MLP hybrid structure, and El-Dahshan [27] developed the DWT-PCA-KNN hybrid structure. Among transfer learning strategies, Khushi et al. [10] used AlexNet with SGD, Gómez-Guzmán et al. [21] used EfficientNet and InceptionV3, and Bharargava et al. [34] used only InceptionV3. Kang et al. [17] used a combination of DenseNet121, ResNet101, and NasNet. Kalra et al. [23] relied on MobileNet, Mahesha et al. [32] on VGG-16, and Nassar et al. [37] on ensemble transfer learning. These approaches show high performance, but relying solely on pre-trained networks may prevent sufficient learning of dataset-specific features.

These studies highlight the effectiveness of CNN-based models, hybrid approaches, and transfer learning strategies in brain tumor classification. While single-layer models provide a foundation, multi-layer and hybrid architectures significantly enhance performance. Building on these findings, this study proposes a novel hybrid model, M-C&M-BL. The model combines the spatial feature extraction of CNN with the sequential processing of BiLSTM, exploiting their complementary strengths to address both the spatial and the sequential characteristics of the data for accurate and robust brain tumor classification.

3 Material and methods

This section outlines the data source, the CNN and BiLSTM architectures employed in the study, and the metrics used to assess the models constructed with these architectures.

3.1 Data source

This study uses the Br35H dataset, an open-source and balanced dataset designed for brain MRI analysis. It consists of 3,000 images divided equally into two classes: 1,500 images from patients with tumors (“tumor present”) and 1,500 images from patients without tumors (“tumor absent”). Its balanced structure ensures equal class representation, which removes class-imbalance bias from training and evaluation. The dataset is also open-source and widely used, which allows reproducibility and fair comparison with existing methods. These characteristics make it a suitable choice for evaluating the proposed model. The attributes of the dataset are presented in Table 1.

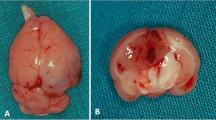

An example from the Br35H dataset is shown in Fig. 2.

Figure 2 shows randomly selected example images from the two classes in the dataset: “tumor present” and “tumor absent.” The dataset is inherently balanced, with equal representation of both classes. For this reason, no data augmentation techniques (for example, random scaling, rotation, or mirroring) were applied during preprocessing. This choice preserves the original characteristics of the MRI images and avoids introducing potential biases through synthetic modifications.

3.2 Deep Learning

Deep learning is a branch of machine learning based on training multi-layer Artificial Neural Networks (ANN) that extract increasingly abstract representations from data. It automates the manual feature extraction step of classical machine learning, often resulting in higher performance. Some deep learning methods are specialized for particular data types, such as CNN for image data and LSTM and BiLSTM for sequential data such as text. However, these methods are not tied to a single data type and can be trained on different kinds of data [49]. In this study, a hybrid of the CNN and BiLSTM methods is applied to brain MRI images.

3.2.1 Convolution Neural Network

CNN is a class of deep feed-forward ANN used primarily in image processing. CNNs use convolution and pooling as the central feature extractors of the model, and the outputs of these operations are connected to a fully connected multi-layer perceptron [50]. In a CNN, each hidden convolutional layer computes a dot product between a learnable kernel and local patches of its input and passes the result through an activation function. These layers are followed by additional layers, including pooling, fully connected, and normalization layers. To apply convolution to an input matrix (an image here, or a word-embedding matrix in text applications [51]), a filter (kernel) of predetermined size is specified. The filter is slid systematically over the input. At each position, the element-wise products of the filter and the underlying values are summed, producing a feature map. Pooling operations then reduce the dimensions of the feature maps. With “same” padding, the input is padded so that the output of the convolution keeps the spatial dimensions of the input.
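The convolution, activation, and pooling steps described above can be seen concretely in the minimal single-channel sketch below, written in plain Python. It assumes stride 1, zero “same” padding, an odd kernel size, and 2 × 2 max pooling. The summing filter is hypothetical, chosen only so that the output is easy to check by hand. Real CNN layers (such as Keras `Conv2D`) add multiple input channels, many learned filters, biases, and vectorized computation.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_same(image: Matrix, kernel: Matrix) -> Matrix:
    """Slide `kernel` over a single-channel `image` with stride 1.

    Like CNN layers, this computes a cross-correlation (the kernel is not
    flipped). Zero 'same' padding keeps the output the size of the input;
    an odd kernel size is assumed.
    """
    h, w = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    ph, pw = kh // 2, kw // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            total = 0.0
            for u in range(kh):
                for v in range(kw):
                    y, x = i + u - ph, j + v - pw
                    if 0 <= y < h and 0 <= x < w:  # outside the image counts as 0
                        total += image[y][x] * kernel[u][v]
            out[i][j] = total
    return out


def relu(m: Matrix) -> Matrix:
    """Element-wise ReLU activation."""
    return [[max(0.0, v) for v in row] for row in m]


def max_pool_2x2(m: Matrix) -> Matrix:
    """2 x 2 max pooling with stride 2; an odd last row/column is dropped."""
    return [
        [max(m[i][j], m[i][j + 1], m[i + 1][j], m[i + 1][j + 1])
         for j in range(0, len(m[0]) - 1, 2)]
        for i in range(0, len(m) - 1, 2)
    ]


image = [[1, 2, 3, 4],
         [5, 6, 7, 8],
         [9, 10, 11, 12],
         [13, 14, 15, 16]]
box = [[1, 1, 1], [1, 1, 1], [1, 1, 1]]      # hypothetical 3 x 3 summing filter
feature_map = relu(conv2d_same(image, box))  # 4 x 4, same size as the input
pooled = max_pool_2x2(feature_map)           # 2 x 2
print(pooled)  # [[54.0, 63.0], [90.0, 99.0]]
```

Because of the zero padding, border outputs sum fewer pixels than interior ones. For example, `feature_map[0][0]` is 1 + 2 + 5 + 6 = 14.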

3.2.2 Bi-directional Long Short-Term Memory

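BiLSTM is a Recurrent Neural Network (RNN) architecture built from LSTM units. These units have both an external recurrence (across time steps) and an internal recurrence (the cell state), the latter regulated by gates. A BiLSTM processes a sequence with two LSTMs, one reading it forward and one backward, and combines their hidden states.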

An LSTM has a forget gate (\({f}_{t}\)), an input gate (\({i}_{t}\)), and an output gate (\({o}_{t}\)). Information in the cell state that is no longer needed is removed by the forget gate (\({f}_{t}\)), and the weight matrices determine the influence of each gate. Equations (1) and (2) describe this process [52,53,54].
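For reference, a widely used formulation of the LSTM cell and its bidirectional extension is given below. The weight matrices \(W\) and \(U\), the biases \(b\), the cell state \(c_t\), and the candidate \(\tilde{c}_t\) are notation introduced here for illustration. The paper's own Equations (1) to (5) may be written differently. \(\sigma\) denotes the logistic sigmoid, \(\odot\) denotes element-wise multiplication, and \([\cdot\,;\cdot]\) denotes concatenation.

\[
\begin{aligned}
f_t &= \sigma\left(W_f x_t + U_f h_{t-1} + b_f\right)\\
i_t &= \sigma\left(W_i x_t + U_i h_{t-1} + b_i\right)\\
o_t &= \sigma\left(W_o x_t + U_o h_{t-1} + b_o\right)\\
\tilde{c}_t &= \tanh\left(W_c x_t + U_c h_{t-1} + b_c\right)\\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t\\
h_t &= o_t \odot \tanh\left(c_t\right)\\
\overrightarrow{h}_t &= \mathrm{LSTM}_{\rightarrow}\left(x_t, \overrightarrow{h}_{t-1}\right), \qquad
\overleftarrow{h}_t = \mathrm{LSTM}_{\leftarrow}\left(x_t, \overleftarrow{h}_{t+1}\right)\\
h_t^{\mathrm{Bi}} &= \left[\overrightarrow{h}_t ; \overleftarrow{h}_t\right]
\end{aligned}
\]

The forget gate scales the previous cell state \(c_{t-1}\), so values of \(f_t\) near 0 discard stored information. The backward LSTM runs from \(t = T\) down to \(t = 1\), and both directions are combined at every time step.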

Equations (1) to (5) describe the bidirectional operation of the LSTM, applied in both the forward and reverse directions. Figure 3 shows schematic representations of the LSTM and BiLSTM side by side [55].

Fig. 3 Recurrent Neural Network architectures: (a) LSTM, (b) BiLSTM [56]
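The forward and backward passes of a BiLSTM can be traced with the toy, pure-Python sketch below. It uses a hidden size of 1 and hand-picked, hypothetical weights; a trained model learns separate weight matrices for each direction. The sketch uses the standard sigmoid and tanh gate nonlinearities. Sect. 4 reports ReLU as the activation of the BiLSTM layers in M-C&M-BL, so this cell is a generic illustration rather than the paper's configuration.

```python
import math
from typing import Dict, List, Tuple

Params = Dict[str, float]  # scalar weights: w* (input), u* (recurrent), b* (bias)


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x: float, h_prev: float, c_prev: float, p: Params) -> Tuple[float, float]:
    """One step of an LSTM cell with hidden size 1."""
    f = sigmoid(p["wf"] * x + p["uf"] * h_prev + p["bf"])    # forget gate
    i = sigmoid(p["wi"] * x + p["ui"] * h_prev + p["bi"])    # input gate
    o = sigmoid(p["wo"] * x + p["uo"] * h_prev + p["bo"])    # output gate
    g = math.tanh(p["wc"] * x + p["uc"] * h_prev + p["bc"])  # candidate memory
    c = f * c_prev + i * g   # keep part of the old cell state, add new content
    h = o * math.tanh(c)     # hidden state: gated view of the cell state
    return h, c


def run_lstm(seq: List[float], p: Params) -> List[float]:
    """Run the cell over a sequence from a zero state; return every hidden state."""
    h, c = 0.0, 0.0
    hidden = []
    for x in seq:
        h, c = lstm_step(x, h, c, p)
        hidden.append(h)
    return hidden


def run_bilstm(seq: List[float], p_fwd: Params, p_bwd: Params) -> List[Tuple[float, float]]:
    """Forward LSTM reads t = 1..T, backward LSTM reads t = T..1.

    The backward outputs are reversed again so both directions line up per
    time step; each pair plays the role of the concatenation [h_fwd; h_bwd].
    """
    fwd = run_lstm(seq, p_fwd)
    bwd = run_lstm(seq[::-1], p_bwd)[::-1]
    return list(zip(fwd, bwd))


# Hypothetical weights, different for each direction.
names = ["wf", "uf", "bf", "wi", "ui", "bi", "wo", "uo", "bo", "wc", "uc", "bc"]
p_fwd = {k: 0.5 for k in names}
p_bwd = {k: -0.3 for k in names}
out = run_bilstm([1.0, -2.0, 0.5], p_fwd, p_bwd)
print(len(out))  # 3 time steps, each a (forward, backward) pair
```

At the first time step, the backward state has already seen the whole sequence, while the forward state has seen only the first element. Combining both directions is what gives each position context from both sides.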

3.3 Performance metric

Model performance was evaluated using Acc, Pre, Rec, F1, Spe, and MCC, all of which are calculated from the confusion matrix. The confusion matrix counts the model's predictions according to the positive (tumor) and negative (non-tumor) classes [57].

- True positives (\(Tp\)) and true negatives (\(Tn\)) are cases the model classifies correctly.
- False positives (\(Fp\)) and false negatives (\(Fn\)) are cases the model classifies incorrectly.

\(Tp\): images predicted as positive (tumor) that are actually positive.

\(Tn\): images predicted as negative (no tumor) that are actually negative.

\(Fp\): images predicted as positive (tumor) that are actually negative.

\(Fn\): images predicted as negative (no tumor) that are actually positive.

The matrix defines true positive (Tp), true negative (Tn), false positive (Fp), and false negative (Fn), which represent correctly and incorrectly predicted tumor and non-tumor classes.

Acc: the ratio of correct predictions to all predictions. Although widely used, it may not be sufficient for imbalanced datasets. Pre: the proportion of predicted positives that are actually positive. Rec: also known as sensitivity, the proportion of actual positives that are correctly predicted. Spe: the proportion of actual negatives that are correctly predicted. F1: the harmonic mean of Pre and Rec, balancing their contributions. MCC: a balanced metric that combines all four confusion-matrix counts (\(Tp\), \(Tn\), \(Fp\), and \(Fn\)) and is particularly useful for imbalanced datasets [58]. In addition, the Receiver Operating Characteristic (ROC) curve plots Rec (the true positive rate) against 1 − Spe (the false positive rate) at different decision thresholds. The Area Under the Curve (AUC) summarizes the model's discriminative performance, with values closer to 1 indicating better separation of the two classes [59]. These metrics were chosen to analyze the performance of M-C&M-BL because they are commonly reported in similar studies [9,10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37].
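The metric definitions above can be computed directly from the four confusion-matrix counts, as in the plain-Python sketch below. Label 1 is assumed to mean “tumor present” and 0 “tumor absent”. Undefined ratios (zero denominators) are reported as 0, which is one common convention. The predictions are hypothetical and are not results from the paper. Note that MCC uses all four counts.

```python
import math
from typing import Dict, Sequence


def confusion_counts(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, int]:
    """Count Tp, Tn, Fp, Fn, with 1 = tumor present and 0 = tumor absent."""
    pairs = list(zip(y_true, y_pred))
    return {
        "tp": sum(t == 1 and p == 1 for t, p in pairs),
        "tn": sum(t == 0 and p == 0 for t, p in pairs),
        "fp": sum(t == 0 and p == 1 for t, p in pairs),
        "fn": sum(t == 1 and p == 0 for t, p in pairs),
    }


def _ratio(num: float, den: float) -> float:
    return num / den if den else 0.0  # convention: 0 when undefined


def classification_metrics(tp: int, tn: int, fp: int, fn: int) -> Dict[str, float]:
    pre = _ratio(tp, tp + fp)
    rec = _ratio(tp, tp + fn)  # sensitivity / true positive rate
    return {
        "acc": _ratio(tp + tn, tp + tn + fp + fn),
        "pre": pre,
        "rec": rec,
        "spe": _ratio(tn, tn + fp),
        "f1": _ratio(2 * pre * rec, pre + rec),
        # MCC: correlation between predicted and true labels (range -1..1)
        "mcc": _ratio(tp * tn - fp * fn,
                      math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))),
    }


# Hypothetical predictions for 10 tumor and 10 non-tumor images.
y_true = [1] * 10 + [0] * 10
y_pred = [1] * 8 + [0] * 2 + [0] * 9 + [1]
counts = confusion_counts(y_true, y_pred)  # tp=8, fn=2, tn=9, fp=1
m = classification_metrics(**counts)
print({k: round(v, 4) for k, v in m.items()})
# {'acc': 0.85, 'pre': 0.8889, 'rec': 0.8, 'spe': 0.9, 'f1': 0.8421, 'mcc': 0.7035}
```

In this example Acc is 0.85 while MCC is only about 0.70. MCC penalizes errors in both classes jointly, which is why it is often reported alongside Acc.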

4 M-C&M-BL

The structure of the proposed M-C&M-BL model is shown in Fig. 4. M-C&M-BL is built from two separate deep learning architectures. Single-layer CNN and BiLSTM models were developed first, followed by triple-layer CNN and triple-layer BiLSTM models, each built separately. The triple-layer CNN and triple-layer BiLSTM were then combined to create the M-C&M-BL model.

In the preprocessing phase, the MRI images were scaled, and blank areas outside the region of interest were cropped so that only the essential features were retained. Each image was then resized to a standardized 64 × 64 × 3 format to match the input dimensions required by the proposed model. This size balances computational efficiency against the need to retain sufficient spatial detail for tumor detection. Smaller dimensions, such as 32 × 32, were tested but lost critical information about tumor edges and textures, which reduced classification Acc. Larger dimensions, such as 128 × 128, significantly increased the computational cost without a proportional improvement in performance. The 64 × 64 resolution provided an effective compromise, capturing the essential features of the MRI images while allowing efficient training on the available computational resources. Given the balanced nature of the Br35H dataset, no additional data augmentation techniques such as scaling, rotation, or mirroring were applied. This decision avoids introducing artificial bias or over-representation of specific features and keeps the dataset a true reflection of the original distribution.
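The preprocessing steps can be sketched in plain Python as follows. The paper does not specify the exact cropping rule, interpolation, or scaling, so each of these is an assumption made here for illustration:

- Blank border rows and columns are found with a hypothetical intensity threshold.
- Resizing uses nearest-neighbour sampling.
- “Scaled” is interpreted as dividing intensities by 255.
- The gray value is repeated over three channels to reach 64 × 64 × 3.

Libraries such as OpenCV or Pillow would normally perform the resize.

```python
from typing import List

Image = List[List[int]]  # grayscale intensities in 0..255


def crop_to_content(img: Image, threshold: int = 10) -> Image:
    """Drop border rows/columns whose pixels are all <= threshold (near black)."""
    rows = [i for i, row in enumerate(img) if max(row) > threshold]
    cols = [j for j in range(len(img[0])) if max(row[j] for row in img) > threshold]
    if not rows or not cols:
        return img  # nothing above threshold: keep the image as is
    return [row[cols[0]:cols[-1] + 1] for row in img[rows[0]:rows[-1] + 1]]


def resize_nearest(img: Image, out_h: int, out_w: int) -> Image:
    """Nearest-neighbour resize (one simple choice among many)."""
    h, w = len(img), len(img[0])
    return [[img[i * h // out_h][j * w // out_w] for j in range(out_w)]
            for i in range(out_h)]


def preprocess(img: Image, size: int = 64, threshold: int = 10) -> List[List[List[float]]]:
    """Crop, resize to size x size, scale to [0, 1], and repeat the gray value
    over 3 channels to obtain a size x size x 3 input."""
    resized = resize_nearest(crop_to_content(img, threshold), size, size)
    return [[[v / 255.0] * 3 for v in row] for row in resized]


# Toy 6 x 6 "slice": a bright 2 x 2 block surrounded by black background.
toy = [[0] * 6 for _ in range(6)]
for r in (2, 3):
    for c in (2, 3):
        toy[r][c] = 255
x = preprocess(toy)
print(len(x), len(x[0]), len(x[0][0]))  # 64 64 3
```

Cropping before resizing means the 64 × 64 grid is spent on the head region rather than on empty background. This is the motivation the text gives for removing blank areas.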

This strategy also provides an opportunity to assess the model’s robustness to the variations and artifacts commonly encountered in real-world data. The focus of this study was to evaluate the effectiveness of the proposed architecture rather than the impact of preprocessing or augmentation techniques. This allows a more direct analysis of the model's performance in classifying MRI images without the influence of additional preprocessing. Since the brain MRI images were balanced and labeled, no preprocessing was applied beyond the steps described above. After this step, the single-layer, triple-layer, and M-C&M-BL models were trained. The hyperparameters of the deep learning methods used in the study were tuned. These adjustments and the selection of optimal parameters are described in Sect. 5.

As shown in Fig. 4, the models are built from CONV2D and BiLSTM layers, which are used either separately (CNN-only and BiLSTM-only models) or together (M-C&M-BL). The M-C&M-BL model contains three CONV2D layers, each with 512 filters (the optimal number found) of size 5 × 5 and a ReLU activation function. The CNN layers are followed by a 2 × 2 max-pooling layer and then a TimeDistributed Flatten layer, which converts the feature maps into a sequence of vectors so that the CNN output can be fed to the subsequent BiLSTM layers. Three BiLSTM layers, each with 512 units and a ReLU activation function, are then applied. Since the data are labeled in two classes, MRI with tumor and MRI without tumor, the M-C&M-BL model ends with a two-unit Dense layer. The model was compiled with the Adam optimizer and the binary cross-entropy loss function and trained with a batch size of 64. The settings of all these parameters are described in detail in Sect. 5, where the results obtained with the single CNN, single BiLSTM, triple CNN, triple BiLSTM, and M-C&M-BL models are also given. The pseudo-code of the study is shown in Table 2.
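The hand-off from the convolutional block to the BiLSTM block is easiest to follow through the tensor shapes. The sketch below assumes stride-1 convolutions with "valid" (no) padding, which the text does not state; the 64 × 64 input, three convolutions with 5 × 5 kernels, 512 filters, and 2 × 2 pooling follow the description above. A TimeDistributed Flatten layer treats the first spatial axis as the time axis, so each row of the pooled feature map becomes one time step. The parameter count uses the standard formula for an LSTM layer with one bias vector, 4·(units·(input_dim + units) + units), doubled for the two directions; whether the original implementation matches this layer variant is an assumption.

```python
from typing import Tuple


def conv_out(size: int, kernel: int, padding: str) -> int:
    """Output length of a stride-1 convolution along one spatial axis."""
    return size if padding == "same" else size - kernel + 1  # "valid" = no padding


def cnn_to_sequence(h: int = 64, w: int = 64, n_conv: int = 3, kernel: int = 5,
                    filters: int = 512, pool: int = 2,
                    padding: str = "valid") -> Tuple[int, int]:
    """Shape (time steps, features per step) seen by the first BiLSTM layer."""
    for _ in range(n_conv):
        h, w = conv_out(h, kernel, padding), conv_out(w, kernel, padding)
    h, w = h // pool, w // pool  # 2 x 2 max pooling, remainder discarded
    return h, w * filters        # TimeDistributed(Flatten): each row becomes one step


def bilstm_params(input_dim: int, units: int) -> int:
    """Trainable weights of a bidirectional LSTM (one bias vector per direction)."""
    one_direction = 4 * (units * (input_dim + units) + units)  # four gates
    return 2 * one_direction


steps, feats = cnn_to_sequence()
print(steps, feats)                     # 26 13312
print(cnn_to_sequence(padding="same"))  # (32, 16384)
print(bilstm_params(feats, 512))        # 56627200
print(bilstm_params(2 * 512, 512))      # 6295552 (input = 1024 concatenated features)
```

Under these assumptions the first BiLSTM layer holds most of the trainable weights, because it receives 13,312 features per time step. With the 3 × 3 kernel reported in the tuning results, the same arithmetic gives 29 steps of 14,848 features.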

5 Experimental Settings and Results

In this study, two deep learning methods, CNN and BiLSTM, were used to build separate single-layer and triple-layer models. A new model was then developed by combining CNN and BiLSTM, each with three layers. The experiments were conducted on Google Colab. For all models, the hyperparameters of the neural networks were tuned with KerasTuner over candidate values selected from the literature; these are presented in Table 3.

To prevent the models from overfitting, early stopping and learning rate (LR) reduction callbacks were used. The data were divided into training and test sets with an 80%-20% holdout, and a further 20% of the training set was held out for validation during training.
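The nested holdout above leaves 64% of the images for fitting, 16% for validation, and 20% for testing (0.8 × 0.8, 0.8 × 0.2, and 0.2). The following sketch shows one way to implement it; stratification by class, the random seed, and the function name are assumptions for illustration, since the text specifies only the proportions.

```python
import random
from typing import Dict, List, Sequence


def stratified_holdout(labels: Sequence[int], test_frac: float = 0.2,
                       val_frac: float = 0.2, seed: int = 42) -> Dict[str, List[int]]:
    """Per-class split into train/val/test indices; val_frac is taken from the training part."""
    rng = random.Random(seed)
    splits: Dict[str, List[int]] = {"train": [], "val": [], "test": []}
    for cls in sorted(set(labels)):
        idx = [i for i, y in enumerate(labels) if y == cls]
        rng.shuffle(idx)
        n_test = round(len(idx) * test_frac)
        test, rest = idx[:n_test], idx[n_test:]
        n_val = round(len(rest) * val_frac)
        splits["test"] += test
        splits["val"] += rest[:n_val]
        splits["train"] += rest[n_val:]
    return splits


# Hypothetical balanced set of 3,000 images (0 = no tumor, 1 = tumor).
labels = [0] * 1500 + [1] * 1500
parts = stratified_holdout(labels)
print({name: len(idx) for name, idx in parts.items()})  # {'train': 1920, 'val': 480, 'test': 600}
```

Splitting each class separately keeps the tumor/no-tumor ratio identical in all three subsets, which matters when a dataset is balanced and the evaluation is meant to reflect that balance.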

In this study, CNN and BiLSTM architectures were first used independently in single-layer and triple-layer configurations, and a combined M-C&M-BL model was then developed to improve classification performance. The hyperparameters of these models were optimized with KerasTuner, and the computational resources of Google Colab were used to run the experiments efficiently. For the critical parameters, several ranges were explored: batch size (32, 64, 128, 256), CNN kernel size (1 to 5, with 3 found optimal), and number of CNN units (32 to 1024, with 512 found optimal). The number of BiLSTM units was chosen to balance model complexity and generalization, while the output Dense layer consisted of two units corresponding to the binary classification task. Depending on the model, training ran for an optimal 9 to 14 epochs, with binary cross-entropy as the loss function. Of the optimizers tested (Adam, SGD, RMSProp), Adam was the most effective for this dataset and task. To prevent overfitting, early stopping and learning rate reduction callbacks were used, so that training was halted once validation performance stopped improving. The dataset was split into training and test sets with an 80%-20% holdout, and a further 20% of the training data was used for validation during hyperparameter tuning. This systematic optimization process allowed the models to make effective use of the Br35H dataset. The training and validation accuracy and loss curves of the single-layer CNN, single-layer BiLSTM, triple-layer CNN, triple-layer BiLSTM, and M-C&M-BL models are shown in Fig. 5.
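The interplay of the early stopping and learning rate reduction callbacks can be illustrated without a deep learning framework. The sketch below is a simplified version of the logic behind Keras' EarlyStopping and ReduceLROnPlateau callbacks, applied to a list of per-epoch validation losses. The initial learning rate, both patience values, the reduction factor, the minimum learning rate, and the loss values are all hypothetical, since the text does not report them.

```python
from typing import List, Tuple


def simulate_callbacks(val_losses: List[float], lr: float = 1e-3, stop_patience: int = 3,
                       lr_patience: int = 2, factor: float = 0.5,
                       min_lr: float = 1e-6) -> Tuple[int, int, List[float]]:
    """Return (epochs actually run, best epoch, learning rate used in each epoch)."""
    best, best_epoch = float("inf"), 0
    wait_stop = wait_lr = 0
    lrs: List[float] = []
    for epoch, loss in enumerate(val_losses, start=1):
        lrs.append(lr)
        if loss < best:                 # improvement: remember it, reset both counters
            best, best_epoch = loss, epoch
            wait_stop = wait_lr = 0
            continue
        wait_stop += 1
        wait_lr += 1
        if wait_lr >= lr_patience:      # plateau: reduce the learning rate
            lr = max(lr * factor, min_lr)
            wait_lr = 0
        if wait_stop >= stop_patience:  # no improvement for too long: stop early
            return epoch, best_epoch, lrs
    return len(val_losses), best_epoch, lrs


# Hypothetical validation losses for a 14-epoch budget.
losses = [0.60, 0.40, 0.30, 0.25, 0.26, 0.27, 0.28,
          0.20, 0.19, 0.18, 0.18, 0.18, 0.18, 0.18]
epochs_run, best_epoch, lrs = simulate_callbacks(losses)
print(epochs_run, best_epoch)  # 7 4
print(lrs)
```

With these hypothetical settings training stops at epoch 7, although the loss would have improved again at epoch 8, so the patience values trade training time against the risk of stopping too early. The Keras callbacks also offer options such as `min_delta` and, for EarlyStopping, restoring the best weights; this simplified sketch omits them.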

As illustrated in Fig. 5, the single-layer and triple-layer CNN models show comparable validation Acc and loss trends over similar numbers of epochs. For the BiLSTM models, increasing the number of layers beyond one brings the curves closer to those of the CNN models, with a more balanced progression. In the M-C&M-BL model, the steady increase in Acc is particularly pronounced as the number of BiLSTM layers increases. Moreover, no clear sign of overfitting is visible in any of the models, indicating that all of them are adequately fitted. Table 4 presents the Acc, F1, Pre, Rec, Spe, and MCC results of the models developed in the study.

As Table 4 shows, moving from a single-layer to a triple-layer architecture improves Acc by 2% for the CNN model and by 1% for the BiLSTM model. F1 and Pre increase by 3% for the CNN, whereas they remain almost unchanged for the BiLSTM. Increasing the number of layers also raises Spe by 1% and Rec by almost 1% in both CNN and BiLSTM. The proposed M-C&M-BL model performed best on all metrics. These results suggest that increasing the number of layers has a positive effect on model performance. The values in Table 4 were derived from the confusion matrices of the models, which are shown graphically in Fig. 6.
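Every metric in Table 4 is a function of the four confusion-matrix counts shown in Fig. 6. The sketch below spells out the standard definitions, treating the tumor class as positive (an assumption, since the text does not say which class is positive); the counts in the example are hypothetical and are not taken from the paper.

```python
import math
from typing import Dict


def binary_metrics(tp: int, tn: int, fp: int, fn: int) -> Dict[str, float]:
    """Acc, F1, Pre, Rec, Spe, and MCC from the four cells of a binary confusion matrix."""
    total = tp + tn + fp + fn
    acc = (tp + tn) / total
    pre = tp / (tp + fp) if tp + fp else 0.0
    rec = tp / (tp + fn) if tp + fn else 0.0  # sensitivity, true positive rate
    spe = tn / (tn + fp) if tn + fp else 0.0  # true negative rate
    f1 = 2 * pre * rec / (pre + rec) if pre + rec else 0.0
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0
    return {"Acc": acc, "F1": f1, "Pre": pre, "Rec": rec, "Spe": spe, "MCC": mcc}


# Hypothetical counts, not taken from Fig. 6.
m = binary_metrics(tp=90, tn=85, fp=15, fn=10)
print({k: round(v, 4) for k, v in m.items()})
```

Because Rec depends only on \(Tp\) and \(Fn\), while Spe depends only on \(Tn\) and \(Fp\), a model can trade one against the other; MCC uses all four counts and is therefore a useful single summary alongside Acc.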

Table 5 reports the results of fivefold cross-validation. Here too, introducing a triple-layer architecture improves Acc by 2% for the CNN and by 1% for the BiLSTM model. F1 and Pre for the CNN increased by 3%, whereas the BiLSTM showed only slight changes. Both models gained 1% in Spe and Rec with the additional layers. The proposed M-C&M-BL model outperformed all other configurations on all metrics, supporting the finding that increasing the number of layers improves model performance.
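Fivefold cross-validation evaluates each model on five disjoint test folds, each time training on the remaining four. The text does not state whether the folds were stratified or how the fold results were aggregated; the sketch below assumes stratified folds, and averaging the per-fold metrics is the usual convention. The function name and toy labels are hypothetical.

```python
import random
from typing import List, Sequence, Tuple


def stratified_kfold(labels: Sequence[int], k: int = 5,
                     seed: int = 0) -> List[Tuple[List[int], List[int]]]:
    """Return k (train_indices, test_indices) pairs with class ratios preserved per fold."""
    rng = random.Random(seed)
    folds: List[List[int]] = [[] for _ in range(k)]
    for cls in sorted(set(labels)):
        idx = [i for i, y in enumerate(labels) if y == cls]
        rng.shuffle(idx)
        for pos, i in enumerate(idx):
            folds[pos % k].append(i)  # deal each class round-robin across the folds
    splits = []
    for f in range(k):
        train = sorted(i for g in range(k) if g != f for i in folds[g])
        splits.append((train, sorted(folds[f])))
    return splits


labels = [0] * 50 + [1] * 50  # hypothetical balanced toy labels
for train, test in stratified_kfold(labels):
    print(len(train), len(test), sum(labels[i] for i in test))  # 80 20 10 on every fold
```

Every index appears in exactly one test fold, so each image contributes to the evaluation once, and each fold keeps the 50/50 class balance of the full set.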

Figure 6 shows the confusion matrices graphically. From the single-layer to the triple-layer CNN model, the \(Fp\) rate decreases while the \(Fn\) rate increases slightly, and the \(Tp\) and \(Tn\) rates remain relatively stable. This contributes to the increase in all metrics, including Acc. Similarly, from the single-layer to the triple-layer BiLSTM model, the \(Fn\) rate decreases slightly, while the other metrics show a comparable increase.

This decrease results in a slight decrease in Rec, while the other metrics increase more moderately than in the transition from the single-layer to the triple-layer CNN. The ROC curves of the models created in the study are shown in Fig. 7.

As Fig. 7 shows, the four models achieve high AUC values, and the shapes of their ROC curves indicate high sensitivity. The curves also indicate low \(Fp\) rates, suggesting that the models discriminate well between the two classes.
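An ROC curve and its AUC summarize how well a model ranks tumor images above non-tumor images across all decision thresholds. The sketch below assumes that the model's predicted probability for the tumor class is used as the score (the text does not say which output is plotted). It computes ROC points by sweeping the threshold and checks the trapezoidal AUC against the equivalent rank-based definition; the labels and scores are hypothetical.

```python
from typing import List, Sequence, Tuple


def roc_points(labels: Sequence[int], scores: Sequence[float]) -> List[Tuple[float, float]]:
    """(FPR, TPR) pairs from sweeping the threshold over the observed scores, high to low."""
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    points = [(0.0, 0.0)]
    for t in sorted(set(scores), reverse=True):
        tp = sum(1 for y, s in zip(labels, scores) if y == 1 and s >= t)
        fp = sum(1 for y, s in zip(labels, scores) if y == 0 and s >= t)
        points.append((fp / n_neg, tp / n_pos))
    return points


def auc_trapezoid(points: List[Tuple[float, float]]) -> float:
    """Area under the ROC curve by the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))


def auc_rank(labels: Sequence[int], scores: Sequence[float]) -> float:
    """AUC as P(score of a random positive > score of a random negative); ties count 1/2."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical tumor-class probabilities for six test images (1 = tumor).
y = [1, 1, 1, 0, 0, 0]
s = [0.9, 0.8, 0.4, 0.7, 0.3, 0.2]
pts = roc_points(y, s)
print(auc_trapezoid(pts), auc_rank(y, s))  # both approximately 0.8889
```

The rank-based form makes the interpretation of AUC explicit: an AUC close to 1 means almost every tumor image receives a higher score than almost every non-tumor image, which corresponds to a curve that reaches high sensitivity at a low \(Fp\) rate.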

6 Discussion and Conclusion

In this study, a novel approach called M-C&M-BL, which integrates CNN and BiLSTM architectures, was presented for brain tumor classification. It achieves competitive results on the Br35H dataset, with over 99% Acc along with high scores on the other metrics. The practical value of the model lies in its potential to support clinical workflows by enabling early diagnosis of brain tumors. Such models, especially when integrated into AI-driven decision support systems for MRI analysis, can assist physicians by reducing the time required for manual diagnosis and improving the accuracy of treatment decisions. Future work in this direction aims to turn the proposed model into a comprehensive tool that assists healthcare professionals and advances AI applications for critical conditions such as brain tumors. Binary tumor classification studies based on the Br35H dataset [4], the Figshare dataset [12], and MRI data collected by the authors of those studies are summarized in Table 6, which lists the dataset, the proposed model, and the Acc and F1 results of each study.

Table 6 shows that the model proposed in this study is comparable to those of similar studies and performs satisfactorily. By integrating BiLSTM, which is well suited to text and other sequential data, with CNN, a widely used image processing technique, a model has been developed that can compete with the existing literature. This study shows that strong results can be obtained not only with models built from many stacked CNN layers, such as AlexNet, but also with models that combine alternative network types. Methods such as the one proposed here could support AI-based early diagnosis products that help physicians make early diagnoses from patient data such as MRI images, and could offer practical advantages in the development of web- or mobile-based early diagnosis systems. The most important finding of the study is that combining different deep learning architectures in critical health situations can help physicians intervene in diseases and save time.

In future work, the performance of multi-layer models such as the one proposed in this study will be investigated on other types of image data and on tabular data from different fields. In addition, the M-C&M-BL model could be integrated into real-world clinical workflows, such as decision support systems, web- or mobile-based diagnostic tools, and PACS, to assist physicians in early diagnosis. Such applications can help healthcare professionals by automating tumor detection, improving diagnostic accuracy, and saving critical time in treatment planning. Provided that challenges such as privacy, generalizability, interpretability, and infrastructure requirements are addressed to ensure seamless integration into clinical environments, the proposed method could become a valuable tool for supporting early diagnosis and advancing AI applications in healthcare. Similar methods could also be studied on other health data, especially image-based data.

Several further studies are planned to improve the general validity of the proposed model and address its current limitations. First, the model's performance will be evaluated in diverse clinical scenarios by testing it on balanced datasets other than Br35H, which will help determine its robustness and generalizability across applications. Future research will also focus on designing models that perform consistently well on imbalanced datasets, ensuring reliability and applicability across a wide range of clinical practices. In addition, transfer learning techniques will be investigated so that the model can achieve good results with less data. Finally, different imaging modalities (CT and MRI) will be integrated into the model to improve diagnostic accuracy.

Data availability

The dataset utilized in this study is the open-source Br35H dataset. Dataset source: Ahmed Hamada, “Br35H: Brain Tumor Dataset.” [Online]. Available: https://www.kaggle.com/datasets/ahmedhamada0/brain-tumor-detection.

References

National Brain Tumor Society. Brain Tumor Types. https://braintumor.org/brain-tumors/about-brain-tumors/brain-tumor-types/

Onakpojeruo EP, Mustapha MT, Ozsahin DU, Ozsahin I (2024) A Comparative Analysis of the Novel Conditional Deep Convolutional Neural Network Model, Using Conditional Deep Convolutional Generative Adversarial Network-Generated Synthetic and Augmented Brain Tumor Datasets for Image Classification. Brain Sci 14(6):559. https://doi.org/10.3390/brainsci14060559

Onakpojeruo EP, Mustapha MT, Ozsahin DU, Ozsahin I (2024) Enhanced MRI-based brain tumour classification with a novel Pix2pix generative adversarial network augmentation framework. Brain Commun. https://doi.org/10.1093/braincomms/fcae372

Hamada A. Br35H: Brain Tumor Dataset. https://www.kaggle.com/datasets/ahmedhamada0/brain-tumor-detection

Akbar S, Azam H, Almutairi SS, Alqahtani O, Shah H, Aleryani A (2024) Contemporary Study for Detection of COVID-19 Using Machine Learning with Explainable AI. Computers, Materials & Continua 80(1):1075–1104. https://doi.org/10.32604/cmc.2024.050913

Akbar S, Tariq H, Fahad M, Ahmed G, Syed HJ (2022) Contemporary Study on Deep Neural Networks to Diagnose COVID-19 Using Digital Posteroanterior X-ray Images. Electronics (Basel) 11(19):3113. https://doi.org/10.3390/electronics11193113

Azam H, Tariq H, Shehzad D, Akbar S, Shah H, Khan ZA (2023) Fully Automated Skull Stripping from Brain Magnetic Resonance Images Using Mask RCNN-Based Deep Learning Neural Networks. Brain Sci 13(9):1255. https://doi.org/10.3390/brainsci13091255

Khaliki MZ, Başarslan MS (2024) Brain tumor detection from images and comparison with transfer learning methods and 3-layer CNN. Sci Rep 14(1):2664. https://doi.org/10.1038/s41598-024-52823-9

Asif S, Zhao M, Chen X, Zhu Y (2023) BMRI-NET: A Deep Stacked Ensemble Model for Multi-class Brain Tumor Classification from MRI Images. Interdiscip Sci 15(3):499–514. https://doi.org/10.1007/s12539-023-00571-1

Khushi HMT, Masood T, Jaffar A, Akram S, Bhatti SM (2024) Performance analysis of state-of-the-art CNN architectures for brain tumour detection. Int J Imaging Syst Technol. https://doi.org/10.1002/ima.22949

Ata MM, Yousef RN, Karim FK, Khafaga DS (2023) An Improved Deep Structure for Accurately Brain Tumor Recognition. Comput Syst Sci Eng 46(2):1597–1616. https://doi.org/10.32604/csse.2023.034375

Cheng J. Brain Tumor Dataset. figshare. https://figshare.com/articles/brain_tumor_dataset/1512427/5

Sarkar S, Kumar A, Chakraborty S, Aich S, Sim J-S, Kim H-C (2020) A CNN based approach for the detection of brain tumor using MRI scans. Test Engineering and Management 83:16580–16586

Sultan HH, Salem NM, Al-Atabany W (2019) Multi-Classification of Brain Tumor Images Using Deep Neural Network. IEEE Access 7:69215–69225. https://doi.org/10.1109/ACCESS.2019.2919122

Haq EU, Jianjun H, Li K, Haq HU, Zhang T (2023) An MRI-based deep learning approach for efficient classification of brain tumors. J Ambient Intell Humaniz Comput 14(6):6697–6718. https://doi.org/10.1007/s12652-021-03535-9

Naseer A, Yasir T, Azhar A, Shakeel T, Zafar K (2021) Computer-Aided Brain Tumor Diagnosis: Performance Evaluation of Deep Learner CNN Using Augmented Brain MRI. Int J Biomed Imaging 2021:1–11. https://doi.org/10.1155/2021/5513500

Kang J, Ullah Z, Gwak J (2021) MRI-Based Brain Tumor Classification Using Ensemble of Deep Features and Machine Learning Classifiers. Sensors 21(6):2222. https://doi.org/10.3390/s21062222

Jeyaraj PR, Nadar ERS (2023) MR image restoration and segmentation via denoising deep adversarial network for blood vessels accurate diagnosis. Signal Process Image Commun 117:117013. https://doi.org/10.1016/j.image.2023.117013

Papageorgiou V (2021) Brain Tumor Detection Based on Features Extracted and Classified Using a Low-Complexity Neural Network. Traitement du Signal 38(3):547–554. https://doi.org/10.18280/ts.380302

Amran GA et al (2022) Brain Tumor Classification and Detection Using Hybrid Deep Tumor Network. Electronics (Basel) 11(21):3457. https://doi.org/10.3390/electronics11213457

Gómez-Guzmán MA et al (2023) Classifying Brain Tumors on Magnetic Resonance Imaging by Using Convolutional Neural Networks. Electronics (Basel) 12(4):955. https://doi.org/10.3390/electronics12040955

Bashkandi AH, Sadoughi K, Aflaki F, Alkhazaleh HA, Mohammadi H, Jimenez G (2023) Combination of political optimizer, particle swarm optimizer, and convolutional neural network for brain tumor detection. Biomed Signal Process Control 81:104434. https://doi.org/10.1016/j.bspc.2022.104434

Kalra MP, Sameer M, Ahmad G (2023) A Deep Convolutional Neural Network Approach for Reliable Brain Tumor Detection and Segmentation System. In: 2023 3rd International Conference on Intelligent Technologies (CONIT). IEEE, pp 1–4. https://doi.org/10.1109/CONIT59222.2023.10205675

Islam MA et al (2023) A Low Parametric CNN Based Solution to Efficiently Detect Brain Tumor Cells from Ultrasound Scans. In: 2023 IEEE 13th Annual Computing and Communication Workshop and Conference (CCWC). IEEE, pp 1152–1158. https://doi.org/10.1109/CCWC57344.2023.10099302

Dua R, Bhatt S (2023) A Practical Method for Identifying Brain Tumors Using Deep Learning. In: 2023 14th International Conference on Computing Communication and Networking Technologies (ICCCNT). IEEE, pp 1–6. https://doi.org/10.1109/ICCCNT56998.2023.10307098

Zacharaki EI et al (2009) Classification of brain tumor type and grade using MRI texture and shape in a machine learning scheme. Magn Reson Med 62(6):1609–1618. https://doi.org/10.1002/mrm.22147

El-Dahshan E-SA, Hosny T, Salem A-BM (2010) Hybrid intelligent techniques for MRI brain images classification. Digit Signal Process 20(2):433–441. https://doi.org/10.1016/j.dsp.2009.07.002

National Cancer Database. https://www.facs.org/quality-programs/cancer-programs/national-cancer-database/

Ertosun MG, Rubin DL (2015) Automated Grading of Gliomas using Deep Learning in Digital Pathology Images: A modular approach with ensemble of convolutional neural networks. AMIA Annu Symp Proc 2015:1899–1908

Vidoni ED (2012) The Whole Brain Atlas. J Neurol Phys Ther 36(2):108. https://doi.org/10.1097/NPT.0b013e3182563795

Mohsen H, El-Dahshan E-SA, El-Horbaty E-SM, Salem A-BM (2018) Classification using deep learning neural networks for brain tumors. Future Computing and Informatics Journal 3(1):68–71. https://doi.org/10.1016/j.fcij.2017.12.001

Mahesha Y (2023) Identification of Brain Tumor Images Using a Novel Machine Learning Model. In: Ranganathan G, Papakostas GA, Rocha Á (eds) Inventive Communication and Computational Technologies: Proceedings of ICICCT 2023. Springer Nature Singapore, Singapore, pp 447–457. https://doi.org/10.1007/978-981-99-5166-6_30

Choudhury CL, Mahanty C, Kumar R, Mishra BK (2020) Brain Tumor Detection and Classification Using Convolutional Neural Network and Deep Neural Network. In: 2020 International Conference on Computer Science, Engineering and Applications (ICCSEA). IEEE, pp 1–4. https://doi.org/10.1109/ICCSEA49143.2020.9132874

Bharargava K, Nanda H, Basha NK (2024) InceptionV3 infused Neural Network for Brain Tumor Detection. In: 2024 5th International Conference on Smart Electronics and Communication (ICOSEC). IEEE, pp 1063–1068. https://doi.org/10.1109/ICOSEC61587.2024.10722625

Mohanty BCh, Subudhi PK, Dash R, Mohanty B (2024) Feature-enhanced deep learning technique with soft attention for MRI-based brain tumor classification. Int J Inf Technol 16(3):1617–1626. https://doi.org/10.1007/s41870-023-01701-0

Ghosal P, Nandanwar L, Kanchan S, Bhadra A, Chakraborty J, Nandi D (2019) Brain Tumor Classification Using ResNet-101 Based Squeeze and Excitation Deep Neural Network. In: 2019 Second International Conference on Advanced Computational and Communication Paradigms (ICACCP). IEEE, pp 1–6. https://doi.org/10.1109/ICACCP.2019.8882973

Nassar SE, Yasser I, Amer HM, Mohamed MA (2024) A robust MRI-based brain tumor classification via a hybrid deep learning technique. J Supercomput 80(2):2403–2427. https://doi.org/10.1007/s11227-023-05549-w

Sajol MSI, Hasan ASMJ (2024) Benchmarking CNN and Cutting-Edge Transformer Models for Brain Tumor Classification Through Transfer Learning. In: 2024 IEEE 12th International Conference on Intelligent Systems (IS). IEEE, pp 1–6. https://doi.org/10.1109/IS61756.2024.10705175

Arumugam M, Thiyagarajan A, Adhi L, Alagar S (2024) Crossover smell agent optimized multilayer perceptron for precise brain tumor classification on MRI images. Expert Syst Appl 238:121453. https://doi.org/10.1016/j.eswa.2023.121453

Reddy CKK et al (2024) A fine-tuned vision transformer based enhanced multi-class brain tumor classification using MRI scan imagery. Front Oncol 14:1400341. https://doi.org/10.3389/fonc.2024.1400341

Brain MRI Images for Brain Tumor Detection. https://www.kaggle.com/datasets/navoneel/brain-mri-images-for-brain-tumor-detection

Kaggle Brain Tumor MRI Dataset. https://doi.org/10.34740/KAGGLE/DSV/1183165

Wang J, Lu S-Y, Wang S-H, Zhang Y-D (2024) RanMerFormer: Randomized vision transformer with token merging for brain tumor classification. Neurocomputing 573:127216. https://doi.org/10.1016/j.neucom.2023.127216

Nutt CL et al (2003) Gene expression-based classification of malignant gliomas correlates better with survival than histological classification. Cancer Res 63(7):1602–1607

Joshi AA, Aziz RM (2024) Deep learning approach for brain tumor classification using metaheuristic optimization with gene expression data. Int J Imaging Syst Technol. https://doi.org/10.1002/ima.23007

Bhuvaji S, Kadam A, Bhumkar P, Dedge S, Kanchan S (2020) Brain Tumor Classification (MRI). Kaggle. https://doi.org/10.34740/KAGGLE/DSV/1183165

Vankdothu R, Hameed MA, Fatima H (2022) A Brain Tumor Identification and Classification Using Deep Learning based on CNN-LSTM Method. Comput Electr Eng 101:107960. https://doi.org/10.1016/j.compeleceng.2022.107960

Sandhiya B, Kanaga Suba Raja S (2024) Deep Learning and Optimized Learning Machine for Brain Tumor Classification. Biomed Signal Process Control 89:105778. https://doi.org/10.1016/j.bspc.2023.105778

Üstebay S, Turgut Z, Odabaşı ŞD, Aydın MA, Sertbaş A (2023) A Machine Learning Approach Based on Indoor Target Positioning by Using Sensor Data Fusion and Improved Cosine Similarity. Electrica. https://doi.org/10.5152/electrica.2023.23080

Erdogmus P, Kabakus AT (2023) The promise of convolutional neural networks for the early diagnosis of the Alzheimer’s disease. Eng Appl Artif Intell 123:106254. https://doi.org/10.1016/j.engappai.2023.106254

Kabakus AT, Erdogmus P (2022) An experimental comparison of the widely used pre-trained deep neural networks for image classification tasks towards revealing the promise of transfer-learning. Concurr Comput. https://doi.org/10.1002/cpe.7216

Yan H, Qin Y, Xiang S, Wang Y, Chen H (2020) Long-term gear life prediction based on ordered neurons LSTM neural networks. Measurement 165:108205. https://doi.org/10.1016/j.measurement.2020.108205

Huang Z, Yang F, Xu F, Song X, Tsui K-L (2019) Convolutional Gated Recurrent Unit-Recurrent Neural Network for State-of-Charge Estimation of Lithium-Ion Batteries. IEEE Access 7:93139–93149. https://doi.org/10.1109/ACCESS.2019.2928037

Le X-H, Ho HV, Lee G, Jung S (2019) Application of Long Short-Term Memory (LSTM) Neural Network for Flood Forecasting. Water (Basel) 11(7):1387. https://doi.org/10.3390/w11071387

Başarslan MS, Kayaalp F (2023) MBi-GRUMCONV: A novel Multi Bi-GRU and Multi CNN-Based deep learning model for social media sentiment analysis. Journal of Cloud Computing 12(1):5. https://doi.org/10.1186/s13677-022-00386-3

Canlı H, Toklu S (2022) Design and Implementation of a Prediction Approach Using Big Data and Deep Learning Techniques for Parking Occupancy. Arab J Sci Eng 47(2):1955–1970. https://doi.org/10.1007/s13369-021-06125-1

Başarslan MS, Argun İD (2020) Prediction of Potential Bank Customers: Application on Data Mining. In: Hemanth DJ, Kose U (eds) Artificial Intelligence and Applied Mathematics in Engineering Problems: Proceedings of the International Conference on Artificial Intelligence and Applied Mathematics in Engineering (ICAIAME 2019). Springer International Publishing, Cham, pp 96–106. https://doi.org/10.1007/978-3-030-36178-5_9

Yenice M, Erdaş ÇB, Yenice EK, Kara C, Çelik İH, Işik DU (2023) Deep Learning and QR Code based Automated Diagnosis of Premature Retinopathy. In: 2023 Medical Technologies Congress (TIPTEKNO). IEEE, pp 1–4. https://doi.org/10.1109/TIPTEKNO59875.2023.10359202

Gao J, Bonzel C-L, Hong C, Varghese P, Zakir K, Gronsbell J (2024) Semi-supervised ROC analysis for reliable and streamlined evaluation of phenotyping algorithms. J Am Med Inform Assoc 31(3):640–650. https://doi.org/10.1093/jamia/ocad226

Hossain A et al (2023) A Lightweight Deep Learning Based Microwave Brain Image Network Model for Brain Tumor Classification Using Reconstructed Microwave Brain (RMB) Images. Biosensors (Basel) 13(2):238. https://doi.org/10.3390/bios13020238

Funding

Open access funding provided by the Scientific and Technological Research Council of Türkiye (TÜBİTAK).

Author information

Contributions

M.S. Başarslan: Defining the Methodology, Preprocessing the Dataset, Data Analysis, Experiments and Evaluations, Evaluations of the Results, Writing – Review & Editing, Writing – Original Draft.

Ethics declarations

Conflict of interests

The authors declare no competing interests.

Ethics approval

This article does not contain any data or other information obtained from studies or experiments with the participation of human or animal subjects. The study was performed on open-source data.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Başarslan, M.S. M-C&M-BL: a novel classification model for brain tumor classification: multi-CNN and multi-BiLSTM. J Supercomput 81, 502 (2025). https://doi.org/10.1007/s11227-025-06964-x

DOI: https://doi.org/10.1007/s11227-025-06964-x