Abstract

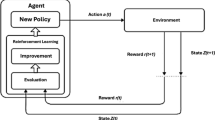

In the Internet of Things (IoT), wireless sensor networks (WSNs) play a crucial role in data collection and task execution. However, energy constraints, particularly in battery-powered WSNs, pose significant challenges. Energy harvesting (EH) technologies extend battery life but make the available energy variable, which can degrade quality of service (QoS). This paper introduces QoSA, a reinforcement learning (RL) agent designed to optimize QoS while respecting the energy constraints of IoT gateways. QoSA employs both single-policy and multi-policy RL methods to handle the trade-offs between conflicting objectives. This study investigates how well these methods identify Pareto-front solutions for optimal service activation. A comparative analysis highlights the strengths and weaknesses of each proposed algorithm. Experimental results show that the multi-policy methods outperform their single-policy counterparts in balancing these trade-offs, indicating their potential for real-world IoT applications.
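The abstract contrasts single-policy methods, which return one compromise solution per preference setting, with multi-policy methods, which aim to recover a set of Pareto-optimal solutions. The sketch below illustrates that distinction under stated assumptions; it is not the authors' QoSA code. It assumes each candidate service-activation policy is summarized by a hypothetical two-element vector (for example, a QoS score and a remaining-energy score), both to be maximized. The helpers `dominates`, `pareto_front`, `scalarized_choice`, and `hypervolume_2d` are illustrative names, and the 2-D hypervolume indicator is a common quality measure for Pareto-front approximations that is assumed here rather than taken from the abstract.

```python
from collections.abc import Sequence

Vector = tuple[float, ...]


def dominates(a: Vector, b: Vector) -> bool:
    """True if a Pareto-dominates b: no worse in every objective, better in one (maximization)."""
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))


def pareto_front(points: Sequence[Vector]) -> list[Vector]:
    """Multi-policy view: keep every point that no other point dominates."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


def scalarized_choice(points: Sequence[Vector], weights: Vector) -> Vector:
    """Single-policy view: a linear weighted sum collapses the objectives,
    so each weight vector selects exactly one point (the first maximizer)."""
    return max(points, key=lambda p: sum(w * x for w, x in zip(weights, p)))


def hypervolume_2d(front: Sequence[Vector], ref: Vector) -> float:
    """Area dominated by a 2-D front and bounded below by the reference point `ref`,
    which is assumed to be dominated by every point (maximization)."""
    hv = 0.0
    prev_y = ref[1]
    # Sweep from the largest first objective down; each improving point adds a horizontal strip.
    for x, y in sorted(front, key=lambda p: p[0], reverse=True):
        if y > prev_y:
            hv += (x - ref[0]) * (y - prev_y)
            prev_y = y
    return hv


if __name__ == "__main__":
    # Hypothetical (QoS, energy) scores for five candidate policies.
    candidates = [(0.9, 0.2), (0.7, 0.6), (0.4, 0.8), (0.5, 0.5), (0.3, 0.3)]
    front = pareto_front(candidates)
    print(front)  # -> [(0.9, 0.2), (0.7, 0.6), (0.4, 0.8)]
    print(scalarized_choice(candidates, (0.5, 0.5)))  # -> (0.7, 0.6)
    print(round(hypervolume_2d(front, (0.0, 0.0)), 4))  # -> 0.54
```

One design point the sketch makes visible: a linear weighted sum can only select points on the convex hull of the front. For the front `[(1.0, 0.0), (0.4, 0.4), (0.0, 1.0)]`, the Pareto-optimal point `(0.4, 0.4)` is never chosen by `scalarized_choice` for any non-negative weights summing to one, whereas `pareto_front` retains it.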

Data Availability

No datasets were generated or analyzed during the current study.

Author information

Authors and Affiliations

Contributions

Bakhtha Haouari proposed the methodology, implemented the algorithms, analyzed the results, and wrote the paper draft. Rania Mzid contributed by describing the methodology, supervising the research, and revising the manuscript. Olfa Mosbahi validated the methodology and reviewed the paper.

Corresponding author

Ethics declarations

Conflict of interest

The authors declare no conflict of interest.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Haouari, B., Mzid, R. & Mosbahi, O. Investigating the performance of multi-objective reinforcement learning techniques in the context of IoT with harvesting energy. J Supercomput 81, 515 (2025). https://doi.org/10.1007/s11227-025-07010-6

Accepted:

Published:

DOI: https://doi.org/10.1007/s11227-025-07010-6