Abstract

We present our work on developing and training scalable, trustworthy, and energy-efficient predictive graph foundation models (GFMs) using HydraGNN, a multi-headed graph convolutional neural network architecture. HydraGNN expands the boundaries of graph neural network (GNN) computations in both training scale and data diversity. It abstracts over message passing algorithms, allowing both reproduction of and comparison across algorithmic innovations that define nearest-neighbor convolution in GNNs. This work discusses a series of optimizations that have allowed scaling up the GFMs training to tens of thousands of GPUs on datasets consisting of hundreds of millions of graphs. Our GFMs use multitask learning (MTL) to simultaneously learn graph-level and node-level properties of atomistic structures, such as energy and atomic forces. Using over 154 million atomistic structures for training, we illustrate the performance of our approach along with the lessons learned on two state-of-the-art US Department of Energy (US-DOE) supercomputers, namely the Perlmutter petascale system at the National Energy Research Scientific Computing Center and the Frontier exascale system at Oak Ridge Leadership Computing Facility. The HydraGNN architecture enables the GFM to achieve near-linear strong scaling performance using more than 2000 GPUs on Perlmutter and 16,000 GPUs on Frontier.

Similar content being viewed by others

Explore related subjects

Discover the latest articles and news from researchers in related subjects, suggested using machine learning.Avoid common mistakes on your manuscript.

1 Introduction

Discovery of new materials with desired properties and accurate predictions of a material’s behavior throughout its entire life span are crucial to fundamental scientific progress in energy generation, transportation, electronics, and information technology [1]. Machine learning (ML) has shown great potential in accelerating the screening and preselection of materials for further experimental testing. In particular, deep learning (DL) models effectively captured relevant underlying relationships due to the arrangement of atoms of different constituents within various atomistic structures [2,3,4,5,6,7,8,9,10,11,12]. DL models trained on data generated from experiments and/or first-principles calculations can predict the properties of interest to be used as new inputs. This inference takes only a fraction of the time it would take to run an experiment or a full first-principles calculation while still producing sufficiently accurate results. This drastic reduction in time to predict material properties using atomistic information results in a promising path toward accelerating material discovery and design [13, 14].

However, generating vast volumes of experimental and/or first-principles training data is challenging even with sophisticated experimental facilities and powerful supercomputers. Recently, foundation models (FMs) have shown the promise to navigate around this challenge: Once pre-trained over a large volume of available open-source data [15], an FM would provide a jump-start to refined models by fine-tuning on significantly smaller amounts of data for customized applications (also called downstream tasks). Reducing the number of simulations and/or experiments for generating domain-specific training data also drastically reduces the energy costs of developing domain-specific DL models.

While state-of-the-art language-based FMs with a transformer architecture have reached promising results in several domains [16,17,18,19,20,21,22,23,24,25,26,27], they fail to capture important topological aspects of the atomistic structures. Therefore, alternative DL architectures need to be considered for the development of trustworthy (henceforth understood as simultaneously accurate and highly confident) FMs for materials using atomistic-scale information.

Since atomistic material structures for a generic type of compound can be mapped onto a graph (where atoms can be treated as nodes and interatomic bonds as edges), graph foundation models (GFMs) [28, 29], which are FMs that operate on data structures as graphs, are the candidate of choice for these applications. Currently, GFMs proposed in the literature are developed by training graph neural network (GNN) architectures on a sufficiently large and comprehensive dataset for the domain of interest. While several efforts have already been made to develop GFMs for atomistic materials modeling applications [30,31,32,33], the existing work is still at an incipient stage. Current efforts do not yet ensure that their proposed approach achieves trustworthiness. Moreover, serious concerns have been recently raised about the unaffordable amount of energy requested by proliferating AI centers due to computationally intensive tasks of training large FMs on large volumes of data.

Here we describe our work toward developing scalable and trustworthy supervised GFMs for the simultaneous prediction of energies and atomic forces with careful attention to energy consumption. The GFMs have been constructed using HydraGNN [34, 35], an open-source, fully scalable GNN architecture developed at Oak Ridge National Laboratory (ORNL). Experiments were conducted on two large US-DOE supercomputers: the Perlmutter petascale machine at National Energy Research Scientific Computing Center (NERSC) and the Frontier exascale system at Oak Ridge Leadership Computing Facility (OLCF).

The remaining of the paper is organized as follows. We discuss the current state of the art in Sect. 2. In Sect. 3, we discuss our approach toward developing a scalable framework and list the different optimizations for scalable training using HydraGNN. We discuss our use of large-scale hyperparameter optimization (HPO) and ensemble epistemic uncertainty quantification (UQ) to develop a trustworthy GFM. Section 4 shows the performance of different components of this work: reading large data, scaling the training process, performing HPO at large scale, and measuring epistemic uncertainty using an ensemble of HPO trials with high predictive accuracy and low energy costs. We conclude our study and discuss future work in Sect. 5.

2 Current state of the art

2.1 GNN training on open-source atomistic materials data

To date, there have been a few approaches proposed in the literature to develop GFMs for atomistic materials modeling. In [30], the authors proposed a multimodal approach where multiple encoding DL architectures are trained on different types of data representations and describing different physical quantities. The models are aligned to each other through a penalization term in the training loss function that forces latent vectors from each embedding space to coincide. However, the datasets used comprise only organic molecules, which cover only a relatively small set of natural elements on the periodic table.

In [32], the authors collected open-source datasets that provide labels for different properties of organic molecules. Using such a diverse collection of datasets, a GNN architecture is used for MTL in order to identify embedding spaces that capture meaningful correlations between the different labeled properties, with the promise that such an embedding space would reduce the data requirement on downstream tasks specific to organic chemistry. Since the model is trained on open-source datasets that describe only organic molecules, this approach is not transferable to inorganic compounds. Moreover, the authors compare the performance of different message passing neural network (MPNN) layers to construct the GNN architecture by performing computationally inexpensive hyperparameter tuning on small models with few parameters and transfer the use of such hyperparameters to models of much larger scale. While this approach helps limit the computational burden of HPO on large-scale GFMs, the best performing configuration of hyperparameters at small scale is not guaranteed to be the best performing configuration of hyperparameters at a larger scale and on a larger set of data, because the conclusions drawn from the HPO study are model and data dependent.

In [36], the authors developed a GFM trained on the Materials Project Trajectories (MPTrj) dataset [37], using an MPNN layer that is capable of modeling four-body interactions. As the authors themselves recognize in their conclusions, while the approach sheds light onto a promising path toward building effective GFMs for atomistic materials modeling, the impact of their work is limited by the fact that the GFM has a very small number of parameters that was deliberately maintained low due to computational limitations. Moreover, this reduces the expressivity of the GFM.

In [38], the authors propose a new kernel function for GNNs for modeling potential energy surfaces, which exploits chemical species information, and illustrate its efficacy in the context of transferable GFMs by pre-training the GFM on the Open Catalyst 2020 (OC2020) dataset [39] and illustrating its performance on a set of fine-tuning tasks. Since the GFM was pre-trained only on the OC2020 dataset, the applicability of this GFM is restricted to inorganic compounds.

While not explicitly presented by their developers as GFMs, there have been other models that cover broader sets of elements of the periodic table compared to the approaches mentioned in the previous paragraphs. In [31], the authors built a GNN model using MTL for simultaneous predictions of several material properties by training the GNN model on multiple datasets, including OC2020 [39] and Open Catalyst 2022 (OC2022) [40]. However, the approach considers only a single GNN architecture without performing HPO. Moreover, the set of parameters in the GNN model is relatively small, of the order of a few million parameters, which limits the attainable accuracy on large volumes of data.

In [33], the authors studied the scaling behavior of 2D molecular GNNs under various settings of depth, width, number of molecules, number of labels, the diversity in dataset, and architectural choice. The authors showed that supervised pre-training of large GNNs on molecular datasets provides a rich fingerprint embedding, which is useful for 38 downstream tasks. Even if this work very systematically studied the effect of GNN model size over the predictive performance in the pre-training and fine-tuning stage with diverse downstream tasks, the work has two important limitations: It only considers 2D graphs and it addressed only organic compounds.

Several UQ methods have been applied to GNNs [41], including Bayesian GNNs [42], prediction interval methods [43], and deep ensemble methods [44]. Bayesian methods are theoretically rigorous but challenging to scale to high-dimensional data. Prediction interval methods are cost-effective but often require tedious tuning of heuristic parameters.

Compared to the scientific contributions mentioned above, our work distinguishes itself by leveraging extreme-scale supercomputing resources to ensure trustworthiness of the GFMs by performing (i) a scalable data management and in-memory data store, (ii) a systematic large-scale HPO across a broad set of GNN architectures, and (iii) a large-scale ensemble learning (EL) for UQ, which realizes a judicious compromise between cost and performance.

2.2 Scalability and GPU optimization for GNN training

The effect of the specific algorithmic characteristics of GNNs on performance benchmarking has been carried out on GPUs [45], where the authors noted that GNN training differs significantly from conventional convolutional networks (CNNs) in that only 25% of the execution time is spent on dense and sparse matrix multiplications compared to 50% in CNNs. Moreover, the execution time to process graph samples in GNNs was noted to vary greatly according to the size of the graph (number of nodes and number of edges) of the input data. The studies conducted in this work showed that the majority of the time during GNN training was spent on integer operations, sorting, index selection, reductions, and scatter–gather operations needed for nodal and edge feature updates with message passing. Multi-GPU scaling was reported using up to 4 GPUs, showing about 20-50% strong scaling efficiency between 1 and 4 GPUs. Similar remarks apply to refs. [46,47,48,49], which characterize subdivision of large graphs among processors and parallel aggregation during convolution steps.

These are useful conclusions for optimization of GNN training on large graphs (i.e., with millions of nodes), but need to be re-evaluated for our datasets. Indeed, training on large graphs is highly sensitive to the splitting scheme used to partition the graph into subgraphs and to distribute them among processors. However, for the atomistic materials modeling applications addressed in our work, the graph samples are small (with at most a few hundreds of nodes). In particular, the GNNs convolution on a batch of such samples will have a much more local, block diagonal structure. Therefore, throughput should be less sensitive to the choice of atomistic structures per batch.

Using a larger number of GPUs, the developers of the PyTorch framework for distributed data parallelism (DDP) showed the benefit of overlapping computation with communication, showing near-linear scaling using up to 256 NVIDIA Tesla V100 GPUs [50]. These preliminary scaling results focused on DDP for training of DL model using a moderate volume of data.

2.3 HydraGNN

The complexity of the physics and the scale at which atomistic structures must be studied in response to US-DOE needs in materials science makes it compelling to develop GNN capabilities that simultaneously satisfy several important algorithmic and computer science requirements. Specifically, a GNN architecture must provide: (1) capabilities to read and process data from multiple sources simultaneously, (2) flexibility to support diverse DOE-relevant scientific applications, (3) capabilities to scale the training on leadership class supercomputing facilities, (4) portability across heterogeneous computing environments, (5) continuous software maintenance by ensuring support and compatibility with upgraded software dependencies, and (6) maintained documentation to support new users across a broad set of international institutions.

While several GNN architectures have been made available as open-source tools to the scientific community in the last few years [51,52,53,54], none of these tools satisfies all of the above requirements. Moreover, including missing capabilities on these well-established GNN libraries requires invasive and laborious modifications for software redesign. These challenges arising from existing GNN implementations motivated our effort in developing HydraGNN [34, 35], which we used as underlying GNN architecture to develop and train our GFM. HydraGNN already provides:

-

MTL capabilities to process multi-source, multi-fidelity data [55];

-

object-oriented programming capabilities to use different MPNN layers [56], which allows flexible switching between different message policies based on the scientific needs of the specific application at hand, as well treating the MPNN layer as a tunable categorical hyperparameter with HPO;

-

invariant and equivariant features that reduce computational redundancy and time to solution [57], therefore contributing to energy saving;

-

scalable input/output (I/O) data management techniques to efficiently scale the training of GNN models on millions of data samples using thousands of GPUs on supercomputing facilities [58]; and

-

portable capabilities that allow conveniently running the GNN training on diverse computing platforms with different hardware and software specifications.

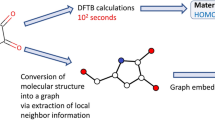

Fig. 1 illustrates the forward pass of the HydraGNN architecture. For graph-structured input data \({\mathcal {G}}= ({\mathcal {V}}, {\mathcal {E}})\), we denote the set of nodes as \({\mathcal {V}}\) and the set of edges as \({\mathcal {E}}\), and the corresponding number of nodes as \(|{\mathcal {V}}|\) and the number of edges as \(|{\mathcal {E}}|\). The input data required by HydraGNN consists of node features \({\varvec{H}}\in {\mathbb {R}}^{h_\text {input}\times |{\mathcal {V}}|}\) and graph connectivity matrix \({\varvec{A}}\), which is a binary matrix with \(|{\mathcal {V}}|\) rows and \(|{\mathcal {V}}|\) columns, such that the entry \({\varvec{A}}(i,j)\) is equal to one if nodes with indices i and j are directly connected by an edge in the graph \({\mathcal {G}}\); otherwise, it is equal to zero. The input features and their dimension \(h_\text {input}\) are determined by the task. For our application, each data batch contains |B| atomistic structures, and each graph sample is associated with a vector of integers \(B({\varvec{A}}) \in {\mathbb {N}}^{|{\mathcal {V}}|}\). Each graph sample within a data batch is uniquely identified by an integer value between 1 and |B|, and this value is used to set the entries of the vector \(B({\varvec{A}})\) to identify which nodes belong to which graph within a data batch. Denoting with L the number of MPNN layers and with \(1\le l\le L\) the index of a generic MPNN layer, the input node features \({\varvec{H}}\) are updated into the hidden features \({\varvec{H}}^l\) with hidden dimension \(h_\text {mpnn}\times |{\mathcal {V}}|\) at each layer l. The hidden features are then extracted from the nodes to the graph batch using a batch pooling function and the information contained in the integer-valued vector \(B({\varvec{A}})\). The hidden features computed by the graph pooling are denoted with \(\tilde{Y}\) and passed as input to an additional stack of C layers. After applying channel mixing operations corresponding to the channel mixing layer denoted with index \(1\le c \le C\), the features \(\tilde{Y}\) are updated into a new set of internal features \(\tilde{Y}^c\) with layer-dependent feature dimensions \(h_c\times |B|\). The final predictions are made in a multitask format with N outputs of task-dependent dimension \(h_n\times |B|\). The non-task-dependent layer count and feature dimensions \(\{L,C,h_\text {mpnn},h_c\}\) are treated as hyperparameters and are tuned with HPO.

HydraGNN supports several MPNN layers, including some that build invariant and/or equivariant features. Invariant and equivariant message passing layers are a specialized type of MPNN layers designed to respect certain symmetries in the input data. It ensures that the output of the layer transforms in a predictable and consistent way under transformations of the input. This is particularly useful in atomistic materials modeling because symmetries play a crucial role in determining properties such as energy and atomic forces. In an invariant MPNN, the hidden features \({\varvec{H}}^l\) remain unchanged under the action of a group, which in our case is the Euclidean group in three dimensions, denoted as E(3). The E(3) group encompasses all rotations, reflections, and translations in three-dimensional space. In an equivariant MPNN, the internal features \({\varvec{H}}^l\) transform consistently under the action of a group. Equivariance ensures that if the input features and positions are transformed by a group element (e.g., rotation, reflection, or translation), the output features and positions transform in a corresponding manner, maintaining consistency with the group action. To preserve invariance and equivariance in the message passing policy, E(3)-invariant and E(3)-equivariant MPNNs use invariant feature embeddings to construct invariant and equivariant features between two nodes, and use these features in the construction of the message.

Figure 2 details the object-oriented programming structure utilized by each of the MPNN models in HydraGNN. The inheritance of the Base class for each MPNN enables the seamless change between message passing policies. It also enables a direct comparison between MPNNs in a unified framework of the Base class.

HydraGNN uses the PyTorch [59, 60] software for automatic differentiation and the PyTorch Geometric [61, 62] software for message passing. The architectural hyperparameters that determine the HydraGNN model size and complexity can be set in a configuration file to tune the model training and inference process easily. Overall, HydraGNN is developed and maintained as a high-quality software product for large-scale training and development of ML models [34]. HydraGNN runs on computing platforms with significantly different hardware and software specification, including personal laptops, and clusters with moderate scale other than supercomputing facilities. For more details about installation requirements and instructions on how to run HydraGNN, we refer the reader to the HydraGNN Wiki webpage https://github.com/ORNL/HydraGNN/wiki and to the user manual [35].

3 Our contribution

The work described in this manuscript is the first-of-its-kind large-scale training of GFMs for atomistic materials modeling using over 154 million atomistic structures as data samples and using over \(91\%\) of the exascale supercomputer Frontier. We have used three key techniques for developing a scalable and trustworthy GFM: 1) scalable data management using a scientific data management library and an in-memory data store, 2) scalable HPO that uses asynchronous Bayesian optimization for efficiently managing computing resources, and 3) ensemble methods for UQ that allows model generalization and concurrently training multiple models. These three advancements collectively enhance the robustness, efficiency, and scalability of the GNN training process.

Compared to previous studies, our work shows near-linear scaling using 10x more GPUs and using much larger volumes of data, which introduces important challenges in I/O that we addressed to reduce computational bottlenecks and minimize communication overheads. Moreover, our results are generated using GPUs of newer generations, namely NVIDIA A100 installed on NERSC-Perlmutter and AMD Instinct™ MI250x installed on OLCF-Frontier, thereby showing that our scaling efficiency is also transferable across technologies manufactured by different vendors.

3.1 Data aggregation

Using large datasets for GFM training is expected to enhance generalizability and ensure resilience to data variance issues that typically arise during downstream tasks. To this end, we aggregated five open-source atomistic materials modeling datasets that are extremely diverse in terms of chemical composition, atomistic configurations, and number of atoms in the system. These datasets, as listed in Table 1, are: ANI1x, QM7x, OC2020, OC2022, and MPTrj.

-

ANI1x [63] consists of over 4,956,005 atomistic structures derived from up to 57 thousand distinct molecular configurations containing the C, H, N, and O chemical elements.

-

QM7x [64] is a comprehensive dataset of 42 physicochemical properties for approximately 4.2 million equilibrium and non-equilibrium structures of small organic molecules with up to seven non-hydrogen atoms from the C, N, O, S, Cl chemical elements.

-

OC2020 [39] provides 1,281,040 density functional theory (DFT) relaxations (134,890,000 single point calculations) across a range of oxide materials, coverages, and adsorbates.

-

OC2022 [40] provides 62,331 DFT relaxations (9,854,504 single point calculations) across a range of oxide materials, coverages, and adsorbates.

-

MPTrj [37]: the version of the dataset from 2020 provides DFT calculations for 83,988 atomistic structures of inorganic materials.

Each dataset is unique for the chemical compositions and the number of atoms in the atomistic structures of the compounds described. Figure 3 shows the distribution of the number of atoms and graph edges per atomistic structure for each dataset. For the MPTrj dataset, approximately half of the atomistic structures are relatively small in size. On the other hand, the OC2020 and OC2022 datasets consist of a more even distribution of atomistic structures with different sizes and edge counts, with the larger structures consisting of over 400 atoms and over 12,500 edges. In total, the data used for training, validating, and testing our GFM consisted of over 154 million atomistic structures that cover more than two-thirds of the periodic table and consume 5.3 terabytes of storage space. These datasets were preprocessed using a scientific data management library into a common format for efficient storage and I/O, as discussed in Sect. 3.3.

Figure 4 provides the heatmap that illustrates the frequency of occurrence of each element of the periodic table across the entire dataset that results from the aggregation of the datasets ANI1x, QM7-X, OC2020, OC2022, and MPTrj. We notice that there is an underrepresentation of the elements from the transition metal groups. This is partially due to the fact that first-principles calculations for materials including these elements (e.g., alloys) are more computationally expensive, and thus are available in smaller volumes with respect to first-principles data for other classes of materials.

3.2 Data cleaning and preprocessing

Some of the atomistic structures were determined to have unrealistic values for atomic forces (on the order of 20,000 eV/angstrom). Most likely these corresponded to configurations visited at early stages of energy minimization during the generation of these datasets. To eliminate these outliers, we first applied a data cleaning operation in which we discarded all atomistic structures with an \(L^2\)-norm (also known as spectral norm) of the force tensor above 100 eV/angstrom to ensure that these data samples did not affect the training of our GFMs. The number of data samples removed from each dataset by this filtering operation is reported in Table 2.

Datasets from different sources were generated with different electronic structure methods, leading to global shifts in the energy calculated for each element of the periodic table, and consequently also on the energies of the organic and inorganic compounds obtained by combining multiple elements. In order to realign the multi-source multi-fidelity data, we adopted the procedure proposed in [65] to transform the energy of each atomistic structure during preprocessing by subtracting a linear regression term. For each dataset, the linear regression term was calculated by solving the following least-squares problem:

where \(e^i_0\) is the reference energy for the atomistic structure i and \(n^i_Z\) counts the number of atoms of element number Z belonging to the atomistic structure i. By separately determining the regression coefficients \(C_Z\) (with the dimension unit of eV/atom) for each dataset, we were able to remove the largest source of variability between datasets. This led to a more consistent training of the GFMs using the labeled energy values. Even if the used datasets cover only two-thirds of the natural elements of the periodic table, we built the linear regression model to account for all the 118 elements (natural and artificial) of the periodic table to ensure that our software infrastructures accommodates future generalizations of our current approach.

The histogram of the values of the energy per atom before and after removing the linear regression term and \(L^2\)-norm of forces after removing data samples with force values unreasonably high is shown in Fig. 5. The partition of each dataset into training, validation, and testing has been performed using the 80%–10%–10% splitting. Figure 6 shows the histograms of the distribution of energies and atomic forces for the training, validation, and testing subsets of each dataset.

Normalized histograms for each dataset of the total energy per atom (top), energy per atom after removing the linear regression term from each dataset (center), and the \(L^2\)-norm of the force tensor (bottom) for each dataset after removing data samples with \(L^2\)-norm of the force tensor unreasonably higher than 100 eV/angstrom

3.3 Scalable data management

HydraGNN implements two optimization strategies that address scalability challenges due to the large volume of data used for training. These strategies aim for: 1) efficient storage and reading of large training data, and 2) fast reading of batch data during the training process. Atomistic materials modeling datasets are typically exported as collections of large numbers of files. Storing them on a shared parallel file system (PFS) and then reading data from files during the training process cause a severe I/O bottleneck during training. Multiple datasets that cumulatively contain tens of thousands of small files exert significant pressure on the PFS’s metadata service. Additionally, frequent access by multiple GPUs from the PFS during training loops results in a substantial slowdown in the training process. To address these issues, we adopted a two-pronged approach to manage large data and reduce the I/O overhead for training the GNN model. First, we preprocessed the input datasets and stored their graph representation in a scientific data format. Secondly, we used a distributed in-memory data store to load data into memory for fast shuffling of data samples during the training process.

3.3.1 ADIOS for high-performance I/O

Several publicly available atomistic materials modeling datasets are stored using bespoke schemas and exported as large collections of files. For example, the OC2020 dataset [39] consists of over 50,000 files. Storing multiple such datasets adds prohibitively high metadata overhead on the PFS and leads to slow data ingestion during the training process. For efficiently storing and reading large volumes of training data, we use the ADIOS [66] scientific data management library, which provides a state-of-the-art solution for managing extreme-scale data. ADIOS is designed to provide scalable I/O on the largest supercomputers in the world and has been successfully used in science applications that write and read several petabytes in a single simulation run.

An ADIOS file is stored in a hierarchical, self-documenting format that consists of a directory with sub-files and metadata files. Data is stored in ADIOS variables and is automatically distributed across several ‘sub-files.’ Users only focus on creating variables and issuing read/write calls, leaving the storage format and organization to ADIOS. For example, we store graph node features in a large array which is automatically distributed among several sub-files when it is written to the ADIOS file. ADIOS internally maintains metadata to track the structure and organization of data. The number of sub-files controls the concurrency level while reading data in parallel. This n:m pattern in which n processes concurrently read data from m sub-files is pivotal to obtaining high read performance. ADIOS provides several options to tune I/O performance, including configuring the number of sub-files.

The conversion of raw input data into ADIOS is performed as a separate preprocessing step. In HydraGNN, we have developed custom data writer and reader modules to store graph data in the ADIOS format and retrieve it during the training process. Raw data is initially read into PyTorch objects, which are then serialized into the ADIOS format following a pre-defined schema. This schema defines the mapping of graph data to ADIOS objects.

The data samples are organized into three logical groups of variables in the ADIOS file: ‘trainset’ for training data, ‘valset’ for validation data, and ‘testset’ for evaluating model performance. Each group further stores molecular data and associated metadata across multiple ADIOS variables. Node-level and graph-level features, which represent atomic and molecular properties, respectively, are stored as multi-dimensional arrays where each dimension corresponds to a specific feature. This approach enables efficient data retrieval as data for multiple molecules can be read in a single I/O request. Key attributes such as the energy, forces, edge attributes, edge connectivity, the number of atoms per molecule, and the 3D position of atoms are similarly stored as vectors or n-dimensional arrays within the ADIOS file. User-defined metadata provides detailed explanations about the graphs and the dataset within the ADIOS file, offering valuable context and additional information about the dataset.

3.3.2 DDStore

Different approaches for shuffling data during the training process. In a), data is read from the shared file system in which each graph object is stored in its own separate file (high file system metadata overhead). In b), data is read from an ADIOS file (low metadata overhead, high I/O bandwidth). In c), all data is read once into DDStore [67], an in-memory data store which uses MPI one-sided RMA operations to obtain data from remote processes (best performance)

DDP [68,69,70,71,72] enables parallel training by distributing the training data across available compute resources. The data is divided into batches, referred to as shards, with each GPU processing one shard at a time. During training, all shards processed by the different processes collectively complete an epoch. Traditionally, these data shards are stored in the PFS and read at the start of each epoch. However, frequent access to the PFS, even with high-performance libraries like ADIOS, is computationally expensive, as I/O operations on PFS are among the slowest in a computing system. Furthermore, shuffling data to improve model generalization can be constrained when relying on the PFS. On-the-fly re-sharding poses additional challenges due to the significant volume of data and the throughput limitations of the PFS.

To provide fast data retrieval during training, we used DDStore [67], a distributed data store that provides in-memory data transfer between processes. When training begins, processes read data from ADIOS files and load it into the node’s memory, which maintains a global map of data samples on each process. When a GPU requests a new batch of data, DDStore fetches the data from remote processes’ memory using low-latency, fast communication techniques rather than reading data from the PFS. By restricting access to the PFS to the initial bootup phase, DDStore ensures that obtaining a batch is a fast, in-memory operation. Experiments described in [67] show that it leads to a 6\(\times \) speedup in overall training time.

DDStore provides options to tune the size of data chunks stored on each process (chunking), replicating a dataset on internal subgroups of processes (replication), and the communication mechanism selected for fetching data. For our experiments, data is split evenly among all processes, and a single replica of the dataset is maintained across all processes. For efficient data retrieval, the low-latency MPI one-sided remote memory access (RMA) operations were used. Figure 7 shows the data loading and caching approach used by DDStore compared to traditional approaches that read data directly from the file system. Section 4 shows the time taken to obtain a batch of data samples for different model sizes and node counts.

3.4 MTL for prediction of energy and atomic forces

MTL uses a single DL architecture to simultaneously predict multiple quantities [73] and allows for a natural and automated incorporation of physics knowledge into the model by extracting correlations between the properties predicted, with manual intervention by a domain expert only needed in determining which quantities to use. MTL is useful for developing GFMs, as it induces the model to use underlying physical correlations to develop physics-informed features that can be transferred on several downstream tasks. In this work, we choose energy and atomic forces as quantities to predict simultaneously. Denoting the number of atoms in an atomistic structure with n and the Cartesian coordinates of the position of the nuclei of atom i (where i can be any integer between 1 and n) with \(\textbf{x}_i\in {\mathbb {R}}^3\), the relation between the energy \(e \in {\mathbb {R}}\) and forces \(\textbf{f}_i \in {\mathbb {R}}^3\) acting on atom i is

In HydraGNN, each predicted quantity is associated with a separate loss function and the global objective function minimized during the training is a linear combination of the individual loss functions. We denote by \(\textbf{F}\in {\mathbb {R}}^{N\times 3}\) the atomic force tensor that contains the atomic forces acting on all the N atoms

where T represents the transposition of a vector. Denoting by P the total number of parameters to train in the GFM architecture, the MTL loss function \(\ell _\textrm{MTL}:{\mathbb {R}}^{P}\rightarrow {\mathbb {R}}^+\) is:

where \(\Vert \cdot \Vert _1\) represents the \(L^1\)-norm of a vector or the induced tensor norm, e and \(\textbf{F}\) represent the true values of energies and forces, \(\textbf{e}_{\text {predict}}\) and \(\textbf{F}_{\text {predict}}\) are the corresponding predictions given by HydraGNN, and the scalar positive weights \(\alpha _\textrm{energy}\) and \(\alpha _\textrm{forces}\) are used to calibrate the individual terms of the loss function for each individual properties. We used the \(L^1\)-norm in the training loss function because it is more robust against outliers than the \(L^2\)-norm. Similarly to [65], we calibrate the scalar positive weights \(\alpha _\textrm{energy}\) and \(\alpha _\textrm{forces}\) to account for the fact that the energy is a global quantity, while the atomic forces are local quantities. Specifically, we set the values of these scalar weights to \(\alpha _\textrm{energy}=1\) and \(\alpha _\textrm{forces}=100\). An illustration of the main components of the HydraGNN architecture used for MTL is described in Fig. 8.

3.5 Scalable HPO

Compared to other DL architectures, GNNs are known for their advantage in learning from graph-structured atomistic materials modeling datasets because they can use the sparsity of the graph connectivity to reduce the training cost by replacing dense tensor algebra operations with sparse ones. However, to achieve high predictive accuracy across chemically diverse datasets, it is essential to fine-tune the hyperparameters of HydraGNN. The task of identifying optimal hyperparameter settings is daunting and has been extensively documented in existing literature [74,75,76,77,78,79]. Manual tuning requires extensive experimentation and often results in sub-optimal performance.

To perform HPO at large scale, we used DeepHyper [80], an open-source Python package designed for optimizing hyperparameters, searching for optimal neural architectures. Specifically, we used asynchronous Bayesian optimization that continuously refines a surrogate model by sampling hyperparameter configurations. The efficacy of DeepHyper’s asynchronous Bayesian optimization has been demonstrated across various DL benchmarks, outperforming methods such as random search, genetic algorithms, and Hyperband in environments equipped with CPUs and GPUs. In the DeepHyper setup, a manager node refines the surrogate model and suggests promising configurations while worker nodes perform the evaluations. Our approach uses a centralized architecture with process-based parallelism, optimizing the allocation of tasks across computing nodes to avoid bottlenecks.

Message passing is the core methodology of GNN models since it prescribes how features of nodes and edges are updated using information contained in neighboring nodes and edges. Various MPNNs have been developed and tailored for different atomistic systems, such as SchNet [81] for organic molecules and CGCNN [82] for solid-state crystals. However, when considering GFMs applicable to a broad range of systems in atomistic materials, it is not practical to confine ourselves to a specific MPNN method. In HydraGNN, the choice of MPNN is configurable through a hyperparameter, allowing the users to select the model that best suits their applications. We include MPNN as a categorical hyperparameter in the HPO runs to allow for the identification of the best performing MPNN layers for the assigned training data.

In HPO, early termination strategies are vital for improving the utilization of computational resources by discarding unpromising candidates based on their performance trends. This decision has proved effective early in the training process [83]. DeepHyper provides three early discarding techniques suited for asynchronous and parallel environments: (1) asynchronous successive halving, which progressively eliminates candidates based on their interim performance; (2) learning curve extrapolation, which predicts future performance from early data and facilitates early termination; and (3) constant fidelity, which sets a fixed resource allocation for each candidate before deciding whether to continue. For our tests, we used constant fidelity as it enables efficient reallocation of resources toward more promising configurations and significantly enhances operational efficiency in large-scale, distributed computing environments. We used 10 epochs as a stopping criterion for each model training in the HPO phase. While HPO has been previously explored for GNNs, our approach uses HPO on a scale previously unattempted.

3.6 Scalable UQ with GNN Ensembles

Ensemble methods are widely utilized in UQ to compile predictions from various models, termed ensemble members, into a unified forecast. The goal of these methods is to enhance model generalization by drawing on the diverse capabilities of each individual model [84]. To promote a varied set of predictions, practices such as different model initializations, techniques like bagging and boosting, and the integration of diverse network architectures are used. Research conducted by Egele et al. [85] showed that expanding the variety of network architectures within an ensemble can improve its diversity, thereby increasing the reliability of uncertainty assessments. They also developed a technique for concurrently training multiple candidate models, which optimizes the use of computational resources. Ensemble methods are acknowledged for their ability to deliver reliable uncertainty estimates and their ease of implementation and scalability, making them practical for various UQ applications.

To account for model (epistemic) uncertainty, we employ ensembles consisting of multiple neural networks (NNs). Our approach involves considering a collection of GNN models generated by DeepHyper, denoted by \({\mathcal {C}} = \{\theta _i, i=1,2,\cdots ,c\}\). We then select K models from this collection to form the ensemble, where \({\mathcal {E}} = \{\theta _i, i=1,2,\cdots ,K\}\) and K denotes the ensemble size. For an input graph G, the ensemble’s prediction is the average of prediction from all model members \(f_{\theta _i}\),

and the uncertainty is measured as the standard deviation (STD),

Our method offers notable advantages in terms of generality and scalability. Central to our approach is the construction of model ensembles, which relies on scalable HPO. This methodology can be applied to any type of NN model. The process begins with using a standard NN architecture, conducting HPO, selecting the most suitable models, and subsequently producing uncertainty estimates. The scalability of our method is anchored in both the scalable nature of the hyperparameter search and the ability to train ensembles efficiently. Working with an ensemble of models enables many options for building consensus models, uncertainty estimation, and active learning [84].

4 Performance measurements

4.1 Setup

For strong and weak scaling studies, we utilize three different sizes of GFM architectures, denoted as SMALL, MEDIUM, and LARGE. They differ in the total number of parameters, ranging from approximately 60,000 to 163 million. Table 3 provides details about the three model sizes.

Experiments were conducted on two DOE supercomputers: Frontier at ORNL and Perlmutter at NERSC. Both systems provide state-of-the-art GPU-based heterogeneous architectures. At the time when the GFM training was performed, Frontier, which is located at the Oak Ridge Leadership Computing Facility at ORNL, was the world’s fastest supercomputer [86]; now it is the second. It comprises a total of 9,408 compute nodes, each featuring a single 64-core AMD EPYC 7763 (Milan) CPU and four AMD Instinct MI250X GPU accelerators, effectively providing eight GPU units per node. Running with one rank per GPU unit, each rank has 64 GB of DDR4 (CPU) and 64 GB of HBM2e (GPU) memory.

Perlmutter, a supercomputer at National Energy Research Scientific Computing Center (NERSC), features approximately 3000 CPU-only nodes and 1800 GPU-accelerated nodes. Our work utilizes only the GPU-accelerated nodes. Each node is equipped with an AMD EPYC 7763 CPU and four NVIDIA Ampere A100 GPUs interconnected via NVLink-3. Running with one rank per GPU unit, each rank has 64 GB of DDR4 (CPU) and 40 GB of HBM2 (GPU) memory. Both Frontier and Perlmutter use HPE Cray \(\hbox {Slingshot}^\mathrm {(TM)}\) interconnects.

To aid in monitoring HydraGNN execution in real-time for a subset of the analysis carried out on Frontier, an AMD Research utility, Omnistat [87], was used to sample a variety of GPU telemetry metrics including occupancy, high-bandwidth memory (HBM) usage, power, temperature, energy consumption, and clock/memory frequencies on a per GCD basis across all nodes assigned to an individual run. This Python-based stand-alone utility was executed entirely in user space implemented as a Prometheus client on each assigned compute node and combines low-overhead sampling via AMD’s system management interface (SMI) at fixed intervals with a temporary Prometheus server [88] instantiated on one CPU core of the master compute host per batch job. Omnistat needed no changes to the application code. Furthermore, minimal job overhead (less than 0.5%) was observed when running HydraGNN training with this approach for sampling intervals down to one second.

4.2 I/O performance for reading large data

In Sect. 3.3, we described using the ADIOS scientific data management library for fast storage and retrieval of large training data. In this section, we show the performance of reading large data in HydraGNN for training models.

Of all the datasets used in this study, the Open Catalyst 2020 dataset is the largest in terms of number of atomistic structures, storage size of the dataset, and number of files across which data is stored. The original dataset consists of over 50,000 files. The dataset was preprocessed into ADIOS and was configured to use just over 50 ADIOS sub-files, which led to a 1000\(\times \) reduction in the metadata footprint.

When training begins, HydraGNN reads ADIOS data in parallel on all processes. This read operation is a two-step process in which first the root process obtains the number of graphs (atomistic structures) followed by the size (number of atoms) and the feature metadata for each graph. This information is broadcast to all other processes that implicitly distribute the graphs evenly among themselves and concurrently read their assigned graphs from the ADIOS file. This set of operations is performed for all atomistic structures groups—training set, validation set, and testing set.

Figure 9 shows the reading performance of the training data on Frontier when all processes read their assigned graphs in parallel. We obtain over 8 terabytes/second for higher node counts and almost 2 terabytes/second on 128 nodes. The high I/O bandwidth is a characteristic feature of the ADIOS library as it permits multiple processes to read data spread over multiple ADIOS sub-files efficiently. A similar run on Perlmutter was not possible due to PFS issues encountered on the system during our study.

The initial step in which the root process reads several small portions of the dataset and broadcasts them is an inherently sequential set of operations. As this slows the overall I/O, we obtain lower I/O bandwidth as the root process performs these tasks for the trainset, valset, and testset data groups to read a total of approximately 500 Gigabytes of initial data. Figure 10 shows the sustained I/O bandwidth achieved when HydraGNN reads the entire OC2020 dataset, which is 4.3 terabytes in size. We obtain a net bandwidth of over 120 Gigabytes/second on Frontier, which allows HydraGNN to ingest the full collection of 120 million graphs in just over 30 s.

Read performance for the entire OC2020 dataset on Frontier that includes training, validation, and testing data. We obtain over 120 Gigabytes/second (approximately 35 s) for reading 4.3 terabytes of data. We will apply techniques to read user metadata in parallel to improve the overall read performance of the complete dataset

4.3 HydraGNN training scaling results

We now analyze the scaling performance of HydraGNN on Frontier and Perlmutter. We present weak and strong scaling trends, along with a breakdown of component operations in HydraGNN as we scale it up. Experiments were performed with up to 2,048 nodes on Frontier and 256 nodes on Perlmutter using the three model sizes discussed in Table 3.

4.3.1 Weak scaling

For the weak scaling runs, we configured each GPU to process 3,500 atomistic structures equally. Figure 11 shows the weak scaling performance on Perlmutter and Frontier as we vary the number of GPUs used for the training. The reported time represents the average training time per epoch. We conducted experiments with up to 2,048 GPUs on Frontier and 1,024 GPUs on Perlmutter. The number of GPUs on Perlmutter was dictated by constraints on available node hours. We observe that the parallel efficiency of weak scaling experiments drops as we increase the number of GPUs beyond 256 for both Perlmutter and Frontier. This is attributed to increased communication costs as we scale the number of GPUs and the overhead associated with using varying graph sizes.

Figure 12 provides a breakdown of the overhead of different components of HydraGNN used in the weak scaling experiments. The terms ‘forward’ and ‘backward’ represent the forward and backward phases of the DL model training, respectively, and ‘dataload’ denotes the cost of obtaining the next batch of data samples from DDStore after a GPU finishes processing its current batch. We notice that ‘dataload’ has a fixed cost, which expectedly becomes more prominent for the small model size and is only a fraction of the runtime as the model size increases. The forward and backward phases show an increase in runtime as we scale up the workflow as synchronization and communication operations become more expensive with increasing GPU counts.

4.3.2 Strong scaling

For strong scaling runs, we trained HydraGNN on a subset of the entire data available, comprising 120 million atomistic graphs (approximately 4TB in size), on Frontier and 2 million atomistic graphs on Perlmutter for the three model sizes. Figure 13 shows the scaling results for 512 to 16,384 GPUs on Frontier and from 64 up to 2,048 GPUs on Perlmutter. The reported time is the average training time per epoch, similar to the weak scaling measurements. While the SMALL model’s performance deviates from the optimal linear dotted line after 2,048 GPUs on Frontier, the MEDIUM and LARGE models maintain close to linear scaling up to 16,384 GPUs on Frontier. We notice a similar trend on Perlmutter where we observe near-linear scaling up to 2,048 GPUs for all model sizes.

The drop in scaling performance is attributed to load imbalance—a known artifact of small graph sizes. As shown in Fig. 3, we use a diverse dataset where graph sizes vary by up to 400 nodes, and the number of edges in the larger graphs exceeds 12,500. This results in an imbalanced workload among GPUs in each batch, causing some GPUs to finish training before others. As GPUs must synchronize for exchanging model weights, the runtime is dominated by the GPUs that must train on larger graphs. Effectively, this leads to substandard utilization of compute resources and poses a challenge toward achieving high-performant, scalable training. Therefore, while training on large volumes of data can help develop robust models because of the diverse nature of data, the computational performance may suffer as the workload can vary greatly.

The EGNN model we used is particularly vulnerable to this problem. The time required for forward calculations in EGNN is directly proportional to the number of edges in the graph sample. For datasets with highly variable edge counts between graph samples, the likelihood of load imbalance between GPUs increases. Figure 14 illustrates the time spent on the forward task in the EGNN model with different model sizes. We observe an almost linear relationship between forward time and graph size at each batch (measured by the number of edges). The SMALL models show large variances on both machines, which is expected due to system noise being more consequential for smaller model sizes. Significant performance differences (e.g., the difference between minimum and maximum time) are observed due to the varying graph sizes in our datasets. However, for other tasks (data loading and backward), we do not observe a similar correlation, as they are agnostic of the graph size. Figure 15 illustrates the average percentage of time spent waiting during three tasks: data loading, forward pass, and backward pass. It highlights a significant waiting period during the forward pass, primarily due to varying graph sizes. This waiting time increases as the disparity in graph sizes among GPUs grows. Other tasks, such as data loading and backward pass, also involve waiting time, but to a much lesser extent.

To quantify the degree of load imbalance between GPUs, we compute the load imbalance factor (LIF) defined by the ratio

where \(T_{max}\) and \(T_{avg}\) represent the maximum runtime and the average runtime for training an epoch, respectively, among all computing resources (GPUs in our case). These times represent the time to perform training (forward and backward calculations) and do not include wait times during synchronization. For a well-balanced workload, LIF approaches 1.0 from above, whereas it increases as the workload imbalance increases. Figure 16 presents the LIF scores that show the imbalance among processes. The trend remains consistent: While data loading and backward pass exhibit nearly balanced workloads (with scores close to 1.0), the forward pass shows imbalanced workload characteristics as it deviates from 1.0.

To address the performance penalties caused by workload imbalance, one potential solution is to implement binning or sharing approaches based on graph sizes. This would help ensure balanced workloads across multiple GPUs during each batch processing. However, there is a concern that this method might negatively impact the quality of training or the training losses during the optimization phase by reducing the stochastic effect, which is crucial for effective training. Given this potential trade-off, it is essential to explore and develop more sophisticated strategies to mitigate load imbalance while maintaining training quality. This will be a key focus for future research and development efforts.

4.4 Scalable HPO

The HPO process is performed using DeepHyper [80]. DeepHyper has been specifically designed to perform efficient and scalable HPO on integrated extreme-scale HPC and leadership class supercomputing facilities, and it thus suits well our purpose. Among the various hyperparameter search algorithms implemented in DeepHyper, we used the centralized Bayesian optimization search [89,90,91,92,93,94], previously named as ‘asynchronous model-based search’ (AMBS) [95]. It follows a manager–workers architecture where the manager runs the Bayesian optimization loop and workers execute parallel evaluations of the black-box function.

The hyperparameter tuning has spanned important architectural hyperparameters described in Table 4. The range of architectural hyperparameters covers regions of the hyperparameter space that allow to construct HydraGNN models of extremely diverse sizes, which include the SMALL model and the LARGE models described in Table 3 as extremes.

We ran four consecutive HPO runs of progressively increasing scale, each restarting from the output of the previous one. During each one of the four HPO runs, each HPO trial is associated with an independent ‘srun’ execution of the SLURM scheduler and occupies 128 Frontier nodes (i.e., 1,024 AMD Instinct MI250x GCDs) for distributed training using DDP. Concurrent HPO trials are executed asynchronously, and the termination of an HPO trial is immediately followed by the start of a new one on the same set of compute nodes. Throughout the entire execution of each HPO run, we used the open-source Omnistat data collection infrastructure highlighted in Sect. 4.1 to simultaneously collect GPU telemetry with measurements saved on a local Lustre PFS. Since each HPO trial is submitted as a separate job step within the global job submission, Omnistat postprocessing allows us to isolate power and energy measurements at the job step level, thereby providing an aggregate GPU energy consumed estimate for each trial independently. In order to ensure that the HPO process is performed in an energy-efficient way on OLCF-Frontier, we early stop the training of HydraGNN models for each HPO trial after 10 epochs. This number of epochs allows to early stop the HPO trials that are clearly underperforming in a timely manner, without wasteful energy consumption caused by further training epochs that would not likely improve their accuracy, while still ensuring that promising HPO trials are distinguishable and selected for the next computational tasks. This approach results in impactful energy savings. Our use of DeepHyper for asynchronous Bayesian optimization, combined with in-band telemetry monitoring and a strategic deployment of early termination strategies, showcases a significant advancement in the field, optimizing GNN training in ways that have not been documented prior to this work.

The first HPO run used 2,048 Frontier nodes in parallel, thereby allowing 16 distinct HydraGNN architectures to be concurrently trained. This first HPO run allowed us to perform a first uninformed exploration of the hyperparameter space, and construct some guidance for the consecutive HPO runs. The results of the first HPO run have been used as inputs to inform the second HPO run, which used 3,072 Frontier nodes in parallel, thereby allowing 28 distinct HydraGNN architectures to be concurrently trained. The results of the second HPO run have been used as input to inform the third HPO run, which use 4,096 Frontier nodes in parallel, thereby allowing 32 distinct HydraGNN architectures to be concurrently trained. Similarly, the output of the third HPO run has been used to successfully guide the fourth and last HPO run, which used 8,560 Frontier nodes in parallel, thereby allowing 67 distinct HydraGNN architectures to be concurrently trained. This extensive scale of the last HPO run not only tests the limits of scalability and efficiency in computational resources, but also addresses the challenges associated with the high dimensionality of the hyperparameter space that needs to be explored, ensuring that a judicious balance between exploitation and exploration is maintained.

Figures 17, 18, 19, and 20 show the validation mean absolute error (MAE) as a function of wall-clock time for each one of the four HPO runs. For each one of the four HPO runs, the scattered distribution of blue dots (corresponding to values of the validation MAE for different HPO trials) shows that the HPO maintains a good degree of exploration throughout the entire execution. The red solid line indicates the minimum validation MAE obtained at a given time during the HPO run. The fact that the red line progressively descends as time progresses confirms that HPO progressively identifies GFM architectures with better accuracy. Moreover, we notice that the HPO trials of the last run are highly concentrated around the minimum (red line) across consecutive HPO runs, thereby confirming that previous HPO runs effectively provided meaningful information to hone in narrow regions of the hyperparameter space where accurate HydraGNN models can be found.

To characterize the dynamic resource behavior of HPO as multiple trials with varying model configurations are running in parallel, telemetry data collected by Omnistat was used to track the GPU memory usage for each trial as a function of time. Figure 21 highlights these memory traces for all trials initiated during the final HPO exercise using 8,560 Frontier nodes during a 6-hour run. Each line on the plot corresponds to one of 390 trials initiated and we observe a dynamic high water mark peaking at 99.9% of available memory. The variability of memory utilization across different trials is due to the fact that different groups of GPUs (associated with different HPO trials) train HydraGNN models of different sizes, which affects the amount of GPU memory engaged at different stages of the model training.

4.5 Energy profiling

To quantify the energy usage as a function of different model sizes, three training epochs of the SMALL, MEDIUM, and LARGE model configurations listed in Table 3 were completed with the Omnistat telemetry tool sampling at one second intervals. Measurements consider the entire run duration including I/O for the initial data loading process. Each model executed using 1,024 GPUs on 128 compute nodes which is the minimum node count needed to accommodate memory requirements for the LARGE model configuration. The resulting GPU energy measurements as a function of model size are summarized in Table 5. While total energy scales with the execution time, note that GPU utilization (occupancy) also influences the energy consumed. Table 5 includes mean utilization observed across all 1,024 GPUs. The LARGE case showed the highest GPU utilization–around 89%. The underling power histories used to compute total energy consumed for each model configuration are shown in Fig. 22. From these plots, we see evidence of the underlying training process with three epoch cycles visible in the power response. Furthermore, the increased GPU utilization for the larger models leads to increased GPU power demand with the LARGE model encountering peak power measurements in excess of 520 watts (W). (The peak TDP power for the AMD MI250 socket is 560W.)

Figure 23 shows a scatterplot of the GPU energy consumption collected using Omnistat telemetry for each HPO trial against the number of parameters in the models, which clearly shows a linear trend between the two quantities.

The energy analysis presented herein is focused on using the coarse–grain energy profiling infrastructure of Omnistat to monitor individual and system-wide GPU power consumption for all the various model configurations considered during HPO. While the data loading stage into host memory does incur non-trivial energy usage, we consider this an up-front fixed cost, and once HPO model optimization begins, the majority of energy use and almost all of the variability are related to model training execution on GPUs for which energy usage is influenced by a number of issues: model size and type, number of GPUs participating, GPU occupancy (utilization), GPU clock frequency, and the time to complete 10 epochs. We chose Omnistat to monitor GPU telemetry for each HPO trial because it provides a non-intrusive, low-overhead mechanism to track occupancy, memory usage, clock frequencies, and power traces separately without the need to modify the HydraGNN application code at all. As a result, we gained a holistic view of the power consumption per GPU socket as a function of time during each HPO trial (e.g., results highlighted in Fig. 22). The main benefit of quantifying coarse–grain energy consumption came after HPO was completed. Energy usage data from a few hundred HPO trials helped prune the model search space and select promising energy-efficient and highly accurate trials for full pre-training of GFMs as described in Sect. 4.6 (e.g., Figures 24 and 25).

4.6 Energy-efficient full training of best performing HydraGNN models identified by scalable HPO

To ensure trustworthiness of our GFM, we refined the pre-training of an ensemble of fifteen HPO trials selected from the four consecutive HPO runs and use them for the ensemble UQ. To this end, we sorted the HPO trials for increasing values of the validation MAE. The results showed that four HPO trials were outperforming all the other in terms of accuracy, with a validation MAE below 0.10. The HydraGNN architectures associated with these HPO trials have been identified as worth being further trained and thus included in the ensemble for UQ as first tier. For the selection of the remaining eleven HPO trials to be included in the ensemble, we noticed that thirty-two HPO trials were clustered within a second tier with a narrow range of the validation MAE between 0.10 and 0.125. Although these models were very close to each other in terms of accuracy, they were quite different in terms of energy consumed during the HPO run. Therefore, to ensure energy efficiency, we applied a second screening of the HPO trials based on energy consumption. To this end, we performed local sorting restricted only among these thirty-two HPO trials based on increasing amount of energy consumed. The eleven models with the lowest energy consumption have been selected and included in the ensemble for UQ as second tier. Figure 24 shows the scatterplot of the energy consumption against the validation MAE for each HPO trial executed during the four consecutive HPO runs. The four HPO trials belonging to the first tier (in red) sit in the left most part of the plot with lowest validation MAE, whereas the eleven HPO trials of the second tier (in pink) sit along the Pareto front of energy consumption vs. validation MAE. The plot also clearly shows that all the HPO trials selected (first and second tier) sit on the side of the Pareto front that partially favors preserving optimal accuracy over energy efficiency.

All fifteen selected HPO trials selected use the EGNN as MPNN layer. Among the different types of MPNN layers tested, the EGNN is an equivariant model. Since equivariant models are supposed to be more data-efficient than non-equivariant models by taking full advantage of symmetries to achieve the maximal accuracy with the minimal requirement on the data, the fact that the automated HPO favors EGNN seems reasonable.

The fifteen selected HydraGNN models have been fully trained for a maximum of 30 epochs to reach convergence of the training, with the option of early stopping if validation MAE does not decrease across ten consecutive epochs and with the option of saving the last performed epochs if the wall-clock allocation time is about to expire. We report the trend of the training loss for all fifteen models in Fig. 25. The training loss flattens at the end of the training history, indicating that the models have reached their maximum predictive capacity. Moreover, the models seem to reach similar final accuracy, thereby confirming that the HPO has indeed thoroughly spanned the hyperparameter space and identified HydraGNN models with similar predictive performance. We then used the ensemble of the fully pre-trained fifteen GFMs to provide ensemble predictions with epistemic uncertainty measurement, as described in Sect. 4.7.

4.7 Ensemble predictions and epistemic UQ on ensemble of pre-trained GFMs

UQ is essential for the trustworthiness of GFMs. Although the scientific community has not reached a consensus yet on a standardized definition of trustworthiness, our definition of trustworthiness treats uncertainty and accuracy as the most critical metrics, because we aim to achieve a correlation between model confidence and prediction accuracy, namely high confidence in accurate predictions and low confidence in inaccurate ones. We calculate the uncertainties of the ensemble predictions in pre-training using the fifteen models from Sect. 4.6. Table 6 provides the MAE and the root mean square error (RMSE) of the ensemble predictions of energy and forces on the training–validation–testing splits of each dataset. Given the limited number of training epochs used for the pre-training of the GFM ensemble, the accuracy achieved is very promising and indicative that the GFMs are indeed learning the underlying chemical principles that describe interactions between atoms of different constituents. Figure 26 presents the parity plot of ensemble averaged predictions for 20,480 atomistic structures from the test split, showing that our ensemble models have learned the generic information from the diverse pre-training data. While the accuracy is not as high as that reported in task-specific AI work, our pre-trained GFMs are expected to stabilize zero-shot predictions via ensemble averaging and reduce the fine-tuning efforts across a broad set of domain-specific tasks.

Figure 27 shows the histogram of epistemic uncertainty (left column) of the corresponding energy and force predictions in pre-training, calculated on 4,096 atomistic structure from the test split of each dataset (in total, 20,480 structures). The uncertainty \(\sigma _{\tilde{y}}\) is defined as the STD of ensemble predictions in Equation (7), which is commonly used but biased toward large true values and may not accurately reflect actual prediction quality. We also plot the relative uncertainty to data STD (right column) in Fig. 27. Three datasets are observed to yield higher relative uncertainties—ANI1x, qm7x, and MPTrj—due to two factors. First, datasets ANI1x and qm7x each contain approximately 4-5 million organic compounds, in contrast to the other datasets, which include more than 145 million inorganic compounds. Second, dataset MPTrj includes higher-fidelity solutions but is much smaller in size compared to the others. Some of the atomistic structures from the datasets qm7x and ANI1x are associated with significantly inaccurate predictions of atomic forces, which correspond to the data samples sitting on the horizontal point cloud in the parity plot for the prediction of atomic forces. Since the ensemble of GFMs correctly associate high uncertainty to atomistic structures whose predictions of atomic forces are notably inaccurate, this corroborates the fact that our GFMs are self-aware of their own regime of trustworthiness, which is an important indicator of robustness.

This uncertainty information—namely varying uncertainty values stemming from imbalanced representation in training data—not only provides a confidence measure of our GFM predictions but also guides data collection for further model development in an active learning setting.

Parity plot of ensemble predictions (using fifteen pre-trained models from Sect. 4.6) of energy and atomic forces for 20,480 atomistic structures from the test split in pre-training. The red diagonal line represents the perfect model prediction. We present the parity plots for each dataset separately in the supplementary material

Histogram of epistemic uncertainty \(\sigma _{\tilde{y}}\) (left column) and relative uncertainty \(\sigma _{\tilde{y}}/\sigma _{D}\) (right column) (with \(\sigma _{D}\) being the data STD for each dataset and being summarized in the supplementary material) in energy and force predictions for each dataset used in pre-training

5 Conclusions and future work

In this work, we described our approach toward developing and training trustworthy and energy-efficient predictive GFMs by scaling the HydraGNN architecture on over 154 million of atomistic materials modeling data using two DOE leadership class supercomputers, viz. NERSC-Perlmutter and OLCF-Frontier. We discussed optimizations and tools used for developing a GFM and running HPO at large scale.

We used distributed data management capabilities to partition large volumes of data across distributed computing resources and efficiently exchange data samples across devices using low-latency communication methods. This helped preserve global data shuffling, which is crucial for maintaining good convergence of the GFM training. By scaling HPO on over 91% of the exascale OLCF-Frontier supercomputer, we have assessed the importance of thoroughly exploring a large set of hyperparameter configurations to identify HydraGNN architectures with high predictive accuracy. The use of Omnistat tools at extreme scale allowed us to conduct a thorough analysis to select HydraGNN architectures with high predictive accuracy and low energy cost. Moreover, access to exceptionally performing large-scale computing facilities allowed us to develop and test ensemble UQ capabilities to measure the degree of confidence associated with the HydraGNN predictions, which contributes to achieving trustworthiness. Performing HPO and ensemble UQ at unprecedented scale on supercomputing facilities confirms the computational readiness of HydraGNN to develop trustworthy and energy-efficient GFMs to support AI-accelerated materials discovery and design.

Future work will be devoted to improving the scaling performance of the GFM pre-training on thousands of GPUs by exploring and developing more sophisticated strategies to mitigate load imbalance between GPUs, while maintaining high quality in the attainable trustworthiness of the pre-trained GFMs. We also plan to apply techniques to read the metadata in parallel to improve the overall bandwidth of reading large volume of datasets on supercomputers. Once the GFMs are pre-trained, we will deploy them to downstream tasks, where we will illustrate the efficacy of our GFMs in reducing the amount of training data and computational resources needed to develop robust and transferable DL models for domain-specific applications. More specifically, we will focus on two types of downstream tasks: (1) tasks where the GFM needs to be used for predictions of atomization energy and atomic forces for new atomistic structures (e.g., to develop ML force fields to accelerate molecular dynamics simulations), and (2) tasks where the GFM needs to be used for predictions of other material properties (e.g., band gap for semiconductors, HOMO–LUMO gap for organic molecules, vibrational spectra of organic and inorganic compounds). For (1), either the pre-trained GFM provides already sufficiently accurate predictions, or the pre-trained GFM needs to be fine-tuned with a small amount of application-specific data. For (2), we will remove the heads of the HydraGNN architectures used during the pre-training and replace them with new head(s) for fine-tuning the GFMs on the new quantities of interest, while freezing the parameters of the shared message passing layers.

Data Availability

The set of ADIOS files that contain the preprocessed data and the ensemble of pre-trained GFMs that can be used for zero-shot inference and fine-tuning on downstream tasks are available open-source on the OLCF Data Constellation Facility [96]. Python scripts that illustrate how to load the pre-trained GFMs and use them for inference are available on HuggingFace at the following link: https://huggingface.co/mlupopa/HydraGNN_Predictive_GFM_2024/tree/main

Code availability

The code used to develop, pre-train, and use the pre-trained GFMs is available at the following branch https://github.com/ORNL/HydraGNN/tree/Predictive_GFM_2024 of the ORNL GitHub repository for HydraGNN. More specifically, the scripts used to preprocess the data, generate the ADIOS files, run HPO, and continue the pre-training are available in the directory called examples/multidataset_hpo. The scripts that use the pre-trained ensemble of GFMs for ensemble averaging and epistemic UQ are available in the directory called examples/ensemble_learning.

References

US Department of Energy - Frontiers in energy research newsletter scientific playgrounds accelerating clean energy research. https://www.energyfrontier.us/content/scientific-playgrounds-accelerating-clean-energy-research

Balabin RM, Lomakina I (2009) Neural network approach to quantum-chemistry data: accurate prediction of density functional theory energies. J Chem Phys 131(7):074104. https://doi.org/10.1063/1.3206326

Chandrasekaran A, Deepak K, Batra E, Kim C, Chen L, Ramprasad R (2019) Solving the electronic structure problem with machine learning. NPJ Comput Mater. https://doi.org/10.1038/s41524-019-0162-7

Brockherde F, Vogt L, Tuckerman ME, Burke K, Müller KR (2017) Bypassing the Kohn-Sham equations with machine learning. Nat Commun. https://doi.org/10.1038/s41467-017-00839-3

Sinitskiy AV, Pande VS Deep neural network computes electron densities and energies of a large set of organic molecules faster than density functional theory (DFT). arXiv:1809.02723. https://doi.org/10.48550/arXiv.1809.02723

Custódio CA, Filletti ER, França VV (2019) Artificial neural networks for density-functional optimizations in fermionic systems. Sci Report. https://doi.org/10.1038/s41598-018-38345-3

Schleder GR, Padhila ACM, Acosta CM, Costa M, Fazzio A (2019) From DFT to machine learning: recent approaches to materials science-a review. J Phys Mater. https://doi.org/10.1088/2515-7639/ab084b

Vasudevan RK, Choudhary K, Mehta A, Smith R, Kusne G, Tavazza F, Vlcek L, Ziatdinov M, Kalinin SV, Hattrick-Simpers J (2019) Materials science in the AI age: high-throughput library generation, machine learning and a pathway from correlations to the underpinning physics. MRS Commun 9(3):10–1557201995. https://doi.org/10.1557/mrc.2019.95

Maguire J, Benedict M, Woodcock L, LeClair S (2001) Artificial intelligence in materials science: application to molecular and particulate simulations. MRS Commun. https://doi.org/10.1557/PROC-700-S8.1

Wang C, Tharval A, Kitchin JR (2018) A density functional theory parameterised neural network model of zirconia. Mol Simul 44(8):623–630. https://doi.org/10.1080/08927022.2017.1420185

Lupo Pasini M, Li YW, Yin J, Zhang J, Barros K, Eisenbach M (2020) Fast and stable deep-learning predictions of material properties for solid solution alloys. J Phys Condens Matter 33(8):084005. https://doi.org/10.1088/1361-648X/abcb10

Park CW, Kornbluth M, Vandermause J, Wolverton C, Kozinsky B, Mailoa JP (2021) Accurate and scalable graph neural network force field and molecular dynamics with direct force architecture. NPJ Comput. Mater. 7:73. https://doi.org/10.1038/s41524-021-00543-3

Axelrod S, Schwalbe-Koda D, Mohapatra S, Damewood J, Greenman KP, Gómez-Bombarelli R (2022) Learning matter: materials design with machine learning and atomistic simulations. Acc Mater Res 3:343–357. https://doi.org/10.1021/accountsmr.1c00238